Hi all,

I am currently working with some nested JSON files and attempting to load it from the blob container to azure sql db using azure data factory.

All of the JSON files have different structures as the fields and columns vary in each one, which is why I thought it would be best to use a flexible staging table in Azure SQL db to store each JSON file's content as raw JSON.

At the moment, I have a pipeline set up with a get metadata activity, linked to a for each activity. Inside the for each activity, I have a lookup activity to read all the file contents then a stored procedure activity.

The above method worked, but only for the smaller files as most of the JSON files I have exceeds the limit for the lookup activity.

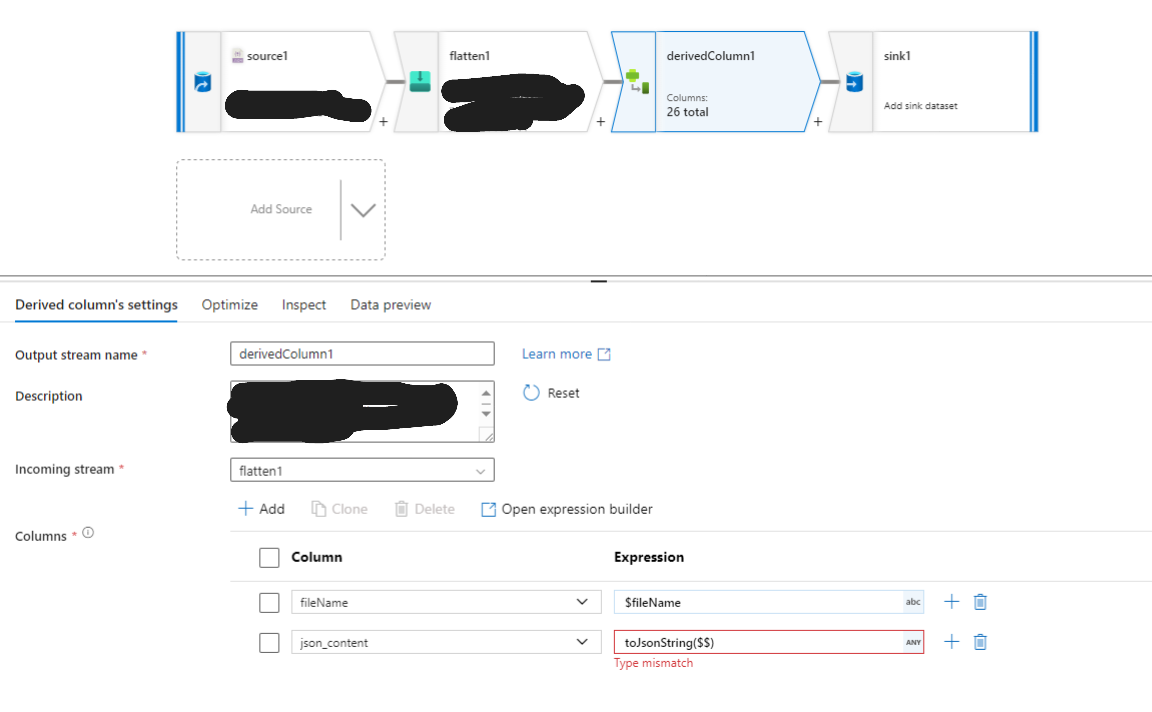

I have tried different methods such as the copy data activity and also a data flow so that I don't run into the size limit of the lookup activity, but I am facing errors when trying to dynamically map the file name and dynamically pass the JSON file content.

Please can someone advise what the best practice is to load multiple large nested JSON files with different structures.

Thank you!