Hi @정 근오

Welcome to Microsoft Q&A Forum. Thanks for posting your query here!

To upload a large amount of data (over 50 GB) efficiently to an Azure Storage container, consider using AzCopy; see Upload files to Azure Blob storage by using AzCopy. AzCopy is a command-line tool that copies data to and from Azure Blobs, Files, and Table storage with optimal performance, and it works from on-premises or cloud sources. It supports concurrency and parallelism and can resume copy operations that were interrupted, which makes it well suited to uploading and downloading large files.
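As a rough illustration, the helper below assembles the AzCopy commands for a recursive upload and for resuming an interrupted job. It is a minimal sketch, assuming AzCopy v10 is installed and on PATH and that you authorize with a container URL that includes a SAS token. The local path, URL, and helper names are hypothetical. The `AZCOPY_CONCURRENCY_VALUE` environment variable is AzCopy's knob for the number of concurrent requests, and by default the sketch only builds the command without running it.

```python
import os
import subprocess
from typing import Dict, List, Optional


def build_azcopy_upload(source_dir: str, container_sas_url: str) -> List[str]:
    # "azcopy copy <source> <destination> --recursive" uploads every file under
    # source_dir into the container, keeping the relative folder layout.
    return ["azcopy", "copy", source_dir, container_sas_url, "--recursive"]


def build_azcopy_resume(job_id: str, destination_sas: Optional[str] = None) -> List[str]:
    # An interrupted job can be resumed by its ID ("azcopy jobs list" shows IDs).
    # SAS tokens are not stored with the job, so a SAS-authorized destination
    # may need its token supplied again on resume.
    argv = ["azcopy", "jobs", "resume", job_id]
    if destination_sas:
        argv += ["--destination-sas", destination_sas]
    return argv


def run_azcopy(argv: List[str], concurrency: Optional[int] = None,
               dry_run: bool = True) -> Dict[str, str]:
    # Copy the current environment so the caller's settings are left untouched.
    env = dict(os.environ)
    if concurrency is not None:
        # Tells AzCopy how many concurrent requests to issue.
        env["AZCOPY_CONCURRENCY_VALUE"] = str(concurrency)
    if not dry_run:
        # check=True raises CalledProcessError if AzCopy exits with an error.
        subprocess.run(argv, env=env, check=True)
    return env
```

For example, `run_azcopy(build_azcopy_upload("./data", "<container URL with SAS>"), concurrency=32, dry_run=False)` would start the upload. If it is interrupted, you can rerun with `build_azcopy_resume("<job ID>")` instead of starting over.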

If drag-and-drop uploads fail or stall, consider a bulk copy program such as Robocopy (see the Robocopy guide) or rsync. These tools are recommended for transferring large files because they add resiliency and can retry operations after intermittent errors.
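For reference, the sketch below builds typical Robocopy and rsync command lines for copying into a mounted Azure file share. It assumes the share is already mounted (a drive letter on Windows or a mount point on Linux). The paths and the thread, retry, and wait values are illustrative placeholders, not tuned recommendations. It also encodes Robocopy's exit-code convention, where codes below 8 mean success.

```python
from typing import List


def robocopy_argv(src: str, dst: str, threads: int = 16,
                  retries: int = 5, wait_s: int = 10) -> List[str]:
    # /E    copy subdirectories, including empty ones
    # /Z    restartable mode, so an interrupted file can continue
    # /MT:n copy with n threads
    # /R:n  retry a failed copy n times; /W:n wait n seconds between retries
    return ["robocopy", src, dst, "/E", "/Z",
            f"/MT:{threads}", f"/R:{retries}", f"/W:{wait_s}"]


def robocopy_succeeded(exit_code: int) -> bool:
    # Robocopy uses exit codes 0-7 for success (with different meanings);
    # 8 and above indicate that at least one copy failed.
    return exit_code < 8


def rsync_argv(src: str, dst: str) -> List[str]:
    # -a         archive mode: recursive, preserves permissions and times
    # --partial  keep partially transferred files so a rerun can continue them
    # --progress show per-file progress
    return ["rsync", "-a", "--partial", "--progress", src, dst]
```

Robocopy's exit-code convention matters if you script it. A plain "nonzero means failure" check would wrongly flag successful runs that copied files (exit code 1).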

To upload large files to a file share or to Blob Storage from your own code, you can also use the Azure Storage Data Movement Library.

Choose an Azure solution for data transfer: this article gives an overview of common Azure data transfer solutions and links to recommended options based on your network bandwidth and the size of the data you plan to transfer.

If network constraints make uploading data over the internet impractical, you can use Azure Data Box devices to transfer large datasets. You can copy your data to these devices and then ship them back to Microsoft for upload into Blob Storage.

For an example of maximizing throughput with concurrent uploads, see the tutorial Upload large amounts of random data in parallel to Azure storage.
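To show what a parallel upload of one large file involves, here is a minimal sketch of the block blob pattern that parallel upload tools generally rely on. The file is split into fixed-size blocks, and each block gets a base64 block ID of equal length. The blocks are staged concurrently, and then the ordered list of IDs is committed. The `stage_block` and `commit_block_list` callables are hypothetical stand-ins for the real storage calls; in the Python SDK, `BlobClient.stage_block` and `BlobClient.commit_block_list` play roughly these roles. Blocks are read in batches so that memory use stays at about `max_workers * block_size`.

```python
import base64
from concurrent.futures import ThreadPoolExecutor
from typing import BinaryIO, Callable, List


def make_block_id(index: int) -> str:
    # Block IDs must be base64 strings of the same length within one blob,
    # so the index is zero-padded before encoding.
    return base64.b64encode(f"{index:08d}".encode()).decode()


def parallel_block_upload(stream: BinaryIO, block_size: int,
                          stage_block: Callable[[str, bytes], None],
                          commit_block_list: Callable[[List[str]], None],
                          max_workers: int = 8) -> List[str]:
    block_ids: List[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while True:
            # Read up to max_workers blocks, bounding how much data is in memory.
            batch = []
            for _ in range(max_workers):
                chunk = stream.read(block_size)
                if not chunk:
                    break
                block_id = make_block_id(len(block_ids))
                block_ids.append(block_id)
                batch.append((block_id, chunk))
            if not batch:
                break
            # Stage the batch concurrently; list() waits and re-raises any error.
            list(pool.map(lambda item: stage_block(*item), batch))
            if len(batch) < max_workers:
                break  # a short batch means the stream is exhausted
    # Committing the ordered ID list assembles the blob from the staged blocks.
    commit_block_list(block_ids)
    return block_ids
```

Azure allows at most 50,000 committed blocks per blob, so for very large files `block_size` has to be large enough to stay under that limit.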

I hope this helps resolve your problem. If the issue persists, feel free to post a follow-up here on Microsoft Q&A, and we will be glad to assist you further.

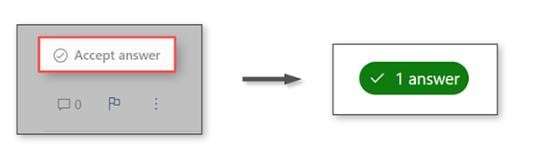

Please consider clicking “Accept the answer” and “Up-vote” wherever the information provided helps you; this can benefit other community members.