Hi Brunda Yelakala (INFOSYS LIMITED),

Thanks for reaching out to Microsoft Q&A.

You can read custom config values in a Spark notebook as shown below.

Step 1: Add your key-value pairs to a config.txt file, one property per line, with the key and value separated by a space:

spark.executorEnv.environmentName ppe

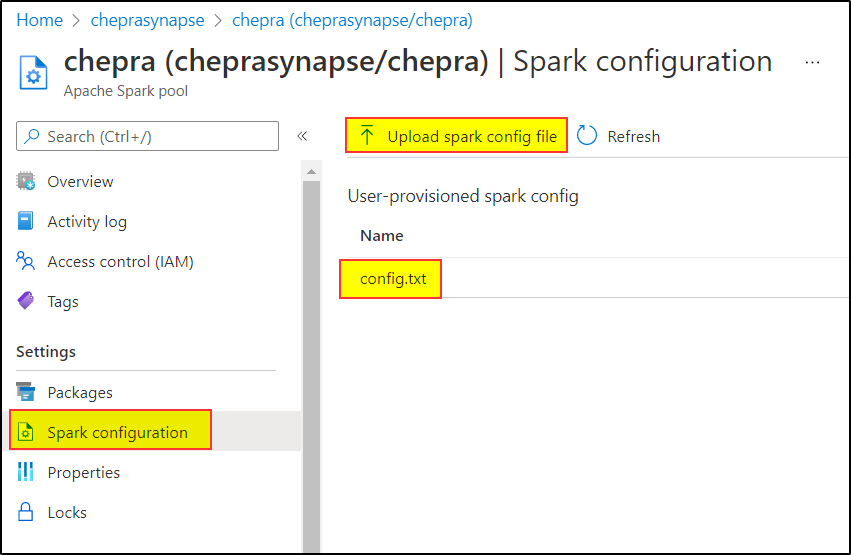

Step 2: Upload the config file to your Spark pool.

Step 3: You can then access the value inside your notebooks. The `spark.executorEnv.` prefix is dropped, so the key to look up is `environmentName`:

envName: str = spark.sparkContext.environment.get('environmentName', 'get')
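
To make the mapping between the config file and the notebook lookup easier to see, here is a minimal Python sketch that runs without a Spark session. It assumes that properties prefixed with `spark.executorEnv.` are exposed under the name that follows the prefix, which is what the lookup in Step 3 relies on. The helper `parse_executor_env`, the sample text, and the `"unknown"` fallback are hypothetical and only for illustration; they are not part of Spark or Synapse.

```python
from typing import Dict

# Prefix used in config.txt for values that should reach the executor environment.
EXECUTOR_ENV_PREFIX = "spark.executorEnv."


def parse_executor_env(config_text: str) -> Dict[str, str]:
    """Collect `spark.executorEnv.*` entries from whitespace-separated config lines.

    Hypothetical helper: it only mimics how the prefix is stripped, so the
    result can be compared with what the notebook lookup returns.
    """
    env: Dict[str, str] = {}
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        # Split into key and value on the first run of whitespace.
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        key, value = parts
        if key.startswith(EXECUTOR_ENV_PREFIX):
            env[key[len(EXECUTOR_ENV_PREFIX):]] = value.strip()
    return env


# Same line as in Step 1 (assumed file content).
sample_config = "spark.executorEnv.environmentName ppe\n"

env = parse_executor_env(sample_config)
# Look up by the name that remains after the prefix is stripped.
env_name = env.get("environmentName", "unknown")
print(env_name)
```

If the notebook returns the fallback value instead of the one you configured, a quick check like this on the uploaded file's contents can help confirm that the key is spelled with the `spark.executorEnv.` prefix.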

Please let us know if this helps.

Please do not forget to "Accept the answer" and "up-vote" wherever the information provided helps you; this can be beneficial to other community members.