Background

Synapse Apache Spark configurations allow users to create a blueprint for a Spark configuration that customizes Spark's behavior. This blueprint can be assigned to a Spark Pool (where it becomes the new default for new Notebook sessions), or it can be selected when running a particular Notebook.

Goal

I'd like to customize the Spark config and set spark.executor.memoryOverheadFactor=0.1, but at the same time unset/remove the config spark.executor.memoryOverhead, which is set by Microsoft by default.

spark.executor.memoryOverheadFactor only has an effect if spark.executor.memoryOverhead is not set explicitly.
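To make that interaction concrete, here is a minimal sketch of how the effective overhead is resolved. It assumes the default described in the Spark documentation (executor memory times the factor, with a minimum of 384 MiB). The function name and parameters are hypothetical and only illustrate the rule; this is not Spark's or Synapse's actual code.

```python
from typing import Optional

MIN_OVERHEAD_MIB = 384  # minimum applied when the overhead is derived from the factor


def effective_overhead_mib(executor_memory_mib: int,
                           overhead_factor: float = 0.1,
                           explicit_overhead_mib: Optional[int] = None) -> int:
    """Illustrative only: resolve the executor memory overhead in MiB."""
    # An explicitly set spark.executor.memoryOverhead always wins,
    # so the factor is ignored entirely in that case.
    if explicit_overhead_mib is not None:
        return explicit_overhead_mib
    # Otherwise the overhead is derived from the factor, with a floor.
    return max(int(executor_memory_mib * overhead_factor), MIN_OVERHEAD_MIB)
```

With a 28 GiB executor (28672 MiB), effective_overhead_mib(28672, 0.1) gives 2867, while passing explicit_overhead_mib=384 gives 384 regardless of the factor. That is why the explicit default has to be removed, not just overridden.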

What I tried so far

1. Using the Synapse Web Studio: leaving the value empty --> validation error 🛑

2. Using the Synapse Web Studio: setting the value to 0 --> value in Session is actually set to 0 🛑

{
  "name": "sc_user_mbe_memory",
  "properties": {
    "configs": {
      "spark.executor.memoryOverhead": "0",
      "spark.executor.memoryOverheadFactor": "0.1"
    },
    "created": "2025-03-08T12:23:10.3620000+01:00",
    "annotations": [],
    "configMergeRule": {
      "artifact.currentOperation.spark.executor.memoryOverhead": "replace",
      "artifact.currentOperation.spark.executor.memoryOverheadFactor": "replace"
    }
  }
}

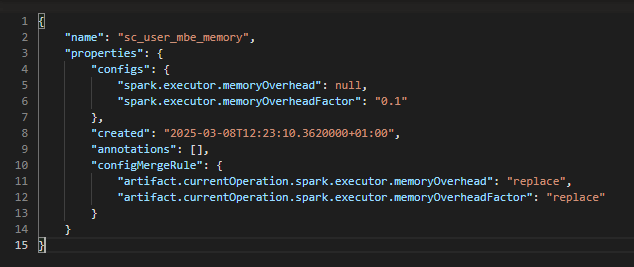

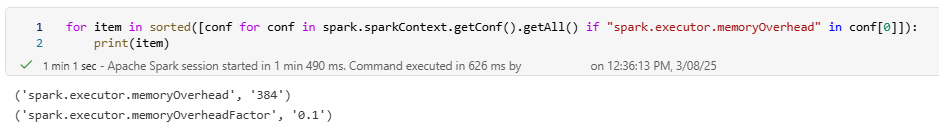

3. Editing the JSON representation of the config in Git and setting the value to null --> value in Session is actually set to 384 (Microsoft's default) 🛑

{
  "name": "sc_user_mbe_memory",
  "properties": {
    "configs": {
      "spark.executor.memoryOverhead": null,
      "spark.executor.memoryOverheadFactor": "0.1"
    },
    "created": "2025-03-08T12:23:10.3620000+01:00",
    "annotations": [],
    "configMergeRule": {
      "artifact.currentOperation.spark.executor.memoryOverhead": "replace",
      "artifact.currentOperation.spark.executor.memoryOverheadFactor": "replace"
    }
  }
}

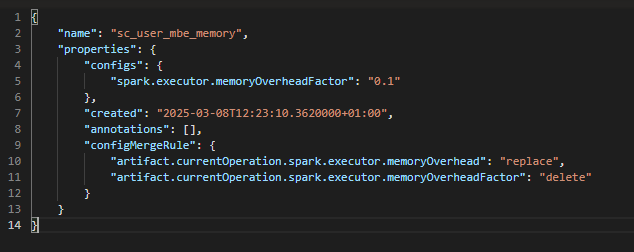

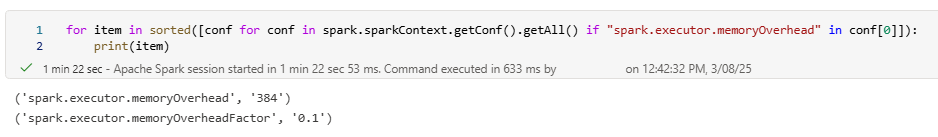

4. Editing the JSON representation of the config in Git and setting configMergeRule to delete --> value in Session is actually set to 384 (Microsoft's default) 🛑

{
  "name": "sc_user_mbe_memory",
  "properties": {
    "configs": {
      "spark.executor.memoryOverheadFactor": "0.1"
    },
    "created": "2025-03-08T12:23:10.3620000+01:00",
    "annotations": [],
    "configMergeRule": {
      "artifact.currentOperation.spark.executor.memoryOverhead": "replace",
      "artifact.currentOperation.spark.executor.memoryOverheadFactor": "delete"
    }
  }
}

Additional thoughts

In a running session, configs can generally be unset with spark.conf.unset("spark.executor.memoryOverhead"). However, since this config affects the hardware/memory allocation, it can only be set before or during session start. Trying to unset it from a running session fails with CANNOT_MODIFY_CONFIG. Therefore this is not a feasible workaround.
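For reference, this is roughly how the failed runtime attempt can be reproduced. It is a minimal sketch: try_unset is a hypothetical helper, and it only assumes that the session object exposes conf.unset (as a PySpark SparkSession does) and that the error message contains the CANNOT_MODIFY_CONFIG error class mentioned above.

```python
def try_unset(spark, key: str) -> bool:
    """Try to unset `key` at runtime; return False if Spark rejects the change."""
    try:
        spark.conf.unset(key)
        return True
    except Exception as exc:
        # Match on the error class in the message so that no
        # version-specific exception import is needed here.
        if "CANNOT_MODIFY_CONFIG" in str(exc):
            return False
        raise  # anything else is unexpected and should surface
```

In my notebook session, try_unset(spark, "spark.executor.memoryOverhead") would return False, which confirms that the value has to be removed before the session starts.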