Build a basic chat app in Python using Azure AI Foundry SDK

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

In this quickstart, we walk you through setting up your local development environment with the Azure AI Foundry SDK. We write a prompt, run it as part of your app code, trace the LLM calls being made, and run a basic evaluation on the outputs of the LLM.

Prerequisites

- Before you can follow this quickstart, complete the Azure AI Foundry playground quickstart to deploy a gpt-4o-mini model into a project.

Install the Azure CLI and sign in

Install the Azure CLI and sign in from your local development environment so that you can use your user credentials to call the Azure OpenAI service.

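In most cases, you can install the Azure CLI from your terminal with one of the following commands.

On Windows:

winget install -e --id Microsoft.AzureCLI

On Linux (Debian or Ubuntu):

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

On macOS:

brew update && brew install azure-cli

If these commands don't work for your operating system or setup, follow the instructions in How to install the Azure CLI.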

After you install the Azure CLI, sign in with the az login command, which opens a browser window for authentication:

az login

Alternatively, sign in with a device code that you enter manually in a browser:

az login --use-device-code

Create a new Python environment

First, create a new Python environment in which to install the packages you need for this tutorial. Don't install packages into your global Python installation. Always use a virtual or conda environment when installing Python packages; otherwise, you can break your global Python installation.

If needed, install Python

We recommend Python 3.10 or later; Python 3.8 is the minimum required. If you don't have a suitable version of Python installed, follow the instructions in the VS Code Python Tutorial for the easiest way to install Python on your operating system.
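If you aren't sure which version you have, a small check like the following classifies the running interpreter against the 3.8 minimum and the 3.10 recommendation stated above. It's a minimal sketch: the function name check_python_version and its return labels are hypothetical, not part of any SDK.

```python
import sys

# Version floor and recommendation taken from the quickstart text.
MINIMUM = (3, 8)
RECOMMENDED = (3, 10)


def check_python_version(version=None) -> str:
    """Classify a (major, minor) tuple; defaults to the running interpreter."""
    if version is None:
        version = tuple(sys.version_info[:2])
    if version < MINIMUM:
        return "unsupported"
    if version < RECOMMENDED:
        return "supported"
    return "recommended"


if __name__ == "__main__":
    status = check_python_version()
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}: {status}")
```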

Create a virtual environment

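If you already have Python 3.10 or higher installed, create and activate a virtual environment with the following commands.

On Windows:

py -3 -m venv .venv
.venv\scripts\activate

On Linux or macOS:

python3 -m venv .venv
source .venv/bin/activate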

Activating the Python environment means that when you run python or pip from the command line, you then use the Python interpreter contained in the .venv folder of your application.
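To confirm that the interpreter you're running comes from .venv, you can compare sys.prefix with sys.base_prefix: inside a venv they differ, because sys.prefix points at the environment folder while sys.base_prefix still points at the base installation. This is a minimal sketch with a hypothetical helper name; it detects venv/virtualenv environments only, since a conda environment typically reports the same value for both.

```python
import sys


def in_virtual_env() -> bool:
    """True when running inside a venv/virtualenv (sys.prefix != sys.base_prefix)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Running inside a virtual environment:", in_virtual_env())
    print("Interpreter path:", sys.executable)
```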

Note

Run the deactivate command to exit the Python virtual environment. You can reactivate it later when needed.

Install packages

Install the azure-ai-projects (preview), azure-ai-inference (preview), and azure-identity packages:

pip install azure-ai-projects azure-ai-inference azure-identity
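After installation, you can confirm that all three packages resolved in the active environment by asking importlib.metadata (standard library, Python 3.8+) for their installed versions. This is a minimal sketch; installed_versions is a hypothetical helper, and a None value means the package isn't installed in the current environment.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names from the pip install command above.
REQUIRED = ["azure-ai-projects", "azure-ai-inference", "azure-identity"]


def installed_versions(packages=REQUIRED):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```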

Build your chat app

Create a file named chat.py. Copy and paste the following code into it.

from azure.ai.projects import AIProjectClient
from azure.identity import DefaultAzureCredential

project_connection_string = "<your-connection-string-goes-here>"

project = AIProjectClient.from_connection_string(
    conn_str=project_connection_string, credential=DefaultAzureCredential()
)

chat = project.inference.get_chat_completions_client()
response = chat.complete(
    model="gpt-4o-mini",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant that speaks like a techno punk rocker from 2350. Be cool but not too cool. Ya dig?",
        },
        {"role": "user", "content": "Hey, can you help me with my taxes? I'm a freelancer."},
    ],
)

print(response.choices[0].message.content)

Insert your connection string

Your project connection string is required to call the Azure OpenAI service from your code.

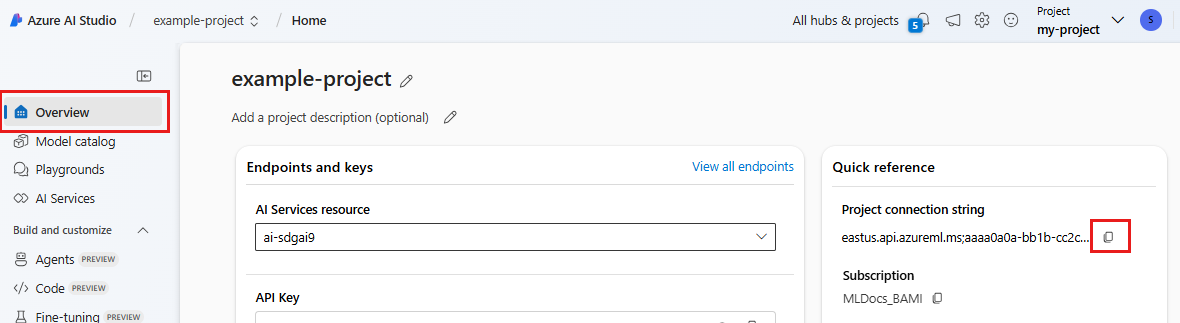

Find your connection string in the Azure AI Foundry project you created in the Azure AI Foundry playground quickstart. Open the project, then find the connection string on the Overview page.

Copy the connection string and replace <your-connection-string-goes-here> in the chat.py file.
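Hardcoding the connection string works for a quickstart, but it's easy to commit by accident. One common alternative, sketched here under assumptions, is to read it from an environment variable. The variable name PROJECT_CONNECTION_STRING and the helper get_connection_string are hypothetical choices, not names the SDK looks for.

```python
import os

# Hypothetical variable name; pick whatever fits your deployment.
CONNECTION_STRING_VAR = "PROJECT_CONNECTION_STRING"


def get_connection_string(var_name: str = CONNECTION_STRING_VAR) -> str:
    """Return the project connection string from the environment, or fail loudly."""
    value = os.environ.get(var_name, "").strip()
    if not value:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your project connection string."
        )
    return value
```

With this helper in chat.py, you would assign project_connection_string = get_connection_string() instead of the literal string, after setting the variable in your shell (for example, export PROJECT_CONNECTION_STRING="..." on Linux or macOS).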

Run your chat script

Run the script to see the response from the model.

python chat.py

Generate prompt from user input and a prompt template

The script uses hardcoded system and user messages. In a real app, you'd take input from a client application, generate a system message with internal instructions to the model, and then call the LLM with all of the messages.
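The message list is ordered: system instructions come first, followed by any earlier conversation turns, and then the newest user input. The following is a minimal sketch of that assembly, assuming the same role/content dictionaries that chat.py passes to chat.complete; build_messages is a hypothetical helper name.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_messages(
    system_text: str,
    user_input: str,
    history: Optional[List[Message]] = None,
) -> List[Message]:
    """System instructions first, then prior turns, then the new user turn."""
    messages: List[Message] = [{"role": "system", "content": system_text}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_input})
    return messages


if __name__ == "__main__":
    for m in build_messages("Be concise.", "What's a 1099?"):
        print(m["role"], "->", m["content"])
```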

Let's change the script to take input from a client application and generate a system message using a prompt template.

Remove the last line of the script, which prints the response.

Now define a get_chat_response function that takes messages and context, generates a system message using a prompt template, and calls a model. Add this code to your existing chat.py file:

from azure.ai.inference.prompts import PromptTemplate

def get_chat_response(messages, context):
    # create a prompt template from an inline string (using mustache syntax)
    prompt_template = PromptTemplate.from_string(
        prompt_template="""
        system:
        You are an AI assistant that speaks like a techno punk rocker from 2350. Be cool but not too cool. Ya dig?
        Refer to the user by their first name, try to work their last name into a pun.

        The user's first name is {{first_name}} and their last name is {{last_name}}.
        """
    )

    # generate system message from the template, passing in the context as variables
    system_message = prompt_template.create_messages(data=context)

    # add the prompt messages to the user messages
    return chat.complete(
        model="gpt-4o-mini",
        messages=system_message + messages,
        temperature=1,
        frequency_penalty=0.5,
        presence_penalty=0.5,
    )

Note

The prompt template uses mustache format.
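Mustache placeholders are written as {{name}} and are replaced with values from the data you supply, here the context dictionary. The following is a deliberately simplified sketch of that substitution, not the azure-ai-inference implementation: render_mustache is hypothetical, and it ignores mustache features such as sections, partials, and HTML escaping.

```python
import re
from typing import Any, Mapping

# Matches {{name}} or {{ name }}; the variable name is captured in group 1.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_mustache(template: str, data: Mapping[str, Any]) -> str:
    """Replace each {{name}} with str(data[name]); missing names render as ''."""
    return PLACEHOLDER.sub(lambda m: str(data.get(m.group(1), "")), template)


if __name__ == "__main__":
    print(render_mustache(
        "The user's first name is {{first_name}} and their last name is {{last_name}}.",
        {"first_name": "Jessie", "last_name": "Irwin"},
    ))
```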

The get_chat_response function could easily be added as a route to a FastAPI or Flask app, so that a front-end web application can call it.
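Whatever web framework you choose, the route body mostly does three things: parse the JSON request, call get_chat_response, and serialize the reply. The sketch below keeps that logic framework-agnostic (standard library only) so a FastAPI, Flask, or other handler could call it. The JSON shape ({"messages": [...], "context": {...}} in, {"reply": ...} out) and the name handle_chat_request are assumptions, and the demo uses a stand-in for get_chat_response so it runs without Azure credentials.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable


def handle_chat_request(raw_body: bytes, get_chat_response: Callable[..., Any]) -> bytes:
    """Parse a JSON request body, call get_chat_response, return a JSON reply body."""
    payload = json.loads(raw_body)
    if not isinstance(payload, dict):
        raise ValueError("Request body must be a JSON object.")
    messages = payload.get("messages")
    context = payload.get("context", {})
    if not isinstance(messages, list) or not isinstance(context, dict):
        raise ValueError("Expected 'messages' to be a list and 'context' to be an object.")
    response = get_chat_response(messages=messages, context=context)
    reply = response.choices[0].message.content
    return json.dumps({"reply": reply}).encode("utf-8")


if __name__ == "__main__":
    # Stand-in for the real get_chat_response; mimics response.choices[0].message.content.
    def fake_get_chat_response(messages, context):
        text = f"Yo {context['first_name']}, you asked: {messages[-1]['content']}"
        return SimpleNamespace(choices=[SimpleNamespace(message=SimpleNamespace(content=text))])

    body = json.dumps({
        "messages": [{"role": "user", "content": "what city has the best food in the world?"}],
        "context": {"first_name": "Jessie", "last_name": "Irwin"},
    }).encode("utf-8")
    print(handle_chat_request(body, fake_get_chat_response).decode("utf-8"))
```

Passing get_chat_response in as an argument keeps the handler testable without network calls; in a real route you would pass the function defined in chat.py.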

Now simulate passing information from a frontend application to this function. Add the following code to the end of your chat.py file. Feel free to play with the message and add your own name.

if __name__ == "__main__":
    response = get_chat_response(
        messages=[{"role": "user", "content": "what city has the best food in the world?"}],
        context={"first_name": "Jessie", "last_name": "Irwin"},
    )
    print(response.choices[0].message.content)

Run the revised script to see the response from the model with this new input.

python chat.py