Tutorial: Part 1 - Set up project and development environment to build a custom knowledge retrieval (RAG) app with the Azure AI Foundry SDK

In this tutorial, you use the Azure AI Foundry SDK (and other libraries) to build, configure, and evaluate a chat app for your retail company called Contoso Trek. Your retail company specializes in outdoor camping gear and clothing. The chat app should answer questions about your products and services. For example, the chat app can answer questions such as "which tent is the most waterproof?" or "what is the best sleeping bag for cold weather?".

This tutorial is part one of a three-part series. It gets you ready to write code in part two and evaluate your chat app in part three. In this part, you:

- Create a project

- Create an Azure AI Search service

- Install the Azure CLI and sign in

- Install Python and packages

- Deploy models into your project

- Configure your environment variables

If you've completed other tutorials or quickstarts, you might have already created some of the resources needed for this tutorial. If you have, feel free to skip those steps here.

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Prerequisites

- An Azure account with an active subscription. If you don't have one, create an account for free.

Create a project

To create a project in Azure AI Foundry, follow these steps:

- Go to the Home page of Azure AI Foundry.

- Select + Create project.

- Enter a name for the project. Keep all the other settings as default.

- Projects are created in hubs. If you see Create a new hub, select it and specify a name. Then select Next. (If you don't see Create a new hub, don't worry; a new hub is being created for you.)

- Select Customize to specify properties of the hub.

- Use any values you want, except for Region. For this tutorial series, we recommend either East US 2 or Sweden Central as the region.

- Select Next.

- Select Create project.

Deploy models

You need two models to build a RAG-based chat app: an Azure OpenAI chat model (gpt-4o-mini) and an Azure OpenAI embedding model (text-embedding-ada-002). Deploy these models in your Azure AI Foundry project, using this set of steps for each model.

These steps deploy a model to a real-time endpoint from the Azure AI Foundry portal model catalog:

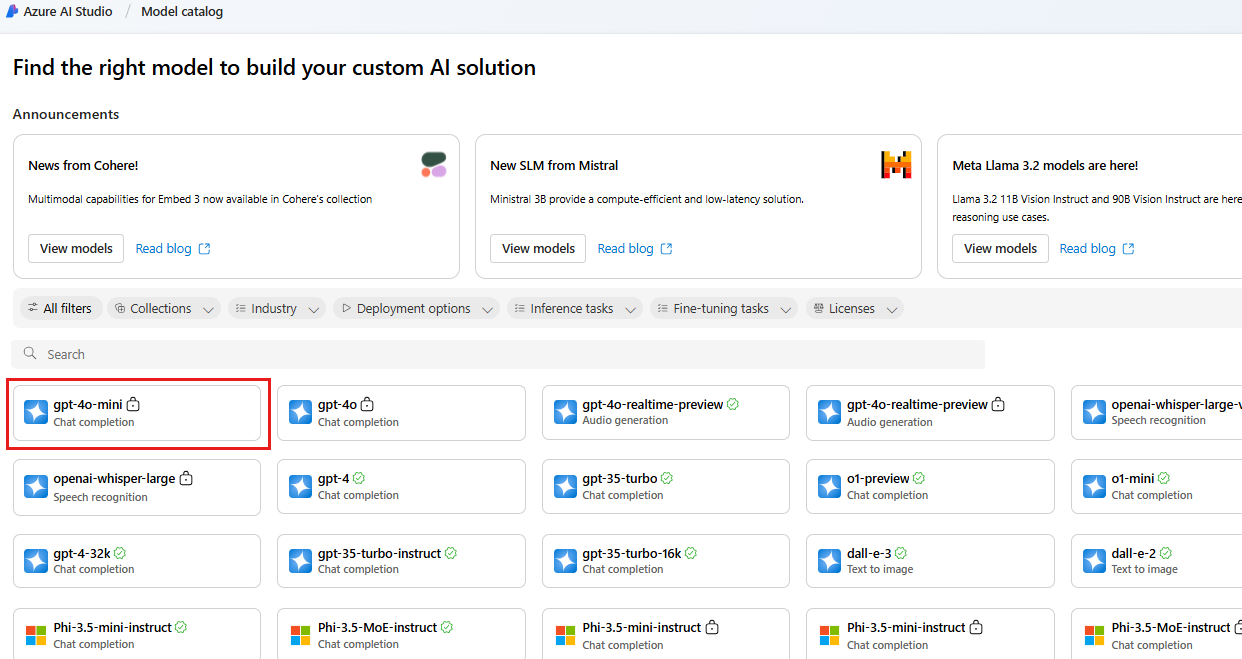

On the left navigation pane, select Model catalog.

Select the gpt-4o-mini model from the list of models. You can use the search bar to find it.

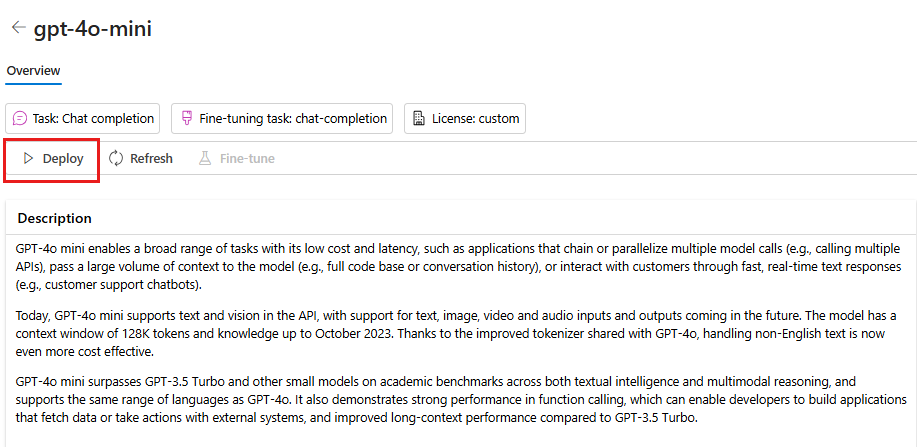

On the model details page, select Deploy.

Leave the default Deployment name and select Deploy. If the model isn't available in your region, a different region is selected for you and connected to your project. In this case, select Connect and deploy.

After you deploy the gpt-4o-mini model, repeat the steps to deploy the text-embedding-ada-002 model.

Create an Azure AI Search service

The goal with this application is to ground the model responses in your custom data. The search index is used to retrieve relevant documents based on the user's question.

You need an Azure AI Search service and connection in order to create a search index.

Note

Creating an Azure AI Search service and subsequent search indexes has associated costs. You can see details about pricing and pricing tiers for the Azure AI Search service on the creation page, to confirm cost before creating the resource.

If you already have an Azure AI Search service, you can skip to the next section.

Otherwise, you can create an Azure AI Search service using the Azure portal.

Tip

This step is the only time you use the Azure portal in this tutorial series. The rest of your work is done in Azure AI Foundry portal or in your local development environment.

- Create an Azure AI Search service in the Azure portal.

- Select your resource group and instance details. You can see details about pricing and pricing tiers on this page.

- Continue through the wizard and select Review + create to review the resource.

- Confirm the details of your Azure AI Search service, including estimated cost.

- Select Create to create the Azure AI Search service.

Connect Azure AI Search to your project

If you already have an Azure AI Search connection in your project, you can skip to Install the Azure CLI and sign in.

In the Azure AI Foundry portal, check for an Azure AI Search connected resource.

In Azure AI Foundry, go to your project and select Management center from the left pane.

In the Connected resources section, look to see if you have a connection of type Azure AI Search.

If you have an Azure AI Search connection, you can skip ahead to the next section.

Otherwise, select New connection and then Azure AI Search.

Find your Azure AI Search service in the options and select Add connection.

Use API key for Authentication.

Important

The API key option isn't recommended for production. To select and use the recommended Microsoft Entra ID authentication option, you must also configure access control for the Azure AI Search service. Assign the Search Index Data Contributor and Search Service Contributor roles to your user account. For more information, see Connect to Azure AI Search using roles and Role-based access control in Azure AI Foundry portal.

Select Add connection.

Install the Azure CLI and sign in

You install the Azure CLI and sign in from your local development environment, so that you can use your user credentials to call the Azure OpenAI service.

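In most cases, you can install the Azure CLI from your terminal using the command for your operating system.

On Windows:

winget install -e --id Microsoft.AzureCLI

On Linux (Debian or Ubuntu):

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

On macOS:

brew update && brew install azure-cli

If these commands don't work for your operating system or setup, follow the instructions in How to install the Azure CLI.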

After you install the Azure CLI, run the az login command and sign in using the browser:

az login

Alternatively, you can log in manually via the browser with a device code.

az login --use-device-code
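To confirm from Python that the CLI is installed and that a sign-in session exists, a minimal sketch like the following might help. It uses only the standard library and shells out to the az account show command. The helper names cli_available and signed_in_account are hypothetical and are not part of any Azure SDK.

```python
import json
import shutil
import subprocess


def cli_available(executable: str = "az") -> bool:
    """Return True if the given executable is found on PATH."""
    return shutil.which(executable) is not None


def signed_in_account(executable: str = "az"):
    """Return the active subscription as a dict, or None if unavailable.

    Hypothetical helper: it runs `az account show`, which prints the
    current subscription as JSON when a sign-in session exists.
    """
    path = shutil.which(executable)
    if path is None:
        return None
    result = subprocess.run(
        [path, "account", "show", "--output", "json"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return json.loads(result.stdout)


if __name__ == "__main__":
    if not cli_available():
        print("Azure CLI not found on PATH.")
    else:
        account = signed_in_account()
        print("Signed in." if account else "Not signed in; run az login.")
```

If the script reports that you aren't signed in, run az login again before you continue.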

Create a new Python environment

First, create a new Python environment in which to install the packages you need for this tutorial. DO NOT install packages into your global Python installation. Always use a virtual or conda environment when installing Python packages; otherwise, you can break your global install of Python.

If needed, install Python

We recommend using Python 3.10 or later, but having at least Python 3.8 is required. If you don't have a suitable version of Python installed, you can follow the instructions in the VS Code Python Tutorial for the easiest way of installing Python on your operating system.
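To see how your interpreter compares with these version requirements, you could run a short check like the one below. It's only a sketch: the check_python helper and its labels are hypothetical, and the two thresholds are taken from the paragraph above.

```python
import sys

MINIMUM = (3, 8)       # required by this tutorial
RECOMMENDED = (3, 10)  # recommended by this tutorial


def check_python(version=None) -> str:
    """Classify a (major, minor) version as 'recommended', 'supported', or 'too old'."""
    if version is None:
        version = sys.version_info[:2]
    version = tuple(version[:2])
    if version >= RECOMMENDED:
        return "recommended"
    if version >= MINIMUM:
        return "supported"
    return "too old"


print(f"Python {sys.version_info.major}.{sys.version_info.minor}: {check_python()}")
```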

Create a virtual environment

If you already have Python 3.10 or higher installed, you can create and activate a virtual environment using the following commands.

On Windows:

py -3 -m venv .venv

.venv\scripts\activate

On Linux or macOS:

python3 -m venv .venv

source .venv/bin/activate

Activating the Python environment means that when you run python or pip from the command line, you use the Python interpreter contained in the .venv folder of your application.

Note

You can use the deactivate command to exit the Python virtual environment, and you can reactivate it later when needed.
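If you're unsure whether the environment is active, a quick check from Python can confirm which interpreter is in use. The following sketch compares sys.prefix with sys.base_prefix, which differ inside an environment created with the venv module. The in_virtual_environment helper is hypothetical, and the check doesn't detect conda environments.

```python
import sys


def in_virtual_environment(prefix=None, base_prefix=None) -> bool:
    """Return True when the interpreter runs inside a venv-style environment.

    In an environment created by the venv module, sys.prefix points at the
    environment folder while sys.base_prefix points at the base install.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


print("Interpreter:", sys.executable)
print("Virtual environment active:", in_virtual_environment())
```

When the environment is active, the interpreter path printed by the script should be inside the .venv folder.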

Install packages

Install azure-ai-projects (preview) and azure-ai-inference (preview), along with other required packages.

First, create a file named requirements.txt in your project folder. Add the following packages to the file:

azure-ai-projects
azure-ai-inference[prompts]
azure-identity
azure-search-documents
pandas
python-dotenv
opentelemetry-api

Install the required packages:

pip install -r requirements.txt
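After the install finishes, you might want to confirm that every package landed in the active environment. The sketch below uses importlib.metadata from the standard library to look up each distribution. The helper names distribution_name and missing_packages are hypothetical, and the list repeats the package names from requirements.txt.

```python
import re
from importlib import metadata

# Package names from the requirements.txt in this tutorial.
REQUIREMENTS = [
    "azure-ai-projects",
    "azure-ai-inference[prompts]",
    "azure-identity",
    "azure-search-documents",
    "pandas",
    "python-dotenv",
    "opentelemetry-api",
]


def distribution_name(requirement: str) -> str:
    """Strip extras and version specifiers, e.g. 'pkg[extra]>=1.0' -> 'pkg'."""
    return re.split(r"[\[<>=!~; ]", requirement.strip(), maxsplit=1)[0]


def missing_packages(requirements) -> list:
    """Return the distribution names that aren't installed in this environment."""
    missing = []
    for requirement in requirements:
        name = distribution_name(requirement)
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_packages(REQUIREMENTS)
    print("All packages installed." if not missing else f"Missing: {missing}")
```

If any names are reported as missing, check that the virtual environment is active and run the pip install command again.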

Create helper script

Create a folder for your work. Create a file called config.py in this folder. This helper script is used in the next two parts of the tutorial series. Add the following code:

# ruff: noqa: ANN201, ANN001

import os
import sys
import pathlib
import logging
from azure.identity import DefaultAzureCredential
from azure.ai.projects import AIProjectClient
from azure.ai.inference.tracing import AIInferenceInstrumentor

# load environment variables from the .env file
from dotenv import load_dotenv

load_dotenv()

# Set "./assets" as the path where assets are stored, resolving the absolute path:
ASSET_PATH = pathlib.Path(__file__).parent.resolve() / "assets"

# Configure a root app logger that prints info level logs to stdout
logger = logging.getLogger("app")
logger.setLevel(logging.INFO)
logger.addHandler(logging.StreamHandler(stream=sys.stdout))


# Returns a module-specific logger, inheriting from the root app logger
def get_logger(module_name):
    return logging.getLogger(f"app.{module_name}")


# Enable instrumentation and logging of telemetry to the project
def enable_telemetry(log_to_project: bool = False):
    AIInferenceInstrumentor().instrument()

    # enable logging message contents
    os.environ["AZURE_TRACING_GEN_AI_CONTENT_RECORDING_ENABLED"] = "true"

    if log_to_project:
        from azure.monitor.opentelemetry import configure_azure_monitor

        project = AIProjectClient.from_connection_string(
            conn_str=os.environ["AIPROJECT_CONNECTION_STRING"], credential=DefaultAzureCredential()
        )
        tracing_link = f"https://ai.azure.com/tracing?wsid=/subscriptions/{project.scope['subscription_id']}/resourceGroups/{project.scope['resource_group_name']}/providers/Microsoft.MachineLearningServices/workspaces/{project.scope['project_name']}"
        application_insights_connection_string = project.telemetry.get_connection_string()
        if not application_insights_connection_string:
            logger.warning(
                "No application insights configured, telemetry will not be logged to project. Add application insights at:"
            )
            logger.warning(tracing_link)

            return

        configure_azure_monitor(connection_string=application_insights_connection_string)
        logger.info("Enabled telemetry logging to project, view traces at:")
        logger.info(tracing_link)
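The get_logger helper relies on the dotted-name hierarchy of the standard logging module: a logger named app.chat has no handler of its own, so its records propagate to the handler on the parent logger named app, and it inherits that logger's INFO level. The following standalone sketch illustrates that behavior without any Azure packages. It writes to an in-memory stream instead of stdout so the output can be inspected, and chat is a hypothetical module name.

```python
import io
import logging

# Same pattern as config.py: one parent logger named "app" with a handler.
stream = io.StringIO()  # stands in for sys.stdout so the output can be inspected
app_logger = logging.getLogger("app")
app_logger.setLevel(logging.INFO)
app_logger.addHandler(logging.StreamHandler(stream=stream))


def get_logger(module_name):
    return logging.getLogger(f"app.{module_name}")


# "chat" is a hypothetical module name used only for this illustration.
chat_logger = get_logger("chat")
chat_logger.info("retrieving documents")
chat_logger.debug("this debug record is filtered out")

print(stream.getvalue(), end="")
```

Only the info message is printed, because the child logger's effective level comes from the app logger.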

Configure environment variables

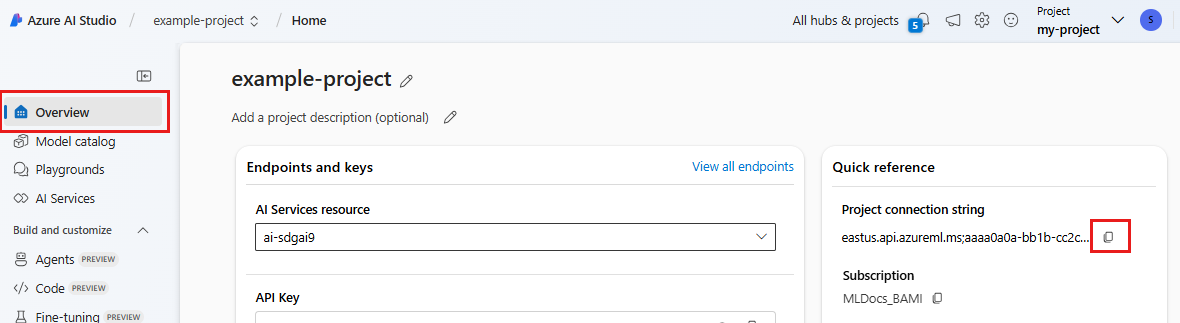

Your project connection string is required to call the Azure OpenAI service from your code. In this tutorial, you save this value in a .env file, which is a file that contains environment variables that your application can read.

Create a .env file, and paste the following code:

AIPROJECT_CONNECTION_STRING=<your-connection-string>

AISEARCH_INDEX_NAME="example-index"

EMBEDDINGS_MODEL="text-embedding-ada-002"

INTENT_MAPPING_MODEL="gpt-4o-mini"

CHAT_MODEL="gpt-4o-mini"

EVALUATION_MODEL="gpt-4o-mini"

- Find your connection string in your Azure AI Foundry project. Open the project, then find the connection string on the Overview page. Copy the connection string and paste it into the .env file.

- If you don't yet have a search index, keep the value "example-index" for AISEARCH_INDEX_NAME. In Part 2 of this tutorial, you create the index using this name. If you previously created a search index that you want to use instead, update the value to match the name of that search index.

- If you changed the names of the models when you deployed them, update the values in the .env file to match the names you used.
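Before you move on, it can be useful to check that none of these settings is missing or still holds a placeholder. The sketch below reads from os.environ, so in practice you'd call load_dotenv() first, as config.py does. The missing_settings helper is hypothetical, and treating any value wrapped in angle brackets as a placeholder is an assumption based on the template above.

```python
import os

# Variable names from the .env file in this tutorial.
REQUIRED_SETTINGS = (
    "AIPROJECT_CONNECTION_STRING",
    "AISEARCH_INDEX_NAME",
    "EMBEDDINGS_MODEL",
    "INTENT_MAPPING_MODEL",
    "CHAT_MODEL",
    "EVALUATION_MODEL",
)


def missing_settings(env=None) -> list:
    """Return required names that are unset, empty, or still a <placeholder>."""
    env = os.environ if env is None else env
    missing = []
    for name in REQUIRED_SETTINGS:
        value = env.get(name, "").strip()
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(name)
    return missing


if __name__ == "__main__":
    problems = missing_settings()
    print("Environment looks complete." if not problems else f"Check these settings: {problems}")
```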

Tip

If you're working in VS Code, close and reopen the terminal window after you've saved changes in the .env file.

Warning

Ensure that your .env is in your .gitignore file so that you don't accidentally check it into your git repository.
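One way to double-check this is a small script that looks for a .env entry in your .gitignore file. The following is a deliberately simplified sketch: the helper names are hypothetical, and it only recognizes the literal patterns .env and /.env rather than evaluating full gitignore rules such as wildcards or negations.

```python
from pathlib import Path


def env_is_ignored(gitignore_text: str) -> bool:
    """Return True if a line in the .gitignore text is a plain '.env' pattern.

    Simplification: only the literal patterns '.env' and '/.env' are recognized;
    wildcards, negations, and nested .gitignore files aren't evaluated.
    """
    for line in gitignore_text.splitlines():
        pattern = line.strip()
        if pattern in (".env", "/.env"):
            return True
    return False


def check_project(folder: str = ".") -> bool:
    """Check the .gitignore file in the given folder, if there is one."""
    gitignore = Path(folder) / ".gitignore"
    if not gitignore.is_file():
        return False
    return env_is_ignored(gitignore.read_text(encoding="utf-8"))


if __name__ == "__main__":
    print(".env ignored:", check_project())
```

For an authoritative answer inside a git repository, the git check-ignore .env command applies the full set of ignore rules.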

Clean up resources

To avoid incurring unnecessary Azure costs, you should delete the resources you created in this tutorial if they're no longer needed. To manage resources, you can use the Azure portal.

Don't delete them yet if you want to build a chat app in the next part of this tutorial series.

Next step

In this tutorial, you set up everything you need to build a custom chat app with the Azure AI Foundry SDK. In the next part of this tutorial series, you build the custom app.