How to use Azure OpenAI Service in Azure AI Foundry portal

You might have existing Azure OpenAI Service resources and model deployments that you created using the old Azure OpenAI Studio or via code. You can pick up where you left off by using your existing resources in Azure AI Foundry portal.

This article describes how to:

- Use Azure OpenAI Service models outside of a project.

- Use Azure OpenAI Service models in an Azure AI Foundry project.

Tip

You can use Azure OpenAI Service in Azure AI Foundry portal without creating a project or a connection. If you're only working with the models and deployments, we recommend that you work outside of a project. Eventually, you might want to work in a project for tasks such as managing connections, permissions, and deploying models to production.

Use Azure OpenAI models outside of a project

You can use your existing Azure OpenAI model deployments in Azure AI Foundry portal outside of a project. Start here if you previously deployed models using the old Azure OpenAI Studio or via the Azure OpenAI Service SDKs and APIs.

To use Azure OpenAI Service outside of a project, follow these steps:

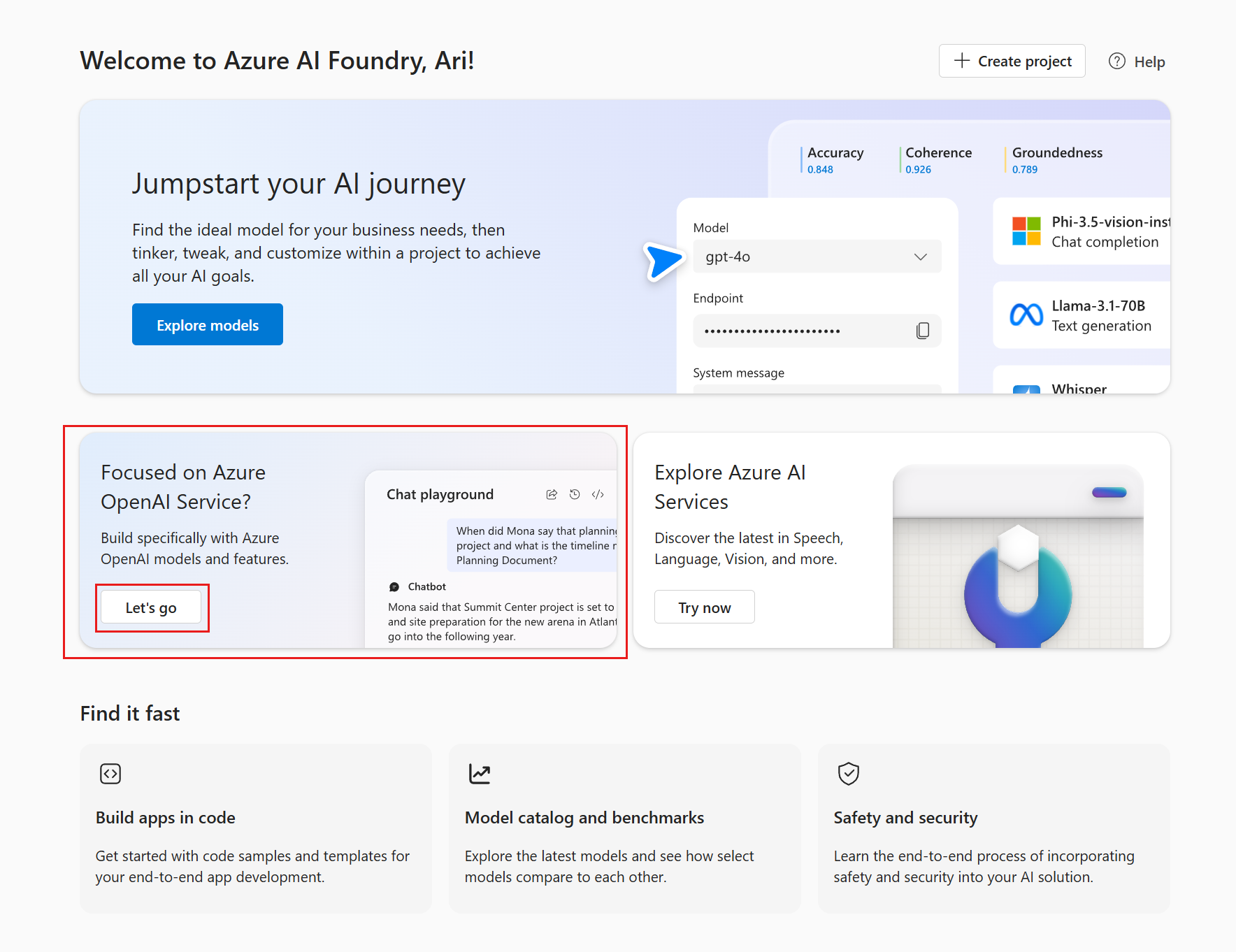

Go to the Azure AI Foundry home page and make sure you're signed in with the Azure subscription that has your Azure OpenAI Service resource.

Find the tile that says Focused on Azure OpenAI Service? and select Let's go.

If you don't see this tile, you can also go directly to the Azure OpenAI Service page in Azure AI Foundry portal.

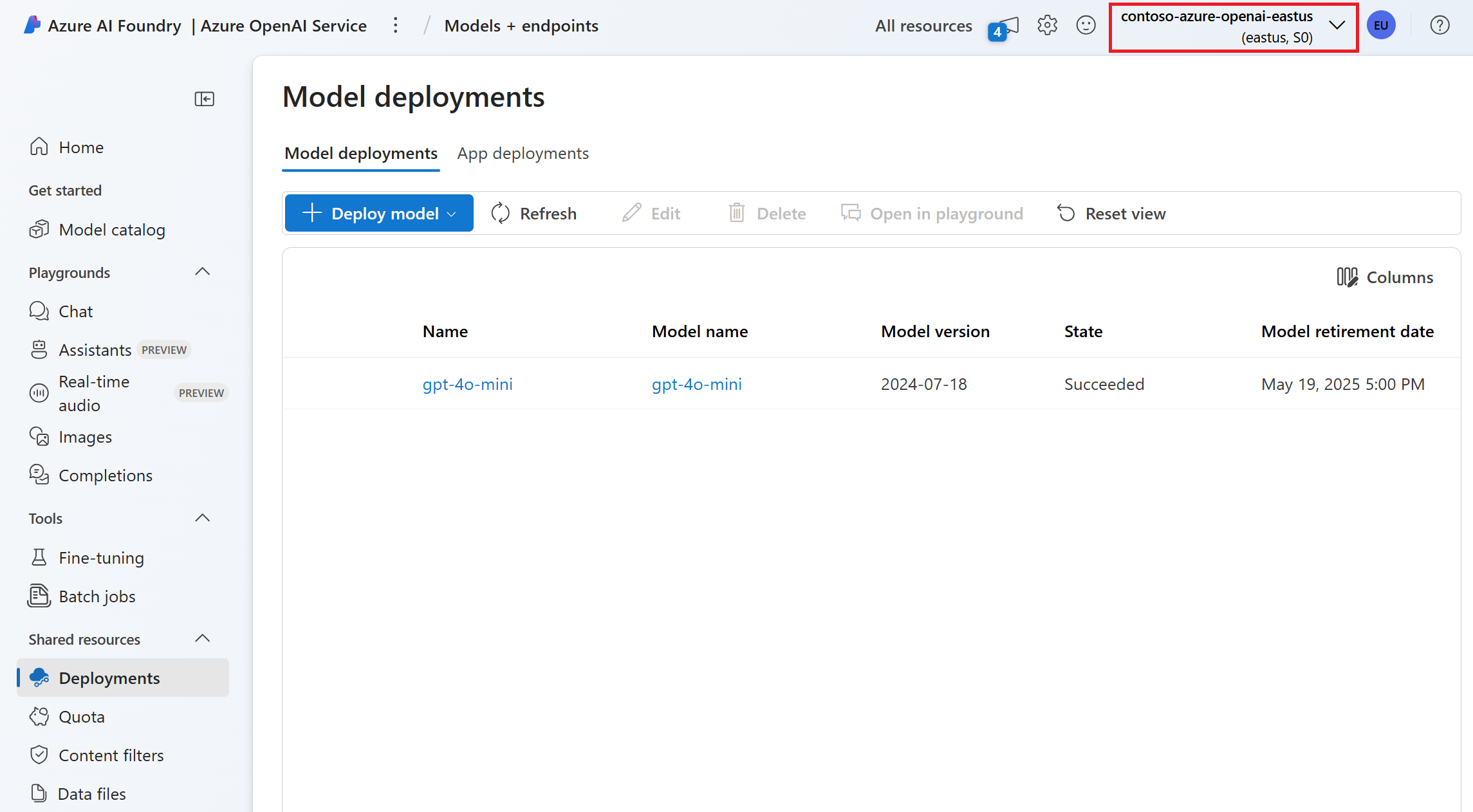

You should see your existing Azure OpenAI Service resources. In this example, the Azure OpenAI Service resource

contoso-azure-openai-eastusis selected.If your subscription has multiple Azure OpenAI Service resources, you can use the selector or go to All resources to see all your resources.

If you create more Azure OpenAI Service resources later (such as via the Azure portal or APIs), you can also access them from this page.

Use Azure OpenAI Service in a project

You might eventually want to use a project for tasks such as managing connections, permissions, and deploying models to production. You can use your existing Azure OpenAI Service resources in an Azure AI Foundry project.

Let's look at two ways to connect Azure OpenAI Service resources to a project:

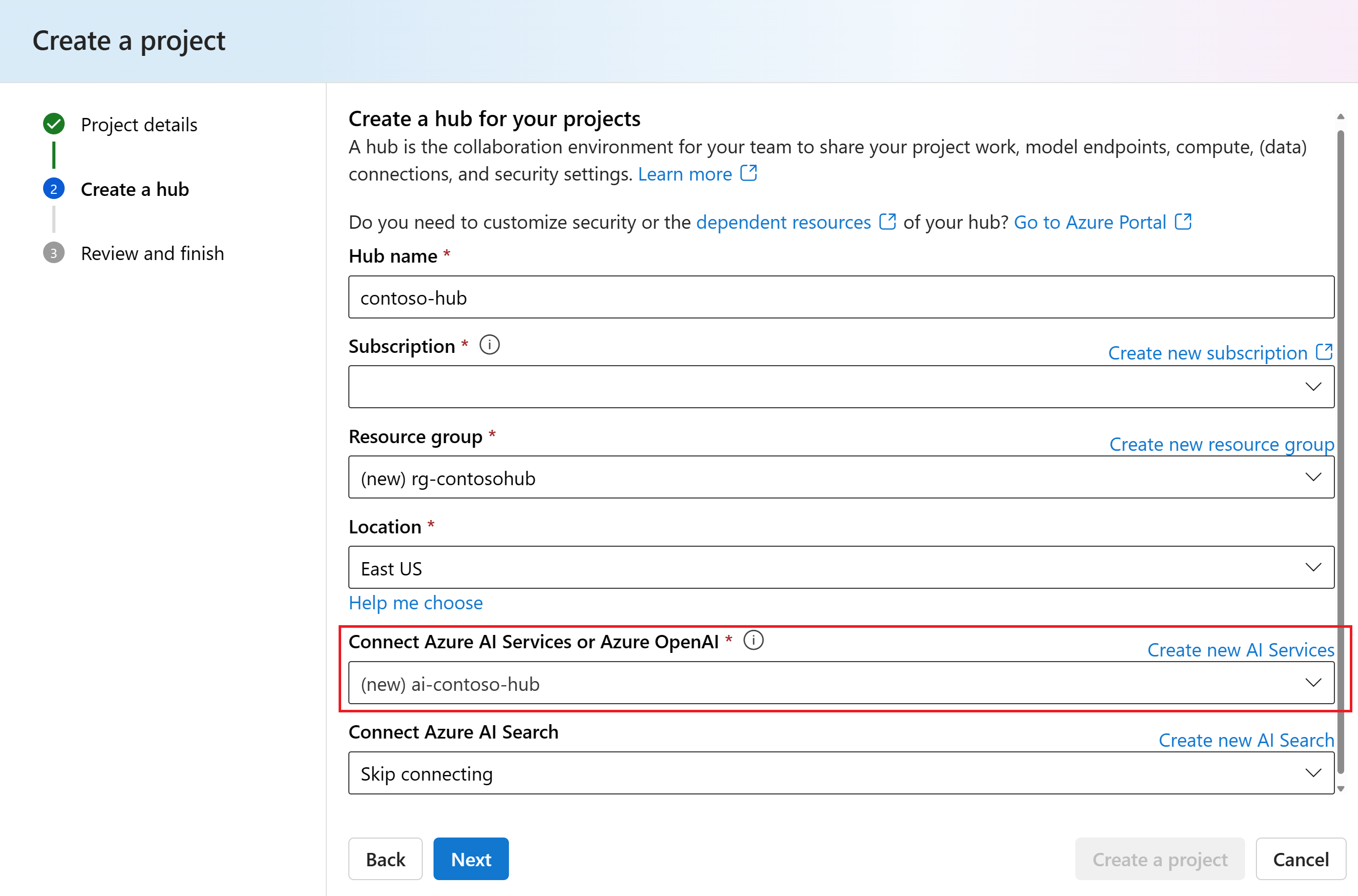

Connect Azure OpenAI Service when you create a project for the first time

When you create a project for the first time, you also create a hub. When you create a hub, you can select an existing Azure AI services resource (including Azure OpenAI) or create a new AI services resource.

For more details about creating a project, see the how-to guide to create an Azure AI Foundry project or the quickstart to create a project and use the chat playground.

Connect Azure OpenAI Service after you create a project

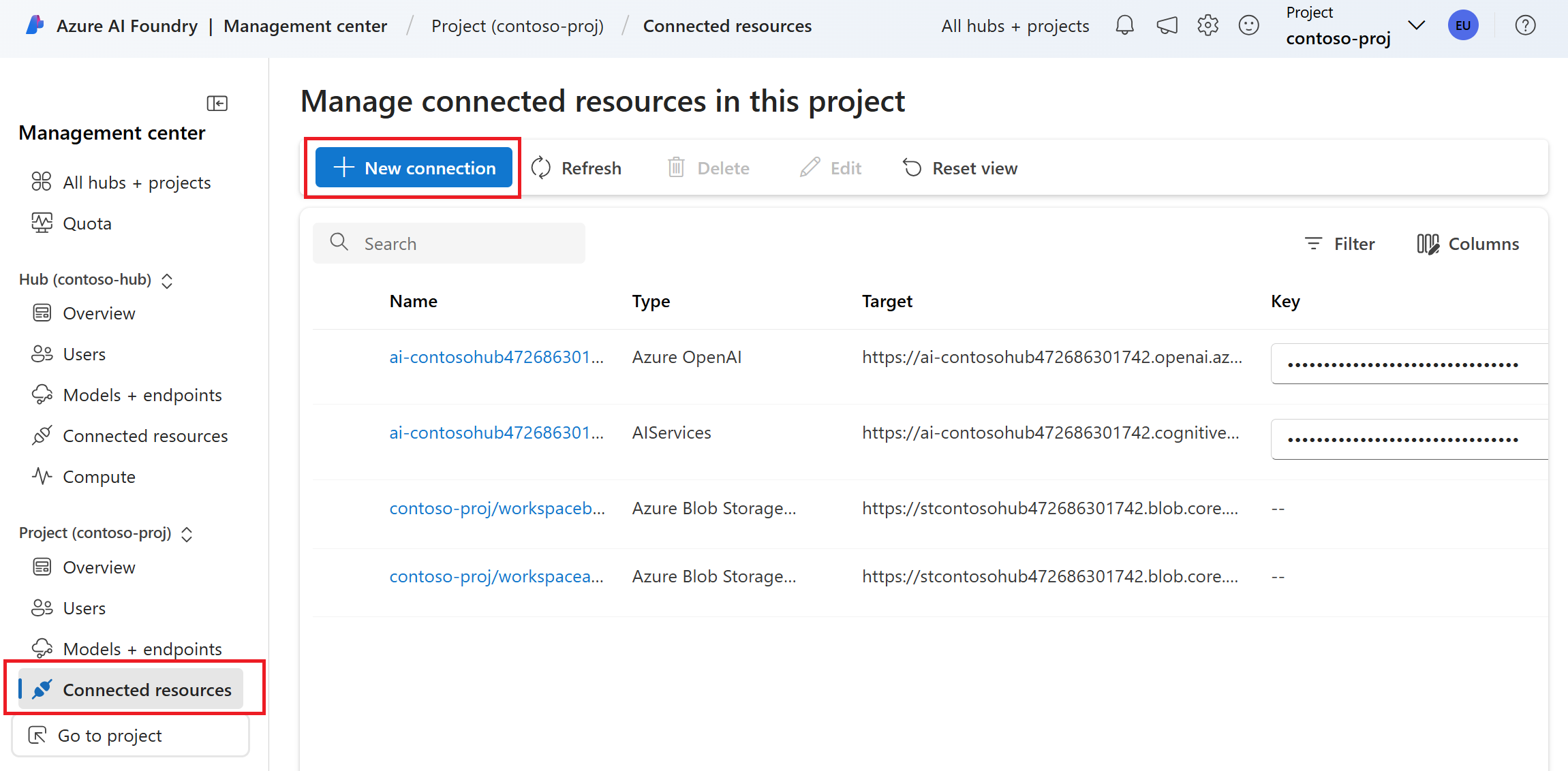

If you already have a project and you want to connect your existing Azure OpenAI Service resources, follow these steps:

Go to your Azure AI Foundry project.

Select Management center from the left pane.

Select Connected resources (under Project) from the left pane.

Select + New connection.

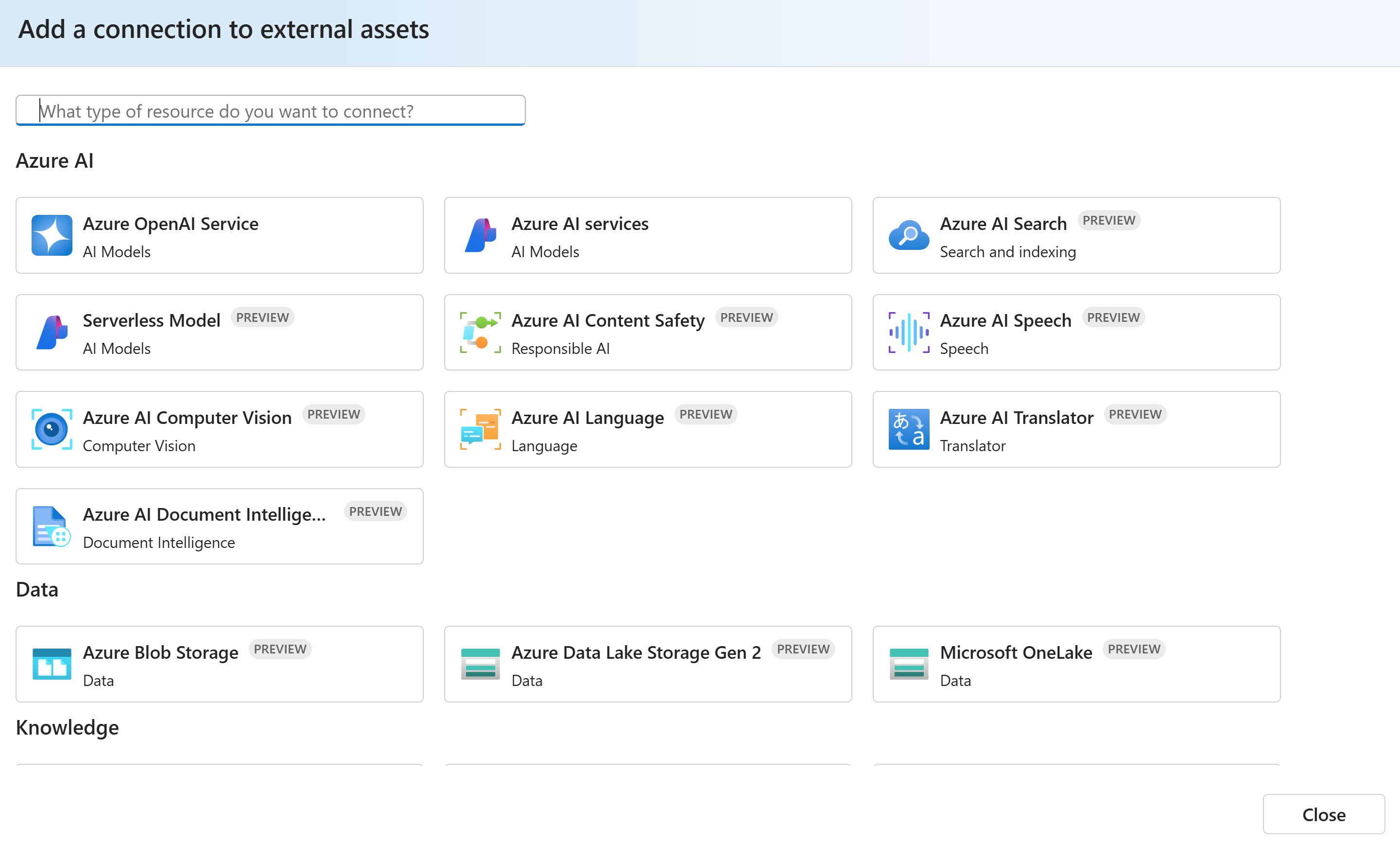

On the Add a connection to external assets page, select the kind of AI service that you want to connect to the project. For example, you can select Azure OpenAI Service, Azure AI Content Safety, Azure AI Speech, Azure AI Language, and other AI services.

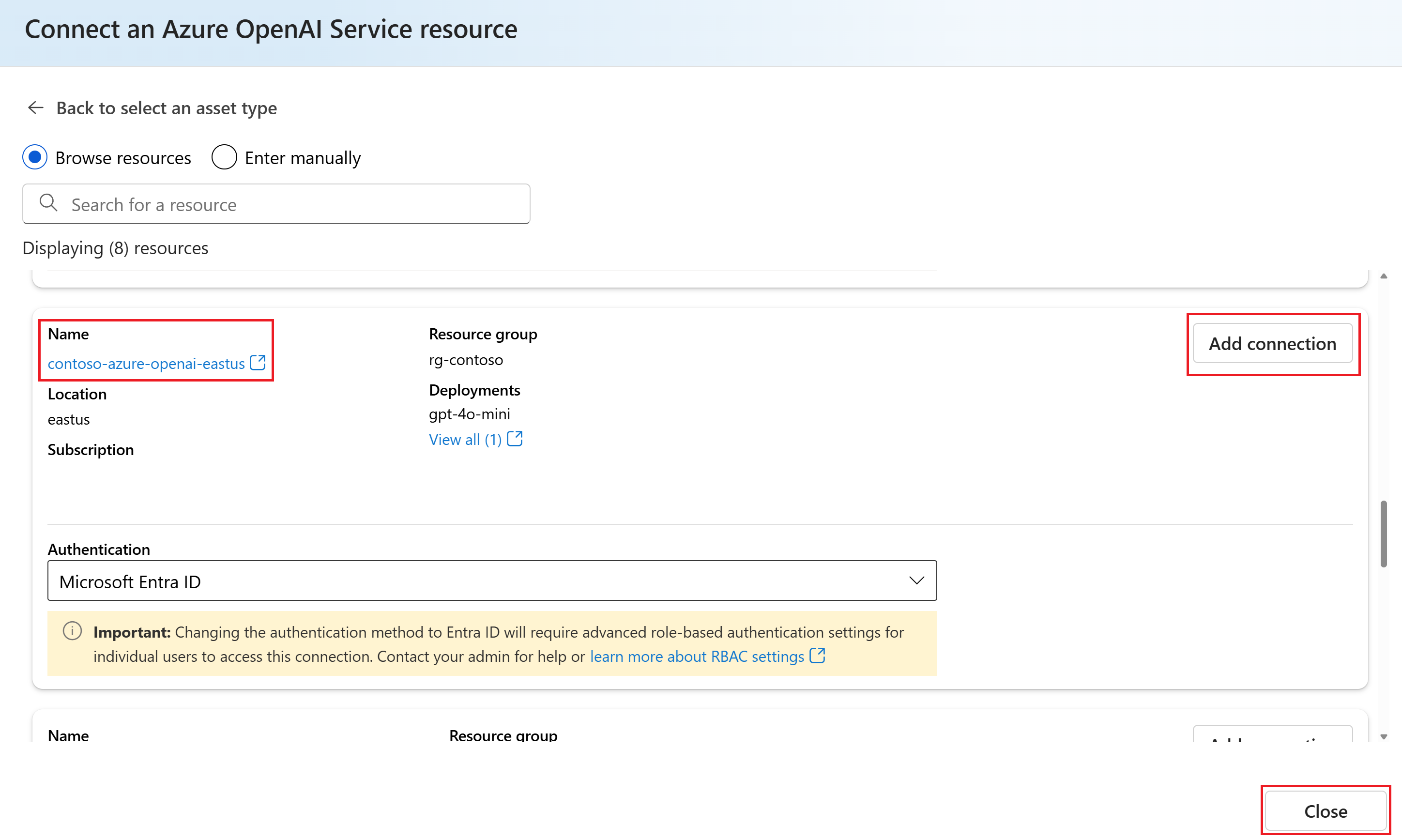

On the next page in the wizard, browse or search to find the resource you want to connect. Then select Add connection.

After the resource is connected, select Close to return to the Connected resources page. You should see the new connection listed.

Try Azure OpenAI models in the playgrounds

You can try Azure OpenAI models in the Azure OpenAI Service playgrounds outside of a project.

Tip

You can also try Azure OpenAI models in the project-level playgrounds. However, if you're only working with Azure OpenAI Service models, we recommend working outside of a project.

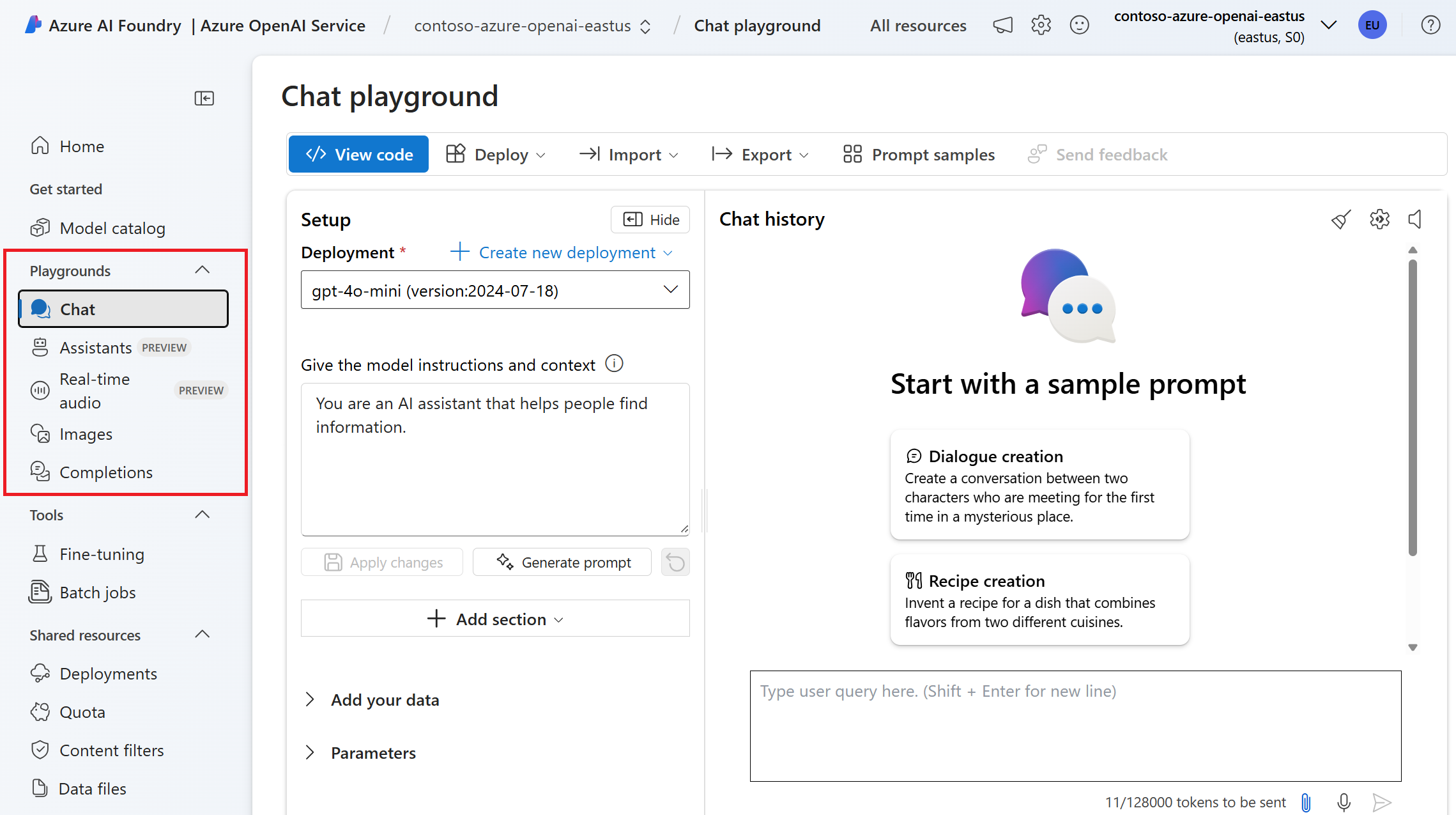

1. Go to the Azure OpenAI Service page in Azure AI Foundry portal.

2. Select a playground under Resource playground in the left pane.

Here are a few guides to help you get started with Azure OpenAI Service playgrounds:

- Quickstart: Use the chat playground

- Quickstart: Get started using Azure OpenAI Assistants

- Quickstart: Use GPT-4o in the real-time audio playground

- Quickstart: Analyze images and video with GPT-4 for Vision in the playground

Each playground has different model requirements and capabilities, and supported regions vary by model. For more information about model availability by region, see the Azure OpenAI Service models documentation.

Fine-tune Azure OpenAI models

In Azure AI Foundry portal, you can fine-tune several Azure OpenAI models. The purpose is typically to improve model performance on specific tasks or to introduce information that wasn't well represented when the base model was originally trained.

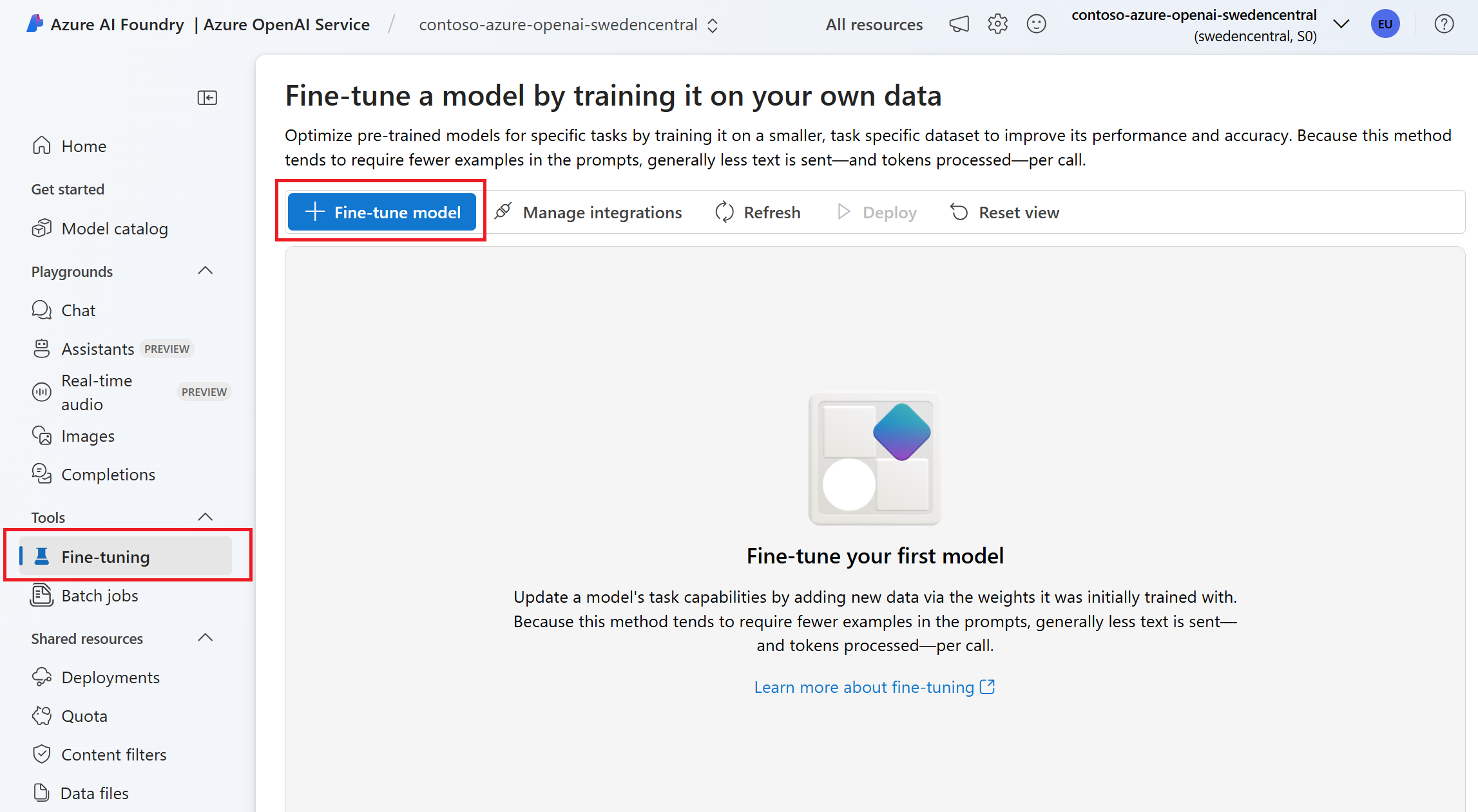

1. To fine-tune Azure OpenAI models, go to the Azure OpenAI Service page in Azure AI Foundry portal.

2. Select Fine-tuning from the left pane.

3. Select + Fine-tune model on the Generative AI fine-tuning tab.

4. Follow the detailed how-to guide to fine-tune the model.

For more information about fine-tuning Azure AI models, see:

- Overview of fine-tuning in Azure AI Foundry portal

- How to fine-tune Azure OpenAI models

- Azure OpenAI models that are available for fine-tuning
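Fine-tuning needs training data. For chat models, Azure OpenAI fine-tuning typically expects a JSON Lines (JSONL) file with one conversation per line, stored in a `messages` list of `system`, `user`, and `assistant` turns. The following Python sketch uses only the standard library to assemble and sanity-check such a file. It assumes that chat-style schema; the helper names (`make_example`, `check_example`, `to_jsonl`) and the sample conversation are hypothetical, and the checks are a local convenience, not the service's own validation.

```python
import json

# Assumption: chat fine-tuning files use the chat completions message schema
# with these roles. Confirm against the fine-tuning how-to guide.
ALLOWED_ROLES = {"system", "user", "assistant"}


def make_example(system: str, user: str, assistant: str) -> dict:
    """Build one hypothetical training example with a single user/assistant turn."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def check_example(example: dict) -> None:
    """Raise ValueError if an example is obviously malformed.

    Local sanity check only; the service performs its own validation.
    """
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        raise ValueError("example needs a non-empty 'messages' list")
    for msg in messages:
        if msg.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"unexpected role: {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            raise ValueError("each message needs string 'content'")
    # The model learns from assistant turns, so require at least one.
    if not any(msg["role"] == "assistant" for msg in messages):
        raise ValueError("example has no assistant message")


def to_jsonl(examples: list[dict]) -> str:
    """Serialize checked examples as JSON Lines: one JSON object per line."""
    lines = []
    for example in examples:
        check_example(example)
        lines.append(json.dumps(example, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    examples = [
        make_example(
            "You are a support assistant for Contoso.",  # hypothetical content
            "How do I reset my password?",
            "Select Forgot password on the sign-in page and follow the emailed link.",
        )
    ]
    # Save this output to a .jsonl file and upload it in the fine-tuning wizard.
    print(to_jsonl(examples), end="")
```

Serializing to a string keeps the check separate from file handling, so you can write the result wherever your workflow expects it.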

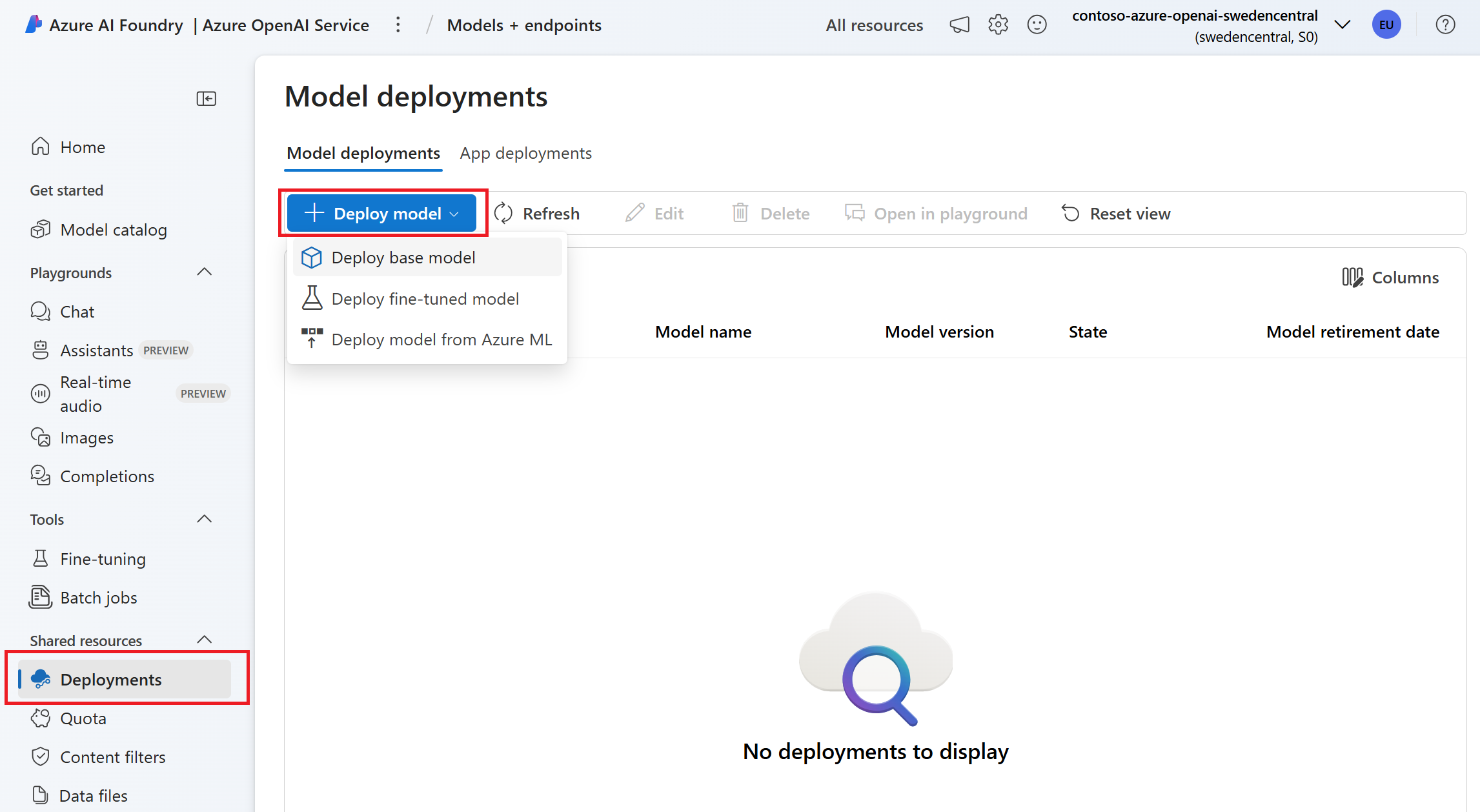

Deploy models to production

You can deploy Azure OpenAI base models and fine-tuned models to production via the Azure AI Foundry portal.

1. Go to the Azure OpenAI Service page in Azure AI Foundry portal.

2. Select Deployments from the left pane.

You can create a new deployment or view existing deployments. For more information about deploying Azure OpenAI models, see Deploy Azure OpenAI models to production.
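Deployments can also be created outside the portal. The following Python sketch builds, but doesn't send, an Azure Resource Manager request that creates a model deployment under an Azure OpenAI resource. It assumes the `Microsoft.CognitiveServices/accounts/deployments` resource type and the `2023-05-01` management API version; the subscription ID, resource group, deployment name, and model version are placeholders. Sending it requires an HTTP PUT with a Microsoft Entra ID bearer token for Azure Resource Manager, which this sketch leaves out.

```python
import json

# Assumption: management API version for Microsoft.CognitiveServices.
# Check the REST reference for the versions available to you.
MGMT_API_VERSION = "2023-05-01"


def deployment_url(subscription_id: str, resource_group: str,
                   account_name: str, deployment_name: str) -> str:
    """Build the ARM URL for one deployment under an Azure OpenAI resource."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.CognitiveServices/accounts/{account_name}"
        f"/deployments/{deployment_name}"
        f"?api-version={MGMT_API_VERSION}"
    )


def deployment_body(model_name: str, model_version: str,
                    sku_name: str = "Standard", capacity: int = 1) -> dict:
    """Build the JSON body for a PUT that creates or updates a deployment."""
    return {
        "sku": {"name": sku_name, "capacity": capacity},
        "properties": {
            "model": {"format": "OpenAI", "name": model_name, "version": model_version}
        },
    }


if __name__ == "__main__":
    # All identifiers below are placeholders, not real resources.
    url = deployment_url("00000000-0000-0000-0000-000000000000", "my-rg",
                         "contoso-azure-openai-eastus", "my-gpt-4o")
    body = deployment_body("gpt-4o", "2024-08-06")
    print("PUT", url)
    print(json.dumps(body, indent=2))
```

The deployment name you choose here, not the model name, is what your app later uses to call the model.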

Develop apps with code

At some point, you'll likely want to develop apps with code. Here are some developer resources to help you get started with Azure OpenAI Service and Azure AI services:

- Azure OpenAI Service and Azure AI services SDKs

- Azure OpenAI Service and Azure AI services REST APIs

- Quickstart: Get started building a chat app using code

- Quickstart: Get started using Azure OpenAI Assistants

- Quickstart: Use real-time speech to text
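As a starting point before you choose an SDK, the following Python sketch calls the chat completions REST API of an existing deployment using only the standard library. It assumes key-based authentication through the `api-key` header, an endpoint of the form `https://<resource-name>.openai.azure.com/`, and the `2024-02-01` API version; check the REST API reference for the version you need. The environment variable names are hypothetical, and the request is sent only when all three are set.

```python
import json
import os
import urllib.request

# Assumption: a GA data-plane API version; confirm in the REST API reference.
API_VERSION = "2024-02-01"


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       messages: list[dict], max_tokens: int = 200) -> urllib.request.Request:
    """Build (but don't send) a chat completions request for one deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={API_VERSION}")
    body = json.dumps({"messages": messages, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def first_reply(response_json: dict) -> str:
    """Return the assistant text from the first choice of a chat completions response."""
    return response_json["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical environment variable names; set them to your own resource values.
    endpoint = os.environ.get("AZURE_OPENAI_ENDPOINT")
    key = os.environ.get("AZURE_OPENAI_API_KEY")
    deployment = os.environ.get("AZURE_OPENAI_DEPLOYMENT")
    if endpoint and key and deployment:
        req = build_chat_request(endpoint, deployment, key,
                                 [{"role": "user", "content": "Say hello."}])
        with urllib.request.urlopen(req) as resp:
            print(first_reply(json.load(resp)))
```

The URL targets the deployment name rather than the model name, so the same code works for both base model and fine-tuned model deployments.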