Hi MJ-1983,

Yes, you are right that SMB file shares can be mapped as network drives on local machines, which isn't directly possible with Blob storage. However, there are several tools and methods you can use to copy or mirror local data to Azure Blob storage:

AzCopy: AzCopy is a powerful command-line tool designed for copying data to and from Azure Blob storage. It supports high-performance data transfer and can be scheduled using scripts or task schedulers.

Azure Data Factory: Azure Data Factory is a cloud-based data integration service that allows you to create data pipelines for moving and transforming data. It can be used to copy data from on-premises to Azure Blob storage. ADF provides a graphical interface and supports various data sources and destinations.

Robocopy with Azure Blob Storage: While Robocopy itself doesn't directly support Azure Blob storage, you can use it in combination with tools like AzCopy. For example, you can use Robocopy to copy data to a local staging area and then use AzCopy to transfer the data to Azure Blob storage.

Resilio Connect: Resilio Connect is a real-time, cross-platform file synchronization tool that supports Azure Blob storage. It offers high-speed data transfer and synchronization capabilities, making it a robust alternative to AzCopy.

Rclone: Rclone is an open-source command-line program that supports various cloud storage providers, including Azure Blob storage. It offers features like data synchronization, copying, and mounting cloud storage as a local drive.
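As a rough illustration of the Robocopy staging approach mentioned above, here is a minimal Python sketch that mirrors a folder into a local staging area and then uploads it with AzCopy. The function names, the staging folder, and the use of Python as a wrapper are assumptions for illustration only; it also assumes both `robocopy` and `azcopy` are available on PATH.

```python
import subprocess


def build_stage_and_upload_commands(source_dir, staging_dir, container_url):
    """Return the Robocopy and AzCopy argument lists (hypothetical helper)."""
    # /MIR mirrors the source tree into the staging folder,
    # including deleting staged files that no longer exist in the source.
    robocopy_cmd = ["robocopy", source_dir, staging_dir, "/MIR"]
    # Same upload form as the AzCopy command shown in this answer.
    azcopy_cmd = ["azcopy", "copy", staging_dir, container_url, "--recursive"]
    return robocopy_cmd, azcopy_cmd


def robocopy_succeeded(exit_code):
    """Robocopy reports success with exit codes 0-7; 8 and above mean failures."""
    return 0 <= exit_code < 8


def stage_and_upload(source_dir, staging_dir, container_url):
    """Stage the data locally with Robocopy, then upload the staging folder."""
    robocopy_cmd, azcopy_cmd = build_stage_and_upload_commands(
        source_dir, staging_dir, container_url
    )
    staged = subprocess.run(robocopy_cmd, check=False)
    if not robocopy_succeeded(staged.returncode):
        raise RuntimeError(f"robocopy failed with exit code {staged.returncode}")
    # check=True raises CalledProcessError if AzCopy exits with a non-zero code.
    subprocess.run(azcopy_cmd, check=True)
```

Note that Robocopy treats several non-zero exit codes as success, so a plain "non-zero means error" check would report false failures.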

Here is an example of using AzCopy to copy data from a local directory to Azure Blob storage:

azcopy copy "C:\local\path" "https://<storage-account-name>.blob.core.windows.net/<container-name>?<SAS-token>" --recursive

For ongoing synchronization, you can set up a scheduled task or cron job to run the AzCopy command at regular intervals.
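One option for the scheduled run is `azcopy sync` rather than repeating `azcopy copy`, since it only transfers files that are new or changed. Below is a minimal Python sketch of a script that a scheduled task or cron job could call. The function names are hypothetical and Python is only one possible wrapper; the source path and container URL are whatever you would pass to AzCopy directly.

```python
import shutil
import subprocess


def build_sync_command(source_dir, container_url, delete_destination=False):
    """Return the argument list for a one-way `azcopy sync` (hypothetical helper)."""
    return [
        "azcopy",
        "sync",
        source_dir,
        container_url,
        "--recursive",
        # "true" also removes blobs that no longer exist locally;
        # "false" only adds and updates blobs.
        "--delete-destination=" + ("true" if delete_destination else "false"),
    ]


def run_sync(source_dir, container_url, delete_destination=False):
    """Run `azcopy sync` once and return its exit code (0 means success)."""
    if shutil.which("azcopy") is None:
        raise FileNotFoundError("azcopy was not found on PATH")
    command = build_sync_command(source_dir, container_url, delete_destination)
    return subprocess.run(command, check=False).returncode
```

A scheduled script would call `run_sync` with the local folder and the container URL including the SAS token. Leaving `delete_destination` off is the safer default if the container should keep files that were deleted locally.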

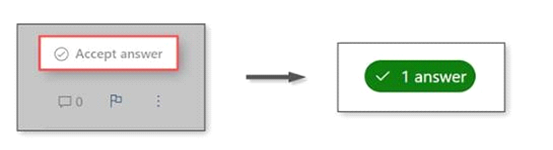

Please do not forget to "Accept the answer" and "up-vote" wherever the information provided helps you, as this can be beneficial to other community members.

If you have any other questions or are still running into more issues, let me know in the "comments" and I would be glad to assist you.