Hi @lukstudy

Greetings, and welcome to the Microsoft Q&A forum! Thank you for sharing your query.

To address the "quota exceeded" exception on a free trial subscription, you may need to adjust your cluster configuration. Here are some recommendations for configuring your compute cluster:

Worker Type - Choose an appropriate worker type based on your workload requirements. The specific type will depend on the nature of your tasks (e.g., memory-intensive vs. compute-intensive).

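Min Workers and Max Workers - When autoscaling is enabled, set a minimum number of workers so that your job has enough resources to start, and a maximum number so that the cluster cannot grow beyond your quota. Every node (the driver and each worker) consumes vCPUs from your subscription's core quota, so the driver's cores plus the maximum number of workers multiplied by the cores per worker must stay within that limit. For example, you might set a minimum of 2 workers and a maximum of 10 workers, but tailor these values to your workload and your quota.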

Driver Type - Similar to worker types, select a driver type that matches your workload requirements. The driver should have sufficient resources to handle the orchestration of your tasks.

Reference: Compute configuration reference
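As an illustration of the settings above, here is a minimal sketch that assembles an autoscaling cluster definition as a Python dict. It assumes the field names used by the Databricks Clusters API (`cluster_name`, `spark_version`, `node_type_id`, `driver_node_type_id`, `autoscale.min_workers`, `autoscale.max_workers`); the function name, cluster name, and runtime key are hypothetical placeholders, so please verify the fields against the Compute configuration reference before using them.

```python
from typing import Any, Dict


def build_autoscale_cluster_spec(
    cluster_name: str,
    spark_version: str,
    node_type_id: str,
    driver_node_type_id: str,
    min_workers: int,
    max_workers: int,
) -> Dict[str, Any]:
    """Hypothetical helper: build an autoscaling cluster definition.

    Field names follow the Databricks Clusters API (assumption: verify
    against the current Compute configuration reference).
    """
    # A standard (multi-node) cluster needs at least one Spark worker.
    if min_workers < 1:
        raise ValueError("a multi-node cluster needs at least one worker")
    # The autoscaling range must be non-empty.
    if max_workers < min_workers:
        raise ValueError("max_workers must be >= min_workers")
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,              # Databricks Runtime version key
        "node_type_id": node_type_id,                # VM size used for workers
        "driver_node_type_id": driver_node_type_id,  # VM size used for the driver
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
    }


spec = build_autoscale_cluster_spec(
    cluster_name="demo-autoscale",      # hypothetical name
    spark_version="13.3.x-scala2.12",   # hypothetical runtime key
    node_type_id="Standard_DS3_v2",
    driver_node_type_id="Standard_DS3_v2",
    min_workers=2,
    max_workers=10,
)
print(spec["autoscale"])
```

Validating the range locally simply catches an inverted min/max before anything is sent to the workspace.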

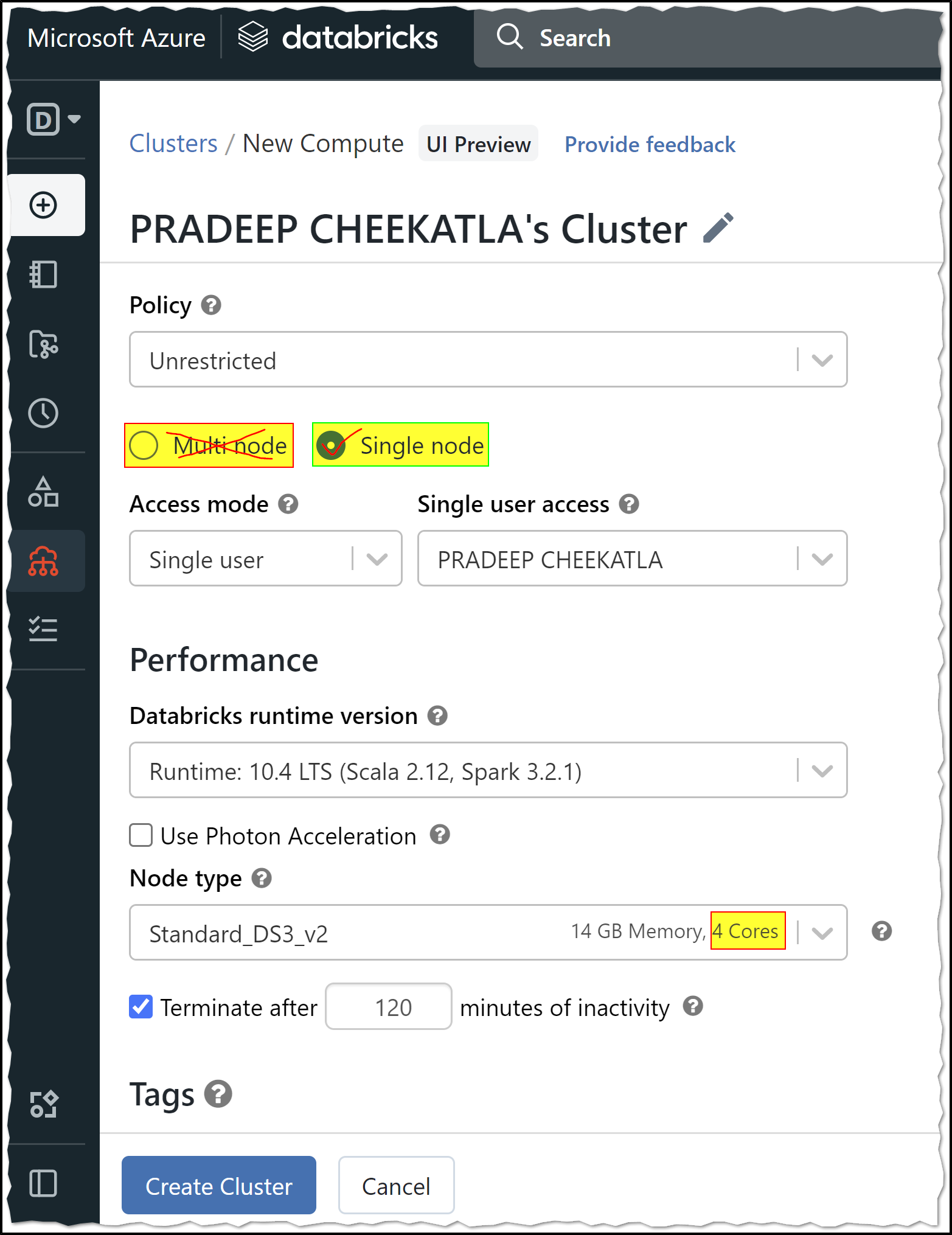

Note: Multi-node Azure Databricks clusters are not available under Azure free trial, Student, or Pass subscriptions.

Reason: Azure free trial, Student, and Pass subscriptions have a limit of 4 cores, so you cannot create a multi-node Databricks cluster with them, because a multi-node cluster (a driver plus at least one worker) needs more cores than that limit allows, typically 8 or more.
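The core arithmetic behind this limit can be sketched as follows. This assumes, for illustration only, that the driver and every worker use a 4-vCPU VM size (such as Standard_DS3_v2); the helper names are hypothetical.

```python
def cores_required(driver_cores: int, worker_cores: int, num_workers: int) -> int:
    """Total vCPUs a cluster holds: one driver plus num_workers workers."""
    return driver_cores + worker_cores * num_workers


def fits_quota(required: int, quota: int) -> bool:
    """True if the cluster's vCPU count stays within the subscription quota."""
    return required <= quota


QUOTA = 4     # core limit for free trial / Student / Pass subscriptions
VM_CORES = 4  # assumption: 4-vCPU VM size for both driver and workers

single_node = cores_required(VM_CORES, VM_CORES, 0)  # driver only
multi_node = cores_required(VM_CORES, VM_CORES, 1)   # driver + 1 worker

print(single_node, fits_quota(single_node, QUOTA))  # 4 True
print(multi_node, fits_quota(multi_node, QUOTA))    # 8 False
print(cores_required(VM_CORES, VM_CORES, 10))       # 44 (autoscaling up to 10 workers)
```

Even the smallest multi-node layout doubles the core count, which is why only a Single Node cluster fits within a 4-core quota.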

You need to upgrade to a Pay-As-You-Go subscription to create multi-node Azure Databricks clusters.

Note: Azure Student subscriptions aren't eligible for limit or quota increases. If you have a Student subscription, you can upgrade to a Pay-As-You-Go subscription.

You can use an Azure Student subscription to create a Single Node cluster, which has one driver node with 4 cores.

A Single Node cluster is a cluster consisting of a Spark driver and no Spark workers. Such clusters support Spark jobs and all Spark data sources, including Delta Lake. In contrast, Standard clusters require at least one Spark worker to run Spark jobs.
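For comparison, a Single Node cluster definition might look like the minimal sketch below. It assumes the Clusters API conventions described in the Single Node documentation: `num_workers` set to 0, the `spark.databricks.cluster.profile` Spark configuration set to `singleNode`, and the `ResourceClass: SingleNode` custom tag. The `spark.master` value here is an assumption, and the helper name, cluster name, and runtime key are hypothetical, so please check the current Single Node documentation.

```python
from typing import Any, Dict


def build_single_node_cluster_spec(
    cluster_name: str, spark_version: str, node_type_id: str
) -> Dict[str, Any]:
    """Hypothetical helper: build a Single Node cluster definition."""
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": 0,  # no Spark workers; the driver runs all Spark tasks
        "spark_conf": {
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",  # assumption: verify against the Single Node docs
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
    }


spec = build_single_node_cluster_spec(
    cluster_name="demo-single-node",   # hypothetical name
    spark_version="13.3.x-scala2.12",  # hypothetical runtime key
    node_type_id="Standard_DS3_v2",    # assumption: a 4-vCPU VM size
)
print(spec["num_workers"], spec["spark_conf"]["spark.databricks.cluster.profile"])
```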

Single Node clusters are helpful in the following situations:

- Running single node machine learning workloads that need Spark to load and save data

- Lightweight exploratory data analysis (EDA)

For more details, see Azure Databricks - Single Node clusters.

Hope this helps. Do let us know if you have any further queries.

If this answers your query, please click Accept Answer and select Yes for "Was this answer helpful?".