Tutorial: Stream and consume events to and from Real-Time Intelligence by using an Apache Kafka endpoint in an eventstream

In this tutorial, you learn how to use the Apache Kafka endpoint provided by a custom endpoint source in the enhanced capabilities of Microsoft Fabric event streams to stream events to Real-Time Intelligence. (A custom endpoint is called a custom app in the standard capabilities of Fabric event streams.) You also learn how to consume these streaming events by using the Apache Kafka endpoint from an eventstream's custom endpoint destination.

In this tutorial, you:

- Create an eventstream.

- Get the Kafka endpoint from a custom endpoint source.

- Send events with a Kafka application.

- Get the Kafka endpoint from a custom endpoint destination.

- Consume events with a Kafka application.

Prerequisites

- Get Contributor or higher permissions in the workspace where your eventstream is located.

- Get a Windows machine and install the following components:

  - Java Development Kit (JDK) 1.7+

  - A Maven binary archive (download and install)

  - Git

Create an eventstream in Microsoft Fabric

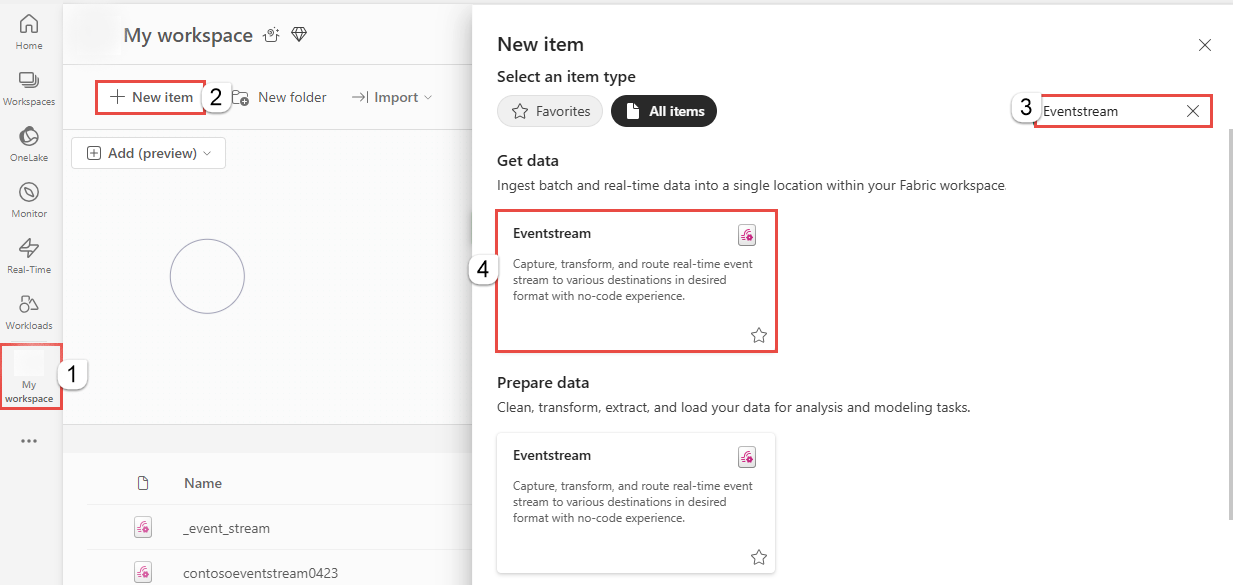

Navigate to the Fabric portal.

Select My workspace on the left navigation bar.

On the My workspace page, select + New item on the command bar.

On the New item page, search for Eventstream, and then select Eventstream.

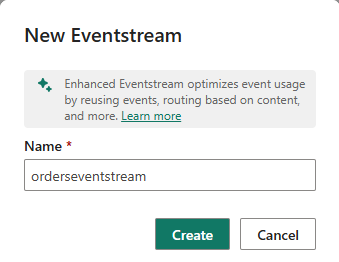

In the New Eventstream window, enter a name for the eventstream, and then select Create.

Creation of the new eventstream in your workspace can take a few seconds. After the eventstream is created, you're directed to the main editor, where you can start adding sources to the eventstream.

Retrieve the Kafka endpoint from an added custom endpoint source

To get a Kafka topic endpoint, add a custom endpoint source to your eventstream. The Kafka connection endpoint is then readily available and exposed within the custom endpoint source.

To add a custom endpoint source to your eventstream:

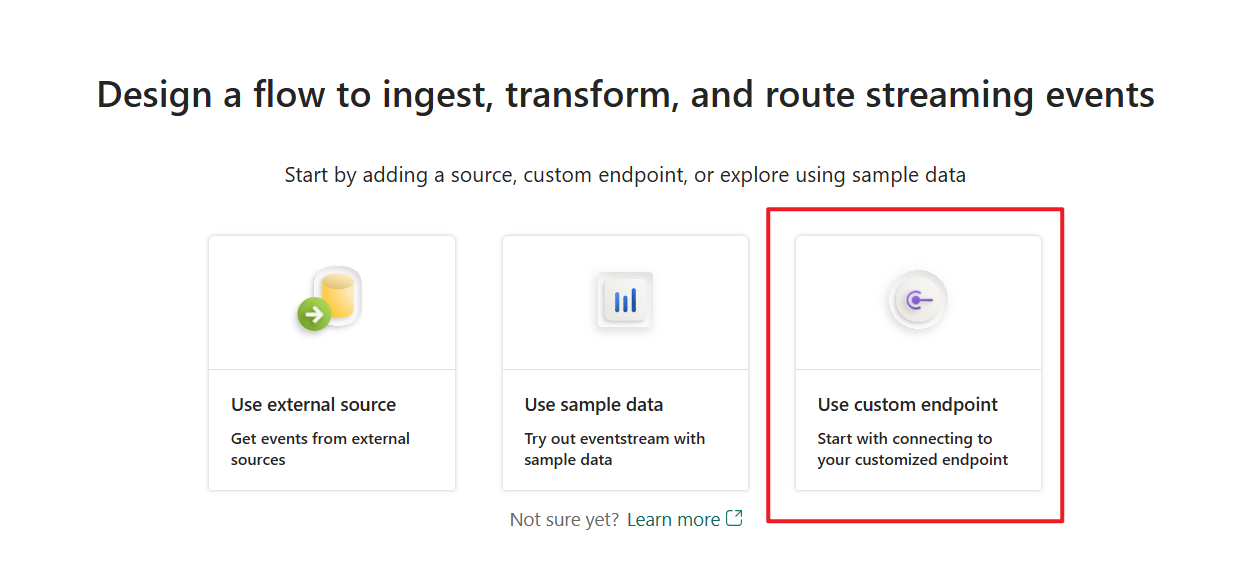

On your eventstream home page, select Use custom endpoint if it's an empty eventstream.

Or, on the ribbon, select Add source > Custom endpoint.

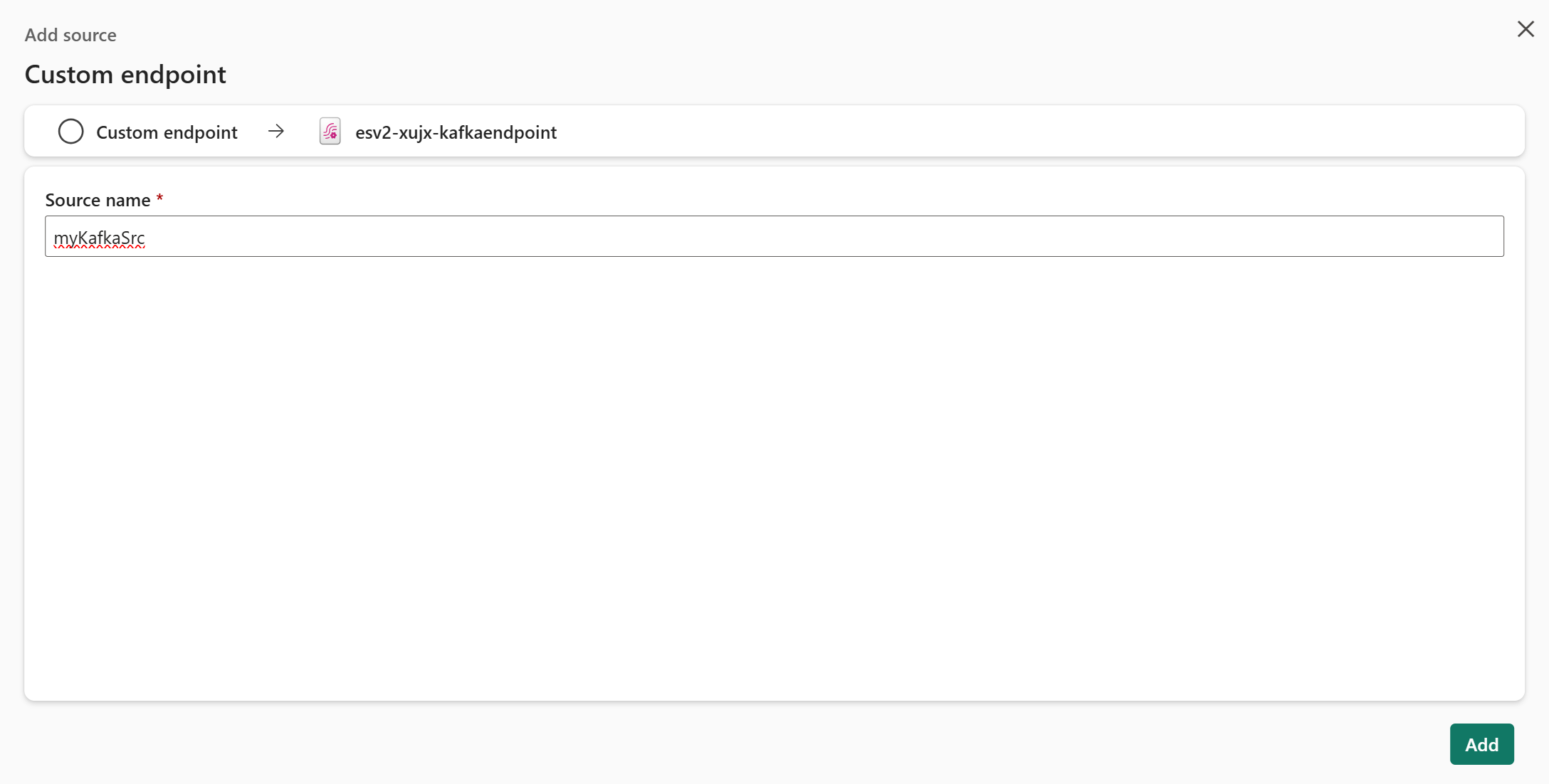

Enter a Source name value for the custom endpoint, and then select Add.

Check that the custom endpoint source appears on the eventstream's canvas in edit mode, and then select Publish.

After you successfully publish the eventstream, you can retrieve its details, including information about the Kafka endpoint. Select the custom endpoint source tile on the canvas. Then, in the bottom pane of the custom endpoint source node, select the Kafka tab.

On the SAS Key Authentication page, you can get the following important Kafka endpoint information:

    bootstrap.servers={YOUR.BOOTSTRAP.SERVER}
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.CONNECTION.STRING}";

{YOUR.BOOTSTRAP.SERVER} is the Bootstrap server value on the SAS Key Authentication page. {YOUR.CONNECTION.STRING} can be either the Connection string-primary key value or the Connection string-secondary key value. Choose one to use. For more information about the SAS Key Authentication and Sample code pages, see Kafka endpoint details.
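If your application builds its Kafka configuration in code instead of reading it from a file, the same four settings can be assembled as a java.util.Properties object. The following is a minimal sketch under stated assumptions: KafkaEndpointProperties and build are hypothetical names, the code uses only the Java standard library, and passing the result to an actual Kafka client (such as a KafkaProducer) isn't shown.

```java
import java.util.Properties;

// Hypothetical helper (not part of any SDK): builds the Kafka client settings
// listed on the SAS Key Authentication page of a custom endpoint.
final class KafkaEndpointProperties {

    private KafkaEndpointProperties() {
    }

    // bootstrapServer:  the Bootstrap server value.
    // connectionString: the Connection string-primary key or -secondary key value.
    static Properties build(String bootstrapServer, String connectionString) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServer);
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "PLAIN");
        // The user name is the literal string "$ConnectionString"; the password
        // is the whole connection string. The JAAS entry must end with ';'.
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"$ConnectionString\" "
                        + "password=\"" + connectionString + "\";");
        return props;
    }
}
```

Calling build with your Bootstrap server value and one of the connection strings yields the same key/value pairs as the configuration shown above. The sketch doesn't escape double quotes inside the connection string, on the assumption that connection strings don't contain any.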

Send events with a Kafka application

With the important Kafka information that you obtained from the preceding step, you can replace the connection configurations in your existing Kafka application. Then you can send the events to your eventstream.

Here's one sample application from the Azure Event Hubs for Kafka repository, written in Java, that uses the Kafka protocol. To use it to stream events to your eventstream, replace its Kafka endpoint information and run it as follows:

Clone the Azure Event Hubs for Kafka repository.

Go to azure-event-hubs-for-kafka/quickstart/java/producer.

Update the configuration details for the producer in src/main/resources/producer.config as follows:

    bootstrap.servers={YOUR.BOOTSTRAP.SERVER}
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.CONNECTION.STRING}";

Replace {YOUR.BOOTSTRAP.SERVER} with the Bootstrap server value. Replace {YOUR.CONNECTION.STRING} with either the Connection string-primary key value or the Connection string-secondary key value. Choose one to use.

Update the topic name in src/main/java/TestProducer.java as follows:

    private final static String TOPIC = "{YOUR.TOPIC.NAME}";

You can find the {YOUR.TOPIC.NAME} value on the SAS Key Authentication page under the Kafka tab.

Run the producer code to stream events into the eventstream:

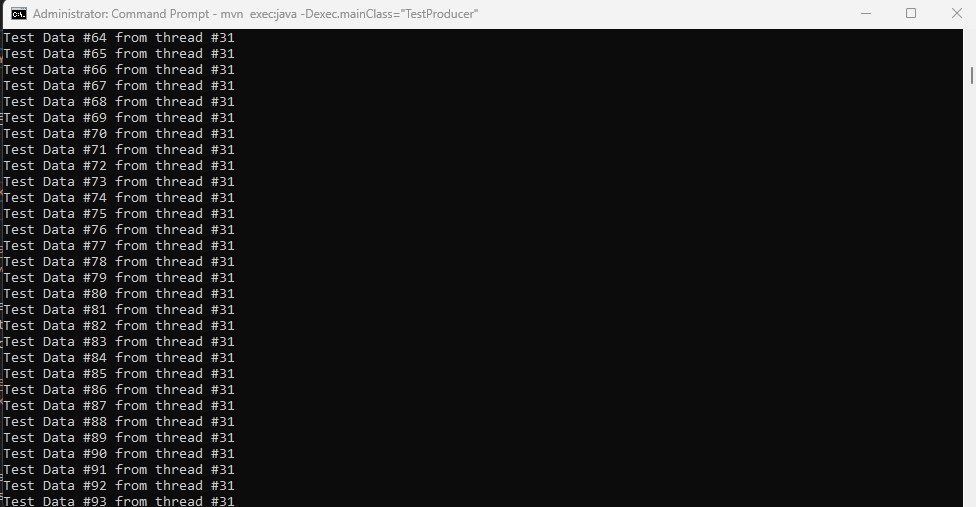

    mvn clean package
    mvn exec:java -Dexec.mainClass="TestProducer"
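If the producer can't connect or authenticate, one thing worth checking is whether every placeholder in producer.config was actually replaced. The following sketch is a hypothetical troubleshooting helper (PlaceholderCheck isn't part of the sample repository): it parses the configuration text with java.util.Properties and lists the keys whose values still contain "{YOUR.", the placeholder prefix used in this tutorial.

```java
import java.io.IOException;
import java.io.Reader;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

// Hypothetical troubleshooting helper: reports configuration keys whose values
// still contain a {YOUR.*} placeholder from this tutorial.
final class PlaceholderCheck {

    private static final String PLACEHOLDER_PREFIX = "{YOUR.";

    private PlaceholderCheck() {
    }

    static List<String> unreplacedKeys(Reader configText) {
        Properties props = new Properties();
        try {
            // Standard key=value parsing, the same format as producer.config.
            props.load(configText);
        } catch (IOException e) {
            throw new IllegalStateException("Could not read the configuration", e);
        }
        List<String> keys = new ArrayList<String>();
        for (String key : props.stringPropertyNames()) {
            if (props.getProperty(key).contains(PLACEHOLDER_PREFIX)) {
                keys.add(key);
            }
        }
        Collections.sort(keys); // deterministic order for readable output
        return keys;
    }
}
```

For example, pass a java.io.FileReader opened on src/main/resources/producer.config (ideally inside try-with-resources) to unreplacedKeys; an empty list means no tutorial placeholder remains. The same check works for consumer.config.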

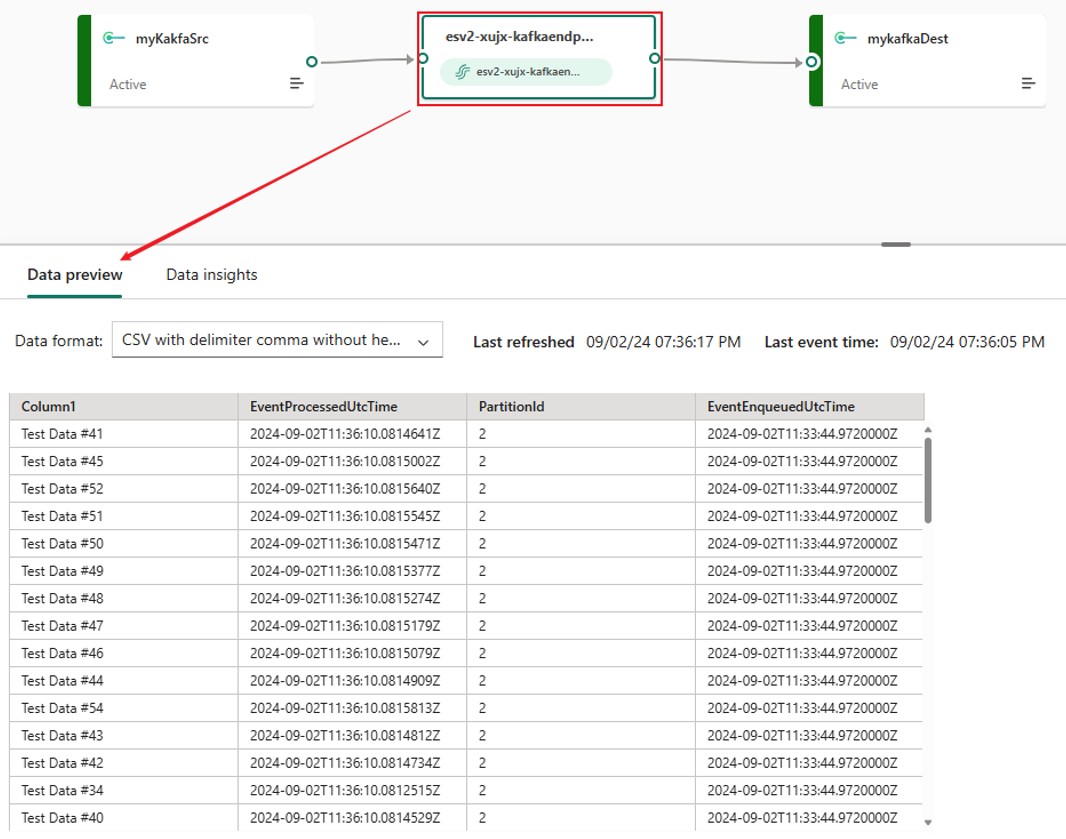

Preview the data that you sent with this Kafka application. Select the eventstream node, which is the middle node that displays your eventstream name.

For the data format, select CSV with delimiter comma without header. This choice matches the format in which the application streamed the event data.

Get the Kafka endpoint from an added custom endpoint destination

You can add a custom endpoint destination to get the Kafka connection endpoint details for consuming events from your eventstream. After you add the destination, you can get the information from the destination's Details pane in the live view.

From the Basic page, you can get the Consumer group value. You need this value to configure the Kafka consumer application later.

From the SAS Key Authentication page, you can get the important Kafka endpoint information:

    bootstrap.servers={YOUR.BOOTSTRAP.SERVER}
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.CONNECTION.STRING}";

{YOUR.BOOTSTRAP.SERVER} is the Bootstrap server value. {YOUR.CONNECTION.STRING} can be either the Connection string-primary key value or the Connection string-secondary key value. Choose one to use.
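Compared with the producer, the consumer needs one more setting: group.id, set to the Consumer group value from the Basic page. The following minimal sketch assumes you already have a Properties object holding the connection settings (for example, loaded from a configuration file); it copies them and adds the consumer group, leaving the original object unchanged. ConsumerSettings and withConsumerGroup are hypothetical names.

```java
import java.util.Properties;

// Hypothetical helper: derives consumer settings from the shared connection
// settings by adding the consumer group from the destination's Basic page.
final class ConsumerSettings {

    private ConsumerSettings() {
    }

    static Properties withConsumerGroup(Properties connection, String consumerGroup) {
        if (consumerGroup == null || consumerGroup.isEmpty()) {
            throw new IllegalArgumentException("consumerGroup must be the Consumer group value");
        }
        Properties consumer = new Properties();
        // Copy bootstrap.servers, security.protocol, sasl.mechanism, sasl.jaas.config, ...
        for (String key : connection.stringPropertyNames()) {
            consumer.setProperty(key, connection.getProperty(key));
        }
        consumer.setProperty("group.id", consumerGroup);
        return consumer;
    }
}
```

Copying rather than modifying the input lets a producer and a consumer in the same process share one connection Properties object without the producer picking up a group.id.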

Consume events with a Kafka application

Now you can use another application in the Azure Event Hubs for Kafka repository to consume the events from your eventstream. To use it, replace its Kafka endpoint details and run it as follows:

Clone the Azure Event Hubs for Kafka repository.

Go to azure-event-hubs-for-kafka/quickstart/java/consumer.

Update the configuration details for the consumer in src/main/resources/consumer.config as follows:

    bootstrap.servers={YOUR.BOOTSTRAP.SERVER}
    group.id={YOUR.EVENTHUBS.CONSUMER.GROUP}
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.CONNECTION.STRING}";

Replace {YOUR.BOOTSTRAP.SERVER} with the Bootstrap server value. Replace {YOUR.EVENTHUBS.CONSUMER.GROUP} with the Consumer group value from the Basic page on the Details pane for the custom endpoint destination. Replace {YOUR.CONNECTION.STRING} with either the Connection string-primary key value or the Connection string-secondary key value. Choose one to use.

Update the topic name in src/main/java/TestConsumer.java as follows:

    private final static String TOPIC = "{YOUR.TOPIC.NAME}";

You can find the {YOUR.TOPIC.NAME} value on the SAS Key Authentication page under the Kafka tab.

Run the consumer code to consume events from the eventstream:

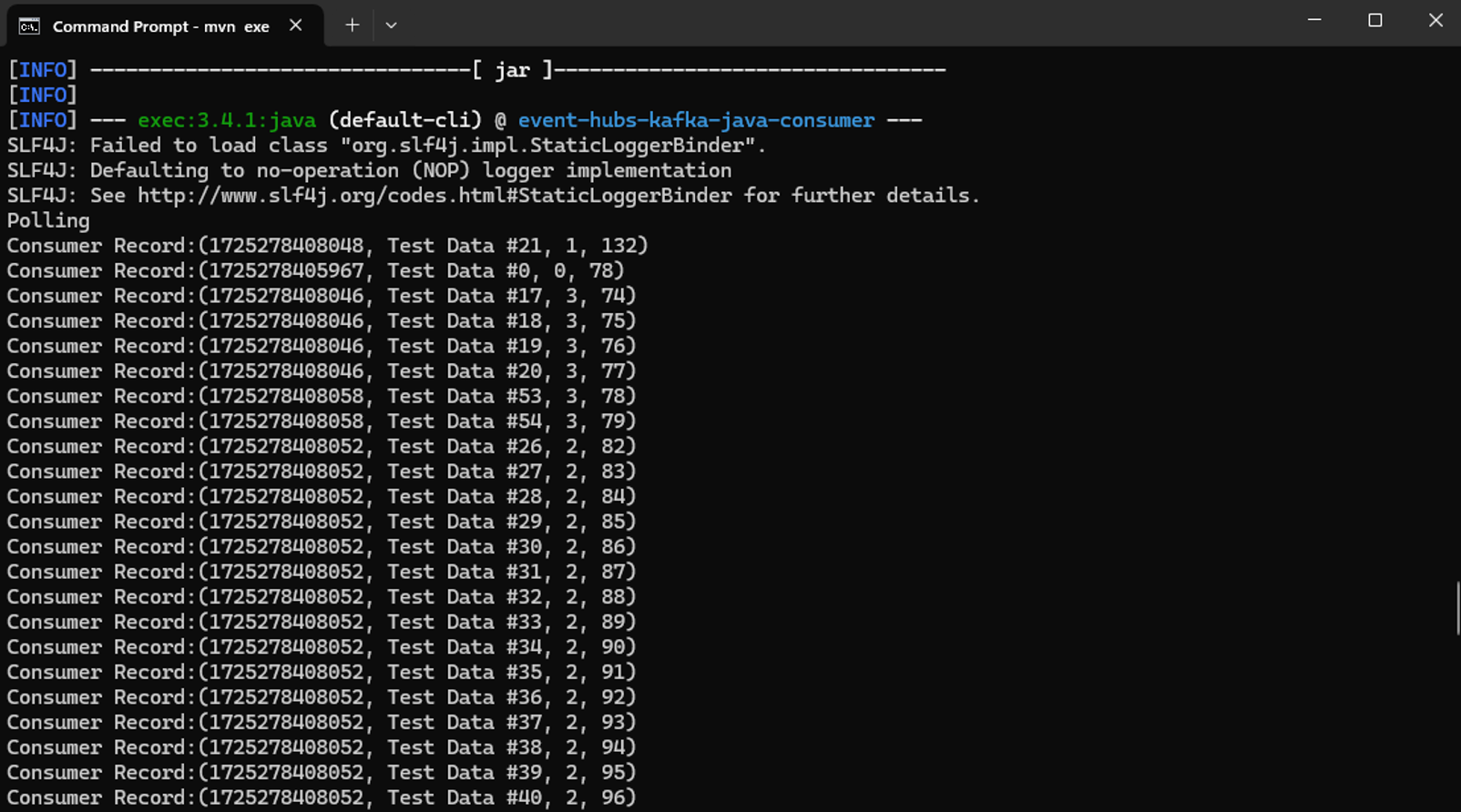

    mvn clean package
    mvn exec:java -Dexec.mainClass="TestConsumer"

If your eventstream has incoming events (for example, your previous producer application is still running), verify that the consumer is now receiving events from your eventstream topic.

By default, Kafka consumers read from the end of the stream rather than the beginning. A Kafka consumer doesn't read any events that are queued before you begin running the consumer. If you start your consumer but it isn't receiving any events, try running your producer again while your consumer is polling.
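This behavior comes from the Kafka consumer setting auto.offset.reset, whose default is latest. It applies only when the consumer group has no committed offset yet; a group that has already committed offsets resumes from them. If you want a new consumer group to start from the oldest events the endpoint still retains, you can set the value to earliest. The following is a minimal sketch; the OffsetReset enum is a hypothetical wrapper, and only the property name and its three values come from Kafka.

```java
import java.util.Properties;

// Hypothetical wrapper around Kafka's auto.offset.reset consumer setting, which
// only takes effect when the consumer group has no committed offset.
enum OffsetReset {
    // Start from the oldest event still retained by the endpoint.
    EARLIEST("earliest"),
    // Start from new events only (Kafka's default).
    LATEST("latest"),
    // Throw an exception to the consumer instead of choosing a starting point.
    NONE("none");

    private final String configValue;

    OffsetReset(String configValue) {
        this.configValue = configValue;
    }

    // Adds or overwrites auto.offset.reset in the consumer's settings.
    void applyTo(Properties consumerProps) {
        consumerProps.setProperty("auto.offset.reset", configValue);
    }
}
```

For example, call OffsetReset.EARLIEST.applyTo(props) before creating the consumer. If your consumer reads its settings from consumer.config instead, the equivalent is adding the line auto.offset.reset=earliest to that file.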

Conclusion

Congratulations. You learned how to use the Kafka endpoint exposed by your eventstream to stream events into it and consume events from it. If you already have an application that sends to or consumes from a Kafka topic, you can use the same application with your eventstream without changing its logic. Just update the connection configuration and the topic name.