Managing data quality for critical data elements (preview)

Critical data elements (CDEs) are logical groupings of important columns across the tables in your data sources. They let you focus your governance efforts where they'll have the most effect.

Microsoft Purview Data Quality offers an integrated way to measure the quality of CDEs, so organizations can confirm that these key data elements meet the required standards for accuracy, completeness, consistency, and integrity.

Organizations can define data quality rules and the quality thresholds that CDEs must meet. Thresholds are applied at the logical CDE level and cascade down to every individual column that makes up the CDE. The rules can cover various aspects of data quality, including validation, cleansing, standardization, and enrichment. For example, a rule might specify that customer addresses must follow a standard format, or that employee IDs must match a certain pattern.
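To illustrate how one CDE-level threshold applies to each underlying column, here's a minimal Python sketch. It is not Purview's implementation or API. It assumes a hypothetical "Employee ID" CDE made up of two columns in different tables, a hypothetical ID format (the letter E followed by six digits), and a hypothetical 90% threshold. Each column's pass rate is compared against the single threshold defined on the CDE.

```python
import re
from dataclasses import dataclass

# Hypothetical format for the employee ID rule: "E" followed by six digits.
EMPLOYEE_ID_PATTERN = re.compile(r"E\d{6}")


@dataclass
class CdeColumn:
    """A physical column mapped to a CDE (table and column names are hypothetical)."""
    table: str
    column: str
    values: list


def pattern_pass_rate(values, pattern):
    """Fraction of rows whose value is a string that fully matches the pattern.

    Nulls and non-string values count as failures.
    """
    if not values:
        return 0.0
    passed = sum(1 for v in values if isinstance(v, str) and pattern.fullmatch(v))
    return passed / len(values)


def evaluate_cde(columns, pattern, threshold):
    """Apply one CDE-level rule and threshold to every column in the CDE."""
    results = {}
    for col in columns:
        rate = pattern_pass_rate(col.values, pattern)
        results[f"{col.table}.{col.column}"] = (rate, rate >= threshold)
    return results


# A hypothetical "Employee ID" CDE that spans two tables.
employee_id_cde = [
    CdeColumn("hr.employees", "employee_id", ["E000123", "E000124", "E000125", "E000126"]),
    CdeColumn("payroll.payments", "emp_id", ["E000123", "123", "E000125", None]),
]

if __name__ == "__main__":
    for name, (rate, ok) in evaluate_cde(employee_id_cde, EMPLOYEE_ID_PATTERN, 0.9).items():
        print(f"{name}: {rate:.0%} {'pass' if ok else 'fail'}")
```

Because the threshold is defined once on the CDE rather than on each column, any column added to the CDE in this sketch is automatically held to the same check.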

After data quality rules are applied to a CDE, Microsoft Purview Data Quality evaluates the underlying physical columns to check whether they comply with those rules. With this integrated approach, organizations can proactively monitor and manage the quality of their CDEs so that the data stays reliable, accurate, and fit for purpose. This supports better decision-making and reduces the risks that come from data errors or inconsistencies.

Supported asset types

- Azure Data Lake Storage (ADLS Gen2)
  - File types: Delta and Parquet
- Azure SQL Database
- Fabric data estate in OneLake, including shortcut and mirroring data estates. Data quality scanning is supported only for Lakehouse Delta tables and Parquet files.
  - Mirroring data estate: Cosmos DB, Snowflake, Azure SQL
  - Shortcut data estate: AWS S3, GCS, ADLS Gen2, and Dataverse
- Azure Synapse serverless and data warehouse
- Azure Databricks Unity Catalog
- Snowflake
- Google BigQuery (Private Preview)

Available data quality rules for CDEs

You can configure the following data quality rules for CDEs. For more information about each rule, see the general data quality rules article.

| Rule | Definition |
|---|---|
| Unique values | Confirms that the values in a column are unique. |
| Data type match | Confirms that the values in a column match their data type requirements. |
| Empty/blank fields | Looks for blank and empty fields in a column where there should be values. |
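Purview evaluates these rules for you during a scan. To illustrate what each rule checks, here's a minimal Python sketch that scores a single column as the fraction of rows that pass. The scoring approach (passing rows divided by total rows), the sample column, and the use of Python's `int` as the expected type are assumptions for illustration; Purview's actual rule logic and scoring may differ.

```python
from collections import Counter


def unique_values_score(values):
    """Unique values: fraction of rows whose value occurs exactly once in the column."""
    if not values:
        return 0.0
    counts = Counter(values)
    return sum(1 for v in values if counts[v] == 1) / len(values)


def data_type_match_score(values, expected_type):
    """Data type match: fraction of rows that expected_type (e.g. int) converts without error."""
    if not values:
        return 0.0

    def converts(v):
        try:
            expected_type(v)
            return True
        except (TypeError, ValueError):
            return False

    return sum(1 for v in values if converts(v)) / len(values)


def non_blank_score(values):
    """Empty/blank fields: fraction of rows that are neither null nor empty/whitespace-only."""
    if not values:
        return 0.0
    filled = sum(
        1 for v in values if v is not None and not (isinstance(v, str) and not v.strip())
    )
    return filled / len(values)


if __name__ == "__main__":
    # A hypothetical column of customer numbers stored as text.
    column = ["101", "102", "102", "abc", "", None]
    print(f"Unique values:      {unique_values_score(column):.0%}")
    print(f"Data type match:    {data_type_match_score(column, int):.0%}")
    print(f"Empty/blank fields: {non_blank_score(column):.0%}")
```

In this sketch, the duplicated "102" lowers the uniqueness score, "abc", the empty string, and the null fail the integer check, and the empty string and null fail the blank-field check.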

Configure data quality for CDEs

If you haven't already, create a critical data element (CDE) and add columns to it.

Open your CDE:

- Open Microsoft Purview Unified Catalog, select the Data management drop-down, and then select Governance domains.

- Select a governance domain from the list.

- Select the Critical data elements tile.

- Select a critical data element from the list.

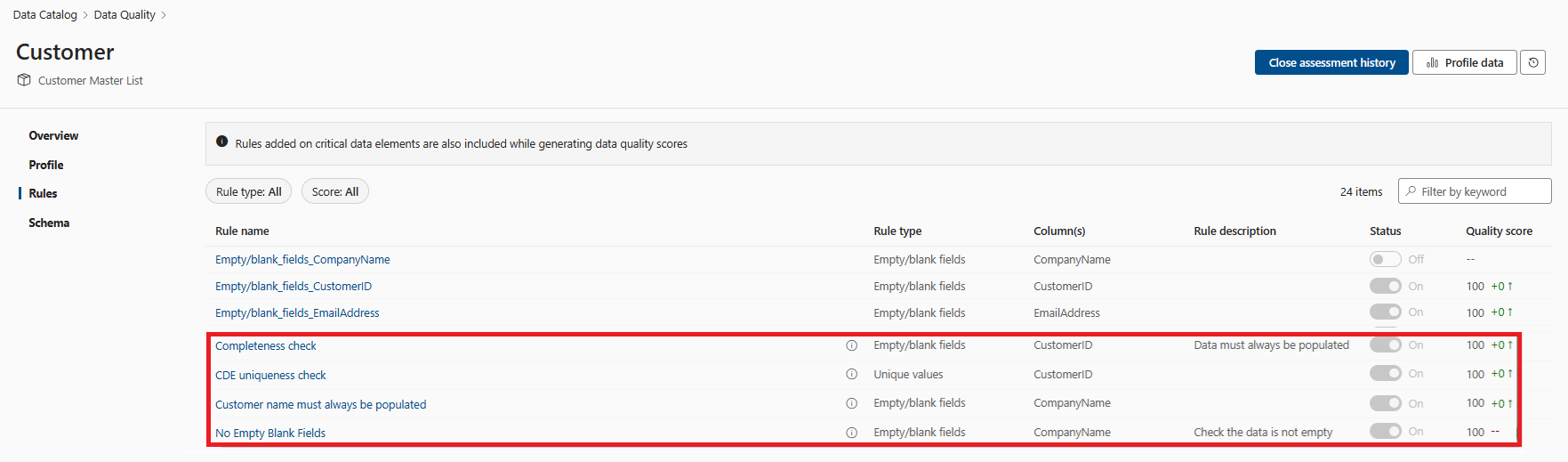

Select the Data quality tab in your critical data element.

Add a new rule to the critical data element by selecting New rule.

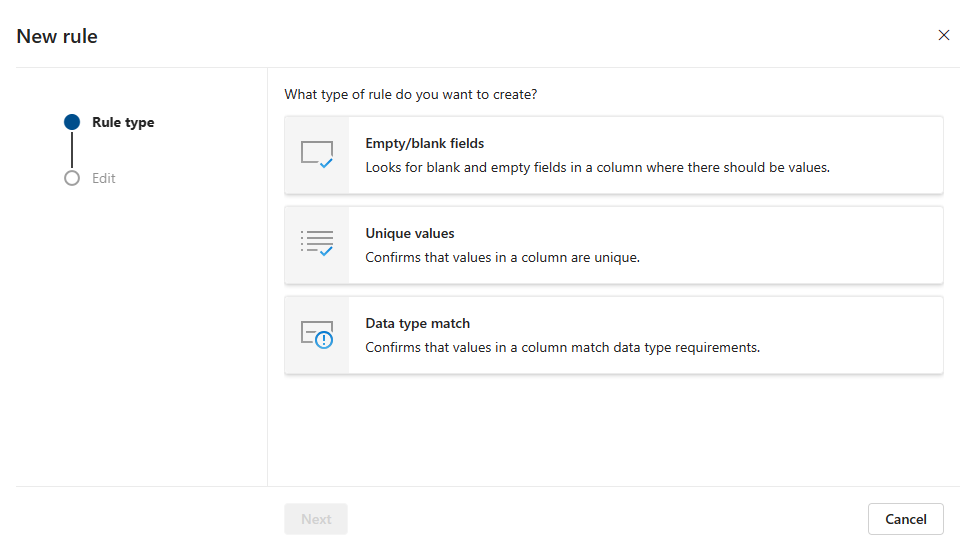

Select the data quality rule type you want to use and select Next.

Provide the details necessary for your rule type.

Choose whether to turn the rule on or off.

Select Create.

Execute data quality rules for CDEs

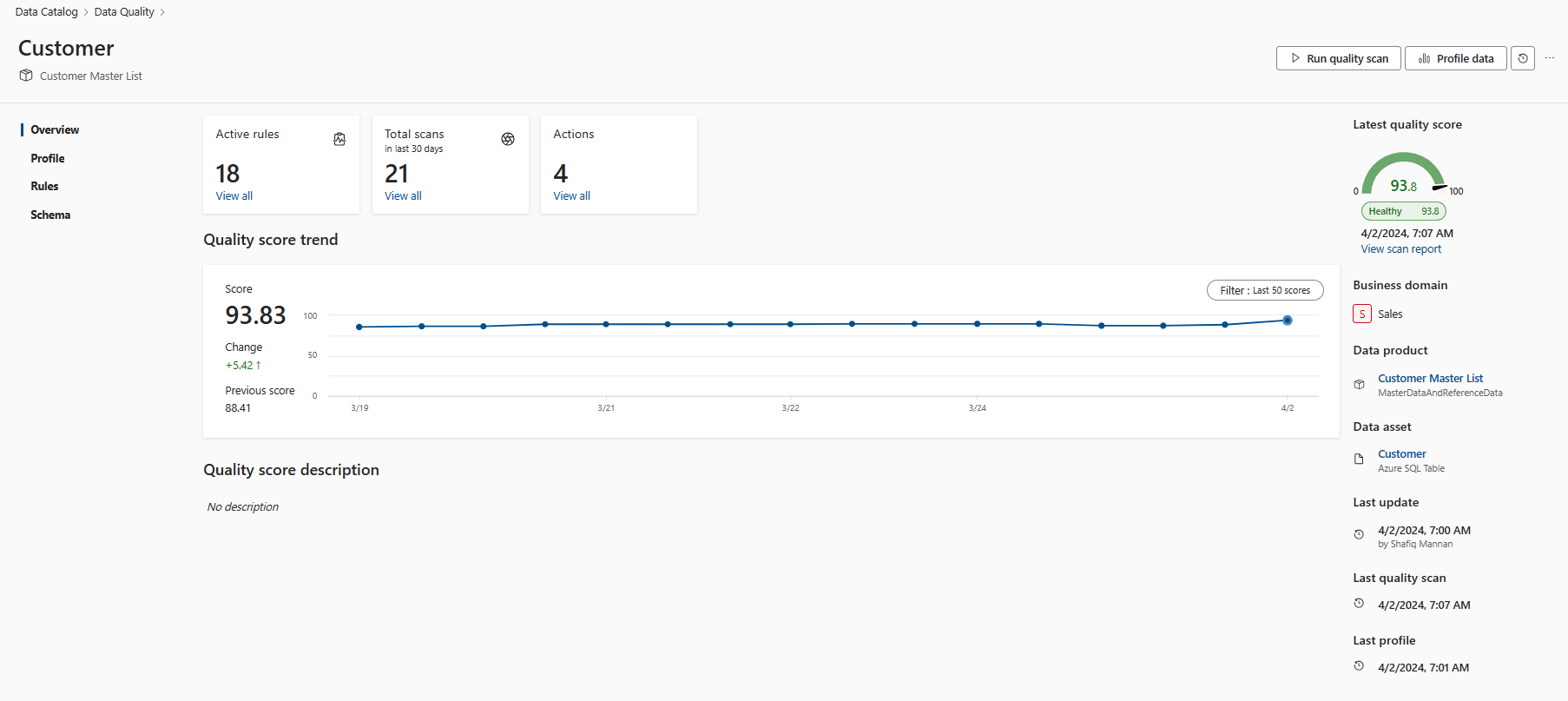

When you run a data quality scan on a supported data asset that has a column associated with a CDE, the data quality rules configured for that CDE produce a score.

Schedule or run a data quality scan for your data assets associated with your CDE.

Monitor the data quality scan job while it runs to make sure it completes without errors or interruptions. Use the history snapshot to check that the applied data quality rules ran successfully.

Review the scan results to assess the quality of the data assets associated with the CDE, based on the applied rules.

Analyze the findings from the scan to identify issues, anomalies, or areas for improvement in the data assets associated with the CDE. Fixes could include cleansing, standardizing, or enriching the data to improve its quality.