Getting from Dataflow Generation 1 to Dataflow Generation 2

Dataflow Gen2 is the new generation of dataflows. It resides alongside Power BI dataflows (Gen1) and brings new features and improved experiences. The following sections compare Dataflow Gen1 and Dataflow Gen2.

Feature overview

| Feature | Dataflow Gen2 | Dataflow Gen1 |
|---|---|---|
| Author dataflows with Power Query | ✓ | ✓ |
| Shorter authoring flow | ✓ | |
| AutoSave and background publishing | ✓ | |
| Data destinations | ✓ | |
| Improved monitoring and refresh history | ✓ | |
| Integration with data pipelines | ✓ | |
| High-scale compute | ✓ | |
| Get Data via Dataflows connector | ✓ | ✓ |
| Direct Query via Dataflows connector | ✓ | |
| Incremental refresh | ✓ | ✓ |
| AI Insights support | ✓ | |

Shorter authoring experience

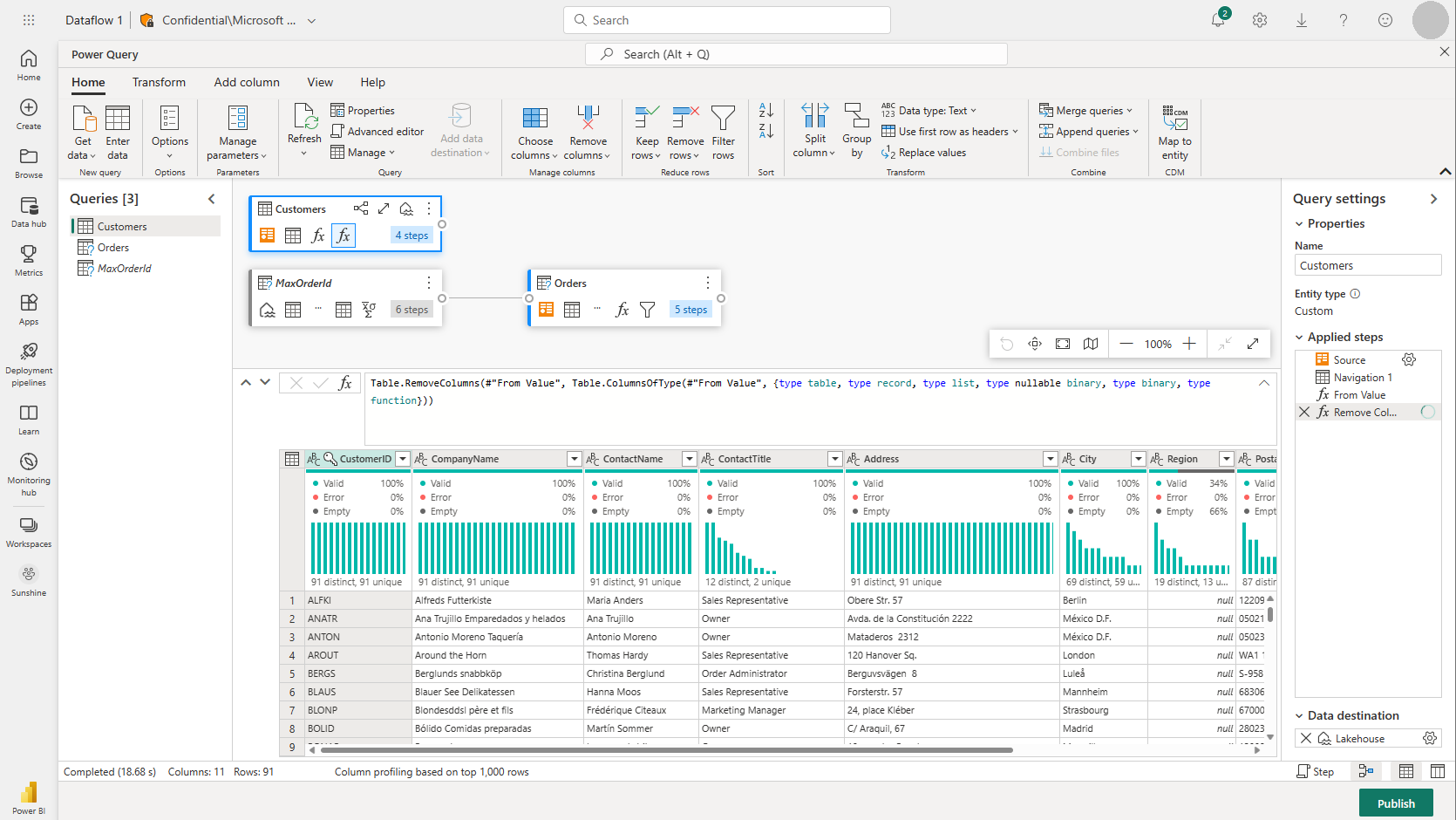

Working with Dataflow Gen2 feels like coming home. We kept the full Power Query experience you're used to in Power BI dataflows. When you enter the experience, you're guided step by step through getting data into your dataflow. We also shortened the authoring experience to reduce the number of steps required to create a dataflow, and added a few new features to make your experience even better.

New dataflow save experience

With Dataflow Gen2, we changed how saving a dataflow works. Any changes you make to a dataflow are autosaved to the cloud, so you can exit the authoring experience at any point and continue from where you left off later. Once you're done authoring, you publish your changes, and those changes are used when the dataflow refreshes. Publishing saves your changes and runs the required validations in the background, so you don't have to wait for validation to finish before your dataflow is saved.

To learn more about the new save experience, go to Save a draft of your dataflow.
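To make the split between the autosaved draft and the published version concrete, here is a minimal conceptual sketch in Python. It is not a Fabric or Power Query API: the class `DataflowSaveModel`, its methods, and the "empty query is an error" validation rule are hypothetical. It only assumes what the paragraph above states: edits land in a draft, publishing makes the changes the version that refreshes use, and validation runs in the background without blocking the save. How the real service handles a failed validation is not modeled.

```python
from concurrent.futures import Future, ThreadPoolExecutor


class DataflowSaveModel:
    """Hypothetical model of the Gen2 save flow; not a Fabric or Power Query API."""

    def __init__(self) -> None:
        self.draft: dict[str, str] = {}      # query name -> query text (autosaved)
        self.published: dict[str, str] = {}  # version that refreshes read
        self._validator = ThreadPoolExecutor(max_workers=1)

    def edit(self, query: str, text: str) -> None:
        # Each change is written to the draft at once; there is no separate
        # save step, so leaving the editor loses nothing.
        self.draft[query] = text

    def publish(self) -> Future:
        # Publishing snapshots the draft as the published version and queues
        # validation on a background thread, returning without waiting for it.
        self.published = dict(self.draft)
        return self._validator.submit(self._validate, self.published)

    @staticmethod
    def _validate(queries: dict[str, str]) -> list[str]:
        # Placeholder rule for illustration only: an empty query body is an error.
        return sorted(name for name, text in queries.items() if not text.strip())

    def refresh_input(self) -> dict[str, str]:
        # A refresh reads the published version, never the in-progress draft.
        return self.published


flow = DataflowSaveModel()
flow.edit("Sales", "let Source = 1 in Source")
flow.edit("Empty", "")
pending = flow.publish()                        # returns immediately
flow.edit("Sales", "let Source = 2 in Source")  # changes the draft only
print(flow.refresh_input()["Sales"])            # let Source = 1 in Source
print(pending.result())                         # ['Empty']
```

The single-worker executor keeps validations in publish order, which is one simple way to express "validate in the background" without blocking the caller.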

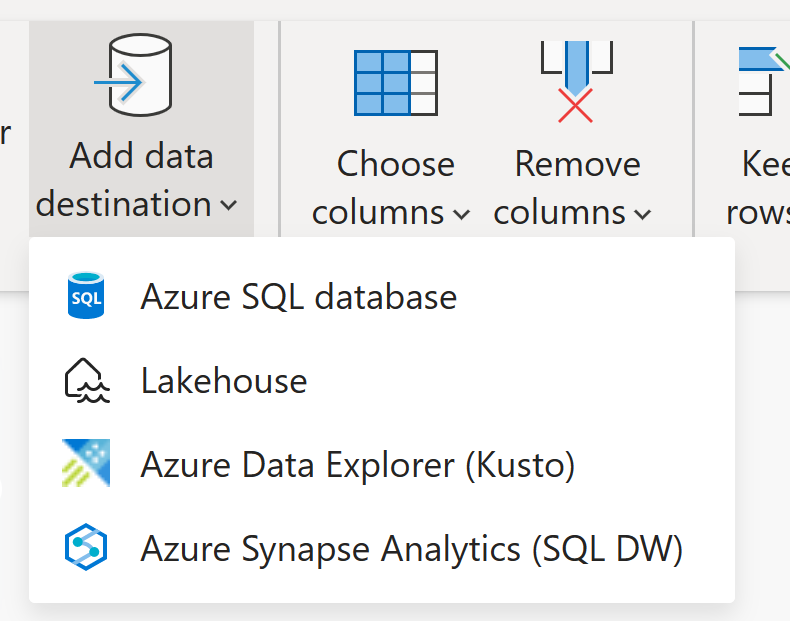

Data destinations

Similar to Dataflow Gen1, Dataflow Gen2 allows you to transform your data into the dataflow's internal staging storage, where it can be accessed using the Dataflows connector. Dataflow Gen2 also lets you specify a data destination for your data, which separates your ETL logic from your destination storage. For example, you can use a dataflow to load data into a lakehouse and then use a notebook to analyze the data, or use a dataflow to load data into an Azure SQL database and then use a data pipeline to load that data into a data warehouse.

In Dataflow Gen2, we added support for the following destinations and many more are coming soon:

- Fabric Lakehouse

- Azure Data Explorer (Kusto)

- Azure Synapse Analytics (SQL DW)

- Azure SQL Database

Note

To load your data to the Fabric Warehouse, you can use the Azure Synapse Analytics (SQL DW) connector by retrieving the SQL connection string. More information: Connectivity to data warehousing in Microsoft Fabric
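The idea of separating ETL logic from destination storage can be sketched as one transformation function written to interchangeable destinations. This is a conceptual Python model, not how Dataflow Gen2 is implemented: `transform`, `Destination`, `InMemoryTable`, and `SqliteTable` are hypothetical names, an in-memory list stands in for a lakehouse table, and SQLite stands in for Azure SQL Database. Both destinations here replace their contents on each write, which is an assumption of the sketch.

```python
import sqlite3
from typing import Iterable, Protocol

Row = tuple[str, float]


def transform(raw: Iterable[dict]) -> list[Row]:
    # ETL logic only: drop rows without an amount, tidy names, cast amounts.
    return [(r["name"].strip().title(), float(r["amount"]))
            for r in raw if r.get("amount") is not None]


class Destination(Protocol):
    def write(self, rows: list[Row]) -> None: ...


class InMemoryTable:
    """Hypothetical stand-in for a lakehouse table."""

    def __init__(self) -> None:
        self.rows: list[Row] = []

    def write(self, rows: list[Row]) -> None:
        self.rows = list(rows)  # replace existing contents


class SqliteTable:
    """Hypothetical stand-in for a SQL destination; SQLite substitutes for Azure SQL."""

    def __init__(self, conn: sqlite3.Connection, table: str) -> None:
        # The table name is trusted in this sketch, so f-string SQL is acceptable.
        self.conn, self.table = conn, table
        conn.execute(f"CREATE TABLE IF NOT EXISTS {table} (name TEXT, amount REAL)")

    def write(self, rows: list[Row]) -> None:
        with self.conn:  # one transaction that replaces existing contents
            self.conn.execute(f"DELETE FROM {self.table}")
            self.conn.executemany(f"INSERT INTO {self.table} VALUES (?, ?)", rows)


def run(raw: list[dict], destination: Destination) -> None:
    # The same transformation runs regardless of where its output lands.
    destination.write(transform(raw))


raw = [{"name": " contoso ", "amount": "10.5"},
       {"name": "fabrikam", "amount": None},
       {"name": "adventure works", "amount": 3.25}]
lake = InMemoryTable()
db = SqliteTable(sqlite3.connect(":memory:"), "sales")
run(raw, lake)
run(raw, db)
print(lake.rows)
print(db.conn.execute("SELECT name, amount FROM sales ORDER BY name").fetchall())
```

Because `transform` never references a destination, adding a new target only means adding another class with a `write` method.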

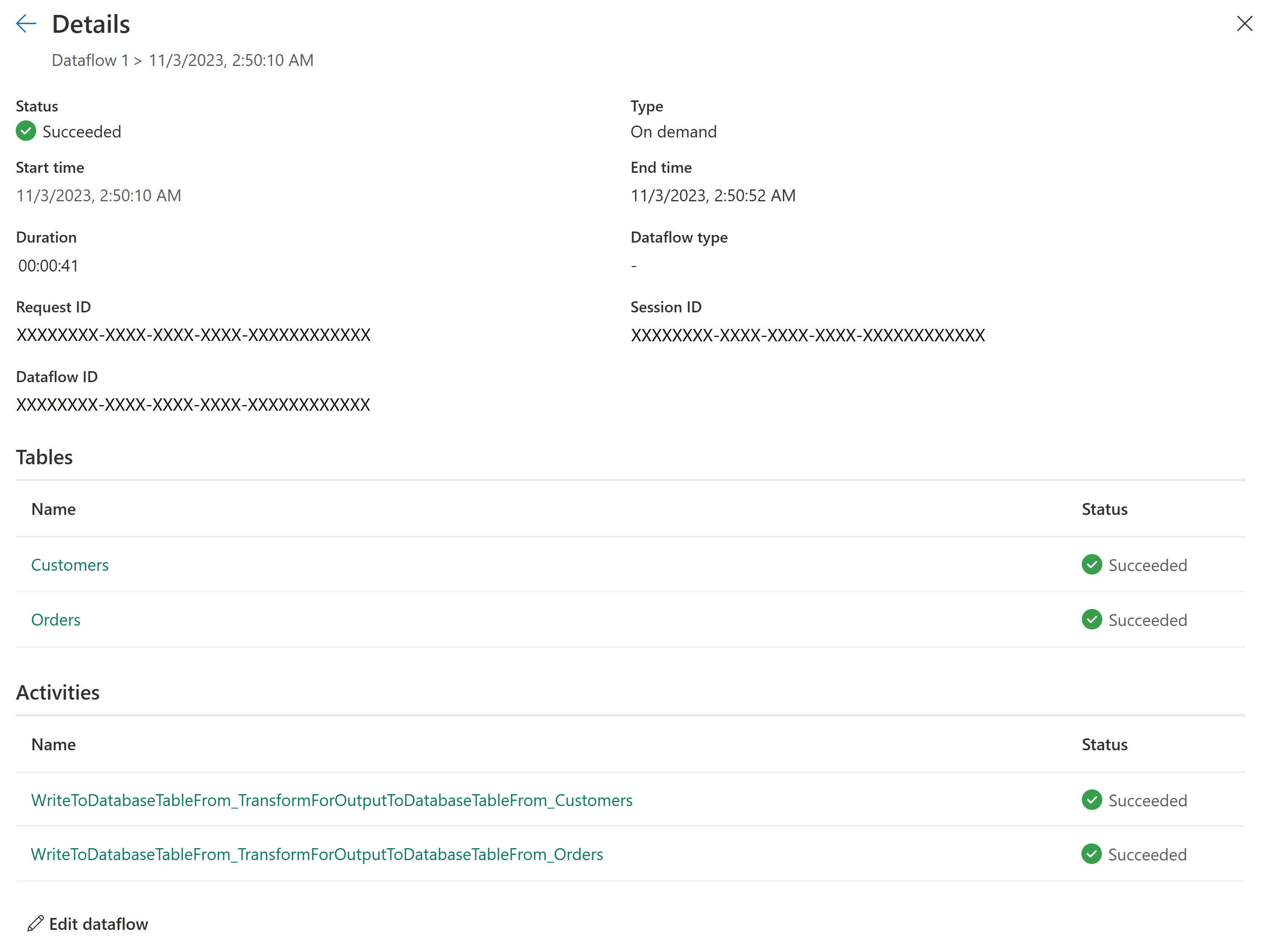

New refresh history and monitoring

With Dataflow Gen2, we introduce a new way for you to monitor your dataflow refreshes. We integrate support for Monitoring Hub and give our Refresh History experience a major upgrade.

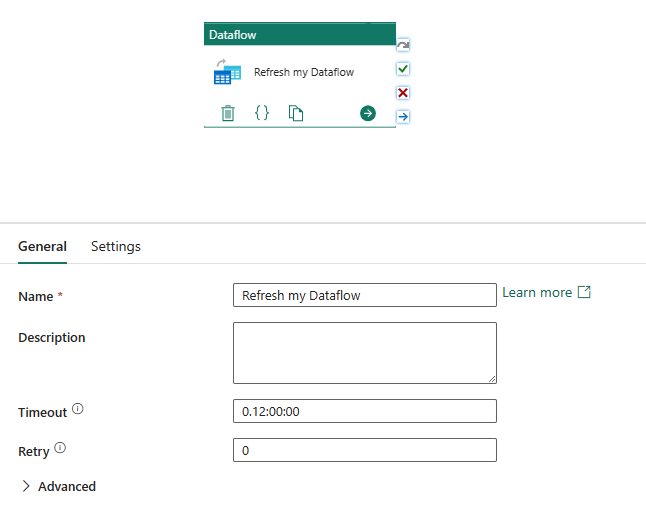

Integration with data pipelines

Data pipelines allow you to group activities that together perform a task. An activity is a unit of work that can be executed. For example, an activity can copy data from one location to another, run a SQL query, execute a stored procedure, or run a Python notebook.

A pipeline can contain one or more activities that are connected by dependencies. For example, you can use a pipeline to ingest and clean log data from an Azure blob, and then start a Dataflow Gen2 to analyze that log data. You can also use a pipeline to copy data from an Azure blob to an Azure SQL database, and then run a stored procedure on the database.
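"Activities connected by dependencies" form a directed acyclic graph, and a valid run order is a topological order of that graph. The Python sketch below illustrates that idea with the standard library's `graphlib` (Python 3.9+). It is not the Fabric pipeline engine: `run_pipeline` and the activity names are hypothetical, activities run one at a time, and every dependency is treated as "run after the predecessor has run", ignoring any other dependency conditions a real pipeline may offer.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(activities: dict[str, Callable[[], None]],
                 depends_on: dict[str, set[str]]) -> list[str]:
    """Run every activity after all activities it depends on; return the order.

    Raises ValueError (graphlib.CycleError is a subclass) if the dependencies
    form a cycle or name an activity that does not exist.
    """
    graph = {name: set(depends_on.get(name, set())) for name in activities}
    unknown = set().union(*graph.values()) - set(activities)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        activities[name]()  # sequential here; independent activities could run in parallel
    return order


# Hypothetical activities mirroring the example: ingest, clean, then a Dataflow Gen2.
ran: list[str] = []
activities = {name: (lambda name=name: ran.append(name))
              for name in ["refresh_dataflow_gen2", "clean_logs", "ingest_from_blob"]}
depends_on = {"clean_logs": {"ingest_from_blob"},
              "refresh_dataflow_gen2": {"clean_logs"}}
print(run_pipeline(activities, depends_on))
# ['ingest_from_blob', 'clean_logs', 'refresh_dataflow_gen2']
```

Rejecting cycles up front matters because a cyclic dependency graph has no order in which every activity runs after its prerequisites.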

Save as draft

With Dataflow Gen2, we introduce a worry-free experience by removing the need to publish in order to save your changes. With save as draft functionality, we store a draft version of your dataflow every time you make a change. Did you lose internet connectivity? Did you accidentally close your browser? No worries; we've got your back. Once you return to your dataflow, your recent changes are still there and you can continue where you left off. This happens seamlessly and doesn't require any input from you, so you can work on your dataflow without worrying about losing your changes or having to fix all query errors before you can save. To learn more about this feature, go to Save a draft of your dataflow.

High scale compute

Similar to Dataflow Gen1, Dataflow Gen2 features an enhanced compute engine that improves performance both for transformations of referenced queries and for get data scenarios. To achieve this, Dataflow Gen2 creates Lakehouse and Warehouse items in your workspace and uses them to store and access data, improving performance for all your dataflows.

Licensing Dataflow Gen1 vs Gen2

Dataflow Gen2 requires a Fabric capacity or a Fabric trial capacity. To better understand how licensing works for dataflows, read Microsoft Fabric concepts and licenses.

Try out Dataflow Gen2 by reusing your queries from Dataflow Gen1

You probably have many Dataflow Gen1 queries and are wondering how to try them out in Dataflow Gen2. You have a few options for recreating your Gen1 dataflows in Dataflow Gen2.

Export your Dataflow Gen1 queries and import them into Dataflow Gen2

You can now export queries from both the Dataflow Gen1 and Gen2 authoring experiences and save them to a PQT file that you can then import into Dataflow Gen2. For more information, go to Use the export template feature.

Copy and paste in Power Query

If you have a dataflow in Power BI or Power Apps, you can copy your queries and paste them in the editor of your Dataflow Gen2. This functionality allows you to migrate your dataflow to Gen2 without having to rewrite your queries. For more information, go to Copy and paste existing Dataflow Gen1 queries.