Use embedding models from Azure AI Foundry model catalog for integrated vectorization

Important

This feature is in public preview under Supplemental Terms of Use. The 2024-05-01-Preview REST API supports this feature.

In this article, learn how to access the embedding models in the Azure AI Foundry model catalog for vector conversions during indexing and in queries in Azure AI Search.

The workflow includes model deployment steps. The model catalog includes embedding models from Microsoft and other companies. Deploying a model is billable per the billing structure of each provider.

After the model is deployed, you can use it for integrated vectorization during indexing, or with the Azure AI Foundry vectorizer for queries.

Tip

Use the Import and vectorize data wizard to generate a skillset that includes an AML skill for deployed embedding models on Azure AI Foundry. AML skill definition for inputs, outputs, and mappings are generated by the wizard, which gives you an easy way to test a model before writing any code.

Prerequisites

Azure AI Search, any region and tier.

Azure AI Foundry and an Azure AI Foundry project.

Supported embedding models

Integrated vectorization and the Import and vectorize data wizard supports the following embedding models in the model catalog:

For text embeddings:

- Cohere-embed-v3-english

- Cohere-embed-v3-multilingual

For image embeddings:

- Facebook-DinoV2-Image-Embeddings-ViT-Base

- Facebook-DinoV2-Image-Embeddings-ViT-Giant

Deploy an embedding model from the Azure AI Foundry model catalog

Open the Azure AI Foundry model catalog. Create a project if you don't have one already.

Apply a filter to show just the embedding models. Under Inference tasks, select Embeddings:

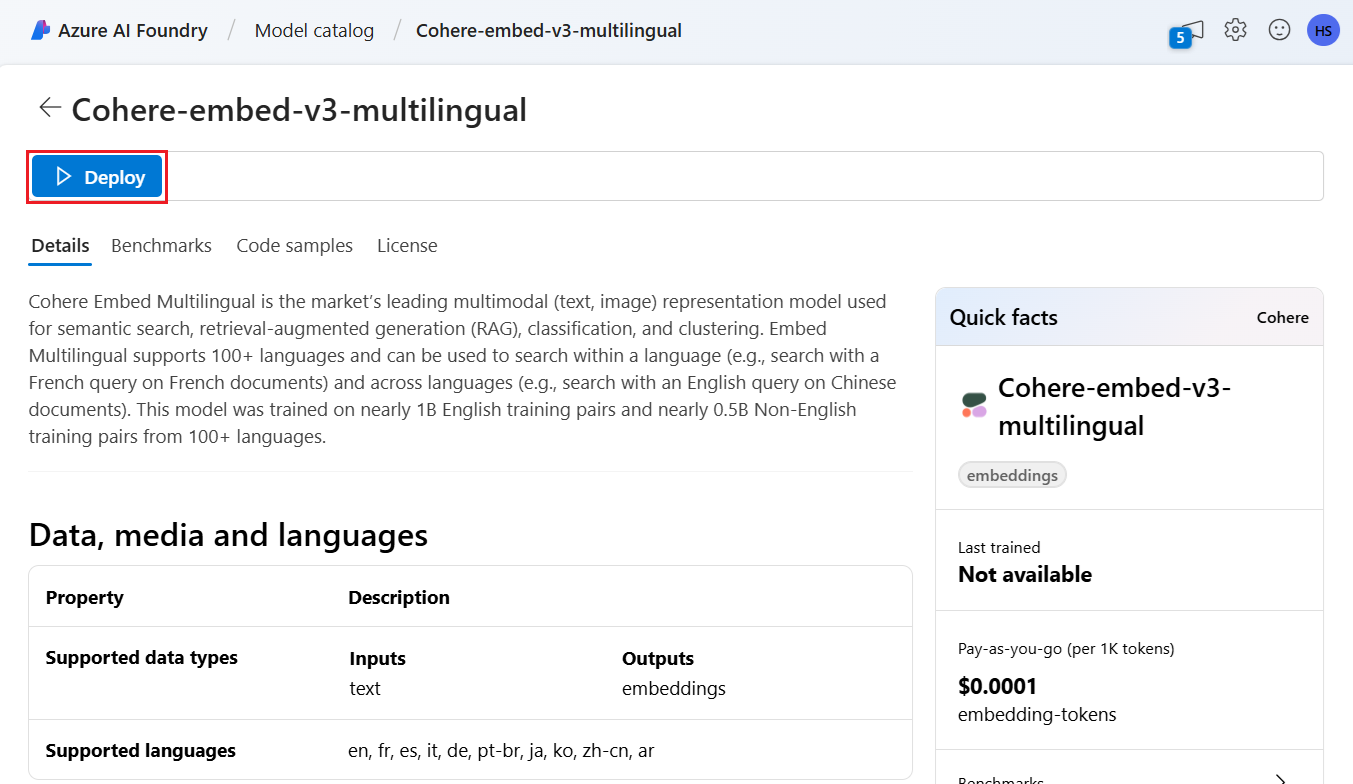

Select a supported model, then select Deploy.

Accept the defaults or modify as needed, and then select Deploy. The deployment details vary depending on which model you select.

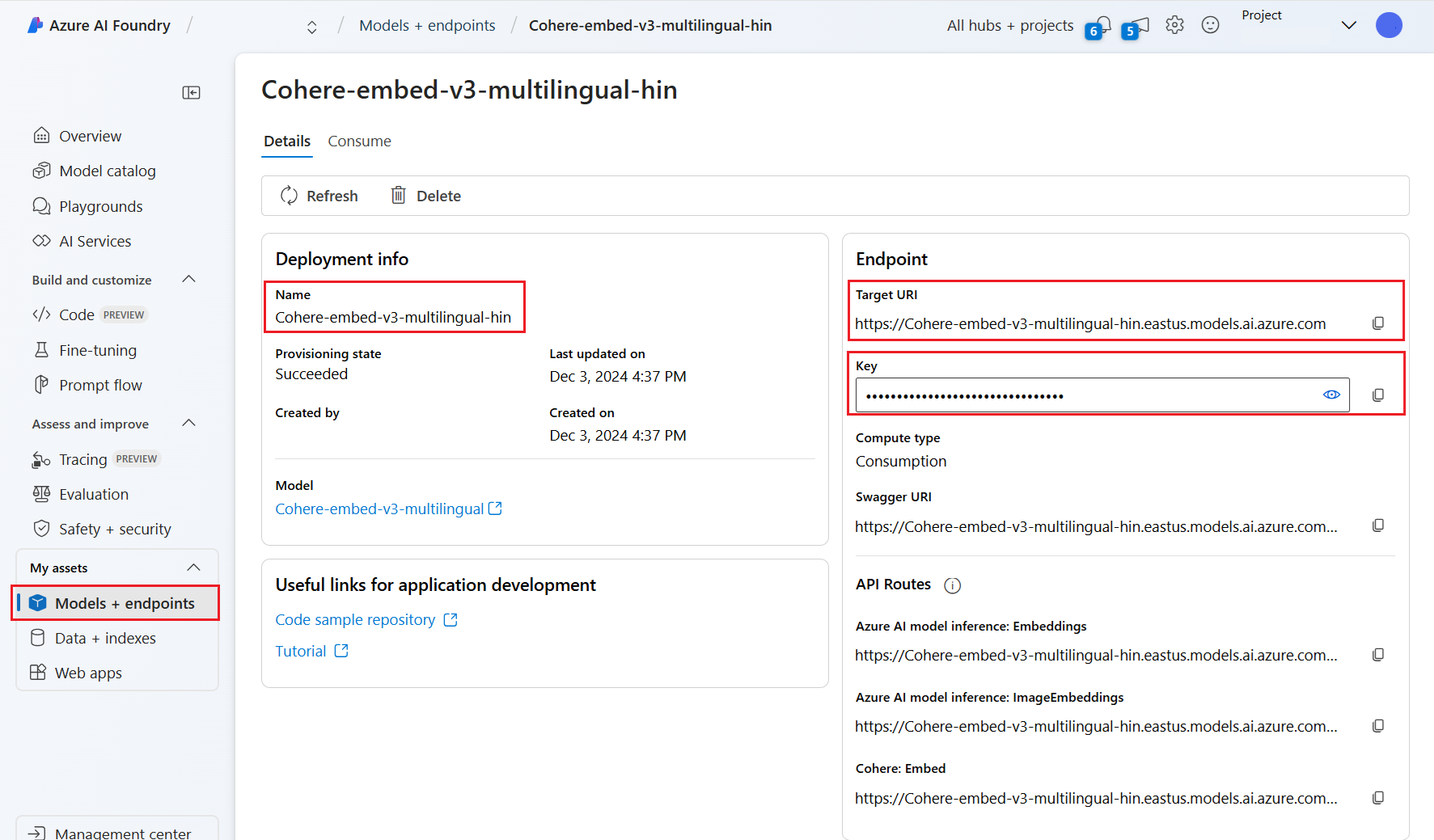

Wait for the model to finish deploying by monitoring the Provisioning State. It should change from "Provisioning" to "Updating" to "Succeeded". You might need to select Refresh every few minutes to see the status update.

Make a note of the target URI, key, and model name. You need these values for the vectorizer definition in a search index, and for the skillset that calls the model endpoints during indexing.

Optionally, you can change your endpoint to use Token authentication instead of Key authentication. If you enable token authentication, you only need to copy the URI and model name, but make a note of which region the model is deployed to.

You can now configure a search index and indexer to use the deployed model.

To use the model during indexing, see steps to enable integrated vectorization. Be sure to use the Azure Machine Learning (AML) skill, and not the AzureOpenAIEmbedding skill. The next section describes the skill configuration.

To use the model as a vectorizer at query time, see Configure a vectorizer. Be sure to use the Azure AI Foundry model catalog vectorizer for this step.

Sample AML skill payloads

When you deploy embedding models from the Azure AI Foundry model catalog you connect to them using the AML skill in Azure AI Search for indexing workloads.

This section describes the AML skill definition and index mappings. It includes sample payloads that are already configured to work with their corresponding deployed endpoints. For more technical details on how these payloads work, read about the Skill context and input annotation language.

This AML skill payload works with the following image embedding models from Azure AI Foundry:

- Facebook-DinoV2-Image-Embeddings-ViT-Base

- Facebook-DinoV2-Image-Embeddings-ViT-Giant

It assumes that your images come from the /document/normalized_images/* path that is created by enabling built in image extraction. If your images come from a different path or are stored as URLs, update all references to the /document/normalized_images/* path according.

The URI and key are generated when you deploy the model from the catalog. For more information about these values, see Add and configure models to Azure AI model inference.

{

"@odata.type": "#Microsoft.Skills.Custom.AmlSkill",

"context": "/document/normalized_images/*",

"uri": "https://myproject-1a1a-abcd.eastus.inference.ml.azure.com/score",

"timeout": "PT1M",

"key": "bbbbbbbb-1c1c-2d2d-3e3e-444444444444",

"inputs": [

{

"name": "input_data",

"sourceContext": "/document/normalized_images/*",

"inputs": [

{

"name": "columns",

"source": "=['image', 'text']"

},

{

"name": "index",

"source": "=[0]"

},

{

"name": "data",

"source": "=[[$(/document/normalized_images/*/data), '']]"

}

]

}

],

"outputs": [

{

"name": "image_features"

}

]

}

Sample Azure AI Foundry vectorizer payload

The Azure AI Foundry vectorizer, unlike the AML skill, is tailored to work only with those embedding models that are deployable via the Azure AI Foundry model catalog. The main difference is that you don't have to worry about the request and response payload, but you do have to provide the modelName, which corresponds to the "Model ID" that you copied after deploying the model in Azure AI Foundry portal.

Here's a sample payload of how you would configure the vectorizer on your index definition given the properties copied from Azure AI Foundry.

For Cohere models, you should NOT add the /v1/embed path to the end of your URL like you did with the skill.

"vectorizers": [

{

"name": "<YOUR_VECTORIZER_NAME_HERE>",

"kind": "aml",

"amlParameters": {

"uri": "<YOUR_URL_HERE>",

"key": "<YOUR_PRIMARY_KEY_HERE>",

"modelName": "<YOUR_MODEL_ID_HERE>"

},

}

]

Connect using token authentication

If you can't use key-based authentication, you can instead configure the AML skill and Azure AI Foundry vectorizer connection for token authentication via role-based access control on Azure. The search service must have a system or user-assigned managed identity, and the identity must have Owner or Contributor permissions for your AML project workspace. You can then remove the key field from your skill and vectorizer definition, replacing it with the resourceId field. If your AML project and search service are in different regions, also provide the region field.

"uri": "<YOUR_URL_HERE>",

"resourceId": "subscriptions/<YOUR_SUBSCRIPTION_ID_HERE>/resourceGroups/<YOUR_RESOURCE_GROUP_NAME_HERE>/providers/Microsoft.MachineLearningServices/workspaces/<YOUR_AML_WORKSPACE_NAME_HERE>/onlineendpoints/<YOUR_AML_ENDPOINT_NAME_HERE>",

"region": "westus", // Only need if AML project lives in different region from search service