Track Azure Synapse Analytics ML experiments with MLflow and Azure Machine Learning

In this article, learn how to enable MLflow to connect to Azure Machine Learning while working in an Azure Synapse Analytics workspace. You can leverage this configuration for tracking, model management and model deployment.

MLflow is an open-source library for managing the life cycle of your machine learning experiments. MLflow Tracking is a component of MLflow that logs and tracks your training run metrics and model artifacts. Learn more about MLflow.

If you have an MLflow Project to train with Azure Machine Learning, see Train ML models with MLflow Projects and Azure Machine Learning (preview).

Prerequisites

Install libraries

To install libraries on your dedicated cluster in Azure Synapse Analytics:

Create a requirements.txt file with the packages your experiments require, and make sure it also includes the following packages:

requirements.txt

mlflow

azureml-mlflow

azure-ai-ml

Navigate to the Azure Synapse Analytics workspace portal.

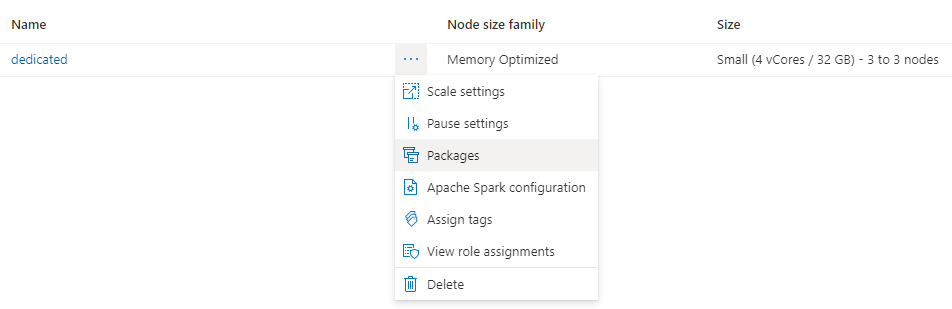

Navigate to the Manage tab and select Apache Spark Pools.

Click the three dots next to the cluster name, and select Packages.

On the Requirements files section, click on Upload.

Upload the requirements.txt file.

Wait for your cluster to restart.
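Before uploading, it can be useful to confirm that the file lists the three packages this article requires. The following is a minimal sketch that uses only the Python standard library. The helper name `missing_required_packages` and its simplified parsing rules (comments, extras, and version specifiers are stripped) are assumptions for illustration and are not part of any Azure tooling.

```python
import re

# Packages this article asks you to include in requirements.txt.
REQUIRED_PACKAGES = ("mlflow", "azureml-mlflow", "azure-ai-ml")


def missing_required_packages(requirements_text):
    """Return the required packages that do not appear in the requirements text."""
    listed = set()
    for line in requirements_text.splitlines():
        # Drop inline comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            # Skip blank lines and pip options such as "-r other.txt".
            continue
        # Keep only the package name: cut at the first extras, version, or marker character.
        name = re.split(r"[\[<>=!~;\s]", line, maxsplit=1)[0]
        # Package names are case-insensitive and treat "_" and "." like "-".
        listed.add(re.sub(r"[-_.]+", "-", name).lower())
    return [pkg for pkg in REQUIRED_PACKAGES if pkg not in listed]


example = """
scikit-learn==1.3.0
mlflow>=2.0   # tracking client
azureml-mlflow
"""
print(missing_required_packages(example))  # ['azure-ai-ml']
```

An empty list means all three packages are present. The check doesn't validate versions or confirm that the packages can be installed on the pool.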

Track experiments with MLflow

Azure Synapse Analytics can be configured to track experiments with MLflow in an Azure Machine Learning workspace. Azure Machine Learning provides a centralized repository to manage the entire lifecycle of experiments, models, and deployments. It also provides an easier path to deployment through Azure Machine Learning deployment options.

Configuring your notebooks to use MLflow connected to Azure Machine Learning

To use Azure Machine Learning as your centralized repository for experiments, you can use MLflow. In each notebook you work in, configure the tracking URI to point to the workspace you want to use. The following example shows how:

Configure tracking URI

Get the tracking URI for your workspace:

APPLIES TO: Azure CLI ml extension v2 (current)

Sign in and configure your workspace:

az account set --subscription <subscription-ID>

az configure --defaults workspace=<workspace-name> group=<resource-group-name> location=<location>

Get the tracking URI by using the az ml workspace command:

az ml workspace show --query mlflow_tracking_uri

Configure the tracking URI:

Use the set_tracking_uri() method to set the MLflow tracking URI to the tracking URI of your workspace.

import mlflow

mlflow.set_tracking_uri(mlflow_tracking_uri)

Tip

Some scenarios involve working in a shared environment like an Azure Databricks cluster or an Azure Synapse Analytics cluster. In these cases, it's useful to set the MLFLOW_TRACKING_URI environment variable at the cluster level rather than for each session. Setting the variable at the cluster level automatically configures the MLflow tracking URI to point to Azure Machine Learning for all sessions in the cluster.
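As an illustration of the environment-variable approach, the sketch below stores a tracking URI in `MLFLOW_TRACKING_URI` for the current process. The helper name `export_tracking_uri` is hypothetical, and the check for the `azureml://` scheme is an assumption about the value returned for the workspace. How to set the variable at the cluster level depends on the platform and isn't covered here.

```python
import os


def export_tracking_uri(tracking_uri, env=None):
    """Store the tracking URI in MLFLOW_TRACKING_URI so MLflow can read it from the environment."""
    env = os.environ if env is None else env
    # The CLI prints JSON by default, so a pasted value may still carry double quotes.
    uri = tracking_uri.strip().strip('"')
    # Assumption: Azure Machine Learning tracking URIs use the azureml:// scheme.
    if not uri.startswith("azureml://"):
        raise ValueError(f"Not an Azure Machine Learning tracking URI: {uri!r}")
    env["MLFLOW_TRACKING_URI"] = uri
    return uri


# Demonstration against a plain dict, so the real environment is left untouched.
demo_env = {}
export_tracking_uri('"azureml://<rest-of-tracking-URI>"', env=demo_env)
print(demo_env["MLFLOW_TRACKING_URI"])
```

Calling the helper without `env` writes to `os.environ`, which affects only the current session.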

Configure authentication

Once tracking is configured, you also need to configure how to authenticate to the associated workspace. By default, the Azure Machine Learning plugin for MLflow performs interactive authentication by opening the default browser to prompt for credentials. For additional ways to configure authentication for MLflow in Azure Machine Learning workspaces, see Configure MLflow for Azure Machine Learning: Configure authentication.

For interactive jobs where there's a user connected to the session, you can rely on interactive authentication. No further action is required.

Warning

Interactive browser authentication blocks code execution when it prompts for credentials. This approach isn't suitable for authentication in unattended environments like training jobs. We recommend that you configure a different authentication mode in those environments.

For scenarios that require unattended execution, you need to configure a service principal to communicate with Azure Machine Learning. For information about creating a service principal, see Configure a service principal.

Use the tenant ID, client ID, and client secret of your service principal in the following code:

import os

os.environ["AZURE_TENANT_ID"] = "<Azure-tenant-ID>"

os.environ["AZURE_CLIENT_ID"] = "<Azure-client-ID>"

os.environ["AZURE_CLIENT_SECRET"] = "<Azure-client-secret>"

Tip

When you work in shared environments, we recommend that you configure these environment variables at the compute level. As a best practice, manage them as secrets in an instance of Azure Key Vault.

For instance, in an Azure Databricks cluster configuration, you can use secrets in environment variables in the following way: AZURE_CLIENT_SECRET={{secrets/<scope-name>/<secret-name>}}. For more information about implementing this approach in Azure Databricks, see Reference a secret in an environment variable, or refer to documentation for your platform.
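For unattended runs, it can help to fail fast when any of the three service principal variables is missing, rather than discovering the problem partway through a job. The following is a minimal sketch under that assumption. The helper names are hypothetical, and the check only looks for empty values or untouched `<...>` placeholders. It doesn't verify that the credentials are valid, and it never prints their values.

```python
import os

# Environment variable names used for service principal authentication in this article.
SERVICE_PRINCIPAL_VARS = ("AZURE_TENANT_ID", "AZURE_CLIENT_ID", "AZURE_CLIENT_SECRET")


def missing_service_principal_vars(env=None):
    """Return the names of service principal variables that are unset or still placeholders."""
    env = os.environ if env is None else env
    missing = []
    for name in SERVICE_PRINCIPAL_VARS:
        value = env.get(name, "").strip()
        # Treat empty strings and untouched "<...>" placeholders as missing.
        if not value or value.startswith("<"):
            missing.append(name)
    return missing


def require_service_principal(env=None):
    """Raise before any tracking call if the service principal settings are incomplete."""
    missing = missing_service_principal_vars(env)
    if missing:
        raise RuntimeError("Missing service principal settings: " + ", ".join(missing))


print(missing_service_principal_vars({"AZURE_TENANT_ID": "example-tenant"}))
# ['AZURE_CLIENT_ID', 'AZURE_CLIENT_SECRET']
```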

Experiment names in Azure Machine Learning

By default, Azure Machine Learning tracks runs in an experiment called Default. It's usually a good idea to set the experiment you're going to work on. Use the following syntax to set the experiment's name:

mlflow.set_experiment(experiment_name="experiment-name")

Tracking parameters, metrics and artifacts

You can then use MLflow in Azure Synapse Analytics in the same way as you're used to. For details, see Log & view metrics and log files.
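As a brief illustration, the sketch below logs a set of parameters and metrics inside a single run by using `mlflow.start_run()`, `mlflow.log_params()`, and `mlflow.log_metrics()`. The function `log_training_run` and the `RecordingTracker` stand-in are hypothetical names introduced here. The stand-in only exists so the example can run without a workspace connection. Omit the `tracker` argument to log through the real `mlflow` package.

```python
from contextlib import nullcontext  # used only by the stand-in tracker below


def log_training_run(params, metrics, tracker=None):
    """Log parameters and metrics inside one MLflow run."""
    if tracker is None:
        import mlflow as tracker  # requires the mlflow package to be installed
    with tracker.start_run():
        tracker.log_params(params)    # for example, hyperparameters
        tracker.log_metrics(metrics)  # for example, evaluation scores


class RecordingTracker:
    """Hypothetical stand-in that mimics the three MLflow calls used above."""

    def __init__(self):
        self.params, self.metrics = {}, {}

    def start_run(self):
        return nullcontext()

    def log_params(self, params):
        self.params.update(params)

    def log_metrics(self, metrics):
        self.metrics.update(metrics)


tracker = RecordingTracker()
log_training_run({"max_depth": 5}, {"rmse": 0.42}, tracker=tracker)
print(tracker.params, tracker.metrics)  # {'max_depth': 5} {'rmse': 0.42}
```

Passing the tracker as an argument is a design choice for testability. In a notebook that's already configured for tracking, calling the `mlflow` functions directly is equivalent.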

Registering models in the registry with MLflow

Models can be registered in an Azure Machine Learning workspace, which offers a centralized repository to manage their lifecycle. The following example logs a model trained with Spark MLlib and also registers it in the registry.

mlflow.spark.log_model(model,
                       artifact_path="model",
                       registered_model_name="model_name")

If a registered model with the name doesn't exist, the method registers a new model, creates version 1, and returns a ModelVersion MLflow object.

If a registered model with the name already exists, the method creates a new model version and returns the version object.

You can manage models registered in Azure Machine Learning by using MLflow. For more details, see Manage models registries in Azure Machine Learning with MLflow.

Deploying and consuming models registered in Azure Machine Learning

Models registered in Azure Machine Learning Service using MLflow can be consumed as:

An Azure Machine Learning endpoint (real-time and batch): This deployment lets you use Azure Machine Learning deployment capabilities for both real-time and batch inference in Azure Container Instances (ACI), Azure Kubernetes Service (AKS), or Managed Endpoints.

MLflow model objects or Pandas UDFs, which can be used in Azure Synapse Analytics notebooks in streaming or batch pipelines.

Deploy models to Azure Machine Learning endpoints

You can use the azureml-mlflow plugin to deploy a model to your Azure Machine Learning workspace. For complete details about how to deploy models to the different targets, see How to deploy MLflow models.

Important

Models must be registered in the Azure Machine Learning registry before you can deploy them. Azure Machine Learning doesn't support deploying unregistered models.

Deploy models for batch scoring using UDFs

You can choose Azure Synapse Analytics clusters for batch scoring. The MLflow model is loaded and used as a Spark Pandas UDF to score new data.

import mlflow
from pyspark.sql.types import ArrayType, FloatType

# last_run_id, model_path, table_name, and required_conditions are placeholders; replace them with your own values.
model_uri = f"runs:/{last_run_id}/{model_path}"

# Create a Spark UDF for the MLflow model
pyfunc_udf = mlflow.pyfunc.spark_udf(spark, model_uri)

# Load scoring data into a Spark DataFrame
scoreDf = spark.table(table_name).where(required_conditions)

# Make predictions; list the model's remaining input columns in place of "…"
preds = (scoreDf
    .withColumn('target_column_name', pyfunc_udf('Input_column1', 'Input_column2', 'Input_column3', …))
)

display(preds)
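The model URI passed to `mlflow.pyfunc.spark_udf` can point either to a model logged in a run (`runs:/<run_id>/<artifact_path>`) or to a version of a registered model (`models:/<name>/<version>`). The helpers below are a small sketch for building both forms. The function names are hypothetical, and the run ID and model name in the usage lines are placeholders.

```python
def run_model_uri(run_id, artifact_path):
    """Build the URI of a model logged as an artifact of a run."""
    # Strip stray slashes so the parts join with exactly one separator.
    return f"runs:/{run_id}/{artifact_path.strip('/')}"


def registered_model_uri(name, version):
    """Build the URI of a specific version of a registered model."""
    return f"models:/{name}/{version}"


print(run_model_uri("<run-id>", "model"))      # runs:/<run-id>/model
print(registered_model_uri("model_name", 1))   # models:/model_name/1
```

Scoring from a registered model version ties the batch job to a model that's tracked in the registry rather than to one specific training run.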

Clean up resources

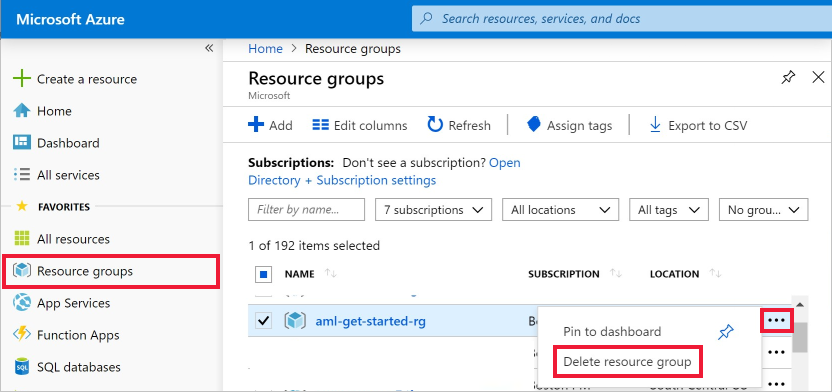

If you want to keep your Azure Synapse Analytics workspace but no longer need the Azure Machine Learning workspace, you can delete the Azure Machine Learning workspace. Logged metrics and artifacts can't currently be deleted individually. If you don't plan to use them, delete the resource group that contains the storage account and workspace so that you don't incur any charges:

In the Azure portal, select Resource groups on the far left.

From the list, select the resource group you created.

Select Delete resource group.

Enter the resource group name. Then select Delete.