Track Azure Databricks machine learning experiments with MLflow and Azure Machine Learning

MLflow is an open-source library for managing the life cycle of your machine learning experiments. You can use MLflow to integrate Azure Databricks with Azure Machine Learning to ensure you get the best from both of the products.

In this article, you learn:

- The required libraries needed to use MLflow with Azure Databricks and Azure Machine Learning.

- How to track Azure Databricks runs with MLflow in Azure Machine Learning.

- How to log models with MLflow to get them registered in Azure Machine Learning.

- How to deploy and consume models registered in Azure Machine Learning.

Prerequisites

- The

azureml-mlflowpackage, which handles the connectivity with Azure Machine Learning, including authentication. - An Azure Databricks workspace and cluster.

- An Azure Machine Learning Workspace.

See which access permissions you need to perform your MLflow operations with your workspace.

Example notebooks

The Training models in Azure Databricks and deploying them on Azure Machine Learning repository demonstrates how to train models in Azure Databricks and deploy them in Azure Machine Learning. It also describes how to track the experiments and models with the MLflow instance in Azure Databricks. It describes how to use Azure Machine Learning for deployment.

Install libraries

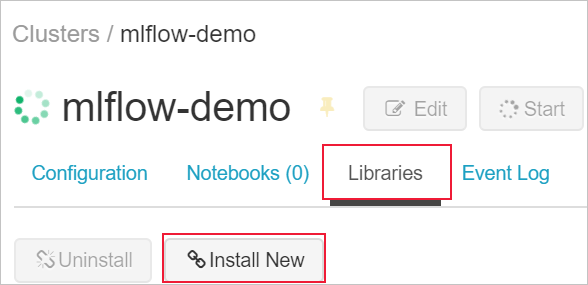

To install libraries on your cluster:

Navigate to the Libraries tab and select Install New.

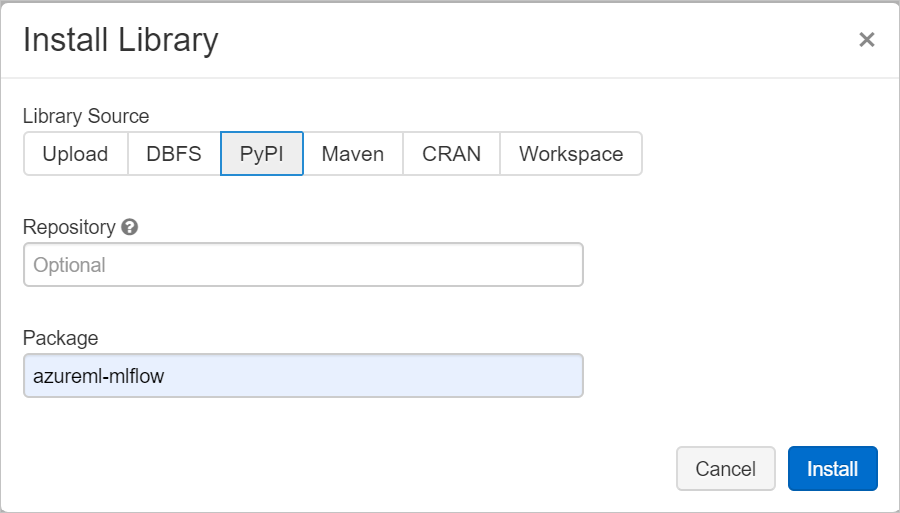

In the Package field, type azureml-mlflow and then select Install. Repeat this step as necessary to install other packages to your cluster for your experiment.

Track Azure Databricks runs with MLflow

You can configure Azure Databricks to track experiments using MLflow in two ways:

- Track in both Azure Databricks workspace and Azure Machine Learning workspace (dual-tracking)

- Track exclusively on Azure Machine Learning

By default, when you link your Azure Databricks workspace, dual-tracking is configured for you.

Dual-track on Azure Databricks and Azure Machine Learning

Linking your Azure Databricks workspace to your Azure Machine Learning workspace enables you to track your experiment data in the Azure Machine Learning workspace and Azure Databricks workspace at the same time. This configuration is called Dual-tracking.

Dual-tracking in a private link enabled Azure Machine Learning workspace isn't currently supported, regardless of outbound rules configuration or if Azure Databricks was deployed in your own network (VNet injection). Configure exclusive tracking with your Azure Machine Learning workspace instead. Notice that this doesn't imply that VNet inject

Dual-tracking isn't currently supported in Microsoft Azure operated by 21Vianet. Configure exclusive tracking with your Azure Machine Learning workspace instead.

To link your Azure Databricks workspace to a new or existing Azure Machine Learning workspace:

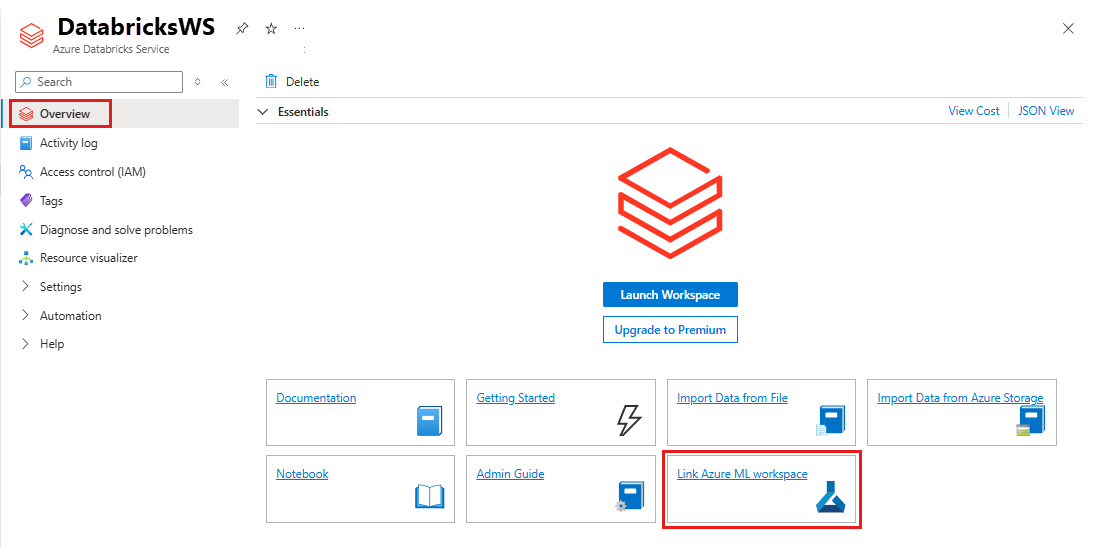

Sign in to the Azure portal.

Navigate to your Azure Databricks workspace Overview page.

Select Link Azure Machine Learning workspace.

After you link your Azure Databricks workspace with your Azure Machine Learning workspace, MLflow tracking is automatically tracked in the following places:

- The linked Azure Machine Learning workspace.

- Your original Azure Databricks workspace.

You can use then MLflow in Azure Databricks in the same way that you're used to. The following example sets the experiment name as usual in Azure Databricks and start logging some parameters.

import mlflow

experimentName = "/Users/{user_name}/{experiment_folder}/{experiment_name}"

mlflow.set_experiment(experimentName)

with mlflow.start_run():

mlflow.log_param('epochs', 20)

pass

Note

As opposed to tracking, model registries don't support registering models at the same time on both Azure Machine Learning and Azure Databricks. For more information, see Register models in the registry with MLflow.

Track exclusively on Azure Machine Learning workspace

If you prefer to manage your tracked experiments in a centralized location, you can set MLflow tracking to only track in your Azure Machine Learning workspace. This configuration has the advantage of enabling easier path to deployment using Azure Machine Learning deployment options.

Warning

For private link enabled Azure Machine Learning workspace, you have to deploy Azure Databricks in your own network (VNet injection) to ensure proper connectivity.

Configure the MLflow tracking URI to point exclusively to Azure Machine Learning, as shown in the following example:

Configure tracking URI

Get the tracking URI for your workspace.

APPLIES TO:

Azure CLI ml extension v2 (current)

Azure CLI ml extension v2 (current)Sign in and configure your workspace.

az account set --subscription <subscription> az configure --defaults workspace=<workspace> group=<resource-group> location=<location>You can get the tracking URI using the

az ml workspacecommand.az ml workspace show --query mlflow_tracking_uri

Configure the tracking URI.

The method

set_tracking_uri()points the MLflow tracking URI to that URI.import mlflow mlflow.set_tracking_uri(mlflow_tracking_uri)

Tip

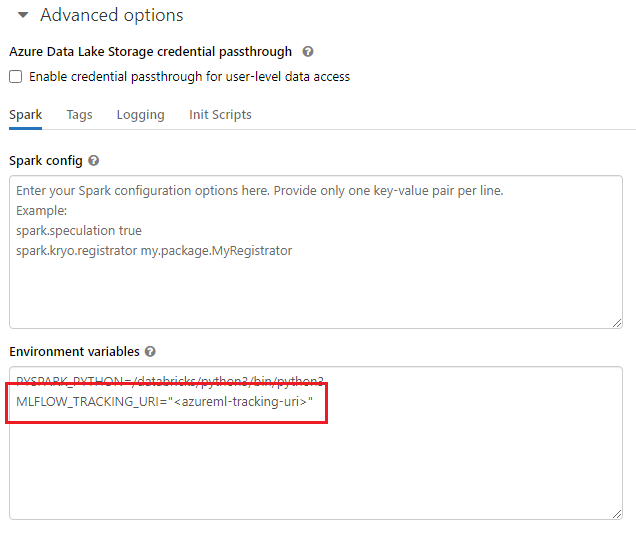

When working with shared environments, like an Azure Databricks cluster, Azure Synapse Analytics cluster, or similar, you can set the environment variable MLFLOW_TRACKING_URI at the cluster level. This approach allows you to automatically configure the MLflow tracking URI to point to Azure Machine Learning for all the sessions that run in the cluster rather than to do it on a per-session basis.

After you configure the environment variable, any experiment running in such cluster is tracked in Azure Machine Learning.

Configure authentication

After you configure tracking, configure how to authenticate to the associated workspace. By default, the Azure Machine Learning plugin for MLflow opens a browser to interactively prompt for credentials. For other ways to configure authentication for MLflow in Azure Machine Learning workspaces, see Configure MLflow for Azure Machine Learning: Configure authentication.

For interactive jobs where there's a user connected to the session, you can rely on interactive authentication. No further action is required.

Warning

Interactive browser authentication blocks code execution when it prompts for credentials. This approach isn't suitable for authentication in unattended environments like training jobs. We recommend that you configure a different authentication mode in those environments.

For scenarios that require unattended execution, you need to configure a service principal to communicate with Azure Machine Learning. For information about creating a service principal, see Configure a service principal.

Use the tenant ID, client ID, and client secret of your service principal in the following code:

import os

os.environ["AZURE_TENANT_ID"] = "<Azure-tenant-ID>"

os.environ["AZURE_CLIENT_ID"] = "<Azure-client-ID>"

os.environ["AZURE_CLIENT_SECRET"] = "<Azure-client-secret>"

Tip

When you work in shared environments, we recommend that you configure these environment variables at the compute level. As a best practice, manage them as secrets in an instance of Azure Key Vault.

For instance, in an Azure Databricks cluster configuration, you can use secrets in environment variables in the following way: AZURE_CLIENT_SECRET={{secrets/<scope-name>/<secret-name>}}. For more information about implementing this approach in Azure Databricks, see Reference a secret in an environment variable, or refer to documentation for your platform.

Name experiment in Azure Machine Learning

When you configure MLflow to exclusively track experiments in Azure Machine Learning workspace, the experiment naming convention has to follow the one used by Azure Machine Learning. In Azure Databricks, experiments are named with the path to where the experiment is saved, for instance /Users/alice@contoso.com/iris-classifier. However, in Azure Machine Learning, you provide the experiment name directly. The same experiment would be named iris-classifier directly.

mlflow.set_experiment(experiment_name="experiment-name")

Track parameters, metrics and artifacts

After this configuration, you can use MLflow in Azure Databricks in the same way as you're used to. For more information, see Log & view metrics and log files.

Log models with MLflow

After your model is trained, you can log it to the tracking server with the mlflow.<model_flavor>.log_model() method. <model_flavor> refers to the framework associated with the model. Learn what model flavors are supported.

In the following example, a model created with the Spark library MLLib is being registered.

mlflow.spark.log_model(model, artifact_path = "model")

The flavor spark doesn't correspond to the fact that you're training a model in a Spark cluster. Instead, it follows from the training framework used. You can train a model using TensorFlow with Spark. The flavor to use would be tensorflow.

Models are logged inside of the run being tracked. That fact means that models are available in either both Azure Databricks and Azure Machine Learning (default) or exclusively in Azure Machine Learning if you configured the tracking URI to point to it.

Important

The parameter registered_model_name has not been specified. For more information about this parameter and the registry, see Registering models in the registry with MLflow.

Register models in the registry with MLflow

As opposed to tracking, model registries can't operate at the same time in Azure Databricks and Azure Machine Learning. They have to use either one or the other. By default, model registries use the Azure Databricks workspace. If you choose to set MLflow tracking to only track in your Azure Machine Learning workspace, the model registry is the Azure Machine Learning workspace.

If you use the default configuration, the following code logs a model inside the corresponding runs of both Azure Databricks and Azure Machine Learning, but it registers it only on Azure Databricks.

mlflow.spark.log_model(model, artifact_path = "model",

registered_model_name = 'model_name')

- If a registered model with the name doesn’t exist, the method registers a new model, creates version 1, and returns a

ModelVersionMLflow object. - If a registered model with the name already exists, the method creates a new model version and returns the version object.

Use Azure Machine Learning registry with MLflow

If you want to use Azure Machine Learning Model Registry instead of Azure Databricks, we recommend that you set MLflow tracking to only track in your Azure Machine Learning workspace. This approach removes the ambiguity of where models are being registered and simplifies the configuration.

If you want to continue using the dual-tracking capabilities but register models in Azure Machine Learning, you can instruct MLflow to use Azure Machine Learning for model registries by configuring the MLflow Model Registry URI. This URI has the same format and value that the MLflow that tracks URI.

mlflow.set_registry_uri(azureml_mlflow_uri)

Note

The value of azureml_mlflow_uri was obtained in the same way as described in Set MLflow tracking to only track in your Azure Machine Learning workspace.

For a complete example of this scenario, see Training models in Azure Databricks and deploying them on Azure Machine Learning.

Deploy and consume models registered in Azure Machine Learning

Models registered in Azure Machine Learning Service using MLflow can be consumed as:

- An Azure Machine Learning endpoint (real-time and batch). This deployment allows you to use Azure Machine Learning deployment capabilities for both real-time and batch inference in Azure Container Instances, Azure Kubernetes, or Managed Inference Endpoints.

- MLFlow model objects or Pandas user-defined functions (UDFs), which can be used in Azure Databricks notebooks in streaming or batch pipelines.

Deploy models to Azure Machine Learning endpoints

You can use the azureml-mlflow plugin to deploy a model to your Azure Machine Learning workspace. For more information about how to deploy models to the different targets How to deploy MLflow models.

Important

Models need to be registered in Azure Machine Learning registry in order to deploy them. If your models are registered in the MLflow instance inside Azure Databricks, register them again in Azure Machine Learning. For more information, see Training models in Azure Databricks and deploying them on Azure Machine Learning

Deploy models to Azure Databricks for batch scoring using UDFs

You can choose Azure Databricks clusters for batch scoring. By using Mlflow, you can resolve any model from the registry you're connected to. You usually use one of the following methods:

- If your model was trained and built with Spark libraries like

MLLib, usemlflow.pyfunc.spark_udfto load a model and used it as a Spark Pandas UDF to score new data. - If your model wasn't trained or built with Spark libraries, either use

mlflow.pyfunc.load_modelormlflow.<flavor>.load_modelto load the model in the cluster driver. You need to orchestrate any parallelization or work distribution you want to happen in the cluster. MLflow doesn't install any library your model requires to run. Those libraries need to be installed in the cluster before running it.

The following example shows how to load a model from the registry named uci-heart-classifier and used it as a Spark Pandas UDF to score new data.

from pyspark.sql.types import ArrayType, FloatType

model_name = "uci-heart-classifier"

model_uri = "models:/"+model_name+"/latest"

#Create a Spark UDF for the MLFlow model

pyfunc_udf = mlflow.pyfunc.spark_udf(spark, model_uri)

For more ways to reference models from the registry, see Loading models from registry.

After the model is loaded, you can use this command to score new data.

#Load Scoring Data into Spark Dataframe

scoreDf = spark.table({table_name}).where({required_conditions})

#Make Prediction

preds = (scoreDf

.withColumn('target_column_name', pyfunc_udf('Input_column1', 'Input_column2', ' Input_column3', …))

)

display(preds)

Clean up resources

If you want to keep your Azure Databricks workspace, but no longer need the Azure Machine Learning workspace, you can delete the Azure Machine Learning workspace. This action results in unlinking your Azure Databricks workspace and the Azure Machine Learning workspace.

If you don't plan to use the logged metrics and artifacts in your workspace, delete the resource group that contains the storage account and workspace.

- In the Azure portal, search for Resource groups. Under services, select Resource groups.

- In the Resource groups list, find and select the resource group that you created to open it.

- In the Overview page, select Delete resource group.

- To verify deletion, enter the resource group's name.