Create a custom event trigger to run a pipeline in Azure Data Factory

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

Event triggers in Azure Data Factory let you run pipelines automatically when specific events occur in your data sources. They're a key feature of event-driven architecture and enable real-time data integration and processing.

Event-driven architecture is a common data integration pattern that involves the production, detection, and consumption of events, and the reaction to them. Data integration scenarios often require Azure Data Factory customers to trigger pipelines when certain events occur. Data Factory's native integration with Azure Event Grid covers custom topics: you send events to an Event Grid topic, and Data Factory subscribes to the topic, listens for events, and triggers pipelines accordingly.

The integration described in this article depends on Azure Event Grid. Make sure that your subscription is registered with the Event Grid resource provider. For more information, see Resource providers and types. You must be able to perform the Microsoft.EventGrid/eventSubscriptions/ action, which is part of the EventGrid EventSubscription Contributor built-in role.

Important

If you're using this feature in Azure Synapse Analytics, make sure that your subscription is also registered with the Data Factory resource provider. Otherwise, you get a message stating that "the creation of an Event Subscription failed."

If you combine pipeline parameters and a custom event trigger, you can parse and reference custom data payloads in pipeline runs. Because the data field in a custom event payload is a free-form JSON key-value structure, you can use its values to control event-driven pipeline runs.

Important

If a key referenced in parameterization is missing from the custom event payload, the trigger run fails. You get a message that states the expression can't be evaluated because the keyName property doesn't exist. In this case, the event doesn't trigger a pipeline run.

Event and trigger use cases

Triggers can be fired by various events, including:

- Blob Created: When a new file is uploaded to a specified container.

- Blob Deleted: When a file is removed from the container.

- Blob Modified: When an existing file is updated.

You can use events to dynamically control your pipeline executions. For example, when a new data file is uploaded to the 'incoming' folder in Azure Blob Storage, a trigger can automatically start a pipeline to process the data, ensuring timely data integration.

Set up a custom topic in Event Grid

To use the custom event trigger in Data Factory, you first need to set up a custom topic in Event Grid.

Go to Event Grid and create the topic yourself. For more information on how to create the custom topic, see Event Grid portal tutorials and Azure CLI tutorials.

Note

The workflow is different from a storage event trigger. Here, Data Factory doesn't set up the topic for you.

Data Factory expects events to follow the Event Grid event schema. Make sure that event payloads have the following fields:

```json
[
  {
    "topic": string,
    "subject": string,
    "id": string,
    "eventType": string,
    "eventTime": string,
    "data": {
      object-unique-to-each-publisher
    },
    "dataVersion": string,
    "metadataVersion": string
  }
]
```
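As a minimal sketch of a payload that satisfies this schema, the following Python builds one event using only the standard library and checks that every listed field is present. The topic path, subject, event type, dataVersion value, and data keys are hypothetical placeholders. The sketch stops short of publishing; sending the array to your topic endpoint (for example, with an Event Grid SDK) is left out.

```python
import json
import uuid
from datetime import datetime, timezone

# Fields listed in the Event Grid event schema above.
REQUIRED_FIELDS = ("topic", "subject", "id", "eventType", "eventTime",
                   "data", "dataVersion", "metadataVersion")


def build_event(topic: str, subject: str, event_type: str, data: dict) -> dict:
    """Return one event dict shaped like the Event Grid event schema."""
    return {
        "topic": topic,
        "subject": subject,
        "id": str(uuid.uuid4()),  # unique ID per event
        "eventType": event_type,
        # ISO 8601 timestamp in UTC.
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,  # free-form object unique to each publisher
        "dataVersion": "1.0",  # hypothetical version chosen by the publisher
        "metadataVersion": "1",
    }


def missing_fields(event: dict) -> list:
    """List any schema fields that are absent from the event."""
    return [name for name in REQUIRED_FIELDS if name not in event]


if __name__ == "__main__":
    # Hypothetical values; replace them with your own topic and payload.
    event = build_event(
        topic="/subscriptions/<subscription-id>/resourceGroups/<rg>"
              "/providers/Microsoft.EventGrid/topics/<topic>",
        subject="factories/sales/copy",
        event_type="copycompleted",
        data={"Department": "Finance", "fileName": "orders.csv"},
    )
    assert not missing_fields(event)
    # Events are sent as a JSON array, matching the schema above.
    print(json.dumps([event], indent=2))
```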

Use Data Factory to create a custom event trigger

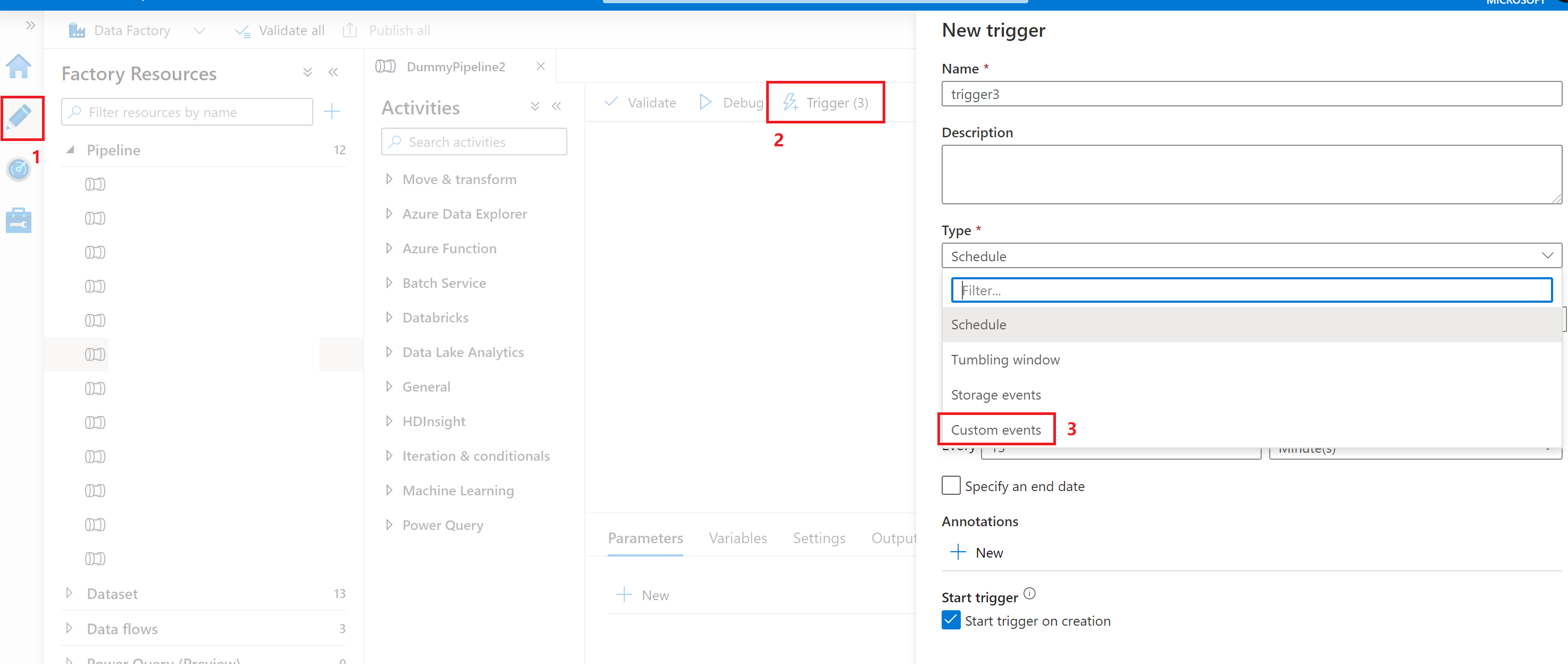

Go to Data Factory and sign in.

Switch to the Edit tab. Look for the pencil icon.

Select Trigger on the menu and then select New/Edit.

On the Add Triggers page, select Choose trigger, and then select + New.

Under Type, select Custom events.

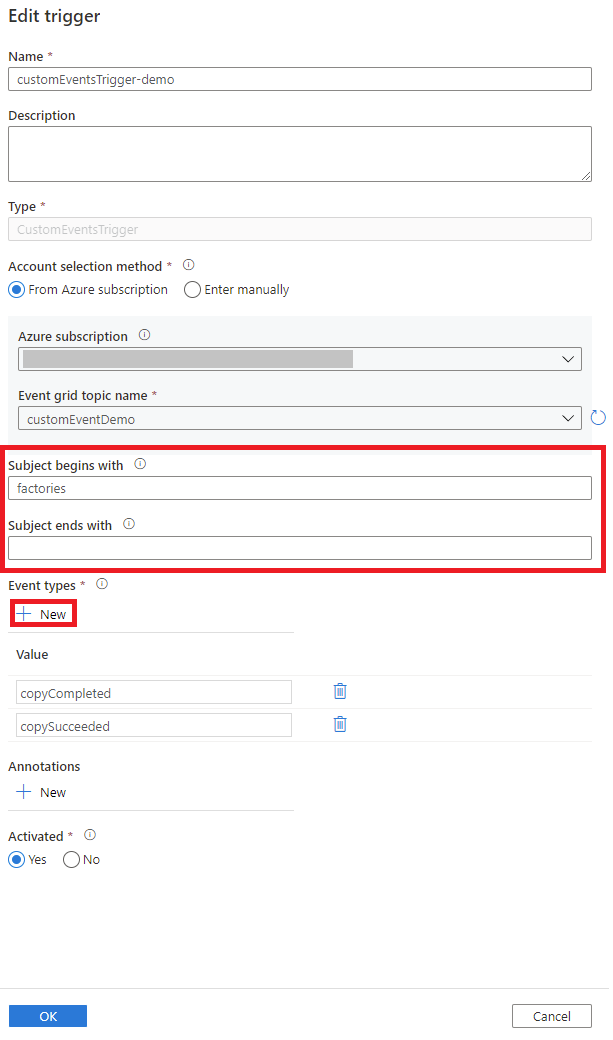

Select your custom topic from the Azure subscription dropdown list or manually enter the event topic scope.

Note

To create or modify a custom event trigger in Data Factory, you need an Azure account with appropriate Azure role-based access control (Azure RBAC) permissions. No other permission is required. The Data Factory service principal doesn't require special permission to your Event Grid. For more information about access control, see the Role-based access control section.

The Subject begins with and Subject ends with properties let you filter trigger events. Both properties are optional.

Use + New to add Event types to filter on. The list of event types uses an OR relationship: when a custom event's eventType property matches any type on the list, a pipeline run is triggered. The event type match is case insensitive. For example, in the following screenshot, the trigger matches all copycompleted or copysucceeded events that have a subject that begins with factories.

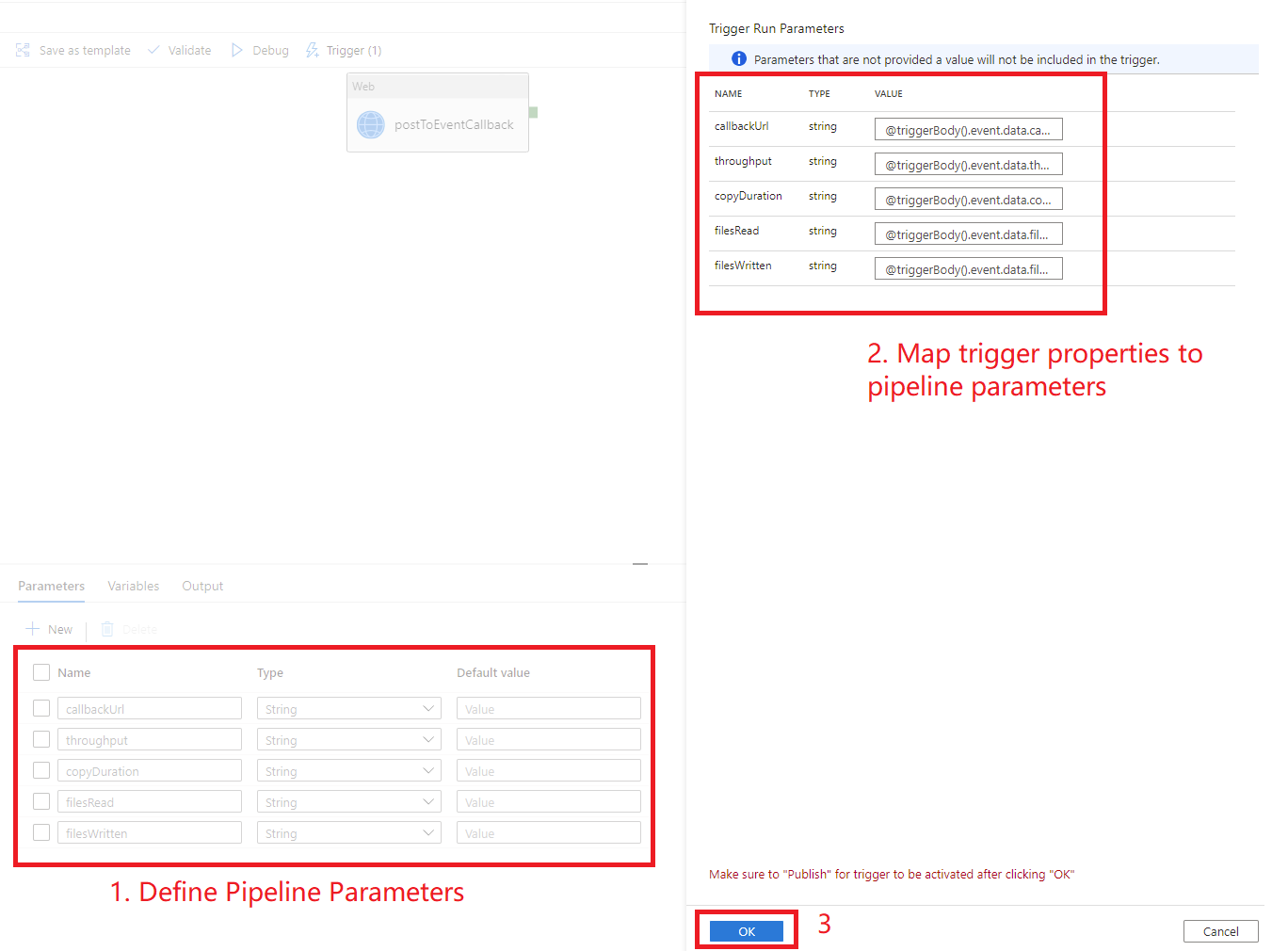

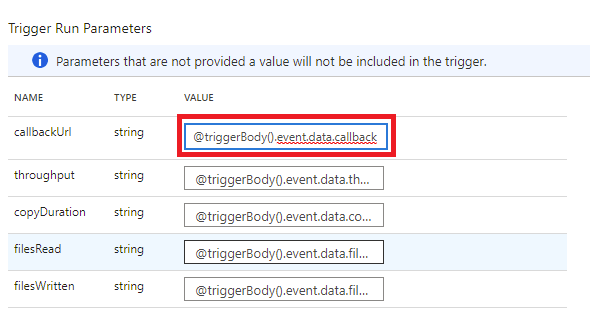
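To make these filter semantics concrete, here's a minimal Python sketch of how the subject and event type filters combine: each subject filter must pass when it's set, and the event type list is an OR with case-insensitive comparison. It's a model for reasoning about which events fire the trigger, not Data Factory's implementation. The function name is hypothetical, and comparing subjects case-sensitively is an assumption that this article doesn't settle.

```python
def matches_trigger(event: dict, event_types: list,
                    subject_begins_with: str = "",
                    subject_ends_with: str = "") -> bool:
    """Approximate the basic filters of a custom event trigger (hypothetical helper)."""
    subject = event.get("subject", "")
    # Optional subject filters: each one applies only when it's set.
    # Assumption: this sketch compares subjects case-sensitively.
    if subject_begins_with and not subject.startswith(subject_begins_with):
        return False
    if subject_ends_with and not subject.endswith(subject_ends_with):
        return False
    # Event types form an OR list and are compared case-insensitively.
    event_type = event.get("eventType", "").casefold()
    return any(event_type == t.casefold() for t in event_types)


if __name__ == "__main__":
    types = ["copycompleted", "copysucceeded"]
    print(matches_trigger({"subject": "factories/a", "eventType": "CopyCompleted"},
                          types, subject_begins_with="factories"))  # True
    print(matches_trigger({"subject": "pipelines/a", "eventType": "copycompleted"},
                          types, subject_begins_with="factories"))  # False
```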

A custom event trigger can parse and send a custom data payload to your pipeline. You create the pipeline parameters and then fill in the values on the Parameters page. Use the format @triggerBody().event.data.keyName, where keyName is a key in the data payload, to parse the payload and pass values to the pipeline parameters. For a detailed explanation, see:

After you enter the parameters, select OK.

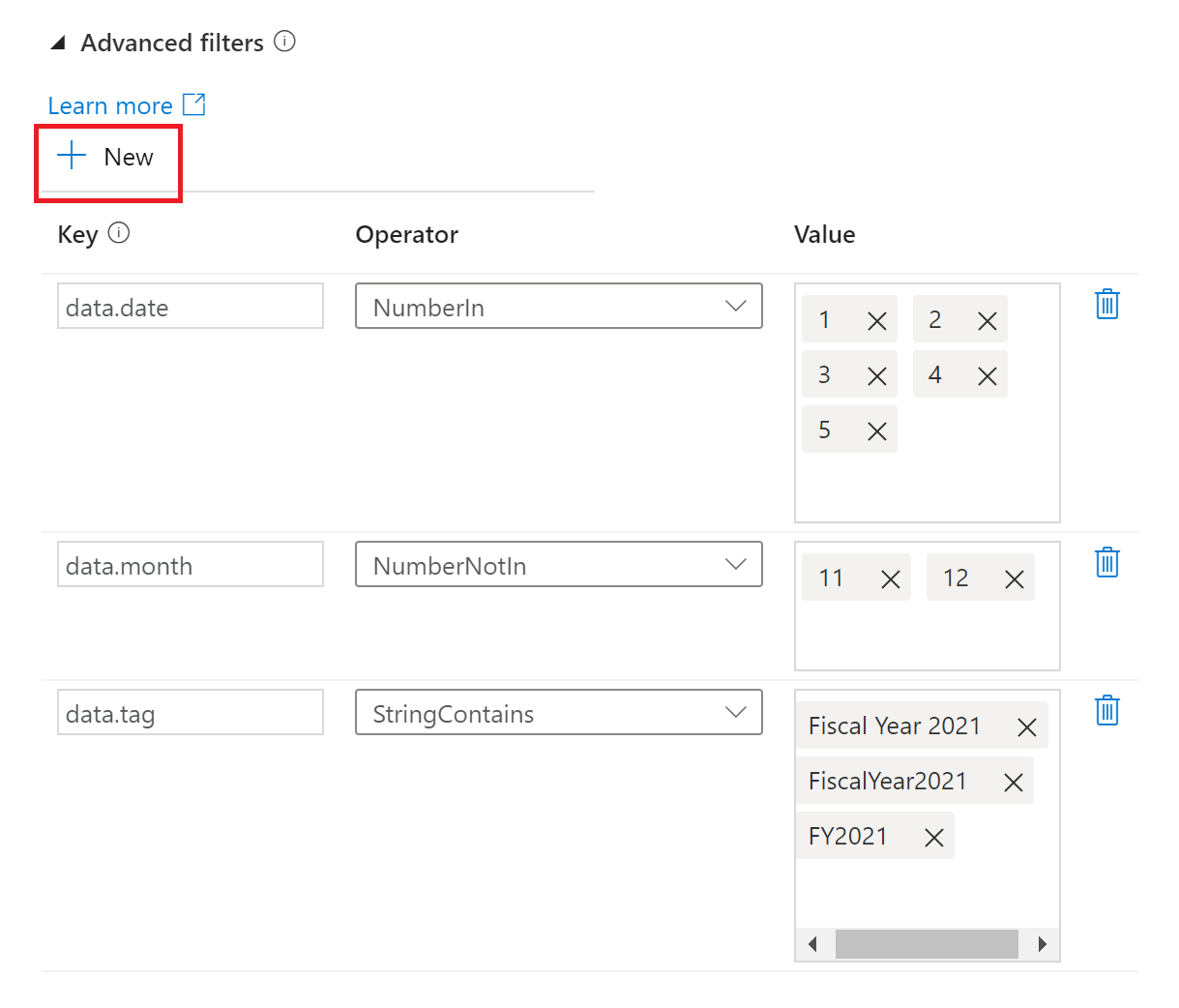
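The following Python sketch models how a parameter value of the form @triggerBody().event.data.keyName resolves against an incoming event, including the failure described earlier when the key is missing from the payload. It's a simplified, hypothetical model: resolve_parameters and MissingKeyError aren't Data Factory APIs, only top-level keys in data are handled, and values that aren't expressions pass through unchanged.

```python
import re

# Matches expressions of the form @triggerBody().event.data.<keyName>.
_EXPR = re.compile(r"^@triggerBody\(\)\.event\.data\.([A-Za-z_][A-Za-z0-9_]*)$")


class MissingKeyError(KeyError):
    """Raised when a referenced key is absent from the event's data payload."""


def resolve_parameters(parameters: dict, event: dict) -> dict:
    """Resolve parameter expressions against one event (simplified model)."""
    data = event.get("data", {})
    resolved = {}
    for name, value in parameters.items():
        match = _EXPR.match(value) if isinstance(value, str) else None
        if match is None:
            resolved[name] = value  # literal value, passed through unchanged
            continue
        key = match.group(1)
        if key not in data:
            # Mirrors the documented behavior: the trigger run fails and
            # no pipeline run is started for this event.
            raise MissingKeyError(f"property '{key}' doesn't exist in the event data")
        resolved[name] = data[key]
    return resolved


if __name__ == "__main__":
    event = {"data": {"fileName": "orders.csv", "Department": "Finance"}}
    params = {"inputFile": "@triggerBody().event.data.fileName", "env": "prod"}
    print(resolve_parameters(params, event))  # {'inputFile': 'orders.csv', 'env': 'prod'}
```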

Advanced filtering

Custom event triggers support advanced filtering capabilities, similar to Event Grid advanced filtering. These conditional filters let pipelines trigger based on values in the event payload. For instance, your event payload might have a field named Department, and the pipeline should trigger only if Department equals Finance. You can also specify more complex logic, such as requiring that the date field is in the list [1, 2, 3, 4, 5], the month field is not in the list [11, 12], and the tag field contains Fiscal Year 2021, FiscalYear2021, or FY2021.

Currently, custom event triggers support a subset of the advanced filtering operators in Event Grid. The following filter conditions are supported:

- NumberIn

- NumberNotIn

- NumberLessThan

- NumberGreaterThan

- NumberLessThanOrEquals

- NumberGreaterThanOrEquals

- BoolEquals

- StringContains

- StringBeginsWith

- StringEndsWith

- StringIn

- StringNotIn

Select + New to add new filter conditions.

Custom event triggers also obey the same limitations as Event Grid, such as:

- 5 advanced filters and 25 filter values across all the filters per custom event trigger.

- 512 characters per string value.

- 5 values for in and not in operators.

- Keys can't have the . (dot) character in them, for example, john.doe@contoso.com. Currently, there's no support for escape characters in keys.

- The same key can be used in more than one filter.

Data Factory relies on the latest general availability (GA) version of the Event Grid API. As new API versions get to the GA stage, Data Factory expands its support for more advanced filtering operators.
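To illustrate how these conditions combine, the following Python sketch evaluates a list of advanced filters against an event and checks the limits listed above. It borrows Event Grid's documented semantics as a stand-in (separate filters combine with AND, the values inside one filter act as OR, and string comparisons ignore case), so Data Factory's exact behavior may differ. The dot-separated key format such as data.Department, the helper names, and treating a missing key as a non-match are assumptions for illustration. The usage example encodes the Department, date, month, and tag conditions from the beginning of this section.

```python
import operator

# Numeric comparison operators that take a single value.
_NUMBER_COMPARE = {
    "NumberLessThan": operator.lt,
    "NumberGreaterThan": operator.gt,
    "NumberLessThanOrEquals": operator.le,
    "NumberGreaterThanOrEquals": operator.ge,
}
_IN_OPERATORS = {"NumberIn", "NumberNotIn", "StringIn", "StringNotIn"}


def _lookup(event: dict, key: str):
    """Follow a dot-separated path such as 'data.Department'; None if absent."""
    node = event
    for part in key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def _is_number(value) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def filter_matches(event: dict, flt: dict) -> bool:
    """Evaluate one advanced filter {key, operatorType, values} against an event."""
    actual = _lookup(event, flt["key"])
    op, values = flt["operatorType"], flt["values"]
    if actual is None:
        return False  # assumption: a missing key never matches
    if op == "BoolEquals":
        return isinstance(actual, bool) and actual == values[0]
    if op in _NUMBER_COMPARE:
        return _is_number(actual) and _NUMBER_COMPARE[op](actual, values[0])
    if op == "NumberIn":
        return _is_number(actual) and actual in values
    if op == "NumberNotIn":
        return _is_number(actual) and actual not in values
    if op.startswith("String"):
        if not isinstance(actual, str):
            return False
        # String comparisons ignore case (Event Grid behavior, assumed here).
        text = actual.casefold()
        wanted = [str(v).casefold() for v in values]
        if op == "StringContains":
            return any(w in text for w in wanted)
        if op == "StringBeginsWith":
            return any(text.startswith(w) for w in wanted)
        if op == "StringEndsWith":
            return any(text.endswith(w) for w in wanted)
        if op == "StringIn":
            return text in wanted
        if op == "StringNotIn":
            return text not in wanted
    raise ValueError(f"unsupported operatorType: {op}")


def all_filters_match(event: dict, filters: list) -> bool:
    # Separate filters are combined with AND.
    return all(filter_matches(event, f) for f in filters)


def check_limits(filters: list) -> list:
    """Return descriptions of any limits from the list above that are exceeded."""
    problems = []
    if len(filters) > 5:
        problems.append("more than 5 advanced filters")
    if sum(len(f["values"]) for f in filters) > 25:
        problems.append("more than 25 filter values in total")
    for f in filters:
        if f["operatorType"] in _IN_OPERATORS and len(f["values"]) > 5:
            problems.append(f"more than 5 values for {f['operatorType']} on {f['key']}")
        if any(isinstance(v, str) and len(v) > 512 for v in f["values"]):
            problems.append(f"string value over 512 characters on {f['key']}")
    return problems


if __name__ == "__main__":
    filters = [
        {"key": "data.Department", "operatorType": "StringIn", "values": ["Finance"]},
        {"key": "data.date", "operatorType": "NumberIn", "values": [1, 2, 3, 4, 5]},
        {"key": "data.month", "operatorType": "NumberNotIn", "values": [11, 12]},
        {"key": "data.tag", "operatorType": "StringContains",
         "values": ["Fiscal Year 2021", "FiscalYear2021", "FY2021"]},
    ]
    event = {"data": {"Department": "finance", "date": 3, "month": 6, "tag": "Q2 FY2021"}}
    print(check_limits(filters))  # []
    print(all_filters_match(event, filters))  # True
```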

JSON schema

The following table provides an overview of the schema elements that are related to custom event triggers.

| JSON element | Description | Type | Allowed values | Required |
|---|---|---|---|---|
| scope | The Azure Resource Manager resource ID of the Event Grid topic. | String | Azure Resource Manager ID | Yes. |
| events | The type of events that cause this trigger to fire. | Array of strings | | Yes, at least one value is expected. |
| subjectBeginsWith | The subject field must begin with the provided pattern for the trigger to fire. For example, a value of factories fires the trigger only for event subjects that start with factories. | String | | No. |
| subjectEndsWith | The subject field must end with the provided pattern for the trigger to fire. | String | | No. |
| advancedFilters | List of JSON blobs, each specifying a filter condition. Each blob specifies key, operatorType, and values. | List of JSON blobs | | No. |
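To show how these schema elements fit together, the following Python sketch assembles a trigger definition and prints it as JSON. The element names inside typeProperties come from the table above. The surrounding layout (the CustomEventsTrigger type name and the pipelines block with pipelineReference and parameters) reflects the usual Data Factory trigger JSON and is an assumption here, so compare it against a trigger exported from your own factory. All names and IDs in the example are hypothetical.

```python
import json
from typing import Optional


def custom_event_trigger(name: str, scope: str, events: list, pipeline_name: str,
                         parameters: Optional[dict] = None,
                         subject_begins_with: Optional[str] = None,
                         subject_ends_with: Optional[str] = None,
                         advanced_filters: Optional[list] = None) -> dict:
    """Assemble a custom event trigger definition (hypothetical helper)."""
    if not events:
        raise ValueError("events requires at least one value")
    type_properties = {"scope": scope, "events": list(events)}
    # Optional elements are emitted only when a value is supplied.
    if subject_begins_with:
        type_properties["subjectBeginsWith"] = subject_begins_with
    if subject_ends_with:
        type_properties["subjectEndsWith"] = subject_ends_with
    if advanced_filters:
        type_properties["advancedFilters"] = advanced_filters
    return {
        "name": name,
        "properties": {
            "type": "CustomEventsTrigger",
            "typeProperties": type_properties,
            "pipelines": [{
                "pipelineReference": {"referenceName": pipeline_name,
                                      "type": "PipelineReference"},
                "parameters": parameters or {},
            }],
        },
    }


if __name__ == "__main__":
    trigger = custom_event_trigger(
        name="CopyEventsTrigger",
        scope="/subscriptions/<subscription-id>/resourceGroups/<rg>"
              "/providers/Microsoft.EventGrid/topics/<topic>",
        events=["copycompleted", "copysucceeded"],
        pipeline_name="ProcessIncomingData",
        parameters={"inputFile": "@triggerBody().event.data.fileName"},
        subject_begins_with="factories",
        advanced_filters=[{"key": "data.Department", "operatorType": "StringIn",
                           "values": ["Finance"]}],
    )
    print(json.dumps(trigger, indent=2))
```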

Role-based access control

Data Factory uses Azure RBAC to prohibit unauthorized access. To function properly, Data Factory requires access to:

- Listen to events.

- Subscribe to updates from events.

- Trigger pipelines linked to custom events.

To successfully create or update a custom event trigger, you need to sign in to Data Factory with an Azure account that has appropriate access. Otherwise, the operation fails with the message "Access Denied."

Data Factory doesn't require special permission to your instance of Event Grid. You also don't need to assign special Azure RBAC role permissions to the Data Factory service principal for the operation.

Specifically, you need Microsoft.EventGrid/EventSubscriptions/Write permission on /subscriptions/####/resourceGroups//####/providers/Microsoft.EventGrid/topics/someTopics.

- When you author in the data factory (in the development environment, for instance), the Azure account signed in needs to have the preceding permission.

- When you publish through continuous integration and continuous delivery, the account used to publish the Azure Resource Manager template into the testing or production factory needs to have the preceding permission.
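Because Azure RBAC role assignments are inherited by child scopes, the Microsoft.EventGrid/EventSubscriptions/Write permission can come from a role assignment on the topic itself or on its resource group or subscription. The following Python sketch shows that scope check. The subscription ID, resource group, and topic names are hypothetical, and the helpers don't call Azure, so they only tell you whether a scope you already know about would cover the topic.

```python
def topic_resource_id(subscription_id: str, resource_group: str, topic: str) -> str:
    """Build the Azure Resource Manager ID of an Event Grid custom topic."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.EventGrid/topics/{topic}")


def scope_covers(assignment_scope: str, resource_id: str) -> bool:
    """True if a role assignment at assignment_scope also applies to resource_id.

    The assignment scope must be the resource itself or one of its ancestors.
    Segments are compared whole and case-insensitively, so 'rg-ev' doesn't
    cover 'rg-events'. Management group scopes aren't modeled here.
    """
    parent = [p.casefold() for p in assignment_scope.strip("/").split("/")]
    child = [p.casefold() for p in resource_id.strip("/").split("/")]
    return child[: len(parent)] == parent


if __name__ == "__main__":
    sub = "00000000-0000-0000-0000-000000000000"
    topic = topic_resource_id(sub, "rg-events", "copy-events")
    print(scope_covers(f"/subscriptions/{sub}/resourceGroups/rg-events", topic))  # True
    print(scope_covers(f"/subscriptions/{sub}/resourceGroups/rg-other", topic))  # False
```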

Related content

- Get detailed information about trigger execution.

- Learn how to reference trigger metadata in pipeline runs.