Fine-tune models with Azure AI Foundry

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Fine-tuning refers to customizing a pre-trained generative AI model with additional training on a specific task or new dataset for enhanced performance, new skills, or improved accuracy. The result is a new, custom GenAI model that's optimized based on the provided examples.

Consider fine-tuning GenAI models to:

- Scale and adapt to specific enterprise needs

- Reduce false positives as tailored models are less likely to produce inaccurate or irrelevant responses

- Enhance the model's accuracy for domain-specific tasks

- Save time and resources with faster and more precise results

- Get more relevant and context-aware outcomes as models are fine-tuned for specific use cases

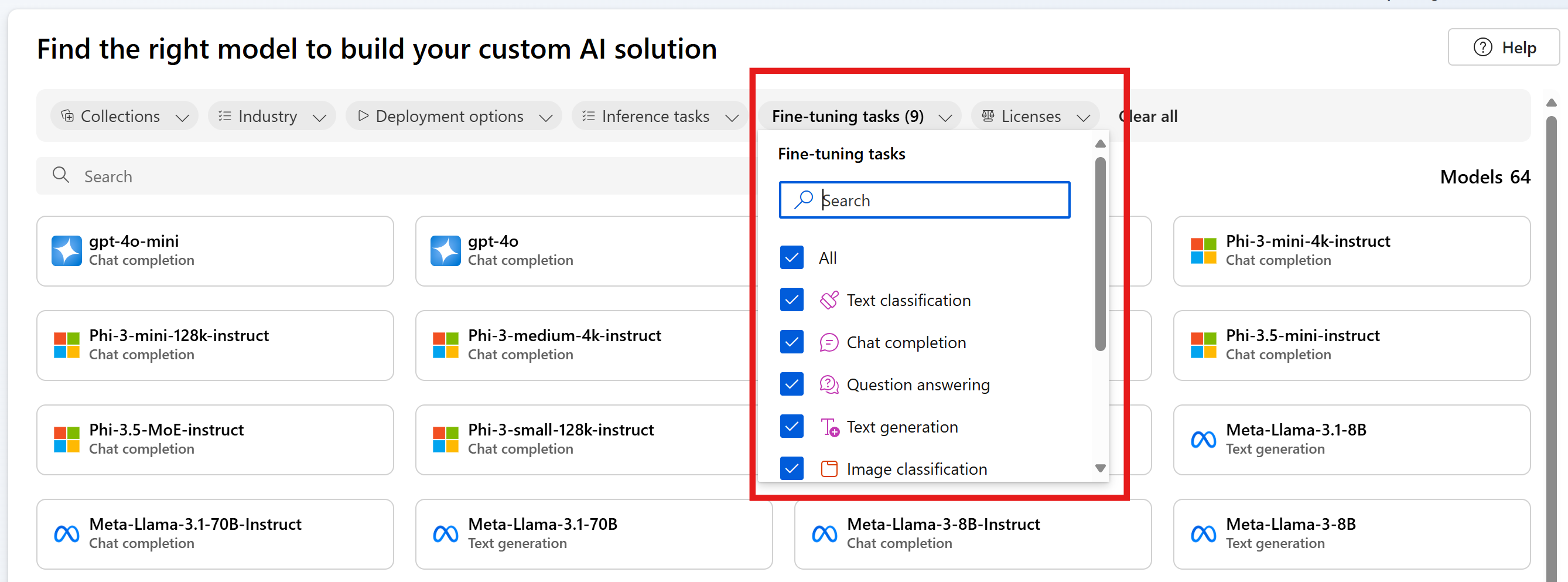

Azure AI Foundry offers models from several model providers, so you can access the latest models on the market. To discover models that support fine-tuning, use the Fine-tuning tasks filter in the model catalog, and select a model card to see detailed information about that model. Specific models might be subject to regional constraints; view this list for more details.

This article walks you through use cases for fine-tuning and explains how fine-tuning can help you in your GenAI journey.

Getting started with fine-tuning

When starting out on your generative AI journey, we recommend that you begin with prompt engineering and RAG to familiarize yourself with base models and their capabilities.

- Prompt engineering is a technique that involves designing prompts using tone and style details, example responses, and intent mapping for natural language processing models. This process improves the accuracy and relevance of the model's responses.

- Retrieval-augmented generation (RAG) improves LLM performance by retrieving data from external sources and incorporating it into a prompt. RAG can help businesses achieve customized solutions while maintaining data relevance and optimizing costs.
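The retrieval step described above can be sketched in a few lines. This is a toy illustration, not how Azure AI Foundry implements RAG: the word-overlap scoring, the function names (`score`, `retrieve`, `build_prompt`), and the sample documents are all assumptions made for this example. A real solution would typically use a search index or embeddings.

```python
def score(query, document):
    """Toy relevance score: number of distinct query words found in the document."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    return len(query_words & document_words)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents with the highest toy relevance score."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Incorporate the retrieved text into the prompt, ahead of the question."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical external data, for illustration only.
documents = [
    "Table orders has columns id, customer_id, total.",
    "Table customers has columns id, name, region.",
]
prompt = build_prompt("Which columns does the customers table have?", documents)
print(prompt)
```

Only the most relevant document is added to the prompt, which keeps the prompt short while still grounding the model in external data.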

As you get comfortable and begin building your solution, it's important to understand where prompt engineering falls short, because that helps you decide whether to try fine-tuning. Ask yourself the following questions:

- Is the base model failing on edge cases or exceptions?

- Is the base model not consistently providing output in the right format?

- Is it difficult to fit enough examples in the context window to steer the model?

- Is there high latency?

Examples of failure with the base model and prompt engineering can help you identify the data to collect for fine-tuning and establish a performance baseline that you can evaluate and compare your fine-tuned model against. Having a baseline for performance without fine-tuning is essential for knowing whether or not fine-tuning improves model performance.
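As a minimal sketch of what such a baseline comparison might look like, the following code scores base-model and fine-tuned outputs against the same held-out references. Exact-match accuracy is an assumption chosen for simplicity; the function name and the sample data are hypothetical, and your task might call for a different metric.

```python
def exact_match_accuracy(predictions, references):
    """Fraction of predictions equal to their reference, ignoring outer whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one example")
    matches = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return matches / len(references)


# Hypothetical held-out references and model outputs, for illustration only.
references = ["query A", "query B", "query C", "query D"]
base_outputs = ["query A", "wrong", "query C", "wrong"]
tuned_outputs = ["query A", "query B", "query C", "wrong"]

baseline = exact_match_accuracy(base_outputs, references)
tuned = exact_match_accuracy(tuned_outputs, references)
print(f"baseline={baseline:.2f} fine-tuned={tuned:.2f} change={tuned - baseline:+.2f}")
```

Scoring both models on the same held-out set is what makes the comparison meaningful; examples used for training shouldn't be reused for this check.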

Here's an example scenario:

A customer wants to use GPT-3.5 Turbo to turn natural language questions into queries in a specific, nonstandard query language. The customer provides guidance in the prompt ("Always return GQL") and uses RAG to retrieve the database schema. However, the syntax isn't always correct and often fails for edge cases. The customer collects thousands of examples of natural language questions and the equivalent queries for the database, including cases where the model failed before. The customer then uses that data to fine-tune the model. Combining the newly fine-tuned model with the engineered prompt and retrieval brings the accuracy of the model outputs up to acceptable standards for use.
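To make the data-collection step in this scenario more concrete, here's a sketch that turns question and query pairs into JSON Lines training records in the chat-style `messages` format used for fine-tuning chat models. The helper names and the sample pairs are hypothetical, and the queries are placeholders rather than valid statements in any real query language. Check the current training data requirements for your chosen model before you upload anything.

```python
import json

# The prompt guidance quoted in the scenario above.
SYSTEM_PROMPT = "Always return GQL"


def to_chat_record(question, query, system_prompt=SYSTEM_PROMPT):
    """Build one training example: system guidance, user question, expected query."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": query},
        ]
    }


def to_jsonl(pairs):
    """Serialize (question, query) pairs as JSON Lines, one example per line."""
    return "\n".join(
        json.dumps(to_chat_record(question, query), ensure_ascii=False)
        for question, query in pairs
    )


# Hypothetical pairs; include cases where the base model failed before.
pairs = [
    ("List all customers", "<expected query 1>"),
    ("Count orders placed in 2023", "<expected query 2>"),
]
jsonl_text = to_jsonl(pairs)
print(jsonl_text)
```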

Use cases

Base models are already pre-trained on vast amounts of data. Most of the time, you add instructions and examples to the prompt to get the quality of responses that you're looking for. This process is called "few-shot learning." Fine-tuning lets you train a model with many more examples, tailored to your specific use case, and so improves on few-shot learning. Because those examples no longer need to be in the prompt, fine-tuning can reduce the number of prompt tokens, which can lower costs and request latency.

Turning natural language into a query language is just one use case where you can show, not tell, the model how to behave. Here are some additional use cases:
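The effect on prompt length can be illustrated with a small sketch. The prompt layout, the function names, and the examples here are hypothetical, and counting whitespace-separated words is only a crude stand-in for a real tokenizer, so treat the numbers as relative rather than exact.

```python
def build_prompt(instructions, examples, question):
    """Join instructions, optional worked examples, and the new question."""
    parts = [instructions]
    parts += [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def rough_token_count(text):
    """Crude proxy for token count: whitespace-separated words."""
    return len(text.split())


instructions = "Translate the question into a query."
examples = [  # hypothetical few-shot examples
    ("How many orders are there?", "<query 1>"),
    ("List customer names", "<query 2>"),
    ("Show the largest order", "<query 3>"),
]
question = "List order totals"

few_shot_prompt = build_prompt(instructions, examples, question)
# A fine-tuned model might need only the instructions and the question.
short_prompt = build_prompt(instructions, [], question)

print(rough_token_count(few_shot_prompt), "vs", rough_token_count(short_prompt))
```

Every few-shot example is sent with every request, so the savings from removing them scale with request volume.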

- Improve the model's handling of retrieved data

- Steer the model to output content in a specific style, tone, or format

- Improve the accuracy when you look up information

- Reduce the length of your prompt

- Teach new skills (for example, natural language to code)

If you identify cost as your primary motivator, proceed with caution. Fine-tuning might reduce costs for certain use cases by shortening prompts or allowing you to use a smaller model. But there may be a higher upfront cost to training, and you have to pay for hosting your own custom model.
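A rough break-even calculation can help you weigh these costs. The sketch below is illustrative arithmetic only: every price, volume, and token count is a made-up placeholder, not actual Azure pricing, and the function names are hypothetical. It also counts prompt tokens only, which is a simplification.

```python
def monthly_cost(requests, prompt_tokens, price_per_1k_tokens, hosting_per_month=0.0):
    """Monthly prompt-token spend plus any fixed hosting fee."""
    return requests * prompt_tokens / 1000 * price_per_1k_tokens + hosting_per_month


def months_to_break_even(training_cost, base_monthly, tuned_monthly):
    """Months until monthly savings repay the upfront training cost, or None if never."""
    savings = base_monthly - tuned_monthly
    if savings <= 0:
        return None
    return training_cost / savings


# Placeholder numbers, not real prices.
base = monthly_cost(requests=100_000, prompt_tokens=2_000, price_per_1k_tokens=0.001)
tuned = monthly_cost(
    requests=100_000, prompt_tokens=500, price_per_1k_tokens=0.001, hosting_per_month=100.0
)
print(f"base={base:.2f} tuned={tuned:.2f}")
print("months to break even:", months_to_break_even(200.0, base, tuned))
```

If the hosting fee outweighs the token savings, the function returns `None`, which corresponds to the case where fine-tuning never pays for itself on cost alone.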

Steps to fine-tune a model

Here are the general steps to fine-tune a model:

1. Based on your use case, choose a model that supports your task.

2. Prepare and upload training data.

3. (Optional) Prepare and upload validation data.

4. (Optional) Configure task parameters.

5. Train your model.

6. After training completes, review the metrics and evaluate the model. If the results don't meet your benchmark, go back to step 2.

7. Use your fine-tuned model.

Fine-tuning depends heavily on the quality of the data that you provide. As a best practice, provide hundreds, if not thousands, of training examples to get your desired results.
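Because data quality matters so much, it can help to check your data locally before you upload it. The following sketch assumes chat-style JSON Lines training data with a `messages` list per line. The set of allowed roles, the function names, and the 10 percent validation split are assumptions for this example, not service requirements.

```python
import json
import random

# Assumed role set for this sketch; your model's format might allow others.
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl(text):
    """Return (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, "not valid JSON"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((number, "missing 'messages' list"))
            continue
        roles = [m.get("role") if isinstance(m, dict) else None for m in messages]
        if any(role not in ALLOWED_ROLES for role in roles):
            problems.append((number, "unexpected role"))
        elif "assistant" not in roles:
            problems.append((number, "no assistant message to learn from"))
    return problems


def split_examples(lines, validation_fraction=0.1, seed=0):
    """Shuffle reproducibly, then return (training_lines, validation_lines)."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * validation_fraction)
    return shuffled[cut:], shuffled[:cut]


good = json.dumps({"messages": [
    {"role": "user", "content": "List all customers"},
    {"role": "assistant", "content": "<expected query>"},
]})
sample = "\n".join([good, "{not json", json.dumps({"messages": []})])
print(validate_jsonl(sample))

training, validation = split_examples([good] * 20)
print(len(training), len(validation))
```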

Supported models for fine-tuning

Now that you know when to use fine-tuning for your use case, you can go to Azure AI Foundry to find models that are available to fine-tune. For some models in the model catalog, fine-tuning is available through a serverless API, managed compute (preview), or both.

Fine-tuning is available in specific Azure regions for some models that are deployed via serverless APIs. To fine-tune such models, a user must have a hub/project in the region where the model is available for fine-tuning. See Region availability for models in serverless API endpoints for detailed information.

For more information on fine-tuning using a managed compute (preview), see Fine-tune models using managed compute (preview).

For details about Azure OpenAI models that are available for fine-tuning, see the Azure OpenAI Service models documentation or the Azure OpenAI models table later in this guide.

For the Azure OpenAI Service models that you can fine-tune, supported regions for fine-tuning include North Central US, Sweden Central, and more.

Fine-tuning Azure OpenAI models

Note

gpt-35-turbo: Fine-tuning of this model is limited to a subset of regions, and isn't available in every region where the base model is available.

The supported regions for fine-tuning might vary if you use Azure OpenAI models in an Azure AI Foundry project versus outside a project.

| Model ID | Fine-tuning regions | Max request (tokens) | Training data (up to) |
|---|---|---|---|
| babbage-002 | North Central US, Sweden Central, Switzerland West | 16,384 | Sep 2021 |
| davinci-002 | North Central US, Sweden Central, Switzerland West | 16,384 | Sep 2021 |
| gpt-35-turbo (0613) | East US2, North Central US, Sweden Central, Switzerland West | 4,096 | Sep 2021 |
| gpt-35-turbo (1106) | East US2, North Central US, Sweden Central, Switzerland West | Input: 16,385; Output: 4,096 | Sep 2021 |
| gpt-35-turbo (0125) | East US2, North Central US, Sweden Central, Switzerland West | 16,385 | Sep 2021 |
| gpt-4 (0613) <sup>1</sup> | North Central US, Sweden Central | 8,192 | Sep 2021 |
| gpt-4o-mini (2024-07-18) | North Central US, Sweden Central | Input: 128,000; Output: 16,384; Training example context length: 64,536 | Oct 2023 |
| gpt-4o (2024-08-06) | East US2, North Central US, Sweden Central | Input: 128,000; Output: 16,384; Training example context length: 64,536 | Oct 2023 |

<sup>1</sup> GPT-4 is currently in public preview.
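If you need to pick a region for your hub or project, a small lookup over the table can help. The sketch below hard-codes a snapshot of the regions listed in this article; region availability changes over time, so treat the data as illustrative and confirm against the current documentation. The dictionary and function names are hypothetical.

```python
# Snapshot of the fine-tuning regions listed in this article; might be out of date.
ALL_FOUR = {"East US2", "North Central US", "Sweden Central", "Switzerland West"}
FINE_TUNING_REGIONS = {
    "babbage-002": {"North Central US", "Sweden Central", "Switzerland West"},
    "davinci-002": {"North Central US", "Sweden Central", "Switzerland West"},
    "gpt-35-turbo (0613)": set(ALL_FOUR),
    "gpt-35-turbo (1106)": set(ALL_FOUR),
    "gpt-35-turbo (0125)": set(ALL_FOUR),
    "gpt-4 (0613)": {"North Central US", "Sweden Central"},
    "gpt-4o-mini (2024-07-18)": {"North Central US", "Sweden Central"},
    "gpt-4o (2024-08-06)": {"East US2", "North Central US", "Sweden Central"},
}


def models_available_in(region):
    """Return the model IDs from the snapshot that list the given region, sorted by name."""
    return sorted(
        model for model, regions in FINE_TUNING_REGIONS.items() if region in regions
    )


def regions_supporting_all(models):
    """Return the regions from the snapshot in which every given model can be fine-tuned."""
    return sorted(set.intersection(*(FINE_TUNING_REGIONS[m] for m in models)))


print(models_available_in("Switzerland West"))
print(regions_supporting_all(["gpt-4o (2024-08-06)", "gpt-35-turbo (0125)"]))
```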