Document Intelligence invoice model

This content applies to: v4.0 (GA) | Previous versions: v3.1 (GA), v3.0 (GA), v2.1 (GA)

::: moniker-end

This content applies to: v2.1 | Latest version: v4.0 (GA)

The Document Intelligence invoice model uses powerful Optical Character Recognition (OCR) capabilities to analyze and extract key fields and line items from sales invoices, utility bills, and purchase orders. Invoices can be of various formats and quality including phone-captured images, scanned documents, and digital PDFs. The API analyzes invoice text; extracts key information such as customer name, billing address, due date, and amount due; and returns a structured JSON data representation. The model currently supports invoices in 27 languages.

Supported document types:

- Invoices

- Utility bills

- Sales orders

- Purchase orders

Automated invoice processing

Automated invoice processing is the extraction of key accounts payable fields from billing account documents. Extracted data, including invoice line items, can be integrated with your accounts payable (AP) workflows for review and payment. Historically, the accounts payable process has been performed manually and is therefore very time consuming. Accurate extraction of key data from invoices is typically the first, and one of the most critical, steps in the invoice automation process.

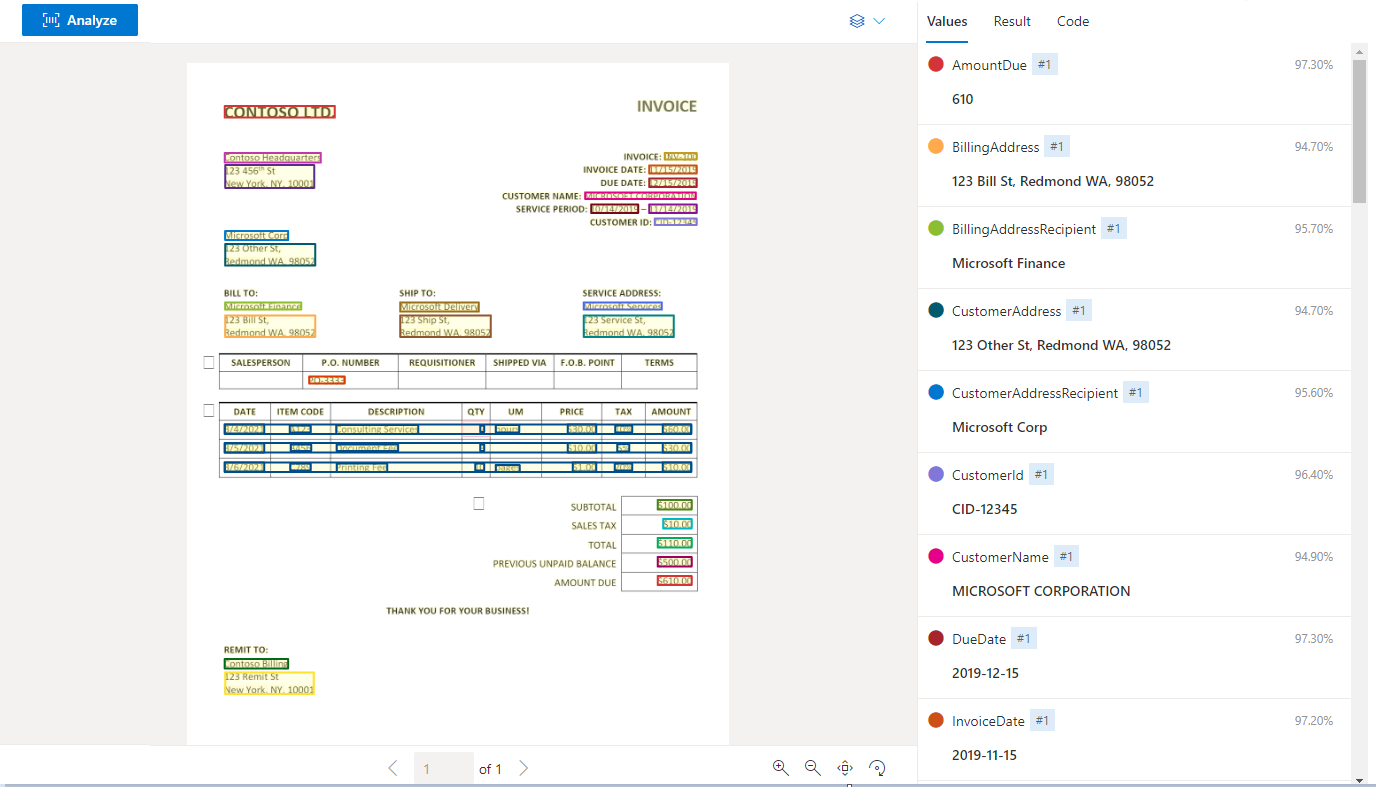

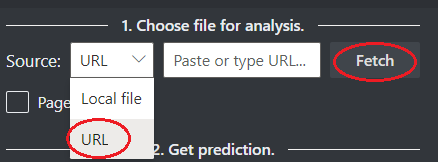

Sample invoice processed with Document Intelligence Studio:

Sample invoice processed with Document Intelligence Sample Labeling tool:

Development options

Document Intelligence v4.0: 2024-11-30 (GA) supports the following tools, applications, and libraries:

| Feature | Resources | Model ID |
|---|---|---|
| Invoice model | • Document Intelligence Studio • REST API • C# SDK • Python SDK • Java SDK • JavaScript SDK | prebuilt-invoice |
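As a rough illustration of how the model ID and API version fit into a REST call, the sketch below builds an analyze request without sending it. The URL path, the `urlSource` body property, and the `Ocp-Apim-Subscription-Key` header reflect the v4.0 REST API as understood here and should be checked against the REST reference. The endpoint, key, and document URL are placeholders, and `build_analyze_request` is a hypothetical helper name.

```python
import json
import urllib.request

API_VERSION = "2024-11-30"     # v4.0 (GA) version named above
MODEL_ID = "prebuilt-invoice"  # model ID from the table above


def build_analyze_request(endpoint: str, key: str, document_url: str) -> urllib.request.Request:
    """Build (but do not send) an analyze request for the invoice model."""
    # Assumed v4.0 route: {endpoint}/documentintelligence/documentModels/{modelId}:analyze
    url = (
        f"{endpoint.rstrip('/')}/documentintelligence/documentModels/"
        f"{MODEL_ID}:analyze?api-version={API_VERSION}"
    )
    # The document is passed by URL in the JSON body.
    body = json.dumps({"urlSource": document_url}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": key,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_analyze_request(
    "https://example-resource.cognitiveservices.azure.com",  # placeholder endpoint
    "<your-key>",                                            # placeholder key
    "https://example.com/sample-invoice.pdf",                # placeholder document URL
)
print(request.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) should start an asynchronous analysis. The result is then typically retrieved by polling the URL returned in the `Operation-Location` response header.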

Document Intelligence v3.1 supports the following tools, applications, and libraries:

| Feature | Resources | Model ID |
|---|---|---|
| Invoice model | • Document Intelligence Studio • REST API • C# SDK • Python SDK • Java SDK • JavaScript SDK | prebuilt-invoice |

Document Intelligence v3.0 supports the following tools, applications, and libraries:

| Feature | Resources | Model ID |
|---|---|---|
| Invoice model | • Document Intelligence Studio • REST API • C# SDK • Python SDK • Java SDK • JavaScript SDK | prebuilt-invoice |

Document Intelligence v2.1 supports the following tools, applications, and libraries:

| Feature | Resources |
|---|---|
| Invoice model | • Document Intelligence labeling tool • REST API • Client-library SDK • Document Intelligence Docker container |

Input requirements

Supported file formats:

| Model | PDF | Image: JPEG/JPG, PNG, BMP, TIFF, HEIF | Microsoft Office: Word (DOCX), Excel (XLSX), PowerPoint (PPTX), HTML |
|---|---|---|---|
| Read | ✔ | ✔ | ✔ |
| Layout | ✔ | ✔ | ✔ |
| General Document | ✔ | ✔ | |
| Prebuilt | ✔ | ✔ | |
| Custom extraction | ✔ | ✔ | |
| Custom classification | ✔ | ✔ | ✔ |

- For best results, provide one clear photo or high-quality scan per document.

- For PDF and TIFF, up to 2,000 pages can be processed (with a free tier subscription, only the first two pages are processed).

- The file size for analyzing documents is 500 MB for paid (S0) tier and 4 MB for free (F0) tier.

- Image dimensions must be between 50 pixels x 50 pixels and 10,000 pixels x 10,000 pixels.

- If your PDFs are password-locked, you must remove the lock before submission.

- The minimum height of the text to be extracted is 12 pixels for a 1024 x 768 pixel image. This dimension corresponds to about 8-point text at 150 dots per inch (DPI).

- For custom model training, the maximum number of pages for training data is 500 for the custom template model and 50,000 for the custom neural model.

- For custom extraction model training, the total size of training data is 50 MB for the template model and 1 GB for the neural model.

- For custom classification model training, the total size of training data is 1 GB with a maximum of 10,000 pages. For 2024-11-30 (GA), the total size of training data is 2 GB with a maximum of 10,000 pages.

- Supported file formats: JPEG, PNG, PDF, and TIFF.

- For PDF and TIFF, up to 2,000 pages are processed. For free tier subscribers, only the first two pages are processed.

- File size must be less than 50 MB, and image dimensions must be at least 50 x 50 pixels and at most 10,000 x 10,000 pixels.
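The limits above can be checked before a document is submitted. The following is a minimal sketch that uses the 500 MB (S0) and 4 MB (F0) file-size limits, the 50 to 10,000 pixel dimension range, and the PDF and image formats listed for prebuilt models. The helper name `check_input`, the extension set, and the reading of 1 MB as 1,048,576 bytes are assumptions made for illustration, not part of the service.

```python
import os
from typing import List, Optional, Tuple

MB = 1024 * 1024  # assumption: the documented "MB" is treated as 2**20 bytes
MAX_FILE_BYTES = {"S0": 500 * MB, "F0": 4 * MB}
MIN_SIDE_PX, MAX_SIDE_PX = 50, 10_000
# PDF and image formats listed for prebuilt models; the extension spellings are assumed.
PREBUILT_EXTENSIONS = {".pdf", ".jpeg", ".jpg", ".png", ".bmp", ".tiff", ".heif"}


def check_input(
    filename: str,
    size_bytes: int,
    tier: str = "S0",
    image_size: Optional[Tuple[int, int]] = None,
) -> List[str]:
    """Return a list of human-readable problems; an empty list means no problem was found."""
    problems = []
    extension = os.path.splitext(filename)[1].lower()
    if extension not in PREBUILT_EXTENSIONS:
        problems.append(f"unsupported file format: {extension or '(none)'}")
    if size_bytes > MAX_FILE_BYTES[tier]:
        problems.append(f"file exceeds the {MAX_FILE_BYTES[tier] // MB} MB limit for tier {tier}")
    if image_size is not None:
        width, height = image_size
        if not all(MIN_SIDE_PX <= side <= MAX_SIDE_PX for side in (width, height)):
            problems.append(f"image dimensions {width} x {height} are outside the allowed range")
    return problems


print(check_input("invoice.pdf", 3 * MB, tier="F0"))
print(check_input("scan.png", 1 * MB, image_size=(40, 800)))
```

The check only covers limits that can be verified locally. Page counts, password locks, and text height would need a PDF or image library and are left out of this sketch.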

Invoice model data extraction

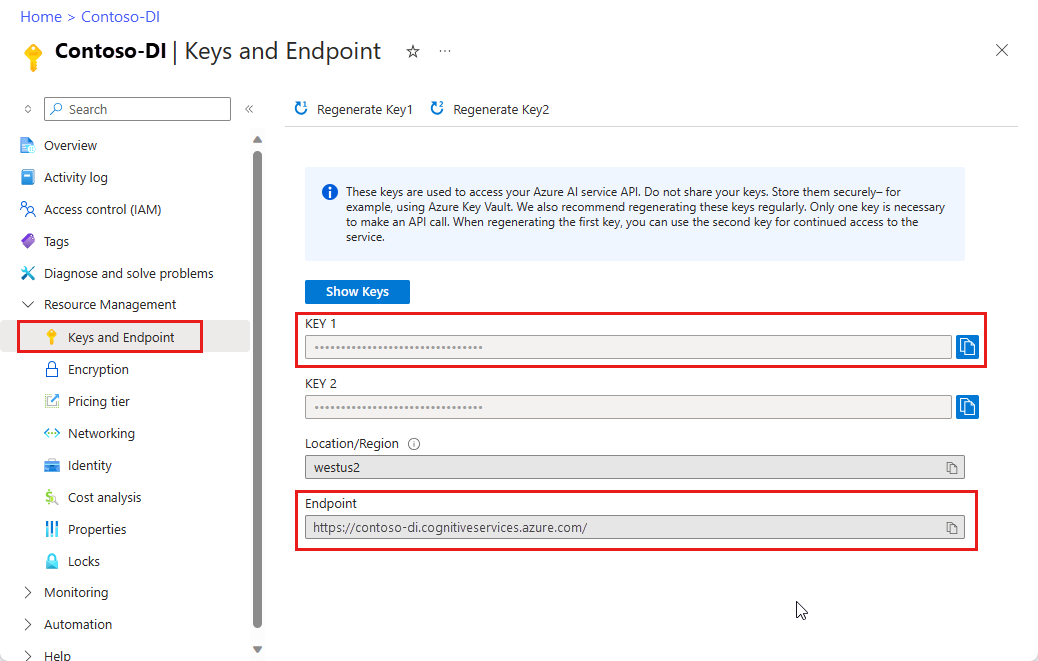

See how data, including customer information, vendor details, and line items, is extracted from invoices. You need the following resources:

- An Azure subscription. You can create one for free.

- A Document Intelligence instance in the Azure portal. You can use the free pricing tier (F0) to try the service. After your resource deploys, select Go to resource to get your key and endpoint.

On the Document Intelligence Studio home page, select Invoices.

You can analyze the sample invoice or upload your own files.

Select the Run analysis button and, if necessary, configure the Analyze options:

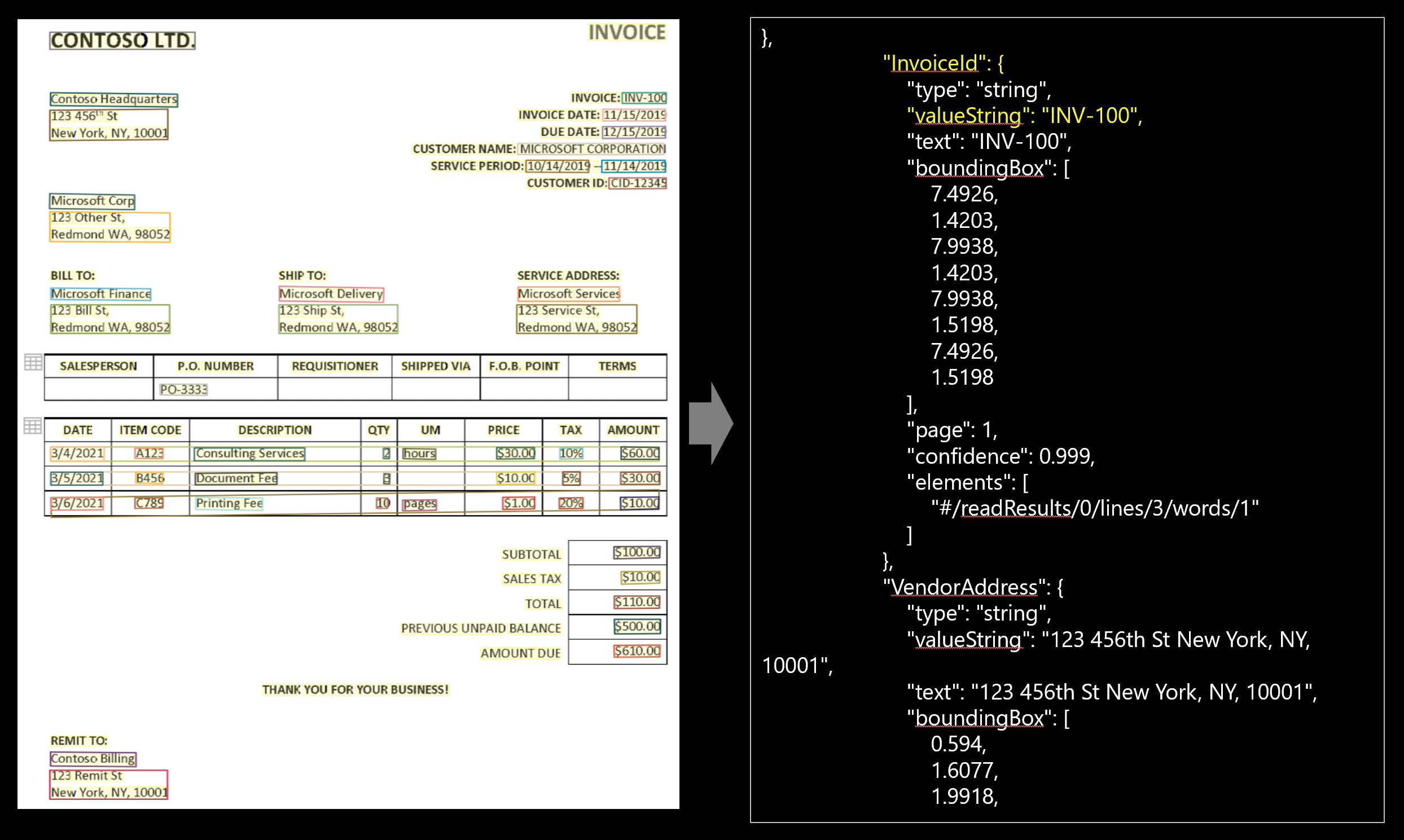

Document Intelligence Sample Labeling tool

Navigate to the Document Intelligence Sample Tool.

On the sample tool home page, select the Use prebuilt model to get data tile.

Select the Form Type to analyze from the dropdown menu.

Choose a URL for the file you would like to analyze from the below options:

In the Source field, select URL from the dropdown menu, paste the selected URL, and select the Fetch button.

In the Document Intelligence service endpoint field, paste the endpoint that you obtained with your Document Intelligence subscription.

In the key field, paste the key you obtained from your Document Intelligence resource.

Select Run analysis. The Document Intelligence Sample Labeling tool calls the Analyze Prebuilt API and analyzes the document.

View the results - see the key-value pairs extracted, line items, highlighted text extracted, and tables detected.

Note

The Sample Labeling tool does not support the BMP file format. This is a limitation of the tool, not of the Document Intelligence service.

Supported languages and locales

For a complete list of supported languages, see our prebuilt model language support page.

Field extraction

For supported document extraction fields, see the invoice model schema page in our GitHub sample repository.

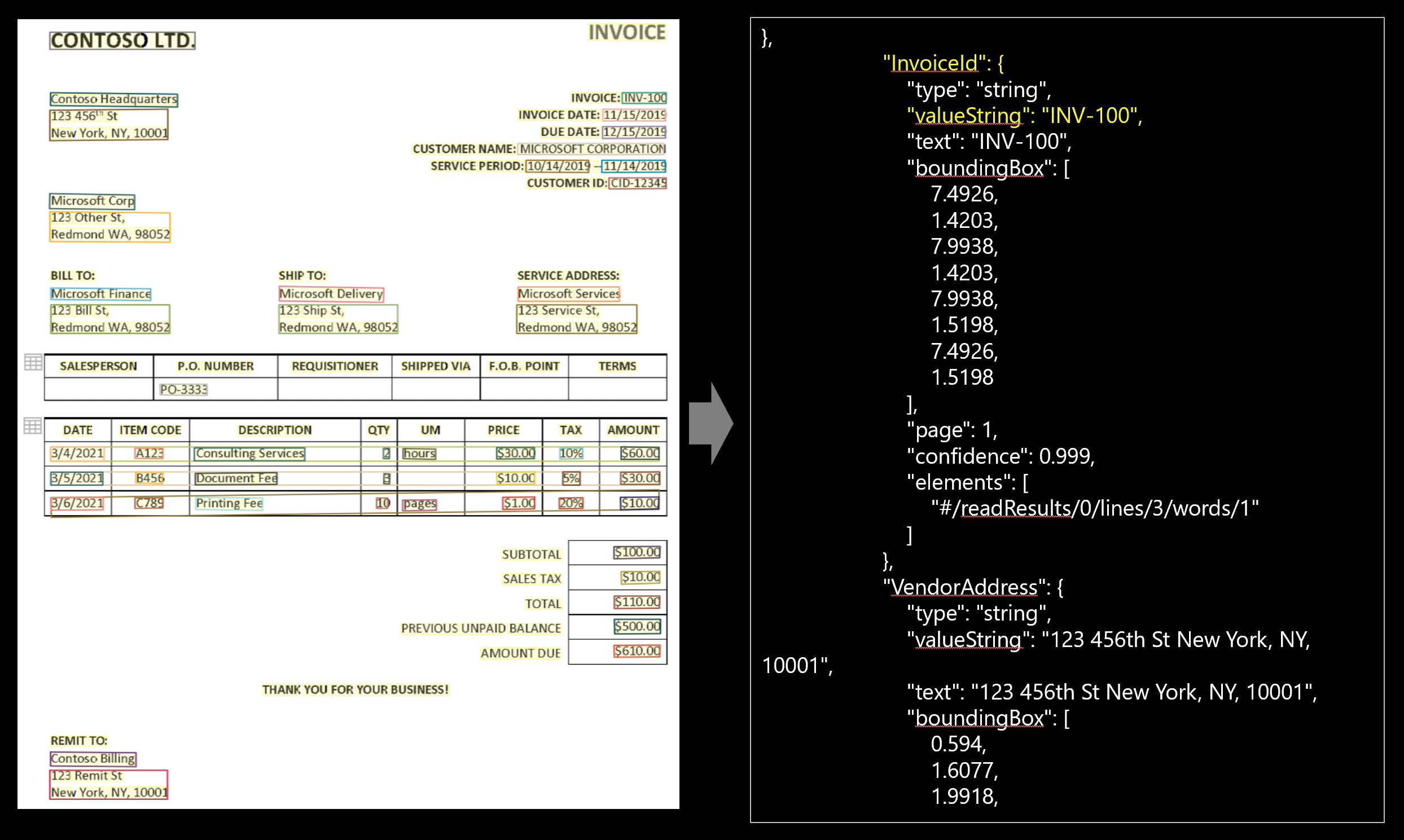

The invoice key-value pairs and line items extracted are in the documentResults section of the JSON output.
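To illustrate where the extracted values sit, the sketch below walks the documentResults section of a response and collects each field's text and confidence. The nesting under `analyzeResult`, the `fields` dictionary, and the `text` and `confidence` properties are assumptions about the v2.1 response shape. The sample response is invented for illustration and is not real service output, and `summarize_invoice` is a hypothetical helper.

```python
from typing import Dict, Optional, Tuple


def summarize_invoice(response: dict) -> Dict[str, Tuple[Optional[str], Optional[float]]]:
    """Map each extracted field name to its (text, confidence) pair."""
    summary = {}
    for document in response.get("analyzeResult", {}).get("documentResults", []):
        for name, field in document.get("fields", {}).items():
            if field is None:  # skip fields that carry no value
                continue
            summary[name] = (field.get("text"), field.get("confidence"))
    return summary


# Hand-written sample in the assumed v2.1 shape (not real output).
sample_response = {
    "status": "succeeded",
    "analyzeResult": {
        "readResults": [],
        "pageResults": [],
        "documentResults": [
            {
                "docType": "prebuilt:invoice",
                "fields": {
                    "InvoiceId": {"type": "string", "text": "INV-100", "confidence": 0.97},
                    "CustomerName": {"type": "string", "text": "Contoso Ltd.", "confidence": 0.93},
                    "InvoiceTotal": {"type": "number", "text": "$110.00", "confidence": 0.95},
                },
            }
        ],
    },
}

for field_name, (text, confidence) in summarize_invoice(sample_response).items():
    print(f"{field_name}: {text} (confidence {confidence})")
```

Reading the raw `text` keeps the sketch independent of the typed value properties, which differ by field type.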

Key-value pairs

The prebuilt invoice model supports the optional return of key-value pairs. By default, the return of key-value pairs is disabled. Key-value pairs are specific spans within the invoice that identify a label or key and its associated response or value. In an invoice, such a pair could be a field label, for example a telephone number label, and the value entered for that field. The AI model is trained to extract identifiable keys and values based on a wide variety of document types, formats, and structures.

Keys can also exist in isolation when the model detects that a key exists, with no associated value or when processing optional fields. For example, a middle name field can be left blank on a form in some instances. Key-value pairs are always spans of text contained in the document. For documents where the same value is described in different ways, for example, customer/user, the associated key is either customer or user (based on context).
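The distinction between paired and isolated keys can be sketched as follows. The code assumes the analyze result carries a `keyValuePairs` array in which each entry has a `key` object and an optional `value` object, each with a `content` string. That shape, the sample data, and the helper name `split_key_value_pairs` are assumptions for illustration only.

```python
from typing import Dict, List, Tuple


def split_key_value_pairs(analyze_result: dict) -> Tuple[Dict[str, str], List[str]]:
    """Separate keys that have a value from keys detected in isolation."""
    paired: Dict[str, str] = {}
    isolated: List[str] = []
    for pair in analyze_result.get("keyValuePairs", []):
        key_text = pair["key"]["content"]
        value = pair.get("value")  # absent when the key stands alone
        if value and value.get("content"):
            paired[key_text] = value["content"]
        else:
            isolated.append(key_text)
    return paired, isolated


# Hand-written sample (not real service output).
sample_result = {
    "keyValuePairs": [
        {"key": {"content": "Customer"}, "value": {"content": "Contoso Ltd."}, "confidence": 0.95},
        {"key": {"content": "Telephone"}, "value": {"content": "555-0100"}, "confidence": 0.91},
        {"key": {"content": "Middle name"}, "confidence": 0.80},
    ]
}

paired, isolated = split_key_value_pairs(sample_result)
print(paired)
print(isolated)
```

Treating a missing or empty value as an isolated key mirrors the blank middle name example above. A stricter pipeline might also filter entries by confidence.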

JSON output

The JSON output has three parts:

- "readResults" node contains all of the recognized text and selection marks. Text is organized by page, then by line, then by individual words.

- "pageResults" node contains the tables and cells extracted with their bounding boxes, confidence, and a reference to the lines and words in "readResults".

- "documentResults" node contains the invoice-specific values and line items that the model discovered. It's where to find all the fields from the invoice, such as invoice ID, ship to, bill to, customer, total, line items, and more.

Migration guide

- Follow our Document Intelligence v3.1 migration guide to learn how to use the v3.1 version in your applications and workflows.

::: moniker-end

Next steps

Try processing your own forms and documents with the Document Intelligence Studio.

Complete a Document Intelligence quickstart and get started creating a document processing app in the development language of your choice.

Try processing your own forms and documents with the Document Intelligence Sample Labeling tool.
