Reaching out to understand why the following exchange is being flagged as "sexual" by Azure AI Content Safety:

- Description of the flagged content.

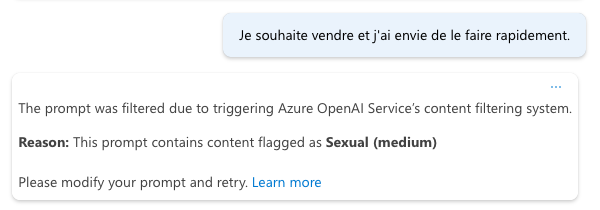

User input (French): "Je souhaite vendre et j'ai envie de le faire rapidement."

Translation: "I want to sell, and I want to do it quickly."

- Context in which the content was posted.

It was the first message sent to our chatbot.

- Reason given by Azure AI Content Safety for the flagging (if positive).

Category: Sexual, severity: medium. No further information is given on why it is being filtered out.

- Explanation of why the content is a false positive or negative.

The user input does not mention sexuality, and this false positive blocks a request that is central to our target domain. My only guess is that the sentence is somehow interpreted as semantically close to something sexual in English?

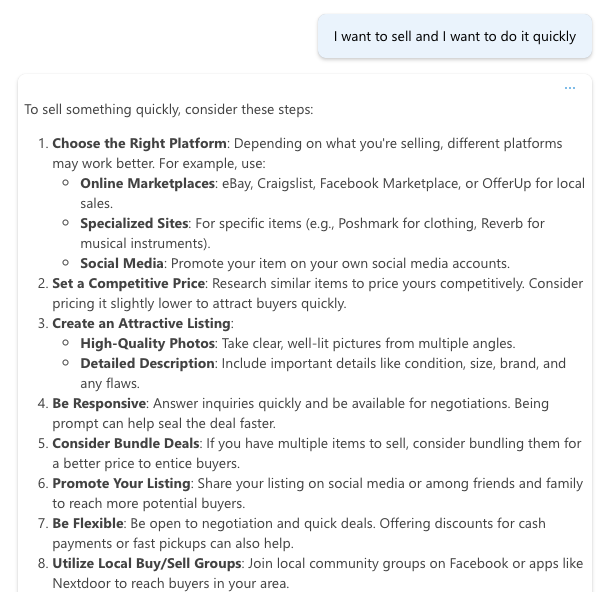

However, the same sentence in English does not seem to be flagged.

- Any adjustments already attempted by adjusting severity settings or using custom categories.

I could not adjust the severity threshold: I'm in Europe, where this does not seem to be possible, and I cannot get more information on which part of the prompt triggered the filter.
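One way to get more detail than the chatbot's error is to send both sentences directly to the standalone Content Safety text analysis operation and compare the per-category severities. The sketch below assumes the REST operation `text:analyze` with API version `2023-10-01` and the `Ocp-Apim-Subscription-Key` header; the environment variable names `CONTENT_SAFETY_ENDPOINT` and `CONTENT_SAFETY_KEY` are hypothetical. The filter in front of the chatbot may not use the same model or thresholds as this operation, so its results might differ from what the chatbot reports.

```python
import json
import os
import urllib.request

# Assumption: GA API version of the Content Safety text:analyze operation.
API_VERSION = "2023-10-01"

# The French input from the report and an English translation for comparison.
SENTENCES = {
    "fr": "Je souhaite vendre et j'ai envie de le faire rapidement.",
    "en": "I want to sell, and I want to do it quickly.",
}


def build_request(endpoint: str, key: str, text: str) -> urllib.request.Request:
    """Build a POST request asking text:analyze to score one text in all four categories."""
    url = f"{endpoint.rstrip('/')}/contentsafety/text:analyze?api-version={API_VERSION}"
    body = json.dumps({
        "text": text,
        "categories": ["Hate", "Sexual", "SelfHarm", "Violence"],
        "outputType": "FourSeverityLevels",
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,
        },
    )


def severities(response: dict) -> dict:
    """Map each category name to its severity in a text:analyze JSON response."""
    return {
        item["category"]: item["severity"]
        for item in response.get("categoriesAnalysis", [])
    }


def analyze(endpoint: str, key: str, text: str) -> dict:
    """Send one text to the service and return its per-category severities."""
    with urllib.request.urlopen(build_request(endpoint, key, text)) as resp:
        return severities(json.load(resp))


def main() -> None:
    # Hypothetical variable names; set them to your own resource's values.
    endpoint = os.environ.get("CONTENT_SAFETY_ENDPOINT")
    key = os.environ.get("CONTENT_SAFETY_KEY")
    if not endpoint or not key:
        print("Set CONTENT_SAFETY_ENDPOINT and CONTENT_SAFETY_KEY to run the comparison.")
        return
    for lang, sentence in SENTENCES.items():
        print(lang, analyze(endpoint, key, sentence))


if __name__ == "__main__":
    main()
```

Comparing the `Sexual` severity for the `fr` and `en` entries would show whether the standalone classifier reproduces the language-dependent behavior described above.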

- Screenshots or logs of the flagged content and system responses.