Export FHIR data in Azure API for FHIR

Important

Azure API for FHIR will be retired on September 30, 2026. Follow the migration strategies to transition to Azure Health Data Services FHIR® service by that date. Due to the retirement of Azure API for FHIR, new deployments won't be allowed beginning April 1, 2025. Azure Health Data Services FHIR service is the evolved version of Azure API for FHIR that enables customers to manage FHIR, DICOM, and MedTech services with integrations into other Azure services.

The Bulk Export feature allows data to be exported from the FHIR® Server per the FHIR specification.

Before using $export, make sure that the Azure API for FHIR is configured to use it. For configuring export settings and creating an Azure storage account, refer to the configure export data page.

Note

Only storage accounts in the same subscription as Azure API for FHIR can be registered as the destination for $export operations.

Using $export command

After configuring the Azure API for FHIR for export, you can use the $export command to export data out of the service. The data is stored in the storage account you specified while configuring export. To learn how to invoke the $export command in the FHIR server, see the HL7 FHIR $export specification.

Jobs stuck in a bad state

In some situations, a job may get stuck in a bad state. This can occur if the storage account permissions haven’t been set up properly. One way to validate an export is to check your storage account to see whether the corresponding files (that is, the ndjson files in the export container) are present. If they aren’t present, and there are no other export jobs running, then it's possible the current job is stuck in a bad state. In that case, cancel the export job by sending a cancellation request, and then requeue the job. By default, an export in a bad state runs for 10 minutes before the service stops it and either moves to a new job or retries the export.

The Azure API For FHIR supports $export at the following levels:

- System:

  GET https://<FHIR service base URL>/$export

- Patient:

  GET https://<FHIR service base URL>/Patient/$export

- Group of patients (Azure API for FHIR exports all related resources but doesn't export the characteristics of the group):

  GET https://<FHIR service base URL>/Group/[ID]/$export
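As a minimal sketch, the three export levels can be mapped to request URLs with a small helper. The function name `export_url`, the level names, and the base URL used in the usage note are illustrative assumptions, not part of the service.

```python
def export_url(base_url, level, group_id=None):
    """Build the $export URL for a given level (hypothetical helper)."""
    base = base_url.rstrip("/")
    if level == "system":
        return f"{base}/$export"
    if level == "patient":
        return f"{base}/Patient/$export"
    if level == "group":
        if not group_id:
            raise ValueError("group_id is required for a group-level export")
        return f"{base}/Group/{group_id}/$export"
    raise ValueError(f"unknown export level: {level!r}")
```

For example, `export_url("https://fhir.example.com", "group", "123")` yields the group-level URL for a placeholder server and a placeholder group ID.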

Data is exported in multiple files, each containing resources of only one type. The number of resources in an individual file will be limited. The maximum number of resources is based on system performance. It's currently set to 5,000, but can change. The result is that you might get multiple files for a resource type. The file names follow the format 'resourceName-number-number.ndjson'. The order of the files isn't guaranteed to correspond to any ordering of the resources in the database.
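Because one resource type can be spread across several files, a consumer typically groups the exported files by type before processing them. The sketch below assumes only the 'resourceName-number-number.ndjson' naming pattern described above. The helper name and the sample blob paths are hypothetical, and the meaning of the two numbers is deliberately not interpreted.

```python
import posixpath
import re
from collections import defaultdict

# Matches names such as "Patient-1-2.ndjson"; the two numbers are kept opaque.
_EXPORT_FILE = re.compile(r"^(?P<resource>[A-Za-z]+)-\d+-\d+\.ndjson$")


def group_by_resource_type(blob_names):
    """Group exported blob names by the resource type in the file name."""
    grouped = defaultdict(list)
    for name in blob_names:
        # Ignore any folder prefix and look only at the file name itself.
        match = _EXPORT_FILE.match(posixpath.basename(name))
        if match:
            grouped[match.group("resource")].append(name)
    return dict(grouped)
```

Files that don't match the pattern are skipped rather than treated as errors, which keeps the helper tolerant of other blobs in the same container.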

Note

Patient/$export and Group/[ID]/$export may export duplicate resources if the resource is in a compartment of more than one resource, or is in multiple groups.
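If duplicates matter downstream, one option is to de-duplicate while reading the ndjson output. The following sketch keys each resource on its resource type, ID, and version ID. That key is an assumption chosen so that distinct historical versions aren't collapsed, and the helper name is hypothetical.

```python
import json


def dedupe_resources(ndjson_lines):
    """Return parsed resources with exact duplicates removed, keeping first-seen order."""
    seen = set()
    unique = []
    for line in ndjson_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        resource = json.loads(line)
        key = (
            resource.get("resourceType"),
            resource.get("id"),
            resource.get("meta", {}).get("versionId"),
        )
        if key not in seen:
            seen.add(key)
            unique.append(resource)
    return unique
```

For very large exports, the set of seen keys grows with the number of resources, so a streaming or database-backed approach may be more suitable.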

You can also check the export status through the URL returned in the location header when the job is queued, and you can cancel the export job.
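A status check is usually run as a polling loop. The sketch below assumes the behavior described in the HL7 Bulk Data specification, where the status URL returns 202 while the job is in progress and 200 with a JSON manifest when it completes. The `fetch` callable is a hypothetical stand-in for your authenticated HTTP client and must return a `(status_code, body)` pair.

```python
import time


def poll_export(status_url, fetch, interval_seconds=30.0, max_attempts=120, sleep=time.sleep):
    """Poll the export status URL until the job completes or attempts run out."""
    for _ in range(max_attempts):
        status_code, body = fetch(status_url)
        if status_code == 200:
            return body  # completed: body is the export manifest
        if status_code != 202:
            raise RuntimeError(f"export failed with HTTP {status_code}")
        sleep(interval_seconds)  # still in progress; wait before asking again
    raise TimeoutError(f"export not finished after {max_attempts} attempts")
```

Per the same specification, cancellation is a DELETE request to the status URL. Injecting `fetch` and `sleep` keeps the loop independent of any particular HTTP library and makes it easy to exercise without a live service.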

Exporting FHIR data to ADLS Gen2

Currently we support $export for ADLS Gen2 enabled storage accounts, with the following limitations:

- Users can’t take advantage of hierarchical namespaces - there isn't a way to target an export to a specific subdirectory within a container. We only provide the ability to target a specific container (where a new folder is created for each export).

- Once an export is complete, nothing is ever exported to that folder again. Subsequent exports to the same container will be inside a newly created folder.

Settings and parameters

Headers

There are two required header parameters that must be set for $export jobs. The values are defined by the current $export specification.

- Accept - application/fhir+json

- Prefer - respond-async
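The two required headers can be attached to the request as in the following sketch, which uses Python's standard `urllib.request` and only constructs the request without sending it. The function name is hypothetical, and authentication (for example, a bearer token header) is omitted and would be needed against a real service.

```python
import urllib.request


def build_export_request(url):
    """Create a GET request carrying the two headers required for $export."""
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/fhir+json",
            "Prefer": "respond-async",
        },
        method="GET",
    )
```

Passing the result to `urllib.request.urlopen` would send it. The URL is whatever export endpoint you are targeting.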

Query parameters

The Azure API for FHIR supports the following query parameters. All of these parameters are optional.

| Query parameter | Defined by the FHIR Spec? | Description |
|---|---|---|
| _outputFormat | Yes | Currently supports three values to align to the FHIR Spec: application/fhir+ndjson, application/ndjson, or ndjson. All export jobs return ndjson and the passed value has no effect on code behavior. |
| _since | Yes | Allows you to only export resources that have been modified since the time provided. |
| _type | Yes | Allows you to specify which types of resources will be included. For example, _type=Patient would return only patient resources. |
| _typeFilter | Yes | To request finer-grained filtering, you can use _typeFilter along with the _type parameter. The value of the _typeFilter parameter is a comma-separated list of FHIR queries that further restrict the results. |
| _container | No | Specifies the container within the configured storage account where the data should be exported. If a container is specified, the data is exported into a folder in that container. If the container isn’t specified, the data is exported to a new container. |
| _till | No | Allows you to only export resources that have been modified up to the time provided. This parameter is only applicable to System-Level export. In this case, if historical versions haven't been disabled or purged, export guarantees a true snapshot view. In other words, it enables time travel. |
| includeAssociatedData | No | Allows you to export history and soft deleted resources. This filter doesn't work with the '_typeFilter' query parameter. Include the value as '_history' to export history (non-latest versioned) resources. Include the value as '_deleted' to export soft deleted resources. |
| _isparallel | No | The "_isparallel" query parameter can be added to the export operation to enhance its throughput. The value needs to be set to true to enable parallelization. Note: Using this parameter may result in an increase in request units consumption over the life of export. |
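As an illustration of how these parameters combine, the sketch below assembles a query string with Python's standard `urllib.parse.urlencode`. The function and argument names are hypothetical. The only rule it enforces is the one stated in the table, that includeAssociatedData doesn't work together with _typeFilter.

```python
from urllib.parse import urlencode


def build_export_query(types=None, type_filter=None, since=None, till=None,
                       container=None, associated_data=None, is_parallel=False):
    """Build the optional $export query string from the supported parameters."""
    if type_filter and associated_data:
        raise ValueError("includeAssociatedData can't be combined with _typeFilter")
    params = {}
    if types:
        params["_type"] = ",".join(types)
    if type_filter:
        params["_typeFilter"] = ",".join(type_filter)
    if since:
        params["_since"] = since
    if till:
        params["_till"] = till
    if container:
        params["_container"] = container
    if associated_data:
        params["includeAssociatedData"] = associated_data
    if is_parallel:
        params["_isparallel"] = "true"
    # Keep commas and colons readable; everything else is percent-encoded.
    return urlencode(params, safe=",:")
```

The result is appended to the export URL after a `?`. An empty string means no optional parameters were requested.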

Note

There is a known issue with the $export operation that could result in incomplete exports with status success. The issue occurs when the _isparallel flag is used. Export jobs executed with the _isparallel query parameter starting February 13th, 2024 are affected by this issue.

Secure Export to Azure Storage

Azure API for FHIR supports a secure export operation. Choose one of the following two options.

- Allowing Azure API for FHIR as a Microsoft Trusted Service to access the Azure storage account.

- Allowing specific IP addresses associated with Azure API for FHIR to access the Azure storage account. This option provides two different configurations depending on whether the storage account is in the same or different location as the Azure API for FHIR.

Allowing Azure API for FHIR as a Microsoft Trusted Service

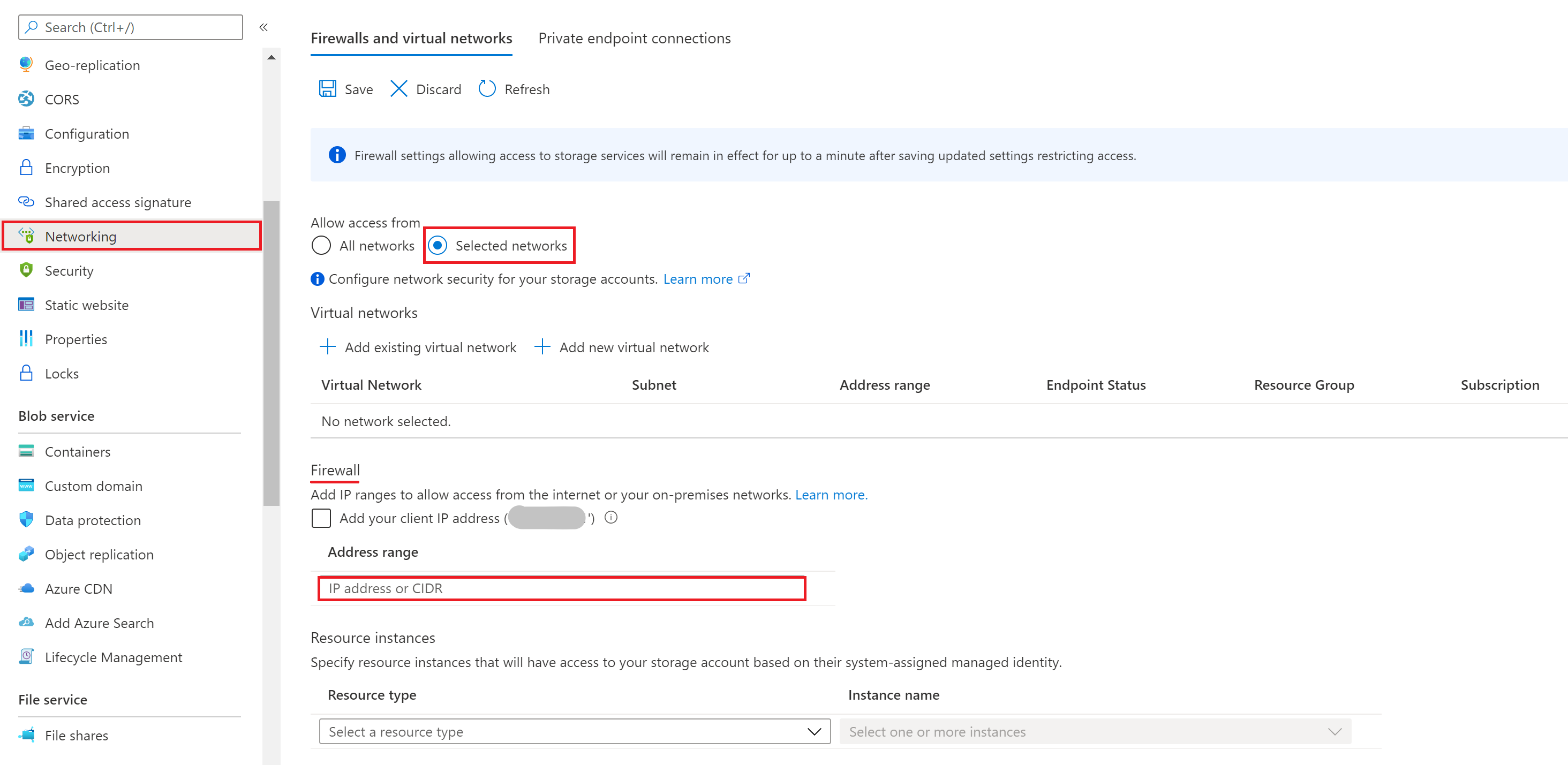

Select a storage account from the Azure portal, and then select the Networking blade. Select Selected networks under the Firewalls and virtual networks tab.

Important

Ensure that you’ve granted access permission to the storage account for Azure API for FHIR using its managed identity. For more information, see Configure export setting and set up the storage account.

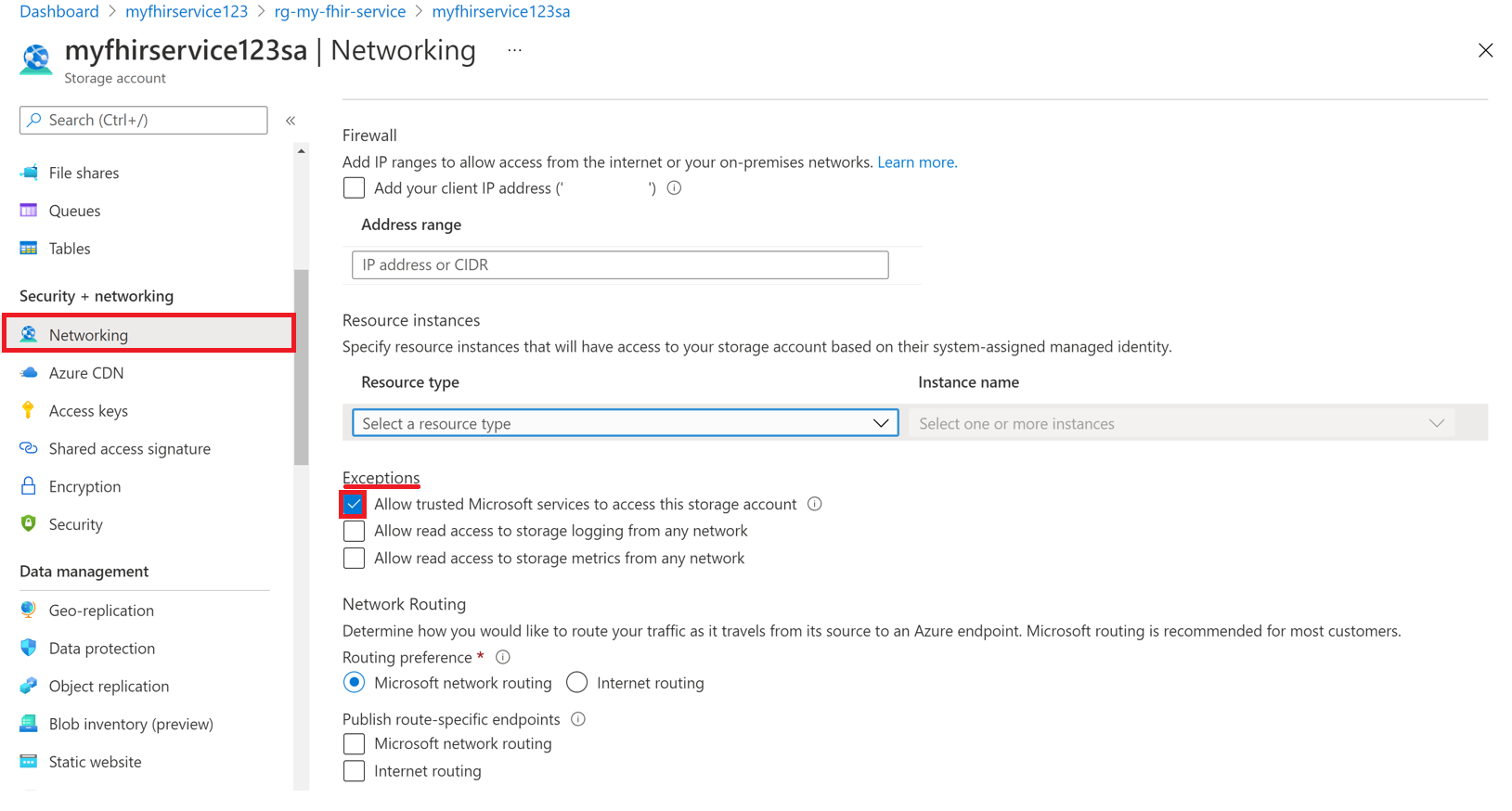

Under the Exceptions section, select the box Allow trusted Microsoft services to access this storage account and save the setting.

You're now ready to export FHIR data to the storage account securely. Note: The storage account is on selected networks and isn’t publicly accessible. To access the files, you can either enable and use private endpoints for the storage account, or enable all networks for the storage account for a short period of time.

Important

The user interface will be updated later to allow you to select the Resource type for Azure API for FHIR and a specific service instance.

Allow specific IP addresses to access the Azure storage account from other Azure regions

In the Azure portal, go to the Azure Data Lake Storage Gen2 account.

On the left menu, select Networking.

Select Enabled from selected virtual networks and IP addresses.

In the Firewall section, in the Address range box, specify the IP address. Add IP ranges to allow access from the internet or your on-premises networks. You can find the IP address in the following table for the Azure region where the FHIR service is provisioned.

| Azure region | Public IP address |
|---|---|
| Australia East | 20.53.44.80 |
| Canada Central | 20.48.192.84 |
| Central US | 52.182.208.31 |
| East US | 20.62.128.148 |
| East US 2 | 20.49.102.228 |
| East US 2 EUAP | 20.39.26.254 |
| Germany North | 51.116.51.33 |
| Germany West Central | 51.116.146.216 |
| Japan East | 20.191.160.26 |
| Korea Central | 20.41.69.51 |
| North Central US | 20.49.114.188 |
| North Europe | 52.146.131.52 |
| South Africa North | 102.133.220.197 |
| South Central US | 13.73.254.220 |
| Southeast Asia | 23.98.108.42 |
| Switzerland North | 51.107.60.95 |
| UK South | 51.104.30.170 |
| UK West | 51.137.164.94 |
| West Central US | 52.150.156.44 |
| West Europe | 20.61.98.66 |
| West US 2 | 40.64.135.77 |

Allow specific IP addresses to access the Azure storage account in the same region

The configuration process for IP addresses in the same region is just like the previous procedure, except that you use a specific IP address range in Classless Inter-Domain Routing (CIDR) format instead (that is, 100.64.0.0/10). You must specify the IP address range (100.64.0.0 to 100.127.255.255) because an IP address for the FHIR service is allocated each time you make an operation request.
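To see why the CIDR block 100.64.0.0/10 corresponds to the range 100.64.0.0 to 100.127.255.255, you can expand it with Python's standard `ipaddress` module. This is only an illustrative check; the helper name is hypothetical.

```python
import ipaddress

# The same-region range from the text above, in CIDR form.
SAME_REGION_RANGE = ipaddress.ip_network("100.64.0.0/10")


def in_same_region_range(address):
    """Return True if the address falls inside 100.64.0.0/10."""
    return ipaddress.ip_address(address) in SAME_REGION_RANGE


first_address = str(SAME_REGION_RANGE[0])    # lowest address in the block
last_address = str(SAME_REGION_RANGE[-1])    # highest address in the block
```

Here `first_address` and `last_address` evaluate to the two endpoints quoted in the text, and an address such as 10.0.2.5 falls outside the block.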

Note

It's possible to use a private IP address within the range of 10.0.2.0/24, but there's no guarantee that the operation will succeed in such a case. You can retry if the operation request fails, but until you use an IP address within the range of 100.64.0.0/10, the request won't succeed.

This network behavior for IP address ranges is by design. The alternative is to configure the storage account in a different region.

Next steps

In this article, you learned how to export FHIR resources using $export command. Next, to learn how to export de-identified data, see

Note

FHIR® is a registered trademark of HL7 and is used with the permission of HL7.