Authenticate with Azure DevOps on Databricks

Learn how to configure your Azure DevOps pipelines to provide authentication for Databricks CLI commands and API calls in your automation.

Azure DevOps authentication

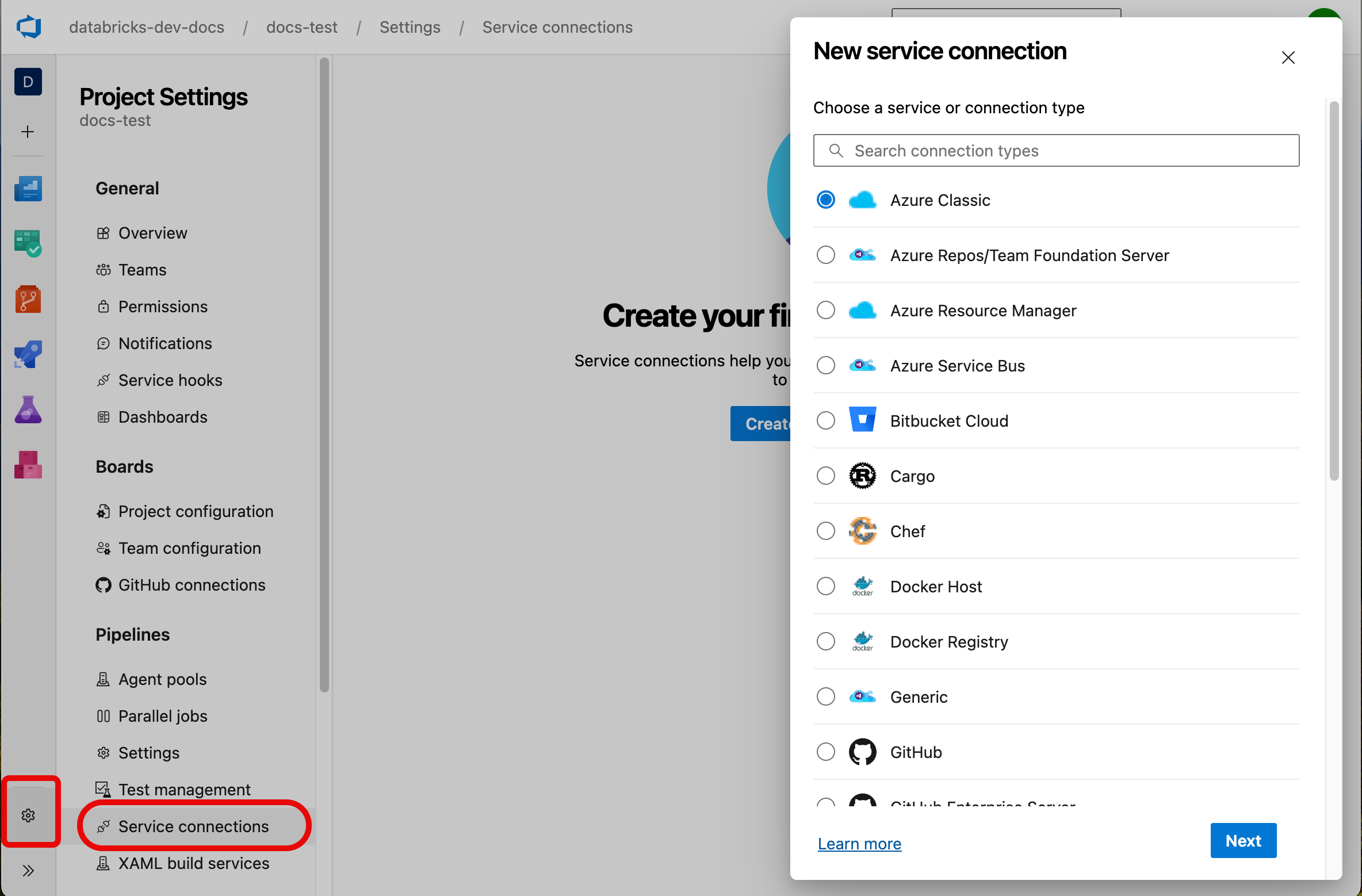

Managing authentication (first-party and third-party) in Azure DevOps is done with service connections. In the Azure DevOps portal, you can access service connections on any project page under Project settings.

To authenticate the Databricks CLI, use the Azure Resource Manager service connection type. For this type, choose one of the following authentication methods:

- Microsoft Entra workload identity federation

- Uses the OpenID Connect (OIDC) protocol to acquire tokens on behalf of a service principal.

- Requires you to configure the Azure DevOps-generated issues and subject identifier with the service principal you intend to use.

- Applicable authentication mechanism: Azure CLI.

- Microsoft Entra service principal

- Uses a client ID and an MS Entra client secret to generate a short-lived OAuth token.

- Requires you to generate a secret for the service principal you intend to use for the service connection.

- Applicable authentication mechanisms: Azure CLI, Microsoft Entra client secrets.

- Microsoft Entra ID managed identity

- Uses the identity assigned to the resource (such as compute) that the CLI is executed on. In the context of Azure DevOps, this is only relevant if you are using self-hosted runners. See Create an Azure Resource Manager service connection to a VM that uses a managed identity.

- Applicable authentication mechanisms: Azure CLI, Microsoft Entra managed identities (formerly called “MSI”).

After you’ve chosen the authentication mechanism that best matches your project’s needs, you must configure it in your Azure DevOps pipeline definition (pipeline.yml) to work with the Azure Databricks CLI.

Configure your Azure DevOps pipeline to use the Azure CLI for authentication

By default, the Azure Databricks CLI will use the Azure CLI as the mechanism to authenticate with Azure Databricks.

Note that using the Azure CLI for authentication requires all calls to the Azure Databricks CLI must be made in an AzureCLI@2 task, which means that there is no way to share an authenticated session in subsequent tasks. Each task authenticates independently, which introduces latency as they are run.

The following Azure Pipelines example configuration uses the Azure CLI to authenticate and run the Azure Databricks CLI bundle deploy command:

- task: AzureCLI@2

inputs:

azureSubscription: {your-azure-subscription-id-here}

useGlobalConfig: true

scriptType: bash

scriptLocation: inlineScript

inlineScript: |

export DATABRICKS_HOST=https://adb...

databricks bundle deploy

When configuring your Azure DevOps pipeline to use the Azure CLI to run Azure Databricks CLI commands, do the following:

- Use

azureSubscriptionto configure the service connection you want to use. - Configure

useGlobalConfigto use the defaultAZURE_CONFIG_FILEbecause thedatabricks bundlecommands use environment variable filtering for subprocesses. If this is not set, these subprocesses won’t be able to find the details of the authenticated session. - If it’s not exported already (such as in a previous step or in the bundle configuration), export the

DATABRICKS_HOSTenvironment variable.

Configure your Azure DevOps pipeline to use a Microsoft Entra client secret for authentiction

If you don’t want to use the Azure CLI for authentication because it adds too much latency, or because you need to use the Azure CLI in a different task type, use a Microsoft Entra client secret. The authentication details must be retrieved from the service connection, so you must use the AzureCLI@2 task in the declaration of your pipeline.

Use the AzureCLI@2 task to retrieve the client ID and client secret from your service connection, and then export them as environment variables. Subsequent tasks can use them directly. For an example, see Use a Microsoft Entra service principal to manage Databricks Git folders.

The following Azure Pipelines example configuration uses a Microsoft Entra client secret to authenticate and run the Azure Databricks CLI bundle deploy command:

- task: AzureCLI@2

inputs:

azureSubscription: {your-azure-subscription-id-here}

addSpnToEnvironment: true

scriptType: bash

scriptLocation: inlineScript

inlineScript: |

echo "##vso[task.setvariable variable=ARM_CLIENT_ID]${servicePrincipalId}"

echo "##vso[task.setvariable variable=ARM_CLIENT_SECRET]${servicePrincipalKey}"

echo "##vso[task.setvariable variable=ARM_TENANT_ID]${tenantId}"

- script: |

export DATABRICKS_HOST=https://adb...

databricks bundle deploy

When configuring your Azure DevOps pipeline to use the Microsoft Entra client secrets to run Azure Databricks CLI commands, do the following:

- Configure

addSpnToEnvironmentto export relevant environment variables to the inline script. - The inline script exports the task-scoped environment variables as job-scoped environment variables under names that the Azure Databricks CLI automatically picks up.

- If it’s not exported already (such as in a previous step or in the bundle configuration), export the

DATABRICKS_HOSTenvironment variable. - If you mark the

ARM_CLIENT_SECRETenvironment variable withissecret=true, you must explicitly add it to each subsequent step that needs it.- If you don’t do this, the

ARM_CLIENT_SECRETenvironment variable will be accessible to every subsequent step. - The

ARM_CLIENT_SECRETenvironment variable is masked in the output regardless of the setting.

- If you don’t do this, the

Configure your Azure DevOps pipeline to use a Microsoft Entra managed identity for authentication

Because Azure managed identity authentication depends on the virtual machine or container configuration to guarantee the Azure Databricks CLI is executed under the right identity, your Azure DevOps pipeline configuration doesn’t require that you specify the AzureCLI@2 task.

The following Azure Pipelines example configuration uses a Microsoft Entra managed identity to authenticate and run the Azure Databricks CLI bundle deploy command:

- script: |

export DATABRICKS_AZURE_RESOURCE_ID=/subscriptions/<id>/resourceGroups/<name>/providers/Microsoft.Databricks/workspaces/<name>

export ARM_CLIENT_ID=eda1f2c4-07cb-4c2c-a126-60b9bafee6d0

export ARM_USE_MSI=true

export DATABRICKS_HOST=https://adb...

databricks current-user me --log-level trace

When configuring your Azure DevOps pipeline to use the Microsoft Entra managed identities to run Azure Databricks CLI commands, do the following:

- The Microsoft Entra managed identity must be assigned the “Contributor” role in the Databricks workspace that it will access.

- The value of the

DATABRICKS_AZURE_RESOURCE_IDenvironment variable is found under Properties for the Azure Databricks instance in the Azure portal. - The value of the

ARM_CLIENT_IDenvironment variable is the client ID of the managed identity.

Note

If the DATABRICKS_HOST environment variable isn’t specified in this configuration, the value will be inferred from DATABRICKS_AZURE_RESOURCE_ID.

Install the Azure Databricks CLI from Azure Pipelines pipeline

After you’ve configured your preferred authentication mechanisms, you must install the Azure Databricks CLI on the host or agent that will run the Azure Databricks CLI commands.

# Install Databricks CLI

- script: |

curl -fsSL https://raw.githubusercontent.com/databricks/setup-cli/main/install.sh | sh

displayName: 'Install Databricks CLI'

Tip

- If you don’t want to automatically install the latest version of the Azure Databricks CLI, replace

mainin the installer URL with a specific version (for example,v0.224.0).

Best practices

Databricks recommends you use Microsoft Entra workload identity federation as the authentication method of choice. It doesn’t rely on secrets and is more secure than other authentication methods. It works automatically with the

AzureCLI@2task without any manual configuration.For more details, see Create an Azure Resource Manager service connection that uses workload identity federation.