Azure guidance for secure isolation

Microsoft Azure is a hyperscale public multitenant cloud services platform that provides you with access to a feature-rich environment incorporating the latest cloud innovations such as artificial intelligence, machine learning, IoT services, big-data analytics, intelligent edge, and many more to help you increase efficiency and unlock insights into your operations and performance.

A multitenant cloud platform implies that multiple customer applications and data are stored on the same physical hardware. Azure uses logical isolation to segregate your applications and data from other customers. This approach provides the scale and economic benefits of multitenant cloud services while rigorously helping prevent other customers from accessing your data or applications.

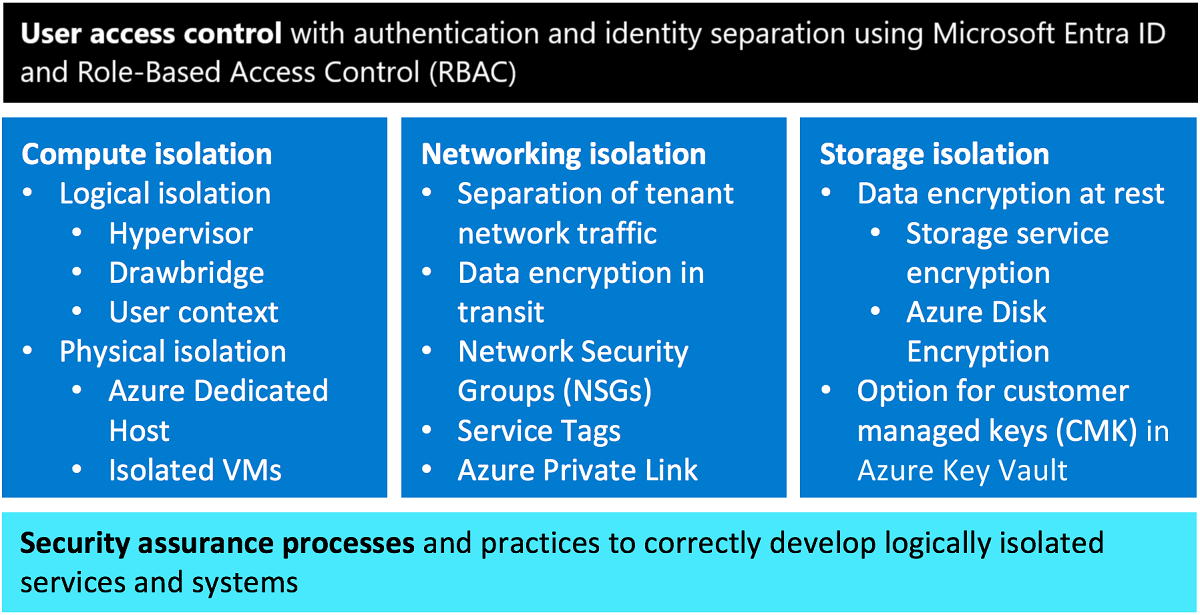

Azure addresses the perceived risk of resource sharing by providing a trustworthy foundation for assuring multitenant, cryptographically certain, logically isolated cloud services using a common set of principles:

- User access controls with authentication and identity separation

- Compute isolation for processing

- Networking isolation including data encryption in transit

- Storage isolation with data encryption at rest

- Security assurance processes embedded in service design to correctly develop logically isolated services

Multi-tenancy in the public cloud improves efficiency by multiplexing resources among disparate customers at low cost; however, this approach introduces the perceived risk associated with resource sharing. Azure addresses this risk by providing a trustworthy foundation for isolated cloud services using a multi-layered approach depicted in Figure 1.

Figure 1. Azure isolation approaches

A brief summary of isolation approaches is provided below.

User access controls with authentication and identity separation – All data in Azure irrespective of the type or storage location is associated with a subscription. A cloud tenant can be viewed as a dedicated instance of Microsoft Entra ID that your organization receives and owns when you sign up for a Microsoft cloud service. The identity and access stack helps enforce isolation among subscriptions, including limiting access to resources within a subscription only to authorized users.

Compute isolation – Azure provides you with both logical and physical compute isolation for processing. Logical isolation is implemented via:

- Hypervisor isolation for services that provide cryptographically certain isolation by using separate virtual machines and using Azure Hypervisor isolation.

- Drawbridge isolation inside a virtual machine (VM) for services that provide cryptographically certain isolation for workloads running on the same virtual machine by using isolation provided by Drawbridge. These services provide small units of processing using customer code.

- User context-based isolation for services that are composed solely of Microsoft-controlled code and in which customer code isn't allowed to run.

In addition to robust logical compute isolation available by design to all Azure tenants, if you desire physical compute isolation, you can use Azure Dedicated Host or isolated Virtual Machines, which are deployed on server hardware dedicated to a single customer.

Networking isolation – Azure Virtual Network (VNet) helps ensure that your private network traffic is logically isolated from traffic belonging to other customers. Services can communicate using public IPs or private (VNet) IPs. Communication between your VMs remains private within a VNet. You can connect your VNets via VNet peering or VPN gateways, depending on your connectivity options, including bandwidth, latency, and encryption requirements. You can use network security groups (NSGs) to achieve network isolation and protect your Azure resources from the Internet while accessing Azure services that have public endpoints. You can use Virtual Network service tags to define network access controls on network security groups or Azure Firewall. A service tag represents a group of IP address prefixes from a given Azure service. Microsoft manages the address prefixes encompassed by the service tag and automatically updates the service tag as addresses change, thereby reducing the complexity of frequent updates to network security rules. Moreover, you can use Private Link to access Azure PaaS services over a private endpoint in your VNet, ensuring that traffic between your VNet and the service travels across the Microsoft global backbone network, which eliminates the need to expose the service to the public Internet. Finally, Azure provides you with options to encrypt data in transit, including Transport Layer Security (TLS) end-to-end encryption of network traffic with TLS termination using Key Vault certificates, VPN encryption using IPsec, and Azure ExpressRoute encryption using MACsec with customer-managed keys (CMK) support.
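The way service tags simplify network security rules can be sketched in a few lines. The following is a minimal illustration, not the NSG implementation: the `SERVICE_TAGS` mapping, the `Rule` type, and the IP prefixes (documentation ranges) are all hypothetical, and the sketch denies traffic when no rule matches instead of modeling the NSG default rules. It assumes only that rules are evaluated in priority order, lowest number first, and that the first match decides.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

# Hypothetical tag-to-prefix mapping. In Azure, Microsoft manages these
# prefixes; the values here are documentation ranges, not real service ranges.
SERVICE_TAGS = {
    "Storage": ["203.0.113.0/24"],
    "Sql": ["198.51.100.0/25"],
}


@dataclass(frozen=True)
class Rule:
    priority: int          # lower numbers are evaluated first
    destination: str       # service tag name, CIDR prefix, or "*"
    port: Optional[int]    # None matches any port
    allow: bool


def matches(rule: Rule, dest_ip: str, port: int) -> bool:
    """Return True if the rule applies to this destination and port."""
    if rule.port is not None and rule.port != port:
        return False
    if rule.destination == "*":
        return True
    # A service tag expands to its current prefixes; anything else is a CIDR.
    prefixes = SERVICE_TAGS.get(rule.destination, [rule.destination])
    addr = ipaddress.ip_address(dest_ip)
    return any(addr in ipaddress.ip_network(p) for p in prefixes)


def evaluate_outbound(rules, dest_ip: str, port: int) -> bool:
    """First matching rule (by priority) decides; no match means deny here."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if matches(rule, dest_ip, port):
            return rule.allow
    return False


RULES = [
    Rule(priority=100, destination="Storage", port=443, allow=True),
    Rule(priority=4096, destination="*", port=None, allow=False),
]
```

Because the rule refers to the tag name rather than to addresses, adding a prefix to `SERVICE_TAGS["Storage"]` changes what `evaluate_outbound` allows without any edit to `RULES`, which is the maintenance benefit the paragraph above describes.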

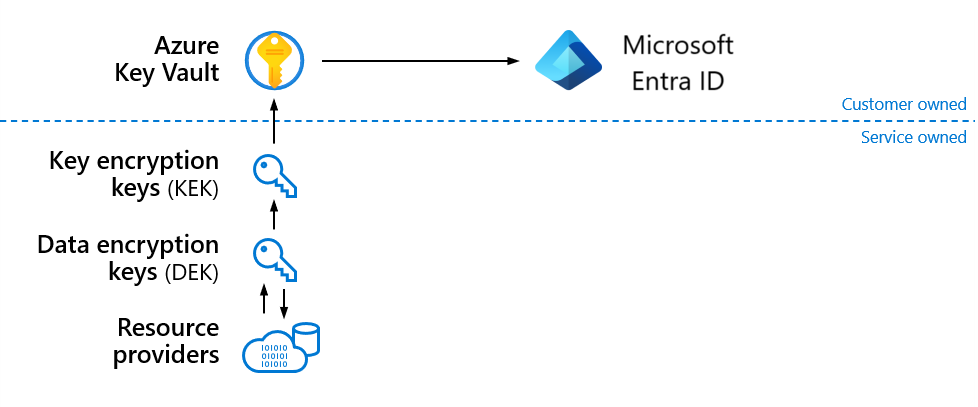

Storage isolation – To ensure cryptographic certainty of logical data isolation, Azure Storage relies on data encryption at rest using advanced algorithms with multiple ciphers. This process relies on multiple encryption keys and services such as Azure Key Vault and Microsoft Entra ID to ensure secure key access and centralized key management. Azure Storage service encryption ensures that data is automatically encrypted before persisting it to Azure Storage and decrypted before retrieval. All data written to Azure Storage is encrypted through FIPS 140 validated 256-bit AES encryption and you can use Key Vault for customer-managed keys (CMK). Azure Storage service encryption encrypts the page blobs that store Azure Virtual Machine disks. Moreover, Azure Disk encryption may optionally be used to encrypt Azure Windows and Linux IaaS Virtual Machine disks to increase storage isolation and assure cryptographic certainty of your data stored in Azure. This encryption includes managed disks.

Security assurance processes and practices – Azure isolation assurance is further enforced by Microsoft's internal use of the Security Development Lifecycle (SDL) and other strong security assurance processes to protect attack surfaces and mitigate threats. Microsoft has established industry-leading processes and tooling that provides high confidence in the Azure isolation guarantee.

In line with the shared responsibility model in cloud computing, as you migrate workloads from your on-premises datacenter to the cloud, the delineation of responsibility between you and the cloud service provider varies depending on the cloud service model. For example, with the Infrastructure as a Service (IaaS) model, Microsoft's responsibility ends at the Hypervisor layer, and you're responsible for all layers above the virtualization layer, including maintaining the base operating system in guest VMs. You can use Azure isolation technologies to achieve the desired level of isolation for your applications and data deployed in the cloud.

Throughout this article, call-out boxes outline important considerations or actions considered to be part of your responsibility. For example, you can use Azure Key Vault to store your secrets, including encryption keys that remain under your control.

Note

Use of Azure Key Vault for customer managed keys (CMK) is optional and represents your responsibility.

This article provides technical guidance to address common security and isolation concerns pertinent to cloud adoption. It also explores design principles and technologies available in Azure to help you achieve your secure isolation objectives.

Tip

For recommendations on how to improve the security of applications and data deployed on Azure, you should review the Azure Security Benchmark documentation.

Identity-based isolation

Microsoft Entra ID is an identity repository and cloud service that provides authentication, authorization, and access control for your users, groups, and objects. Microsoft Entra ID can be used as a standalone cloud directory or as an integrated solution with existing on-premises Active Directory to enable key enterprise features such as directory synchronization and single sign-on.

Each Azure subscription is associated with a Microsoft Entra tenant. Using Azure role-based access control (Azure RBAC), users, groups, and applications from that directory can be granted access to resources in the Azure subscription. For example, a storage account can be placed in a resource group to control access to that specific storage account using Microsoft Entra ID. Azure Storage defines a set of Azure built-in roles that encompass common permissions used to access blob or queue data. A request to Azure Storage can be authorized using either your Microsoft Entra account or the Storage Account Key. In this manner, only specific users can be given the ability to access data in Azure Storage.
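The scoping behavior described above, where a role assigned at a resource group controls access to a storage account inside it, can be sketched as follows. This is an illustrative model only, not how Azure RBAC is implemented: the `RoleAssignment` type, the `ROLE_ACTIONS` table, and the short action labels are assumptions made for the example, and the resource IDs are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleAssignment:
    principal: str
    role: str
    scope: str  # resource ID-style path at which the role is granted


# Simplified, hypothetical action labels for two storage data roles.
ROLE_ACTIONS = {
    "Storage Blob Data Reader": {"blob/read"},
    "Storage Blob Data Contributor": {"blob/read", "blob/write", "blob/delete"},
}


def scope_covers(assigned_scope: str, resource_id: str) -> bool:
    """An assignment applies to its own scope and to everything beneath it."""
    base = assigned_scope.rstrip("/")
    return resource_id == base or resource_id.startswith(base + "/")


def is_authorized(assignments, principal: str, action: str, resource_id: str) -> bool:
    """Allow only if some assignment for this principal covers the resource and action."""
    return any(
        a.principal == principal
        and scope_covers(a.scope, resource_id)
        and action in ROLE_ACTIONS.get(a.role, set())
        for a in assignments
    )


SUBSCRIPTION = "/subscriptions/sub-a"
RESOURCE_GROUP = SUBSCRIPTION + "/resourceGroups/rg-data"
ACCOUNT = RESOURCE_GROUP + "/providers/Microsoft.Storage/storageAccounts/acct1"

ASSIGNMENTS = [RoleAssignment("alice", "Storage Blob Data Reader", RESOURCE_GROUP)]
```

With these assignments, `alice` can read blobs in `ACCOUNT` because the account sits under the resource group scope, but she can't write, and a principal with no assignment gets nothing. Matching on a trailing `/` keeps a scope such as `rg-data` from accidentally covering a sibling named `rg-data2`.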

Zero Trust architecture

All data in Azure irrespective of the type or storage location is associated with a subscription. A cloud tenant can be viewed as a dedicated instance of Microsoft Entra ID that your organization receives and owns when you sign up for a Microsoft cloud service. Authentication to the Azure portal is performed through Microsoft Entra ID using an identity created either in Microsoft Entra ID or federated with an on-premises Active Directory. The identity and access stack helps enforce isolation among subscriptions, including limiting access to resources within a subscription only to authorized users. This access restriction is an overarching goal of the Zero Trust model, which assumes that the network is compromised and requires a fundamental shift from the perimeter security model. When evaluating access requests, all requesting users, devices, and applications should be considered untrusted until their integrity can be validated in line with the Zero Trust design principles. Microsoft Entra ID provides the strong, adaptive, standards-based identity verification required in a Zero Trust framework.

Note

Extra resources:

- To learn how to implement Zero Trust architecture on Azure, see Zero Trust Guidance Center.

- For definitions and general deployment models, see NIST SP 800-207 Zero Trust Architecture.

Microsoft Entra ID

The separation of the accounts used to administer cloud applications is critical to achieving logical isolation. Account isolation in Azure is achieved using Microsoft Entra ID and its capabilities to support granular Azure role-based access control (Azure RBAC). Each Azure account is associated with one Microsoft Entra tenant. Users, groups, and applications from that directory can manage resources in Azure. You can assign appropriate access rights using the Azure portal, Azure command-line tools, and Azure Management APIs. Each Microsoft Entra tenant is distinct and separate from other Microsoft Entra tenants. A Microsoft Entra instance is logically isolated using security boundaries to prevent customer data and identity information from commingling, thereby ensuring that users and administrators of one Microsoft Entra instance can't access or compromise data in another Microsoft Entra instance, either maliciously or accidentally. Microsoft Entra ID runs physically isolated on dedicated servers that are logically isolated to a dedicated network segment and where host-level packet filtering and Windows Firewall services provide extra protections from untrusted traffic.

Microsoft Entra ID implements extensive data protection features, including tenant isolation and access control, data encryption in transit, secrets encryption and management, disk level encryption, advanced cryptographic algorithms used by various Microsoft Entra components, data operational considerations for insider access, and more. Detailed information is available in the whitepaper Microsoft Entra Data Security Considerations.

Tenant isolation in Microsoft Entra ID involves two primary elements:

- Preventing data leakage and access across tenants, which means that data belonging to Tenant A can't in any way be obtained by users in Tenant B without explicit authorization by Tenant A.

- Resource access isolation across tenants, which means that operations performed by Tenant A can't in any way impact access to resources for Tenant B.

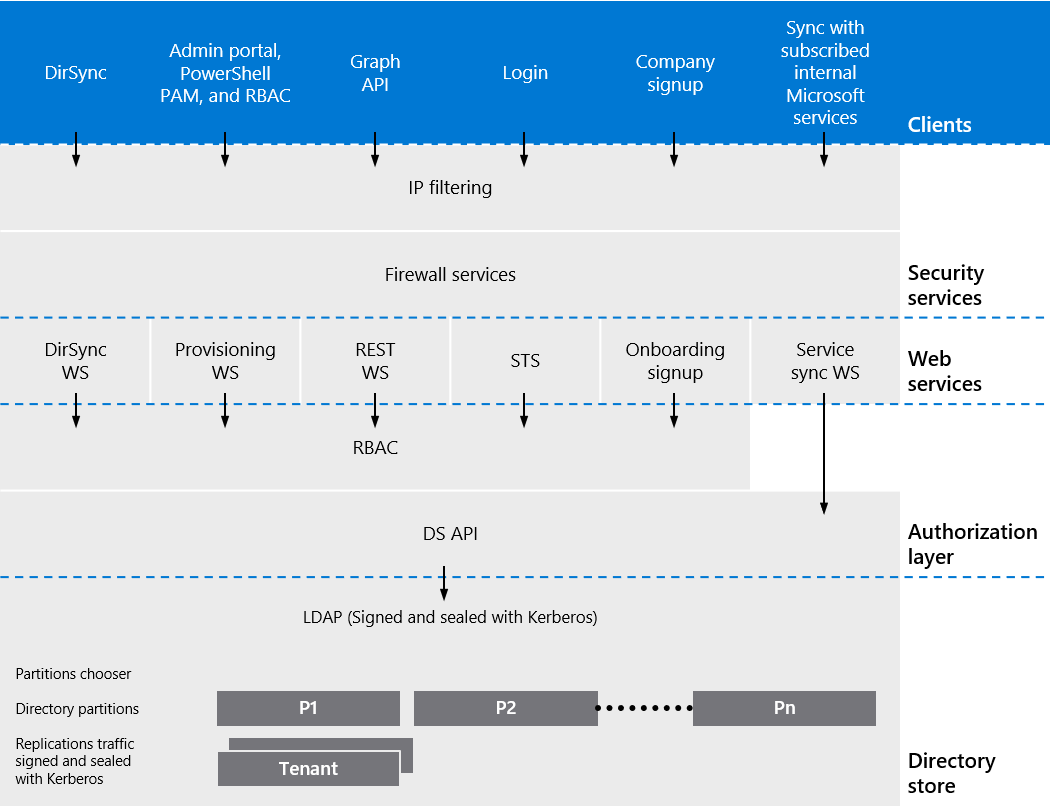

As shown in Figure 2, access via Microsoft Entra ID requires user authentication through a Security Token Service (STS). The authorization system uses information on the user's existence and enabled state through the Directory Services API and Azure RBAC to determine whether the requested access to the target Microsoft Entra instance is authorized for the user in the session. Aside from token-based authentication that is tied directly to the user, Microsoft Entra ID further supports logical isolation in Azure in the following ways:

- Microsoft Entra instances are discrete containers and there's no relationship between them.

- Microsoft Entra data is stored in partitions and each partition has a predetermined set of replicas that are considered the preferred primary replicas. Use of replicas provides high availability of Microsoft Entra services to support identity separation and logical isolation.

- Access isn't permitted across Microsoft Entra instances unless the Microsoft Entra instance administrator grants it through federation or provisioning of user accounts from other Microsoft Entra instances.

- Physical access to servers that comprise the Microsoft Entra service and direct access to Microsoft Entra ID's back-end systems is restricted to properly authorized Microsoft operational roles using the Just-In-Time (JIT) privileged access management system.

- Microsoft Entra users have no access to physical assets or locations, and therefore it isn't possible for them to bypass the logical Azure RBAC policy checks.
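The tenant boundary described in this list, in particular the rule that cross-instance access exists only when the target instance's administrator grants it, can be modeled with a small sketch. The `TenantDirectory` class and its method names are hypothetical and exist only for illustration; they don't correspond to any Microsoft Entra API.

```python
class TenantDirectory:
    """Toy model of discrete tenants with administrator-granted guest access."""

    def __init__(self):
        self._admins = {}   # tenant_id -> administrator name
        self._members = {}  # tenant_id -> set of home users
        self._guests = {}   # tenant_id -> set of (home_tenant, user) pairs

    def create_tenant(self, tenant_id, admin, users=()):
        self._admins[tenant_id] = admin
        self._members[tenant_id] = set(users) | {admin}
        self._guests[tenant_id] = set()

    def grant_guest(self, tenant_id, acting_admin, home_tenant, user):
        """Only the administrator of tenant_id can admit an external user."""
        if self._admins.get(tenant_id) != acting_admin:
            raise PermissionError("only the tenant's own administrator can grant access")
        self._guests[tenant_id].add((home_tenant, user))

    def can_access(self, tenant_id, home_tenant, user) -> bool:
        if home_tenant == tenant_id:
            return user in self._members.get(tenant_id, set())
        # Cross-tenant access exists only through an explicit grant.
        return (home_tenant, user) in self._guests.get(tenant_id, set())
```

In this model a user from tenant B has no path into tenant A until A's administrator calls `grant_guest`, and the grant is one-directional: it gives tenant A's users nothing in tenant B.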

Figure 2. Microsoft Entra logical tenant isolation

In summary, Azure's approach to logical tenant isolation uses identity, managed through Microsoft Entra ID, as the first logical control boundary for providing tenant-level access to resources and authorization through Azure RBAC.

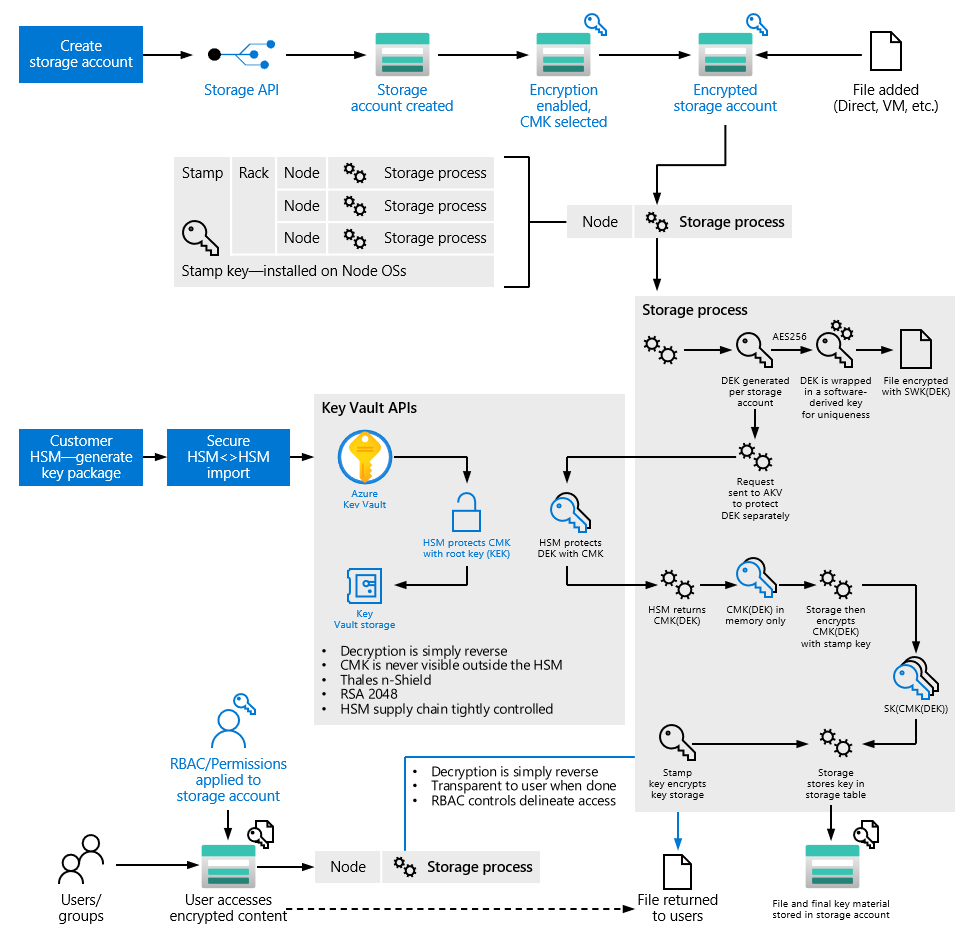

Data encryption key management

Azure has extensive support to safeguard your data using data encryption, including various encryption models:

- Server-side encryption that uses service-managed keys, customer-managed keys in Azure, or customer-managed keys on customer-controlled hardware.

- Client-side encryption that enables you to manage and store keys on premises or in another secure location.

Data encryption provides isolation assurances that are tied directly to encryption (cryptographic) key access. Since Azure uses strong ciphers for data encryption, only entities with access to cryptographic keys can have access to data. Deleting or revoking cryptographic keys renders the corresponding data inaccessible. More information about data encryption in transit is provided in the Networking isolation section, whereas data encryption at rest is covered in the Storage isolation section.

Azure enables you to enforce double encryption for both data at rest and data in transit. With this model, two or more layers of encryption are enabled to protect against compromises of any layer of encryption.

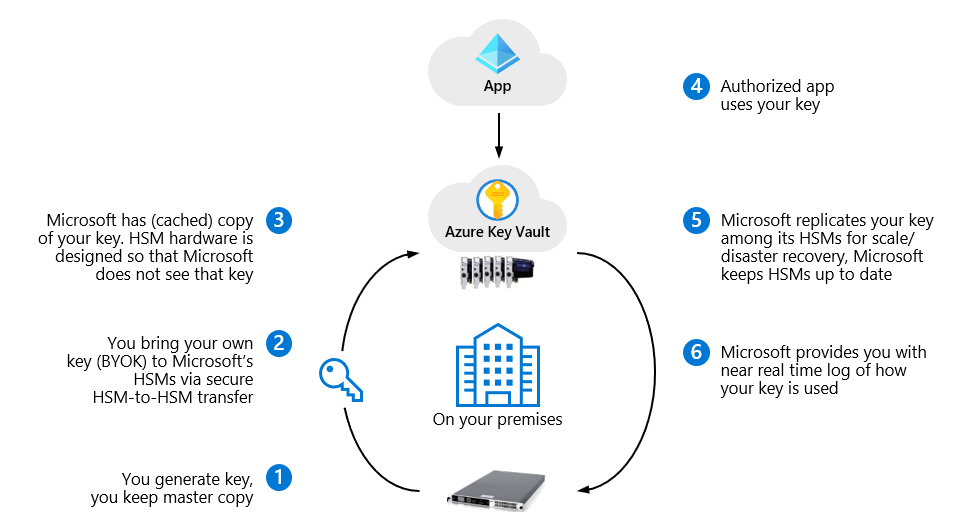

Azure Key Vault

Proper protection and management of cryptographic keys is essential for data security. Azure Key Vault is a cloud service for securely storing and managing secrets. The Key Vault service supports two resource types that are described in the rest of this section:

- Vault supports software-protected and hardware security module (HSM)-protected secrets, keys, and certificates.

- Managed HSM supports only HSM-protected cryptographic keys.

If you require extra security for your most sensitive customer data stored in Azure services, you can encrypt it using your own encryption keys you control in Key Vault.

The Key Vault service provides an abstraction over the underlying HSMs. It provides a REST API to enable service use from cloud applications and authentication through Microsoft Entra ID to allow you to centralize and customize authentication, disaster recovery, high availability, and elasticity. Key Vault supports cryptographic keys of various types, sizes, and curves, including RSA and Elliptic Curve keys. With managed HSMs, support is also available for AES symmetric keys.

With Key Vault, you can import or generate encryption keys in HSMs, ensuring that keys never leave the HSM protection boundary to support bring your own key (BYOK) scenarios, as shown in Figure 3.

Figure 3. Azure Key Vault support for bring your own key (BYOK)

Keys generated inside the Key Vault HSMs aren't exportable – there can be no clear-text version of the key outside the HSMs. This binding is enforced by the underlying HSM. BYOK functionality is available with both key vaults and managed HSMs. Methods for transferring HSM-protected keys to Key Vault vary depending on the underlying HSM, as explained in online documentation.

Note

Azure Key Vault is designed, deployed, and operated such that Microsoft and its agents are precluded from accessing, using or extracting any data stored in the service, including cryptographic keys. For more information, see How does Azure Key Vault protect your keys?

Key Vault provides a robust solution for encryption key lifecycle management. Upon creation, every key vault or managed HSM is automatically associated with the Microsoft Entra tenant that owns the subscription. Anyone trying to manage or retrieve content from a key vault or managed HSM must be properly authenticated and authorized:

- Authentication establishes the identity of the caller (user or application).

- Authorization determines which operations the caller can perform, based on a combination of Azure role-based access control (Azure RBAC) and key vault access policy or managed HSM local RBAC.

Microsoft Entra ID enforces tenant isolation and implements robust measures to prevent access by unauthorized parties, as described previously in the Microsoft Entra ID section. Access to a key vault or managed HSM is controlled through two interfaces or planes – management plane and data plane – with both planes using Microsoft Entra ID for authentication.

- Management plane enables you to manage the key vault or managed HSM itself, for example, create and delete key vaults or managed HSMs, retrieve key vault or managed HSM properties, and update access policies. For authorization, the management plane uses Azure RBAC with both key vaults and managed HSMs.

- Data plane enables you to work with the data stored in your key vaults and managed HSMs, including adding, deleting, and modifying your data. For vaults, stored data can include keys, secrets, and certificates. For managed HSMs, stored data is limited to cryptographic keys only. For authorization, the data plane uses Key Vault access policy and Azure RBAC for data plane operations with key vaults, or managed HSM local RBAC with managed HSMs.

When you create a key vault or managed HSM in an Azure subscription, it's automatically associated with the Microsoft Entra tenant of the subscription. All callers in both planes must register in this tenant and authenticate to access the key vault or managed HSM.

You control access permissions and can extract detailed activity logs from the Azure Key Vault service. Azure Key Vault logs the following information:

- All authenticated REST API requests, including failed requests

- Operations on the key vault such as creation, deletion, setting access policies, and so on.

- Operations on keys and secrets in the key vault, including a) creating, modifying, or deleting keys or secrets, and b) signing, verifying, encrypting keys, and so on.

- Unauthenticated requests such as requests that don't have a bearer token, are malformed or expired, or have an invalid token.

Note

With Azure Key Vault, you can monitor how and when your key vaults and managed HSMs are accessed and by whom.

You can also use the Azure Key Vault solution in Azure Monitor to review Key Vault logs. To use this solution, you need to enable logging of Key Vault diagnostics and direct the diagnostics to a Log Analytics workspace. With this solution, it isn't necessary to write logs to Azure Blob storage.

Note

For a comprehensive list of Azure Key Vault security recommendations, see Azure security baseline for Key Vault.

Vault

Vaults provide a multitenant, low-cost, easy to deploy, zone-resilient (where available), and highly available key management solution suitable for most common cloud application scenarios. Vaults can store and safeguard secrets, keys, and certificates. They can be either software-protected (standard tier) or HSM-protected (premium tier). For a comparison between the standard and premium tiers, see the Azure Key Vault pricing page. Software-protected secrets, keys, and certificates are safeguarded by Azure, using industry-standard algorithms and key lengths. If you require extra assurances, you can choose to safeguard your secrets, keys, and certificates in vaults protected by multitenant HSMs. The corresponding HSMs are validated according to the FIPS 140 standard, and have an overall Security Level 2 rating, which includes requirements for physical tamper evidence and role-based authentication.

Vaults enable support for customer-managed keys (CMK) where you can control your own keys in HSMs, and use them to encrypt data at rest for many Azure services. As mentioned previously, you can import or generate encryption keys in HSMs ensuring that keys never leave the HSM boundary to support bring your own key (BYOK) scenarios.

Key Vault can handle requesting and renewing certificates in vaults, including Transport Layer Security (TLS) certificates, enabling you to enroll and automatically renew certificates from supported public Certificate Authorities. Key Vault certificates support provides for the management of your X.509 certificates, which are built on top of keys and provide an automated renewal feature. A certificate owner can create a certificate through Azure Key Vault or by importing an existing certificate. Both self-signed and Certificate Authority generated certificates are supported. Moreover, the Key Vault certificate owner can implement secure storage and management of X.509 certificates without interaction with private keys.

When you create a key vault in a resource group, you can manage access by using Microsoft Entra ID, which enables you to grant access at a specific scope level by assigning the appropriate Azure roles. For example, to grant access to a user to manage key vaults, you can assign a predefined key vault Contributor role to the user at a specific scope, including subscription, resource group, or specific resource.

Important

You should control tightly who has Contributor role access to your key vaults. If a user has Contributor permissions to a key vault management plane, the user can gain access to the data plane by setting a key vault access policy.

Extra resources:

- How to secure access to a key vault.

Managed HSM

Managed HSM provides a single-tenant, fully managed, highly available, zone-resilient (where available) HSM as a service to store and manage your cryptographic keys. It's most suitable for applications and usage scenarios that handle high value keys. It also helps you meet the most stringent security, compliance, and regulatory requirements. Managed HSM uses FIPS 140 Level 3 validated HSMs to protect your cryptographic keys. Each managed HSM pool is an isolated single-tenant instance with its own security domain controlled by you and isolated cryptographically from instances belonging to other customers. Cryptographic isolation relies on Intel Software Guard Extensions (SGX) technology that provides encrypted code and data to help ensure your control over cryptographic keys.

When a managed HSM is created, the requestor also provides a list of data plane administrators. Only these administrators are able to access the managed HSM data plane to perform key operations and manage data plane role assignments (managed HSM local RBAC). The permission model for both the management and data planes uses the same syntax, but permissions are enforced at different levels, and role assignments use different scopes. Management plane Azure RBAC is enforced by Azure Resource Manager, while data plane managed HSM local RBAC is enforced by the managed HSM itself.

Important

Unlike with key vaults, granting your users management plane access to a managed HSM doesn't grant them any data plane access to keys or to data plane role assignments (managed HSM local RBAC). This isolation is implemented by design to prevent inadvertent expansion of privileges affecting access to keys stored in managed HSMs.
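The difference between the two authorization models can be made concrete with a toy comparison. The sketch below is an assumption-laden illustration, not the Key Vault service logic: the class names, the `mgmt_contributors` sets, and the role labels `admin` and `crypto-user` are hypothetical. It models only the two behaviors the text states: a vault Contributor can set an access policy and thereby reach the data plane, while managed HSM data plane roles can be assigned only by data plane administrators.

```python
class KeyVault:
    """Vault using the access policy model for data plane authorization."""

    def __init__(self):
        self.mgmt_contributors = set()  # management plane access (Azure RBAC)
        self.access_policies = {}       # principal -> data plane permissions

    def set_access_policy(self, caller, principal, permissions):
        # Management plane operation: a Contributor is allowed to do this.
        if caller not in self.mgmt_contributors:
            raise PermissionError("management plane access required")
        self.access_policies[principal] = set(permissions)

    def get_key(self, caller):
        if "get" not in self.access_policies.get(caller, set()):
            raise PermissionError("no data plane permission")
        return "key-handle"


class ManagedHsm:
    """Managed HSM with local RBAC enforced by the HSM itself."""

    def __init__(self, initial_admins):
        self.mgmt_contributors = set()  # grants nothing on the data plane
        self.local_rbac = {a: {"admin"} for a in initial_admins}

    def assign_local_role(self, caller, principal, role):
        # Data plane operation: only data plane administrators may assign roles.
        if "admin" not in self.local_rbac.get(caller, set()):
            raise PermissionError("data plane administrator required")
        self.local_rbac.setdefault(principal, set()).add(role)

    def get_key(self, caller):
        if "crypto-user" not in self.local_rbac.get(caller, set()):
            raise PermissionError("no data plane role")
        return "key-handle"


def is_denied(operation, *args) -> bool:
    """Helper: run an operation and report whether it was refused."""
    try:
        operation(*args)
    except PermissionError:
        return True
    return False
```

In this model a principal listed only in `mgmt_contributors` can call `set_access_policy` on a `KeyVault` for itself and then read keys, which is the escalation path the earlier Important note warns about. The same principal on a `ManagedHsm` is refused by both `assign_local_role` and `get_key`.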

As mentioned previously, managed HSM supports importing keys generated in your on-premises HSMs, ensuring the keys never leave the HSM protection boundary, a scenario also known as bring your own key (BYOK). Managed HSM supports integration with Azure services such as Azure Storage, Azure SQL Database, Azure Information Protection, and others. For a more complete list of Azure services that work with Managed HSM, see Data encryption models.

Managed HSM enables you to use the established Azure Key Vault API and management interfaces. You can use the same application development and deployment patterns for all your applications irrespective of the key management solution: multitenant vault or single-tenant managed HSM.

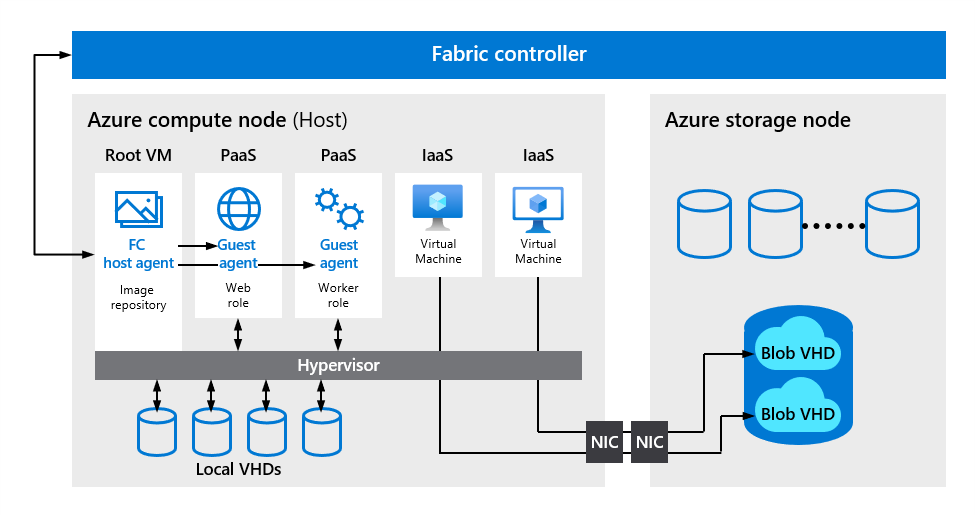

Compute isolation

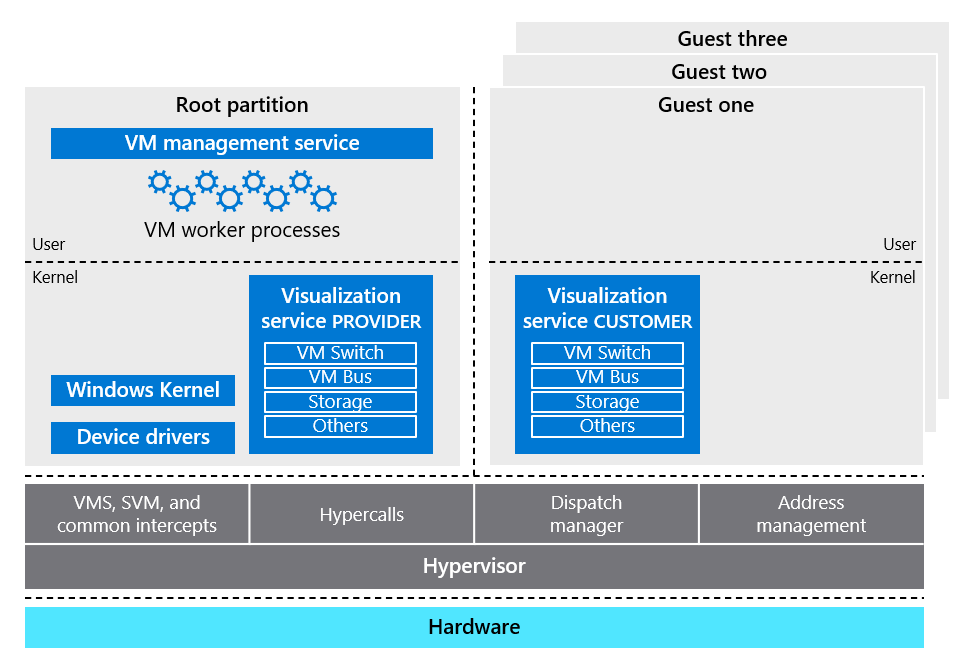

Microsoft Azure compute platform is based on machine virtualization. This approach means that your code – whether it's deployed in a PaaS worker role or an IaaS virtual machine – executes in a virtual machine hosted by a Windows Server Hyper-V hypervisor. On each Azure physical server, also known as a node, there's a Type 1 Hypervisor that runs directly over the hardware and divides the node into a variable number of Guest virtual machines (VMs), as shown in Figure 4. Each node has one special Host VM, also known as Root VM, which runs the Host OS – a customized and hardened version of the latest Windows Server, which is stripped down to reduce the attack surface and include only those components necessary to manage the node. Isolation of the Root VM from the Guest VMs and the Guest VMs from one another is a key concept in Azure security architecture that forms the basis of Azure compute isolation, as described in Microsoft online documentation.

Figure 4. Isolation of Hypervisor, Root VM, and Guest VMs

Physical servers hosting VMs are grouped into clusters, and they're independently managed by a scaled-out and redundant platform software component called the Fabric Controller (FC). Each FC manages the lifecycle of VMs running in its cluster, including provisioning and monitoring the health of the hardware under its control. For example, the FC is responsible for recreating VM instances on healthy servers when it determines that a server has failed. It also allocates infrastructure resources to tenant workloads, and it manages unidirectional communication from the Host to virtual machines. Dividing the compute infrastructure into clusters isolates faults at the FC level and prevents certain classes of errors from affecting servers beyond the cluster in which they occur.

The FC is the brain of the Azure compute platform and the Host Agent is its proxy, integrating servers into the platform so that the FC can deploy, monitor, and manage the virtual machines used by you and Azure cloud services. The Hypervisor/Host OS pairing uses decades of Microsoft's experience in operating system security, including security-focused investments in Microsoft Hyper-V to provide strong isolation of Guest VMs. Hypervisor isolation is discussed later in this section, including assurances for strongly defined security boundaries enforced by the Hypervisor, defense-in-depth exploit mitigations, and strong security assurance processes.

Management network isolation

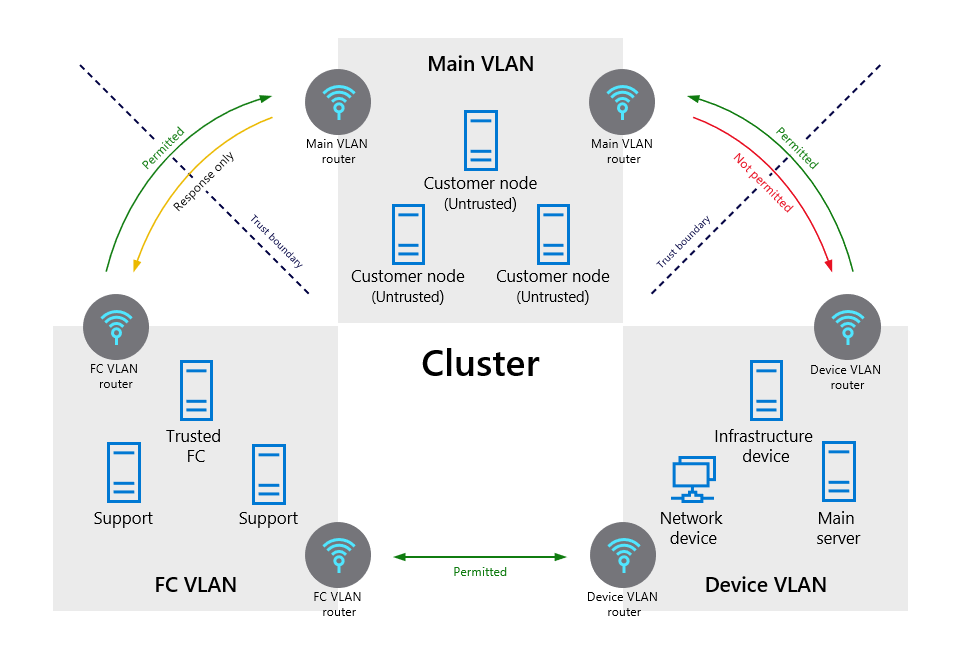

There are three Virtual Local Area Networks (VLANs) in each compute hardware cluster, as shown in Figure 5:

- Main VLAN that interconnects untrusted customer nodes,

- Fabric Controller (FC) VLAN that contains trusted FCs and supporting systems, and

- Device VLAN that contains trusted network and other infrastructure devices.

Communication is permitted from the FC VLAN to the main VLAN but can't be initiated from the main VLAN to the FC VLAN. The bridge from the FC VLAN to the Main VLAN is used to reduce the overall complexity and improve reliability/resiliency of the network. The connection is secured in several ways to ensure that commands are trusted and successfully routed:

- Communication from an FC to a Fabric Agent (FA) is unidirectional and requires mutual authentication via certificates. The FA implements a TLS-protected service that only responds to requests from the FC. It can't initiate connections to the FC or other privileged internal nodes.

- The FC treats responses from the agent service as if they were untrusted. Communication with the agent is further restricted to a set of authorized IP addresses using firewall rules on each physical node, and routing rules at the border gateways.

- Throttling is used to ensure that customer VMs can't saturate the network and prevent management commands from being routed.

Communication is also blocked from the main VLAN to the device VLAN. This way, even if a node running customer code is compromised, it can't attack nodes on either the FC or device VLANs.

These controls ensure that the management console's access to the Hypervisor is always valid and available.
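The directional rules among the VLANs can be summarized in a small sketch. It encodes only what the text states: the FC VLAN may initiate to the main VLAN, the main VLAN can't initiate to the FC or device VLANs, and an agent on the main VLAN only responds to requests from the FC. The `VlanBridge` class is hypothetical, and denying every pair the text doesn't mention is an assumption of this sketch rather than a documented rule.

```python
# Initiation rules taken from the text. Pairs that aren't listed are denied
# in this sketch; that default is an assumption, not documented behavior.
ALLOWED_INITIATION = {("fc", "main")}


class VlanBridge:
    """Tracks which VLAN opened a connection so that only replies flow back."""

    def __init__(self):
        self._open = set()  # (initiator, responder) pairs with an open connection

    def connect(self, src: str, dst: str) -> bool:
        """Attempt to initiate a connection from src VLAN to dst VLAN."""
        if (src, dst) not in ALLOWED_INITIATION:
            return False
        self._open.add((src, dst))
        return True

    def reply(self, src: str, dst: str) -> bool:
        """A reply is allowed only on a connection that dst previously opened to src."""
        return (dst, src) in self._open
```

A node on the main VLAN can answer the FC once the FC has connected, but it can never open a connection of its own toward the FC or device VLANs, which is why a compromised customer node has no route to attack them in this model.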

Figure 5. VLAN isolation

The Hypervisor and the Host OS provide network packet filters, which help ensure that untrusted VMs can't generate spoofed traffic or receive traffic not addressed to them, direct traffic to protected infrastructure endpoints, or send/receive inappropriate broadcast traffic. By default, traffic is blocked when a VM is created, and then the FC agent configures the packet filter to add rules and exceptions to allow authorized traffic. More detailed information about network traffic isolation and separation of tenant traffic is provided in the Networking isolation section.
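A default-deny filter with anti-spoofing checks, as described above, might look like the following sketch. The `VmPacketFilter` class and its rule format are hypothetical; the real filters live in the Hypervisor and Host OS and are configured by the FC agent. The sketch covers three of the stated properties: everything is blocked at creation, a VM can't send with a source address other than its own, and it can't receive traffic addressed to another VM.

```python
class VmPacketFilter:
    """Per-VM filter: blocks everything until rules are added, and rejects spoofing."""

    def __init__(self, vm_ip: str):
        self.vm_ip = vm_ip
        self._allowed = set()  # (direction, peer_ip, port) tuples

    def allow(self, direction: str, peer_ip: str, port: int) -> None:
        """Add an exception; in the text this is done by the FC agent."""
        if direction not in ("in", "out"):
            raise ValueError("direction must be 'in' or 'out'")
        self._allowed.add((direction, peer_ip, port))

    def permit_outbound(self, src_ip: str, dst_ip: str, port: int) -> bool:
        if src_ip != self.vm_ip:
            return False  # spoofed source address
        return ("out", dst_ip, port) in self._allowed

    def permit_inbound(self, src_ip: str, dst_ip: str, port: int) -> bool:
        if dst_ip != self.vm_ip:
            return False  # traffic not addressed to this VM
        return ("in", src_ip, port) in self._allowed
```

The spoofing and addressing checks run before the rule lookup, so an explicit allow rule can't be used to send traffic under another VM's address.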

Management console and management plane

The Azure Management Console and Management Plane follow strict security architecture principles of least privilege to secure and isolate tenant processing:

- Management Console (MC) – The MC in Azure Cloud is composed of the Azure portal GUI and the Azure Resource Manager API layers. They both use user credentials to authenticate and authorize all operations.

- Management Plane (MP) – This layer performs the actual management actions and is composed of the Compute Resource Provider (CRP), Fabric Controller (FC), Fabric Agent (FA), and the underlying Hypervisor, which has its own Hypervisor Agent to service communication. These layers all use system contexts that are granted the least permissions needed to perform their operations.

The Azure FC allocates infrastructure resources to tenants and manages unidirectional communications from the Host OS to Guest VMs. The VM placement algorithm of the Azure FC is highly sophisticated and nearly impossible to predict. The FA resides in the Host OS and it manages tenant VMs. The collection of the Azure Hypervisor, Host OS and FA, and customer VMs constitute a compute node, as shown in Figure 4. FCs manage FAs although FCs exist outside of compute nodes – separate FCs exist to manage compute and storage clusters. If you update your application's configuration file while running in the MC, the MC communicates through CRP with the FC, and the FC communicates with the FA.

CRP is the front-end service for Azure Compute, exposing consistent compute APIs through Azure Resource Manager, thereby enabling you to create and manage virtual machine resources and extensions via simple templates.

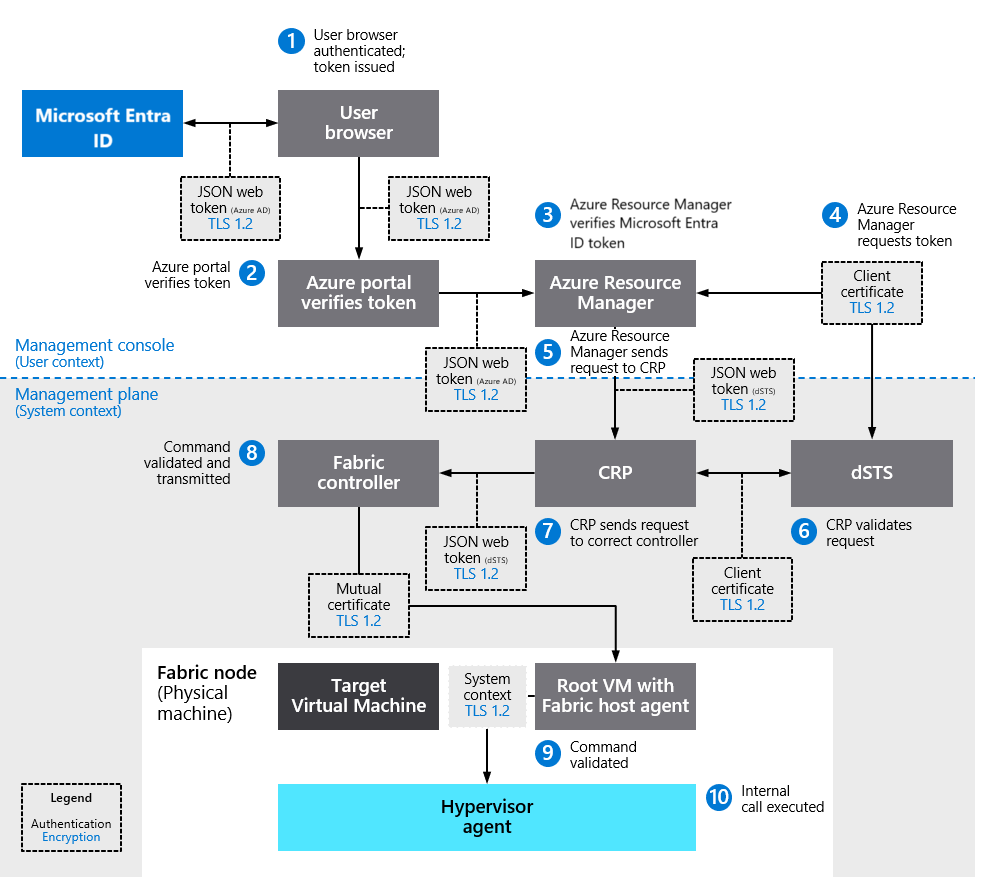

Communications among various components (for example, Azure Resource Manager to and from CRP, CRP to and from FC, FC to and from Hypervisor Agent) all operate on different communication channels with different identities and different permission sets. This design follows common least-privilege models to help ensure that a compromise of any single layer can't be used to perform further actions in other layers. Separate communication channels ensure that communications can't bypass any layer in the chain. Figure 6 illustrates how the MC and MP securely communicate within the Azure cloud for Hypervisor interaction initiated by a user's OAuth 2.0 authentication to Microsoft Entra ID.

Figure 6. Management Console and Management Plane interaction for secure management flow


All management commands are authenticated via an RSA-signed certificate or JSON Web Token (JWT). Authentication and command channels are encrypted via Transport Layer Security (TLS) 1.2 as described in the Data encryption in transit section. Server certificates are used to provide TLS connectivity to the authentication providers where a separate authorization mechanism is used, for example, Microsoft Entra ID or datacenter Security Token Service (dSTS). dSTS is a token provider like Microsoft Entra ID that is isolated to the Microsoft datacenter and used for service-level communications.

Figure 6 illustrates the management flow corresponding to a user command to stop a virtual machine. The steps enumerated in Table 1 apply to other management commands in the same way and use the same encryption and authentication flow.

Table 1. Management flow involving various MC and MP components

| Step | Description | Authentication | Encryption |
|---|---|---|---|
| 1. | User authenticates via Microsoft Entra ID by providing credentials and is issued a token. | User Credentials | TLS 1.2 |
| 2. | Browser presents token to Azure portal to authenticate user. Azure portal verifies token using token signature and valid signing keys. | JSON Web Token (Microsoft Entra ID) | TLS 1.2 |
| 3. | User issues “stop VM” request on Azure portal. Azure portal sends “stop VM” request to Azure Resource Manager and presents user's token that was provided by Microsoft Entra ID. Azure Resource Manager verifies token using token signature and valid signing keys and that the user is authorized to perform the requested operation. | JSON Web Token (Microsoft Entra ID) | TLS 1.2 |
| 4. | Azure Resource Manager requests a token from dSTS server based on the client certificate that Azure Resource Manager has, enabling dSTS to grant a JSON Web Token with the correct identity and roles. | Client Certificate | TLS 1.2 |
| 5. | Azure Resource Manager sends request to CRP. Call is authenticated via OAuth using a JSON Web Token representing the Azure Resource Manager system identity from dSTS, which marks the transition from user to system context. | JSON Web Token (dSTS) | TLS 1.2 |
| 6. | CRP validates the request and determines which fabric controller can complete the request. CRP requests a token from dSTS based on its client certificate so that it can connect to the specific Fabric Controller (FC) that is the target of the command. The token grants permissions only to that specific FC, and only if CRP is allowed to communicate with that FC. | Client Certificate | TLS 1.2 |
| 7. | CRP then sends the request to the correct FC with the JSON Web Token that was created by dSTS. | JSON Web Token (dSTS) | TLS 1.2 |
| 8. | FC then validates the command is allowed and comes from a trusted source. Then it establishes a secure TLS connection to the correct Fabric Agent (FA) in the cluster that can execute the command by using a certificate that is unique to the target FA and the FC. Once the secure connection is established, the command is transmitted. | Mutual Certificate | TLS 1.2 |
| 9. | The FA again validates the command is allowed and comes from a trusted source. Once validated, the FA will establish a secure connection using mutual certificate authentication and issue the command to the Hypervisor Agent that is only accessible by the FA. | Mutual Certificate | TLS 1.2 |
| 10. | Hypervisor Agent on the host executes an internal call to stop the VM. | System Context | N/A |

Commands that are generated at any step of the process described in this section and sent to the FC and FA on each node are written to a local audit log and distributed to multiple analytics systems for stream processing to monitor system health and track security events and patterns. Tracking includes events that were processed successfully and events that were invalid. Invalid requests are processed by the intrusion detection systems to detect anomalies.
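Steps 6 and 7 in Table 1 rely on a token that is valid only for one target component. The following minimal sketch shows the idea of audience-scoped tokens. It is an assumption-laden illustration rather than the dSTS protocol: it signs with HMAC-SHA256 from the Python standard library as a stand-in for the certificate-based signatures described above, and the function names, claim names, and identifiers such as `fc-east-01` are hypothetical.

```python
import base64
import hashlib
import hmac
import json


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _sign(key: bytes, payload: str) -> str:
    # HMAC is a stand-in here; the article describes RSA-signed tokens.
    return _b64(hmac.new(key, payload.encode(), hashlib.sha256).digest())


def issue_token(key: bytes, subject: str, audience: str, roles: list) -> str:
    """Hypothetical token service: signs claims that name one target audience."""
    claims = {"sub": subject, "aud": audience, "roles": sorted(roles)}
    payload = _b64(json.dumps(claims, sort_keys=True).encode())
    return f"{payload}.{_sign(key, payload)}"


def verify_token(key: bytes, token: str, expected_audience: str,
                 required_role: str) -> dict:
    """Checks signature, audience, and role before a command is accepted."""
    payload, _, signature = token.partition(".")
    if not hmac.compare_digest(signature.encode(), _sign(key, payload).encode()):
        raise PermissionError("signature check failed")
    padded = payload + "=" * (-len(payload) % 4)
    claims = json.loads(base64.urlsafe_b64decode(padded))
    if claims["aud"] != expected_audience:
        raise PermissionError("token was not issued for this component")
    if required_role not in claims["roles"]:
        raise PermissionError("required role was not granted")
    return claims


signing_key = b"demo-only-key"
token = issue_token(signing_key, "crp", "fc-east-01", ["vm.stop"])
claims = verify_token(signing_key, token, "fc-east-01", "vm.stop")
```

Presenting the same token to a different component (for example, a verifier that expects `fc-west-02`) raises `PermissionError` in this sketch, which mirrors the statement that the token grants permissions only to the specific FC.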

Logical isolation implementation options

Azure provides isolation of compute processing through a multi-layered approach, including:

- Hypervisor isolation for services that provide cryptographically certain isolation by using separate virtual machines and using Azure Hypervisor isolation. Examples: App Service, Azure Container Instances, Azure Databricks, Azure Functions, Azure Kubernetes Service, Azure Machine Learning, Cloud Services, Data Factory, Service Fabric, Virtual Machines, Virtual Machine Scale Sets.

- Drawbridge isolation inside a VM for services that provide cryptographically certain isolation to workloads running on the same virtual machine by using isolation provided by Drawbridge. These services provide small units of processing using customer code. To provide security isolation, Drawbridge runs a user process together with a light-weight version of the Windows kernel (library OS) inside a pico-process. A pico-process is a secured process with no direct access to services or resources of the Host system. Examples: Automation, Azure Database for MySQL, Azure Database for PostgreSQL, Azure SQL Database, Azure Stream Analytics.

- User context-based isolation for services that are composed solely of Microsoft-controlled code, where customer code isn't allowed to run. Examples: API Management, Application Gateway, Microsoft Entra ID, Azure Backup, Azure Cache for Redis, Azure DNS, Azure Information Protection, Azure IoT Hub, Azure Key Vault, Azure portal, Azure Monitor (including Log Analytics), Microsoft Defender for Cloud, Azure Site Recovery, Container Registry, Content Delivery Network, Event Grid, Event Hubs, Load Balancer, Service Bus, Storage, Virtual Network, VPN Gateway, Traffic Manager.

These logical isolation options are discussed in the rest of this section.

Hypervisor isolation

Hypervisor isolation in Azure is based on Microsoft Hyper-V technology, which enables Azure Hypervisor-based isolation to benefit from decades of Microsoft experience in operating system security and investments in Hyper-V technology for virtual machine isolation. You can review independent third-party assessment reports about Hyper-V security functions, including the National Information Assurance Partnership (NIAP) Common Criteria Evaluation and Validation Scheme (CCEVS) reports such as the report published in February 2021 that is discussed here.

The Target of Evaluation (TOE) was composed of Microsoft Windows Server, Microsoft Windows 10 version 1909 (November 2019 Update), and Microsoft Windows Server 2019 (version 1809) Hyper-V (“Windows”). TOE enforces the following security policies as described in the report:

- Security Audit – Windows has the ability to collect audit data, review audit logs, protect audit logs from overflow, and restrict access to audit logs. Audit information generated by the system includes the date and time of the event, the user identity that caused the event to be generated, and other event-specific data. Authorized administrators can review, search, and sort audit records. Authorized administrators can also configure the audit system to include or exclude potentially auditable events to be audited based on many characteristics. In the context of this evaluation, the protection profile requirements cover generating audit events, authorized review of stored audit records, and providing secure storage for audit event entries.

- Cryptographic Support – Windows provides validated cryptographic functions that support encryption/decryption, cryptographic signatures, cryptographic hashing, and random number generation. Windows implements these functions in support of IPsec, TLS, and HTTPS protocol implementation. Windows also ensures that its Guest VMs have access to entropy data so that virtualized operating systems can ensure the implementation of strong cryptography.

- User Data Protection – Windows makes certain computing services available to Guest VMs but implements measures to ensure that access to these services is granted on an appropriate basis and that these interfaces don't result in unauthorized data leakage between Guest VMs and Windows or between multiple Guest VMs.

- Identification and Authentication – Windows offers several methods of user authentication, including X.509 certificates needed for trusted protocols. Windows implements password strength mechanisms and ensures that excessive failed authentication attempts using methods subject to brute force guessing (password, PIN) result in lockout behavior.

- Security Management – Windows includes several functions to manage security policies. Access to administrative functions is enforced through administrative roles. Windows also has the ability to support the separation of management and operational networks and to prohibit data sharing between Guest VMs.

- Protection of the TOE Security Functions (TSF) – Windows implements various self-protection mechanisms to ensure that it can't be used as a platform to gain unauthorized access to data stored on a Guest VM, that the integrity of both the TSF and its Guest VMs is maintained, and that Guest VMs are accessed solely through well-documented interfaces.

- TOE Access – In the context of this evaluation, Windows allows an authorized administrator to configure the system to display a logon banner before the logon dialog.

- Trusted Path/Channels – Windows implements IPsec, TLS, and HTTPS trusted channels and paths for remote administration, transfer of audit data to the operational environment, and separation of management and operational networks.

More information is available from the third-party certification report.

The critical Hypervisor isolation is provided through:

- Strongly defined security boundaries enforced by the Hypervisor

- Defense-in-depth exploit mitigations

- Strong security assurance processes

These technologies are described in the rest of this section. They enable Azure Hypervisor to offer strong security assurances for tenant separation in a multitenant cloud.

Strongly defined security boundaries

Your code executes in a Hypervisor VM and benefits from Hypervisor enforced security boundaries, as shown in Figure 7. Azure Hypervisor is based on Microsoft Hyper-V technology. It divides an Azure node into a variable number of Guest VMs that have separate address spaces where they can load an operating system (OS) and applications operating in parallel to the Host OS that executes in the Root partition of the node.

Figure 7. Compute isolation with Azure Hypervisor (see online glossary of terms)


The Azure Hypervisor acts like a micro-kernel, passing all hardware access requests from Guest VMs using a Virtualization Service Client (VSC) to the Host OS for processing by using a shared-memory interface called VMBus. The Host OS proxies the hardware requests using a Virtualization Service Provider (VSP) that prevents users from obtaining raw read/write/execute access to the system and mitigates the risk of sharing system resources. The privileged Root partition, also known as Host OS, has direct access to the physical devices/peripherals on the system, for example, storage controllers, GPUs, networking adapters, and so on. The Host OS allows Guest partitions to share the use of these physical devices by exposing virtual devices to each Guest partition. So, an operating system executing in a Guest partition has access to virtualized peripheral devices that are provided by VSPs executing in the Root partition. These virtual device representations can take one of three forms:

- Emulated devices – The Host OS may expose a virtual device with an interface identical to what would be provided by a corresponding physical device. In this case, an operating system in a Guest partition would use the same device drivers as it does when running on a physical system. The Host OS would emulate the behavior of a physical device to the Guest partition.

- Para-virtualized devices – The Host OS may expose virtual devices with a virtualization-specific interface using the VMBus shared memory interface between the Host OS and the Guest. In this model, the Guest partition uses device drivers specifically designed to implement a virtualized interface. These para-virtualized devices are sometimes referred to as “synthetic” devices.

- Hardware-accelerated devices – The Host OS may expose actual hardware peripherals directly to the Guest partition. This model allows for high I/O performance in a Guest partition, as the Guest partition can directly access hardware device resources without going through the Host OS. Azure Accelerated Networking is an example of a hardware accelerated device. Isolation in this model is achieved using input-output memory management units (I/O MMUs) to provide address space and interrupt isolation for each partition.

Virtualization extensions in the Host CPU enable the Azure Hypervisor to enforce isolation between partitions. The following fundamental CPU capabilities provide the hardware building blocks for Hypervisor isolation:

- Second-level address translation – the Hypervisor controls what memory resources a partition is allowed to access by using second-level page tables provided by the CPU's memory management unit (MMU). The CPU's MMU uses second-level address translation under Hypervisor control to enforce protection on memory accesses performed by:

  - CPU when running under the context of a partition.

  - I/O devices that are being accessed directly by Guest partitions.

- CPU context – the Hypervisor uses virtualization extensions in the CPU to restrict privileges and CPU context that can be accessed while a Guest partition is running. The Hypervisor also uses these facilities to save and restore state when sharing CPUs between multiple partitions to ensure isolation of CPU state between the partitions.
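The role of second-level address translation can be sketched as a two-stage lookup: the Guest controls the first stage, and the Hypervisor controls the second. The model below is a simplified illustration under stated assumptions (single-level dictionaries instead of hardware page tables, a hypothetical `Partition` class, and made-up page numbers); it isn't how the Azure Hypervisor or the CPU implements translation.

```python
PAGE_SIZE = 4096


class Partition:
    """Toy model of a Guest partition with two translation stages."""

    def __init__(self, name, guest_page_table, second_level_table):
        self.name = name
        # Stage 1: guest virtual page -> guest physical page (Guest-controlled).
        self.guest_page_table = guest_page_table
        # Stage 2: guest physical page -> host physical page (Hypervisor-controlled).
        self.second_level_table = second_level_table

    def translate(self, guest_virtual_address: int) -> int:
        vpage, offset = divmod(guest_virtual_address, PAGE_SIZE)
        gpage = self.guest_page_table[vpage]
        if gpage not in self.second_level_table:
            # The Guest can name any guest physical page it likes, but only
            # pages that the Hypervisor mapped for this partition resolve.
            raise PermissionError(
                f"{self.name}: guest physical page {gpage} is not mapped")
        return self.second_level_table[gpage] * PAGE_SIZE + offset


guest_a = Partition("guest-a", {0: 0, 1: 1}, {0: 100, 1: 101})
guest_b = Partition("guest-b", {0: 0}, {0: 200})

# Both Guests use guest physical page 0, yet they reach different host pages.
addr_a = guest_a.translate(0x10)
addr_b = guest_b.translate(0x10)
```

Because only the second-stage table decides which host pages are reachable, a Guest that rewrites its own page table (for example, `guest_a.guest_page_table[2] = 7`) still can't reach memory outside the pages assigned to it in this model.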

The Azure Hypervisor makes extensive use of these processor facilities to provide isolation between partitions. The emergence of speculative side channel attacks has identified potential weaknesses in some of these processor isolation capabilities. In a multitenant architecture, any cross-VM attack across different tenants involves two steps: placing an adversary-controlled VM on the same Host as one of the victim VMs, and then breaching the logical isolation boundary to perform a side-channel attack. Azure provides protection from both threat vectors by using an advanced VM placement algorithm enforcing memory and process separation for logical isolation, and secure network traffic routing with cryptographic certainty at the Hypervisor. As discussed in section titled Exploitation of vulnerabilities in virtualization technologies later in the article, the Azure Hypervisor has been architected to provide robust isolation directly within the hypervisor that helps mitigate many sophisticated side channel attacks.

The Azure Hypervisor defined security boundaries provide the base level isolation primitives for strong segmentation of code, data, and resources between potentially hostile multitenants on shared hardware. These isolation primitives are used to create multitenant resource isolation scenarios including:

- Isolation of network traffic between potentially hostile guests – Virtual Network (VNet) provides isolation of network traffic between tenants as part of its fundamental design, as described later in Separation of tenant network traffic section. VNet forms an isolation boundary where the VMs within a VNet can only communicate with each other. Any traffic destined to a VM from within the VNet or external senders without the proper policy configured will be dropped by the Host and not delivered to the VM.

- Isolation for encryption keys and cryptographic material – You can further augment the isolation capabilities with the use of hardware security managers or specialized key storage, for example, storing encryption keys in FIPS 140 validated hardware security modules (HSMs) via Azure Key Vault.

- Scheduling of system resources – Azure design includes guaranteed availability and segmentation of compute, memory, storage, and both direct and para-virtualized device access.

The Azure Hypervisor meets the security objectives shown in Table 2.

Table 2. Azure Hypervisor security objectives

| Objective | Source |
|---|---|
| Isolation | The Azure Hypervisor security policy mandates no information transfer between VMs. This policy requires capabilities in the Virtual Machine Manager (VMM) and hardware for the isolation of memory, devices, networking, and managed resources such as persisted data. |
| VMM integrity | Integrity is a core security objective for virtualization systems. To achieve system integrity, the integrity of each Hypervisor component is established and maintained. This objective concerns only the integrity of the Hypervisor itself, not the integrity of the physical platform or software running inside VMs. |
| Platform integrity | The integrity of the Hypervisor depends on the integrity of the hardware and software on which it relies. Although the Hypervisor doesn't have direct control over the integrity of the platform, Azure relies on hardware and firmware mechanisms such as the Cerberus security microcontroller to protect the underlying platform integrity, thereby preventing the VMM and Guests from running should platform integrity be compromised. |
| Management access | Management functions are exercised only by authorized administrators, connected over secure connections with a principle of least privilege enforced by fine grained role access control mechanism. |
| Audit | Azure provides audit capability to capture and protect system data so that it can later be inspected. |

Defense-in-depth exploit mitigations

To further mitigate the risk of a security compromise, Microsoft has invested in numerous defense-in-depth mitigations in Azure systems software, hardware, and firmware to provide strong real-world isolation guarantees to Azure customers. As mentioned previously, Azure Hypervisor isolation is based on Microsoft Hyper-V technology, which enables Azure Hypervisor to benefit from decades of Microsoft experience in operating system security and investments in Hyper-V technology for virtual machine isolation.

Listed below are some key design principles adopted by Microsoft to secure Hyper-V:

- Prevent design-level issues from affecting the product

  - Every change going into Hyper-V is subject to design review.

- Eliminate common vulnerability classes with safer coding

  - Some components such as the VMSwitch use a formally proven protocol parser.

  - Many components use `gsl::span` instead of raw pointers, which eliminates the possibility of buffer overflows and/or out-of-bounds memory accesses. For more information, see the Guidelines Support Library (GSL) documentation.

  - Many components use smart pointers to eliminate the risk of use-after-free bugs.

  - Most Hyper-V kernel-mode code uses a heap allocator that zeros on allocation to eliminate uninitialized memory bugs.

- Eliminate common vulnerability classes with compiler mitigations

  - All Hyper-V code is compiled with InitAll, which eliminates uninitialized stack variables. This approach was implemented because many historical vulnerabilities in Hyper-V were caused by uninitialized stack variables.

  - All Hyper-V code is compiled with stack canaries to dramatically reduce the risk of stack overflow vulnerabilities.

- Find issues that make their way into the product

  - All Windows code has a set of static analysis rules run across it.

  - All Hyper-V code is code reviewed and fuzzed. For more information on fuzzing, see the Security assurance processes and practices section later in this article.

- Make exploitation of remaining vulnerabilities more difficult

  - The VM worker process has the following mitigations applied:

    - Arbitrary Code Guard – Dynamically generated code can't be loaded in the VM Worker process.

    - Code Integrity Guard – Only Microsoft signed code can be loaded in the VM Worker Process.

    - Control Flow Guard (CFG) – Provides coarse-grained control flow protection to indirect calls and jumps.

    - NoChildProcess – The worker process can't create child processes, a technique that is otherwise useful for bypassing CFG.

    - NoLowImages / NoRemoteImages – The worker process can't load DLLs over the network or DLLs that were written to disk by a sandboxed process.

    - NoWin32k – The worker process can't communicate with Win32k, which makes sandbox escapes more difficult.

    - Heap randomization – Windows ships with one of the most secure heap implementations of any operating system.

    - Address Space Layout Randomization (ASLR) – Randomizes the layout of heaps, stacks, binaries, and other data structures in the address space to make exploitation less reliable.

    - Data Execution Prevention (DEP/NX) – Only pages of memory intended to contain code are executable.

  - The kernel has the following mitigations applied:

    - Heap randomization – Windows ships with one of the most secure heap implementations of any operating system.

    - Address Space Layout Randomization (ASLR) – Randomizes the layout of heaps, stacks, binaries, and other data structures in the address space to make exploitation less reliable.

    - Data Execution Prevention (DEP/NX) – Only pages of memory intended to contain code are executable.

Microsoft investments in Hyper-V security benefit Azure Hypervisor directly. The goal of defense-in-depth mitigations is to make weaponized exploitation of a vulnerability as expensive as possible for an attacker, limiting their impact and maximizing the window for detection. All exploit mitigations are evaluated for effectiveness by a thorough security review of the Azure Hypervisor attack surface using methods that adversaries may employ. Table 3 outlines some of the mitigations intended to protect the Hypervisor isolation boundaries and hardware host integrity.

Table 3. Azure Hypervisor defense-in-depth

| Mitigation | Security Impact | Mitigation Details |
|---|---|---|
| Control flow integrity | Increases cost to perform control flow integrity attacks (for example, return oriented programming exploits) | Control Flow Guard (CFG) ensures indirect control flow transfers are instrumented at compile time and enforced by the kernel (user-mode) or secure kernel (kernel-mode), mitigating stack return vulnerabilities. |
| User-mode code integrity | Protects against malicious and unwanted binary execution in user mode | Address Space Layout Randomization (ASLR) forced on all binaries in host partition, all code compiled with SDL security checks (for example, strict_gs), arbitrary code generation restrictions in place on host processes prevent injection of runtime-generated code. |
| Hypervisor enforced user and kernel mode code integrity | No code loaded into code pages marked for execution until authenticity of code is verified | Virtualization-based Security (VBS) uses memory isolation to create a secure world to enforce policy and store sensitive code and secrets. With Hypervisor enforced Code Integrity (HVCI), the secure world is used to prevent unsigned code from being injected into the normal world kernel. |
| Hardware root-of-trust with platform secure boot | Ensures host only boots exact firmware and OS image required | Windows secure boot validates that Azure Hypervisor infrastructure is only bootable in a known good configuration, aligned to Azure firmware, hardware, and kernel production versions. |
| Reduced attack surface VMM | Protects against escalation of privileges in VMM user functions | The Azure Hypervisor Virtual Machine Manager (VMM) contains both user and kernel mode components. User mode components are isolated to prevent break-out into kernel mode functions in addition to numerous layered mitigations. |

Moreover, Azure has adopted an assume-breach security strategy implemented via Red Teaming. This approach relies on a dedicated team of security researchers and engineers who conduct continuous ongoing testing of Azure systems and operations using the same tactics, techniques, and procedures as real adversaries against live production infrastructure, without the foreknowledge of the Azure infrastructure and platform engineering or operations teams. This approach tests security detection and response capabilities and helps identify production vulnerabilities in Azure Hypervisor and other systems, including configuration errors, invalid assumptions, or other security issues in a controlled manner. Microsoft invests heavily in these innovative security measures for continuous Azure threat mitigation.

Strong security assurance processes

The attack surface in Hyper-V is well understood. It has been the subject of ongoing research and thorough security reviews. Microsoft has been transparent about the Hyper-V attack surface and underlying security architecture as demonstrated during a public presentation at a Black Hat conference in 2018. Microsoft stands behind the robustness and quality of Hyper-V isolation with a $250,000 bug bounty program for critical Remote Code Execution (RCE), information disclosure, and Denial of Service (DoS) vulnerabilities reported in Hyper-V. Because the same Hyper-V technology is used in Windows Server and the Azure cloud platform, the publicly available documentation and bug bounty program help ensure that security improvements accrue to all users of Microsoft products and services. Table 4 summarizes the key attack surface points from the Black Hat presentation.

Table 4. Hyper-V attack surface details

| Attack surface area | Privileges granted if compromised | High-level components |
|---|---|---|
| Hyper-V | Hypervisor: full system compromise with the ability to compromise other Guests | Hypercalls; intercept handling |
| Host partition kernel-mode components | System in kernel mode: full system compromise with the ability to compromise other Guests | Virtual Infrastructure Driver (VID) intercept handling; kernel-mode client library; Virtual Machine Bus (VMBus) channel messages; Storage Virtualization Service Provider (VSP); Network VSP; Virtual Hard Disk (VHD) parser; Azure Networking Virtual Filtering Platform (VFP) and Virtual Network (VNet) |
| Host partition user-mode components | Worker process in user mode: limited compromise with ability to attack Host and elevate privileges | Virtual devices (VDEVs) |

To protect these attack surfaces, Microsoft has established industry-leading processes and tooling that provide high confidence in the Azure isolation guarantee. As described in Security assurance processes and practices section later in this article, the approach includes purpose-built fuzzing, penetration testing, security development lifecycle, mandatory security training, security reviews, security intrusion detection based on Guest – Host threat indicators, and automated build alerting of changes to the attack surface area. This mature multi-dimensional assurance process helps augment the isolation guarantees provided by the Azure Hypervisor by mitigating the risk of security vulnerabilities.

Note

Azure has adopted an industry-leading approach to ensure Hypervisor-based tenant separation that has been strengthened and improved over two decades of Microsoft investments in Hyper-V technology for virtual machine isolation. The outcome of this approach is a robust Hypervisor that helps ensure tenant separation via 1) strongly defined security boundaries, 2) defense-in-depth exploit mitigations, and 3) strong security assurance processes.

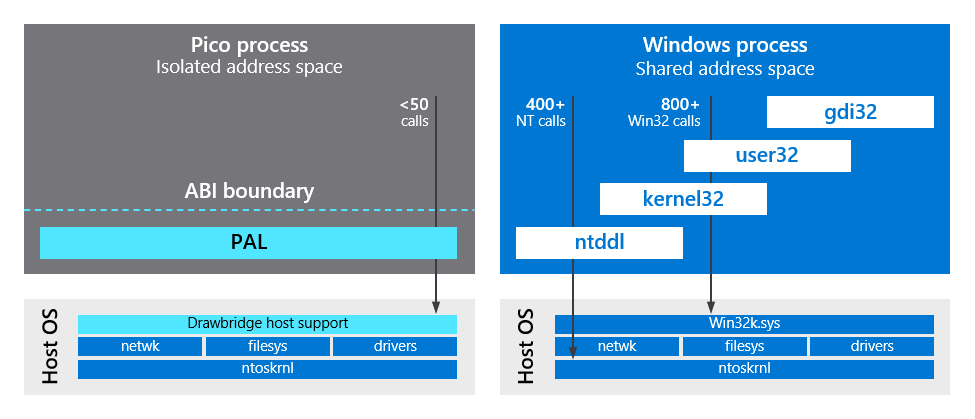

Drawbridge isolation

For services that provide small units of processing using customer code, requests from multiple tenants are executed within a single VM and isolated using Microsoft Drawbridge technology. To provide security isolation, Drawbridge runs a user process together with a lightweight version of the Windows kernel (Library OS) inside a pico-process. A pico-process is a lightweight, secure isolation container with minimal kernel API surface and no direct access to services or resources of the Host system. The only external calls the pico-process can make are to the Drawbridge Security Monitor through the Drawbridge Application Binary Interface (ABI), as shown in Figure 8.

Figure 8. Process isolation using Drawbridge


The Security Monitor is divided into a system device driver and a user-mode component. The ABI is the interface between the Library OS and the Host. The entire interface consists of a closed set of fewer than 50 stateless function calls:

- Down calls from the pico-process to the Host OS support abstractions such as threads, virtual memory, and I/O streams.

- Up calls into the pico-process perform initialization, return exception information, and run in a new thread.

The semantics of the interface are fixed and support the general abstractions that applications require from any operating system. This design enables the Library OS and the Host to evolve separately.

The ABI is implemented within two components:

- The Platform Adaptation Layer (PAL) runs as part of the pico-process.

- The host implementation runs as part of the Host.

Pico-processes are grouped into isolation units called sandboxes. The sandbox defines the applications, file system, and external resources available to the pico-processes. When a process running inside a pico-process creates a new child process, it's run with its own Library OS in a separate pico-process inside the same sandbox. Each sandbox communicates with the Security Monitor and can't communicate with other sandboxes except through I/O channels (sockets, named pipes, and so on) that the configuration explicitly allows. These channels are opt-in: none is available by default, and each is enabled only when the service needs it. The outcome is that code running inside a pico-process can only access its own resources and can't directly attack the Host system or any colocated sandboxes. It's only able to affect objects inside its own sandbox.

When the pico-process needs system resources, it must call into the Drawbridge host to request them. The normal path for a virtual user process would be to call the Library OS to request resources and the Library OS would then call into the ABI. Unless the policy for resource allocation is set up in the driver itself, the Security Monitor would handle the ABI request by checking policy to see if the request is allowed and then servicing the request. This mechanism is used for all system primitives, which helps ensure that the code running in the pico-process can't abuse the resources of the Host machine.
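The combination of a closed ABI and a per-sandbox policy check can be sketched as follows. This is an illustrative model only: the `SecurityMonitor` class shape, the call names, and the resource strings are hypothetical and don't reflect the actual Drawbridge ABI, which consists of fewer than 50 calls.

```python
class SecurityMonitor:
    """Toy model: services only known ABI calls, and only if policy allows."""

    # Closed set of down calls (hypothetical names). Anything else is rejected.
    ABI_CALLS = frozenset({"thread_create", "virtual_memory_alloc", "stream_open"})

    def __init__(self, sandbox_policy: dict):
        # Maps a sandbox name to the set of resources it may open.
        # A sandbox with no entry gets no I/O channels (opt-in by default).
        self.sandbox_policy = sandbox_policy

    def down_call(self, sandbox: str, call: str, resource: str = "") -> str:
        if call not in self.ABI_CALLS:
            raise PermissionError(f"{call!r} is not part of the ABI")
        if call == "stream_open":
            allowed = self.sandbox_policy.get(sandbox, frozenset())
            if resource not in allowed:
                raise PermissionError(f"{sandbox} may not open {resource!r}")
        return f"{call} serviced for {sandbox}"


monitor = SecurityMonitor({"sandbox-a": frozenset({"pipe:orders"})})
result = monitor.down_call("sandbox-a", "stream_open", "pipe:orders")
```

In this model, a request from `sandbox-b` to open `pipe:orders`, or any call outside `ABI_CALLS`, raises `PermissionError`, because the monitor checks every request against both the fixed interface and the sandbox configuration.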

In addition to being isolated inside sandboxes, pico-processes are also substantially isolated from each other. Each pico-process resides in its own virtual memory address space and runs its own copy of the Library OS with its own user-mode kernel. Each time a user process is launched in a Drawbridge sandbox, a fresh Library OS instance is booted. While this task is more time-consuming compared to launching a nonisolated process on Windows, it's substantially faster than booting a VM while accomplishing logical isolation.

A normal Windows process can call more than 1200 functions that result in access to the Windows kernel; however, the entire interface for a pico-process consists of fewer than 50 calls down to the Host. Most application requests for operating system services are handled by the Library OS within the address space of the pico-process. By providing a significantly smaller interface to the kernel, Drawbridge creates a more secure and isolated operating environment in which applications are much less vulnerable to changes in the Host system and incompatibilities introduced by new OS releases. More importantly, a Drawbridge pico-process is a strongly isolated container within which untrusted code from even the most malicious sources can be run without risk of compromising the Host system. The Host assumes that no code running within the pico-process can be trusted. The Host validates all requests from the pico-process with security checks.

Like a virtual machine, the pico-process is much easier to secure than a traditional OS interface because it's significantly smaller, stateless, and has fixed and easily described semantics. An added benefit of the small ABI / driver syscall interface is that the driver code can be audited and fuzzed with little effort. For example, syscall fuzzers can fuzz the ABI with high coverage numbers in a relatively short amount of time.

User context-based isolation

In cases where an Azure service is composed of Microsoft-controlled code and customer code isn't allowed to run, the isolation is provided by a user context. These services accept only user configuration inputs and data for processing – arbitrary code isn't allowed. For these services, a user context is provided to establish the data that can be accessed and what Azure role-based access control (Azure RBAC) operations are allowed. This context is established by Microsoft Entra ID as described earlier in the Identity-based isolation section. Once the user has been identified and authorized, the Azure service creates an application user context that is attached to the request as it moves through execution, providing assurance that user operations are separated and properly isolated.
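
As a rough illustration of a user context that travels with a request, consider the sketch below. The `UserContext` type, the toy `DATA` store, and `read_item` are hypothetical names introduced only for this example; they do not represent how any Azure service is built.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    """Hypothetical application user context created after authentication."""
    tenant_id: str
    user_id: str
    allowed_operations: frozenset[str]


# Toy data store keyed by (tenant_id, item name).
DATA = {
    ("tenant-a", "report"): "A's report",
    ("tenant-b", "report"): "B's report",
}


def read_item(ctx: UserContext, name: str) -> str:
    """Serve a read using only the context attached to the request."""
    if "read" not in ctx.allowed_operations:
        raise PermissionError("operation 'read' is not permitted for this user")
    # The tenant comes from the context, never from caller-supplied input,
    # so a request cannot name another tenant's data.
    return DATA[(ctx.tenant_id, name)]


alice = UserContext("tenant-a", "alice", frozenset({"read"}))
alice_report = read_item(alice, "report")
```

The design point is that the immutable context, not the request payload, decides which data and operations are in scope.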

Physical isolation

Robust logical compute isolation is available by design to all Azure tenants. If you also want physical compute isolation, you can use Azure Dedicated Host or Isolated Virtual Machines, which are both dedicated to a single customer.

Note

Physical tenant isolation increases deployment cost and may not be required in most scenarios given the strong logical isolation assurances provided by Azure.

Azure Dedicated Host

Azure Dedicated Host provides physical servers that can host one or more Azure VMs and are dedicated to one Azure subscription. You can provision dedicated hosts within a region, availability zone, and fault domain. You can then place Windows, Linux, and SQL Server on Azure VMs directly into provisioned hosts using whatever configuration best meets your needs. Dedicated Host provides hardware isolation at the physical server level, enabling you to place your Azure VMs on an isolated and dedicated physical server that runs only your organization's workloads to meet corporate compliance requirements.

Note

You can deploy a dedicated host using the Azure portal, Azure PowerShell, and Azure CLI.

You can deploy both Windows and Linux virtual machines into dedicated hosts by selecting the server and CPU type, number of cores, and extra features. Dedicated Host enables control over platform maintenance events by allowing you to opt in to a maintenance window to reduce potential impact to your provisioned services. Most maintenance events have little to no impact on your VMs; however, if you're in a highly regulated industry or run a sensitive workload, you may want to have control over any potential maintenance impact.

Microsoft provides detailed customer guidance on Windows and Linux Azure Virtual Machine provisioning using the Azure portal, Azure PowerShell, and Azure CLI. Table 5 summarizes the available security guidance for your virtual machines provisioned in Azure.

Table 5. Security guidance for Azure virtual machines

Isolated Virtual Machines

Azure Compute offers virtual machine sizes that are isolated to a specific hardware type and dedicated to a single customer. These VM instances allow your workloads to be deployed on dedicated physical servers. Using Isolated VMs essentially guarantees that your VM will be the only one running on that specific server node. You can also choose to further subdivide the resources on these Isolated VMs by using Azure support for nested Virtual Machines.

Networking isolation

The logical isolation of tenant infrastructure in a public multitenant cloud is fundamental to maintaining security. The overarching principle for a virtualized solution is to allow only connections and communications that are necessary for that virtualized solution to operate, blocking all other ports and connections by default. Azure Virtual Network (VNet) helps ensure that your private network traffic is logically isolated from traffic belonging to other customers. Virtual Machines (VMs) in one VNet can't communicate directly with VMs in a different VNet even if both VNets are created by the same customer. Networking isolation ensures that communication between your VMs remains private within a VNet. You can connect your VNets via VNet peering or VPN gateways, depending on your connectivity requirements, including bandwidth, latency, and encryption.

This section describes how Azure provides isolation of network traffic among tenants and enforces that isolation with cryptographic certainty.

Separation of tenant network traffic

Virtual networks (VNets) provide isolation of network traffic between tenants as part of their fundamental design. Your Azure subscription can contain multiple logically isolated private networks, which can include firewall, load balancing, and network address translation capabilities. Each VNet is isolated from other VNets by default. Multiple deployments inside your subscription can be placed on the same VNet, and then communicate with each other through private IP addresses.
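
The default-isolation rule can be modeled in a few lines. This is a simplified sketch, not an Azure API: `VNet`, `peer`, and `can_reach` are hypothetical names, only peering is modeled (VPN gateways are left out), and the address ranges are invented for the example.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class VNet:
    name: str
    address_space: ipaddress.IPv4Network
    peers: set[str] = field(default_factory=set)


def peer(a: VNet, b: VNet) -> None:
    """Record a bidirectional peering; overlapping address spaces are rejected."""
    if a.address_space.overlaps(b.address_space):
        raise ValueError("peered VNets must not have overlapping address spaces")
    a.peers.add(b.name)
    b.peers.add(a.name)


def can_reach(src: VNet, dst: VNet) -> bool:
    """Private traffic is allowed inside one VNet or across an explicit peering."""
    return src.name == dst.name or dst.name in src.peers


vnet_a = VNet("vnet-a", ipaddress.ip_network("10.0.0.0/16"))
vnet_b = VNet("vnet-b", ipaddress.ip_network("10.1.0.0/16"))
isolated_by_default = not can_reach(vnet_a, vnet_b)
peer(vnet_a, vnet_b)
reachable_after_peering = can_reach(vnet_a, vnet_b)
```

Two VNets owned by the same customer start out unable to exchange private traffic, and connectivity appears only after an explicit configuration step.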

Network access to VMs is limited by packet filtering at the network edge, at load balancers, and at the Host OS level. Moreover, you can configure your host firewalls to further limit connectivity, specifying for each listening port whether connections are accepted from the Internet or only from role instances within the same cloud service or VNet.

Azure provides network isolation for each deployment and enforces the following rules:

- Traffic between VMs always traverses trusted packet filters.

- Protocols such as Address Resolution Protocol (ARP), Dynamic Host Configuration Protocol (DHCP), and other OSI Layer-2 traffic from a VM are controlled using rate-limiting and anti-spoofing protection.

- VMs can't capture any traffic on the network that isn't intended for them.

- Your VMs can't send traffic to Azure private interfaces and infrastructure services, or to VMs belonging to other customers. Your VMs can only communicate with other VMs owned or controlled by you and with Azure infrastructure service endpoints meant for public communications.

- When you put a VM on a VNet, that VM gets its own address space that is invisible to, and hence not reachable from, VMs outside of a deployment or VNet (unless configured to be visible via public IP addresses). Your environment is open only through the ports that you specify for public access; if the VM is defined to have a public IP address, then all ports are open for public access.
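
Two of the rules above, anti-spoofing and rate limiting of Layer-2 control traffic, can be illustrated with a small sketch. Everything here is hypothetical (`VmPort`, `Frame`, `allow_outbound`, `FixedWindowLimiter`, and the addresses); it shows the general technique, not the filters Azure actually runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmPort:
    """Hypothetical record of the addresses the platform assigned to a VM."""
    mac: str
    ip: str


@dataclass(frozen=True)
class Frame:
    src_mac: str
    src_ip: str
    dst_ip: str


def allow_outbound(port: VmPort, frame: Frame) -> bool:
    """Anti-spoofing: drop frames whose source addresses differ from the assigned ones."""
    return frame.src_mac == port.mac and frame.src_ip == port.ip


class FixedWindowLimiter:
    """Allow at most `limit` control frames (for example ARP) per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._start = 0.0
        self._count = 0

    def allow(self, now: float) -> bool:
        # Start a new window once the current one has elapsed.
        if now - self._start >= self.window:
            self._start, self._count = now, 0
        if self._count >= self.limit:
            return False
        self._count += 1
        return True


port = VmPort(mac="00-0D-3A-00-00-01", ip="10.0.0.4")
genuine = Frame("00-0D-3A-00-00-01", "10.0.0.4", "10.0.0.5")
spoofed = Frame("00-0D-3A-00-00-01", "10.0.0.9", "10.0.0.5")
limiter = FixedWindowLimiter(limit=2, window=1.0)
decisions = [limiter.allow(t) for t in (0.0, 0.1, 0.2, 1.0)]
```

The limiter takes the current time as an argument so the behavior is deterministic; a caller would typically pass `time.monotonic()`.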

Packet flow and network path protection

Azure's hyperscale network is designed to provide:

- Uniform high capacity between servers.

- Performance isolation between services, including customers.

- Ethernet Layer-2 semantics.

Azure uses several networking implementations to achieve these goals:

- Flat addressing to allow service instances to be placed anywhere in the network.

- Load balancing to spread traffic uniformly across network paths.

- End-system based address resolution to scale to large server pools, without introducing complexity to the network control plane.

These implementations give each service the illusion that all the servers assigned to it, and only those servers, are connected by a single noninterfering Ethernet switch – a Virtual Layer 2 (VL2) – and maintain this illusion even as the size of each service varies from one server to hundreds of thousands. This VL2 implementation achieves traffic performance isolation, ensuring that it isn't possible for the traffic of one service to be affected by the traffic of any other service, as if each service were connected by a separate physical switch.

This section explains how packets flow through the Azure network, and how the topology, routing design, and directory system combine to virtualize the underlying network fabric, creating the illusion that servers are connected to a large, noninterfering datacenter-wide Layer-2 switch.

The Azure network uses two different IP-address families:

- Customer address (CA) is the customer defined/chosen VNet IP address, also referred to as Virtual IP (VIP). The network infrastructure operates using CAs, which are externally routable. All switches and interfaces are assigned CAs, and switches run an IP-based (Layer-3) link-state routing protocol that disseminates only these CAs. This design allows switches to obtain the complete switch-level topology, and forward packets encapsulated with CAs along shortest paths.

- Provider address (PA) is the Azure assigned internal fabric address that isn't visible to users and is also referred to as Dynamic IP (DIP). No traffic goes directly from the Internet to a server; all traffic from the Internet must go through a Software Load Balancer (SLB) and be encapsulated to protect the internal Azure address space by only routing packets to valid Azure internal IP addresses and ports. Network Address Translation (NAT) separates internal network traffic from external traffic. Internal traffic uses RFC 1918 address space or private address space – the provider addresses (PAs) – that isn't externally routable. The translation is performed at the SLBs. Customer addresses (CAs) that are externally routable are translated into internal provider addresses (PAs) that are only routable within Azure. These addresses remain unaltered no matter how their servers' locations change due to virtual-machine migration or reprovisioning.

Each PA is associated with a CA, which is the identifier of the Top of Rack (ToR) switch to which the server is connected. VL2 uses a scalable, reliable directory system to store and maintain the mapping of PAs to CAs, and this mapping is created when servers are provisioned to a service and assigned PA addresses. An agent running in the network stack on every server, called the VL2 agent, invokes the directory system's resolution service to learn the actual location of the destination and then tunnels the original packet there.

Azure assigns servers IP addresses that act as names alone, with no topological significance. Azure's VL2 addressing scheme separates these server names (PAs) from their locations (CAs). The crux of offering Layer-2 semantics is having servers believe they share a single large IP subnet – that is, the entire PA space – with other servers in the same service, while eliminating the Address Resolution Protocol (ARP) and Dynamic Host Configuration Protocol (DHCP) scaling bottlenecks that plague large Ethernet deployments.

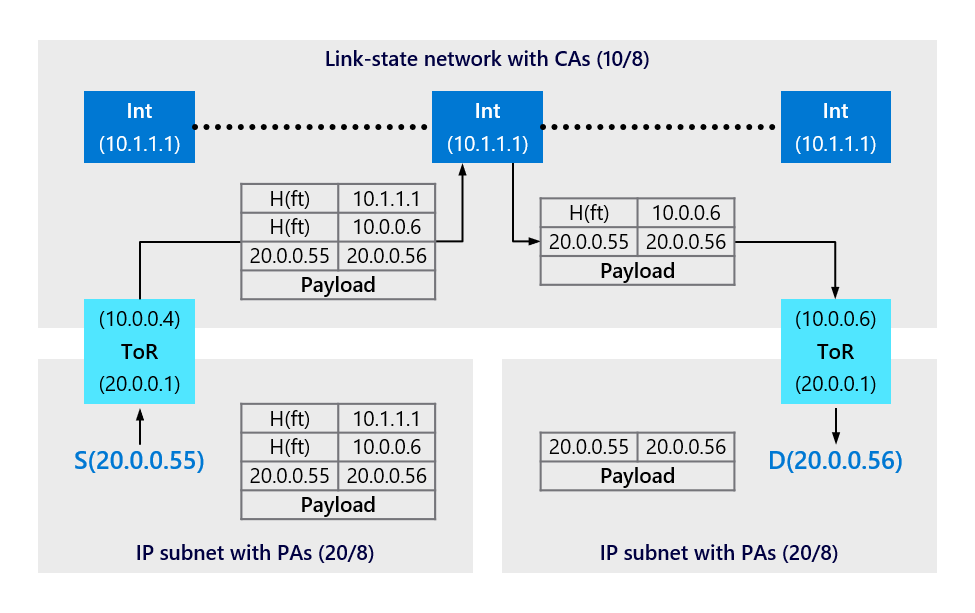

Figure 9 depicts a sample packet flow where sender S sends packets to destination D via a randomly chosen intermediate switch using IP-in-IP encapsulation. PAs are from 20/8, and CAs are from 10/8. H(ft) denotes a hash of the 5-tuple, which is composed of source IP, source port, destination IP, destination port, and protocol type. The source ToR switch translates the PA to the CA and sends the packet to the Intermediate switch, which forwards it to the destination ToR switch (the destination CA), which in turn translates back to the destination PA.

Figure 9. Sample packet flow
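
The role of H(ft) in the sample flow can be sketched as follows. Hashing the 5-tuple spreads different flows across intermediate switches while keeping all packets of one flow on the same path. The function name, the switch names, and the use of SHA-256 are assumptions made for this illustration; the source doesn't specify the hash function.

```python
import hashlib


def pick_intermediate_switch(src_ip, src_port, dst_ip, dst_port, protocol, switches):
    """Map a flow's 5-tuple to one intermediate switch, deterministically."""
    if not switches:
        raise ValueError("at least one intermediate switch is required")
    five_tuple = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    # SHA-256 is an assumption for the sketch; any well-mixed hash would do.
    digest = hashlib.sha256(five_tuple).digest()
    index = int.from_bytes(digest[:8], "big") % len(switches)
    return switches[index]


switches = ["intermediate-1", "intermediate-2", "intermediate-3"]
flow = ("20.0.0.55", 5000, "20.0.0.56", 443, "tcp")
chosen = pick_intermediate_switch(*flow, switches)
```

Calling the function again with the same 5-tuple returns the same switch, so packets within a flow are not reordered by taking different paths.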

A server can't send packets to a PA if the directory service refuses to provide it with a CA through which it can route its packets, which means that the directory service enforces access control policies. Further, since the directory system knows which server is making the request when handling a lookup, it can enforce fine-grained isolation policies. For example, it can enforce a policy that only servers belonging to the same service can communicate with each other.
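
A minimal sketch of a directory that doubles as a policy enforcement point is shown below. `DirectoryService`, `provision`, and `resolve` are hypothetical names, and the only policy modeled is the one given as an example in the text: servers may resolve only destinations in the same service. The addresses follow the 20/8 (PA) and 10/8 (CA) convention used for Figure 9.

```python
class DirectoryService:
    """Toy directory mapping each server's PA to the CA of its ToR switch."""

    def __init__(self) -> None:
        self._tor_ca: dict[str, str] = {}
        self._service: dict[str, str] = {}

    def provision(self, pa: str, tor_ca: str, service: str) -> None:
        """Create the PA-to-CA mapping when a server is assigned to a service."""
        self._tor_ca[pa] = tor_ca
        self._service[pa] = service

    def resolve(self, requester_pa: str, destination_pa: str) -> str:
        """Return the destination's CA only if policy allows the requester to reach it."""
        if requester_pa not in self._service or destination_pa not in self._service:
            raise LookupError("unknown provider address")
        if self._service[requester_pa] != self._service[destination_pa]:
            # Without a CA, the requester has no way to route to the destination.
            raise PermissionError("requester and destination belong to different services")
        return self._tor_ca[destination_pa]


directory = DirectoryService()
directory.provision("20.0.0.55", "10.0.0.4", "service-a")
directory.provision("20.0.0.56", "10.0.0.6", "service-a")
directory.provision("20.0.0.99", "10.0.0.6", "service-b")
same_service_ca = directory.resolve("20.0.0.55", "20.0.0.56")
```

Because the lookup is the only way to learn a destination's location, refusing the lookup is sufficient to block the traffic.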

Traffic flow patterns

To route traffic between servers, which use PA addresses, on an underlying network that knows routes for CA addresses, the VL2 agent on each server captures packets from the host, and encapsulates them with the CA address of the ToR switch of the destination. Once the packet arrives at the CA (that is, the destination ToR switch), the destination ToR switch decapsulates the packet and delivers it to the destination PA carried in the inner header. The packet is first delivered to one of the Intermediate switches, decapsulated by the switch, delivered to the ToR's CA, decapsulated again, and finally sent to the destination. This approach is depicted in Figure 10 using two possible traffic patterns: 1) external traffic (orange line) traversing over Azure ExpressRoute or the Internet to a VNet, and 2) internal traffic (blue line) between two VNets. Both traffic flows follow a similar pattern to isolate and protect network traffic.

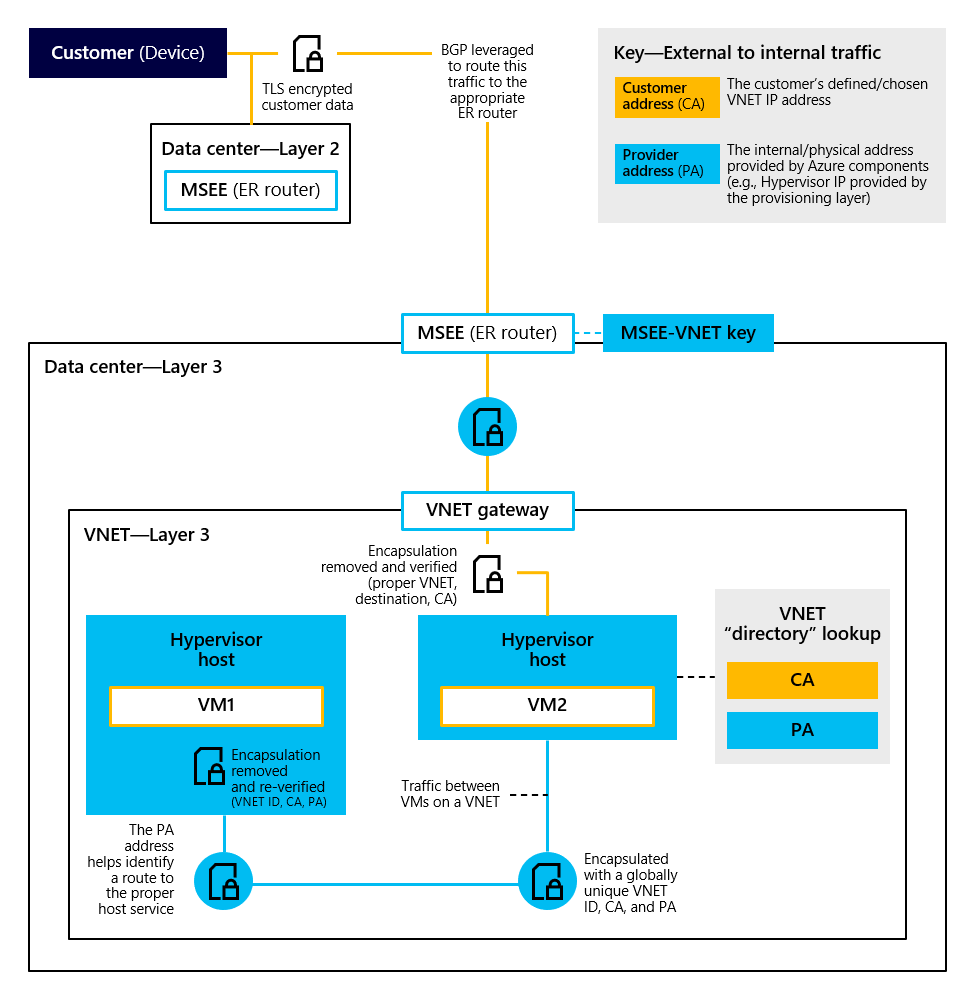

Figure 10. Separation of tenant network traffic using VNets
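
The encapsulation and decapsulation steps in this traffic flow can be sketched with nested packets. The `Packet` type, the helper functions, and all addresses are hypothetical; the sketch models only the header nesting (IP-in-IP), not real packet formats.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: Union[bytes, "Packet"]


def encapsulate(inner: Packet, outer_src: str, outer_dst: str) -> Packet:
    """Wrap a packet in an outer header addressed to the next tunnel endpoint."""
    return Packet(outer_src, outer_dst, inner)


def decapsulate(packet: Packet) -> Packet:
    """Strip the outer header and return the packet it carried."""
    if not isinstance(packet.payload, Packet):
        raise ValueError("packet carries no encapsulated packet")
    return packet.payload


# Original packet between two servers, addressed with PAs.
original = Packet("20.0.0.55", "20.0.0.56", b"hello")
# The sending agent adds a header for the destination ToR switch (a CA),
# then one for the chosen Intermediate switch.
to_tor = encapsulate(original, "10.0.0.4", "10.0.0.6")
to_intermediate = encapsulate(to_tor, "10.0.0.4", "10.1.1.1")

# The Intermediate switch removes the outermost header and forwards to the ToR.
at_tor = decapsulate(to_intermediate)
# The destination ToR switch removes its header and delivers to the PA.
delivered = decapsulate(at_tor)
```

The underlying fabric only ever routes on the outer CA headers, while the PA in the innermost header stays untouched until final delivery.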
