Data, privacy, and security for use of models through the model catalog in Azure AI Foundry portal

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

This article describes how the data that you provide is processed, used, and stored when you deploy models from the model catalog. Also see the Microsoft Products and Services Data Protection Addendum, which governs data processing by Azure services.

Important

For information about responsible AI in Azure OpenAI and AI services, see Responsible use of AI.

What data is processed for models deployed in Azure AI Foundry portal?

When you deploy models in Azure AI Foundry portal, the following types of data are processed to provide the service:

Prompts and generated content. A user submits a prompt, and the model generates content (output) via the operations that the model supports. Prompts might include content added via retrieval-augmented generation (RAG), metaprompts, or other functionality included in an application.
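The following Python sketch shows why content from RAG and metaprompts counts as prompt data. It is one hypothetical way an application might assemble a chat-style prompt. The function name `build_prompt_messages` and the system/user message layout are illustrative assumptions, not an Azure AI Foundry API. Everything placed in these messages is sent to, and processed by, the deployed model.

```python
from typing import Dict, List


def build_prompt_messages(
    metaprompt: str,
    retrieved_passages: List[str],
    user_question: str,
) -> List[Dict[str, str]]:
    """Hypothetical helper: combine a metaprompt, RAG passages, and a question.

    Every string in the returned list becomes part of the prompt that the
    deployed model processes.
    """
    # Number each retrieved passage and inline it as context; the model
    # processes this text exactly like text the user typed.
    context = "\n\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(retrieved_passages, start=1)
    )
    return [
        # The metaprompt (system message) steers the model's behavior.
        {"role": "system", "content": metaprompt},
        # The user turn carries both the retrieved context and the question.
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {user_question}"},
    ]
```

For example, `build_prompt_messages("You are a helpful assistant.", ["Doc A text"], "What does doc A say?")` returns a two-message list. The second message contains both the retrieved passage and the question.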

Uploaded data. For models that support fine-tuning, customers can upload their data to a datastore for fine-tuning.

Generation of inferencing outputs with managed compute

Deploying models to managed compute deploys model weights to dedicated virtual machines and exposes a REST API for real-time inference. To learn more about deploying models from the model catalog to managed compute, see Model catalog and collections in Azure AI Foundry portal.

You manage the infrastructure for these managed compute resources. Azure data, privacy, and security commitments apply. To learn more about Azure compliance offerings applicable to Azure AI Foundry, see the Azure Compliance Offerings page.

Although containers for models in the Curated by Azure AI collection are scanned for vulnerabilities that could exfiltrate data, not all models available through the model catalog are scanned. To reduce the risk of data exfiltration, you can protect your deployment by using virtual networks. You can also use Azure Policy to regulate which models your users can deploy.

Generation of inferencing outputs as a serverless API

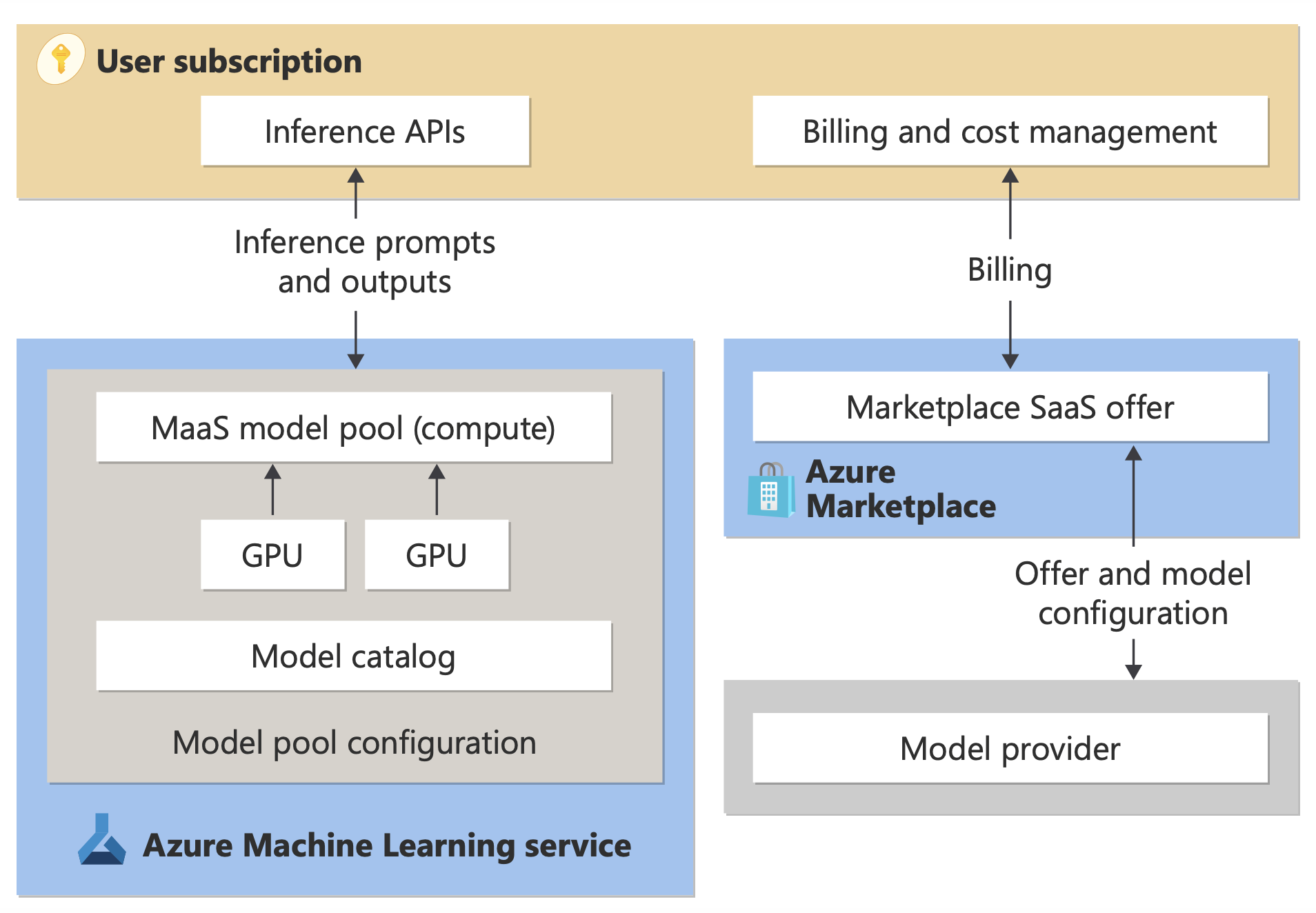

When you deploy a model from the model catalog (base or fine-tuned) by using serverless APIs with pay-as-you-go billing for inferencing, an API is provisioned. The API gives you access to the model that the Azure Machine Learning service hosts and manages. Learn more about serverless APIs in Model catalog and collections.
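The following Python sketch uses the standard library to build, but not send, an HTTPS request to a serverless API. It is a rough picture of what sending a prompt involves. These parts are assumptions for illustration:

- the endpoint URL
- the bearer-token `Authorization` header
- the chat-style `messages`/`max_tokens` payload

Check your deployment's details page for the actual endpoint URL, key, and request schema. The prompt in the request body, and the output the model returns, are the prompts and outputs whose processing this section describes.

```python
import json
import urllib.request
from typing import Dict, List


def build_inference_request(
    endpoint_url: str,
    api_key: str,
    messages: List[Dict[str, str]],
    max_tokens: int = 256,
) -> urllib.request.Request:
    """Build (but don't send) a POST request to a serverless API endpoint.

    Assumptions: the endpoint accepts a JSON chat payload and a key passed
    as a bearer token. Verify both against your deployment's details.
    """
    # The prompt travels in the request body; this is the data Microsoft
    # processes on your behalf when the request is sent.
    payload = {"messages": messages, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: key-based authentication sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

To send the request, you could pass it to `urllib.request.urlopen(req)` and parse the JSON response. That call makes a real network request, so the sketch leaves it out.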

The model processes your input prompts and generates outputs based on its functionality, as described in the model details. Your use of the model (along with the provider's accountability for the model and its outputs) is subject to the license terms for the model. Microsoft provides and manages the hosting infrastructure and API endpoint. The models hosted in this model as a service (MaaS) scenario are subject to Azure data, privacy, and security commitments. Learn more about Azure compliance offerings applicable to Azure AI Foundry.

Microsoft acts as the data processor for prompts and outputs sent to, and generated by, a model deployed for pay-as-you-go inferencing (MaaS). Microsoft doesn't share these prompts and outputs with the model provider. Also, Microsoft doesn't use these prompts and outputs to train or improve Microsoft models, the model provider's models, or any third party's models.

Models are stateless, and they don't store any prompts or outputs. If content filtering (preview) is enabled, the Azure AI Content Safety service screens prompts and outputs for certain categories of harmful content in real time. Learn more about how Azure AI Content Safety processes data.

Prompts and outputs are processed within the geography specified during deployment, but they might be processed between regions within the geography for operational purposes. Operational purposes include performance and capacity management.

Note

As explained during the deployment process for MaaS, Microsoft might share customer contact information and transaction details (including the usage volume associated with the offering) with the model publisher so that the publisher can contact customers regarding the model. Learn more about information available to model publishers in Access insights for the Microsoft commercial marketplace in Partner Center.

Fine-tuning a model for pay-as-you-go deployment (MaaS)

If a model that's available for serverless APIs supports fine-tuning, you can upload data to (or designate data already in) a datastore to fine-tune the model. You can then create a serverless API deployment for the fine-tuned model. The fine-tuned model can't be downloaded, but:

- It's available exclusively for your use.

- You can use double encryption at rest: the default Microsoft AES-256 encryption and an optional customer-managed key.

- You can delete it at any time.

Training data uploaded for fine-tuning isn't used to train, retrain, or improve any Microsoft or non-Microsoft model, except as you direct those activities within the service.

Data processing for downloaded models

If you download a model from the model catalog, you choose where to deploy the model. You're responsible for how data is processed when you use the model.