Xbox Certification Failure Mode Analysis (FMA)

Improving the consistency, quality and relevance of decision making in Test

Abstract

Certification needs to ensure that decision making is accurate and consistent at all times, while ensuring that only issues that offer true value receive full time investment.

Introduction

The Certification team make thousands of decisions every year to determine the seriousness and non-compliance of issues.

Consistency of decision making is a challenge in a business that is both cross-regional and home to several layers of internal assessment, from testers on the floor up through Leads, Managers and Software Engineers.

Failure Mode Analysis offers a strict framework to enable all involved to make key decisions quickly and consistently while saving a significant amount of time by flagging those issues deemed too minor to be fully documented.

Overview

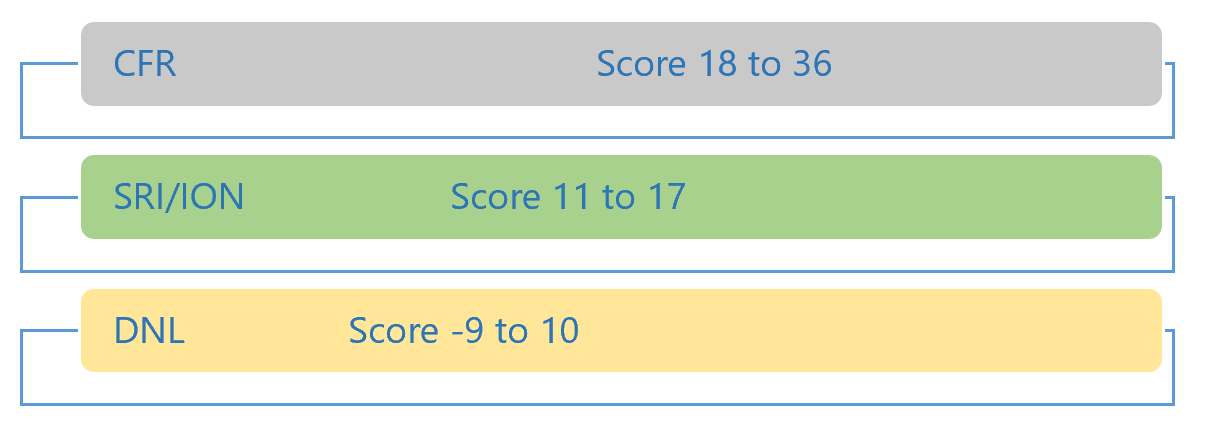

Failure Mode Analysis allows us to structure a framework where issues are assigned three different scores: severity, probability and repeatability. The sum of these scores determine the issue type as one of the following:

- CFR (Condition for Resubmission). These issues are deemed serious enough for the title to either not ship until it has been fixed in a resubmission, or one that can be fixed through a Content Update delivered in a specific time frame, often by day one of end user interaction.

- SRI (Standard Reporting Issue). These issues, although serious, are not seemed severe enough to stop the submission from releasing to the market.

- ION (Issue of Note). These are SRI issues that cannot be mapped directly to an existing test case.

The logical progression to decide where each issue resides is partially based on the wording of the test cases and on a personal assessment of the probability/repeatability of the event occurring in the retail environment.

To serve all areas of decision making, the key concepts of Severity, Probability and Repeatability are brought together in one place which although possible would create a need for a database with potentially tens of thousands of entries to cover all eventualities.

It is important to split issues into component parts to avoid an excessive number of line entries needed to describe an outcome. For Certification, FMA will use a system that has the following variables:

- Severity - 6 potential outcomes.

- Probability - 6 potential outcomes.

- Repeatability - 6 potential outcomes.

Severity (as detailed in the next section) is made up of descriptive strings and thus requires a scenario database to order the data, but both Probability and Repeatability are mathematically extracted from the issue itself.

By keeping this separation, we can order 216 possibilities using only six severity scenarios per test case (this may be more depending on the complexity of the test case, but not by a great deal).

By writing six statements and applying the separate mathematics of Probability and Repeatability we obtain the same value as writing 216 separate scenarios, with the benefit of manageability and ease of use.

The First Building Block of FMA : Severity

Definition - Severity allows us to define how serious an issue is i.e., without considering anything outside of the event.

FMA analysis allows us to break down the varying levels of severity into a user-friendly stack. Focusing on Certification's needs, the following was produced.

| Severity Rating | Meaning |

|---|---|

| -4 | No relevant effect on user or platform. |

| 1 | Very minor effect on user or platform. |

| 2 | Minor impact on user or platform. |

| 6 | Moderate impact on user or platform. |

| 12 | Critical impact on user or platform. |

| 22 | Catastrophic (product becomes inoperative or HBI). |

Here we have six potential buckets for defining each issue, from no impact to very high impact. An overall rating exercise was conducted to create weighted ratings for each bucket (see section X for details on this).

The above in itself would make for a robust part of the framework as it is relatively easy to consistently assign issues to the correct bucket across multiple individuals. Instead of settling for this, we instead chose to modify the steps per test case to create a system that is highly user friendly and able to reflect the idiosyncrasies of specific test cases.

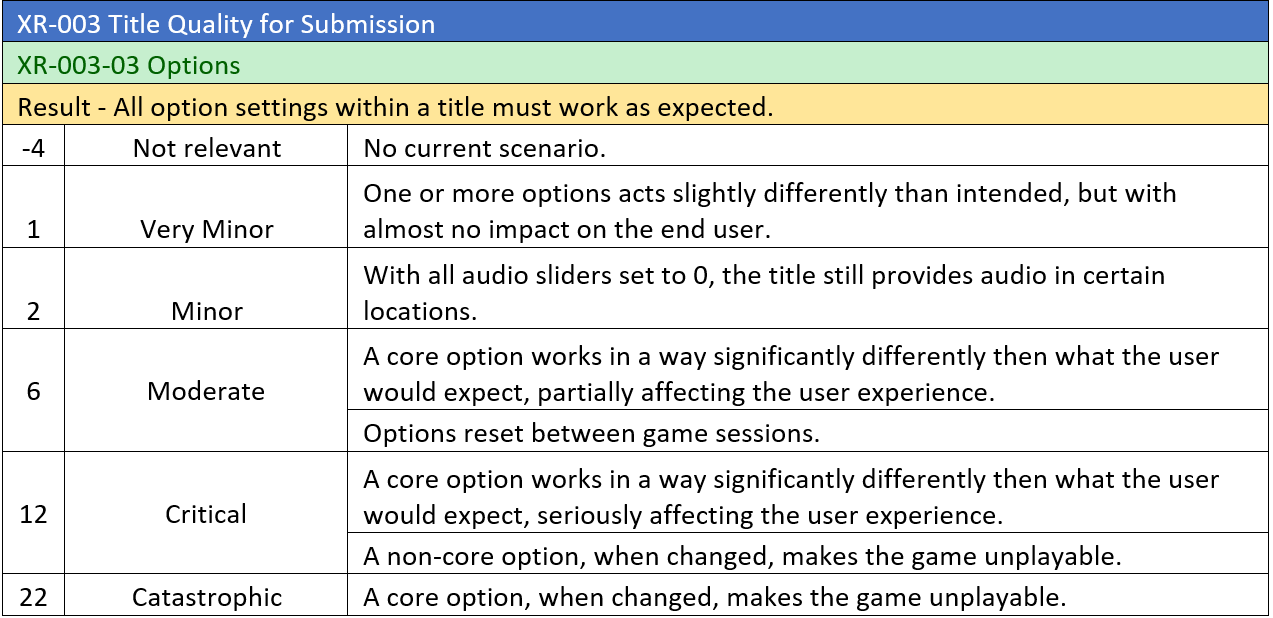

An example would be OPTIONS.

Note the way they take two specific forms. The first is to cover most issues in focused statements such as - "A core option works in a way significantly differently than what the user would expect, partially affecting the user experience".

This style allows the test teams to accurately assign the correct bucket to the issue in relation to severity.

The second style is more specific, such as - With all audio sliders set to 0, the title still provides audio in certain locations.

This style captures specific behaviors that are seen often in the labs, leaving no doubt to how to assign.

The Second Building Block of FMA : Probability

Definition - Probability allows us to assess how likely an end user is to be potentially exposed to a given issue.

We use a similar grid to that used for Severity, but with modifications:

| Probability | Rating |

|---|---|

| Extremely Unlikely to occur. 0-10% of users will go through the steps that may manifest the issue. | -3 |

| Remote. 11-20% of users will go through the steps that may manifest the issue. | 2 |

| Occasional. 21-40% of users will go through the steps that may manifest the issue. | 3 |

| Moderate. 41-70% of users will go through the steps that may manifest the issue. | 4 |

| Frequent. 71-90% of users will go through the steps that may manifest the issue. | 6 |

| Almost Inevitable. 91-100% of users will go through the steps that may manifest the issue. | 8 |

Examples:

- Issue occurs when booting the title in any language. Probability = 8.

- Issue occurs when checking Xbox Help = 4.

- Issue occurs on boot, Polish only for a full European release = -1 (reduced number of users will boot in Polish)

There is a level of subjectivity in the above, but the system is robust enough to still output the correct final number even if testers decide slightly differently on this.

The Third Building Block of FMA : Repeatability

Definition - Repeatability allows us to assess how likely an end user is to experience a given issue an additional time after the original manifestation.

So, we know how two values relate to the Severity of the issue and the Probability of the issue. The outstanding piece we need to identify is the Repeatability of the issue.

We use a similar grid to that used for Severity and Probability, but with modifications:

| Repeatability | Rating |

|---|---|

| Issue will not happen a second time. | -3 |

| Low. | 2 |

| Moderate. | 3 |

| High. | 4 |

| Almost certain to occur after the initial event. | 5 |

| Certain to re-occur after the initial event. | 6 |

As with Probability, we can use the frequency values from the test outcome, such as:

Frequency:

Console 1: 5/5

Console 2: 4/5

To calculate the value, we sum up the successful reproduction attempts (the first numbers) and divide by the sum of the total number of attempts (the second numbers). This is then multiplied by 6 to give the final value.

FMA Scoring Explained

Correct use of the three systems generates three numbers, which should be added together to create a final score.