Why can't Narrator find the buttons in my HTML Windows Store app?

Hi,

I had a reminder the other day about how important it is to keep both the visual and programmatic interfaces in mind when building our apps’ UI. This time the UI belonged to an HTML Windows Store app, not a XAML app.

At the top of “Ten questions on programmatic accessibility”, I asked “The Narrator screen reader doesn’t notice something in my app’s UI. Where do I start the investigation?” I mentioned there how we can use the Inspect SDK tool to check whether our UI is being exposed through the Control view or the Raw view of the UIA tree. If the UI isn’t being exposed through the Raw view, then it’s not being exposed through UIA at all, and Assistive Technology tools like Narrator won’t be able to access it. (There’s more discussion of the views of the UIA tree at “I’ll say this only once - Your customers need an efficient experience”.)

So when I got asked last week why Narrator couldn’t find some cool-looking buttons in an HTML app’s UI, my first response was, “Well, what does Inspect tell us about the UI?” Sure enough, the UI wasn’t being exposed through the Raw view of the UIA tree. And the next question was pretty predictable, “So why isn’t it being exposed?”

Many HTML devs already know a likely reason for this: the use of div markup.

It’s easy for a dev to add a div, then add a pointerdown event handler, and to think they’re done building the UI. After all, we often tend to build UI which is usable by customers who interact with their devices in the same way that we interact with ours. That is, if I can see the cool visuals, then my customers must be able to see them too. And if I can click the visuals with a mouse or prod them with a finger, then my customers must be able to do that too. If I can use my UI, then all is well.

But given that you’re reading this, you’re probably a dev who knows that that’s not the case at all. You want to build UI which can be leveraged by all your customers, regardless of how they interact with their devices. You take pride in building UI that allows everyone to benefit from your app’s cool functionality.

So you look around the web, find lots of discussion on the inaccessibility of divs, and want to fix your UI. MSDN’s “Guidelines for designing accessible apps” has a helpful section called “Make your HTML custom controls accessible”, so I’ll see what happens when I do what that page suggests.

Say I want to add a “Next” button to my app. For this test, I’ll just show the “->” string by adding the HTML below, and add the pointerdown event handler.

```html
<div id="buttonNext">-></div>
```

```javascript
buttonNext.addEventListener("pointerdown", onpointerdownNext, false);
```

When I run this, I see the “->” on the screen, and I can click it with the mouse or touch. But let’s consider two of the most fundamental aspects of accessibility – keyboard accessibility and programmatic accessibility. (By the way, I talk about how I group accessibility topics to help me remember them, at the series of posts starting at Building accessible Windows Universal apps: Introduction.)

When I run my app now, I can’t use the keyboard to tab to the element, and so I can’t invoke it through the keyboard. So my customers who only use the keyboard can’t do “Next”, and that’s the sort of thing that could render my app useless to them.

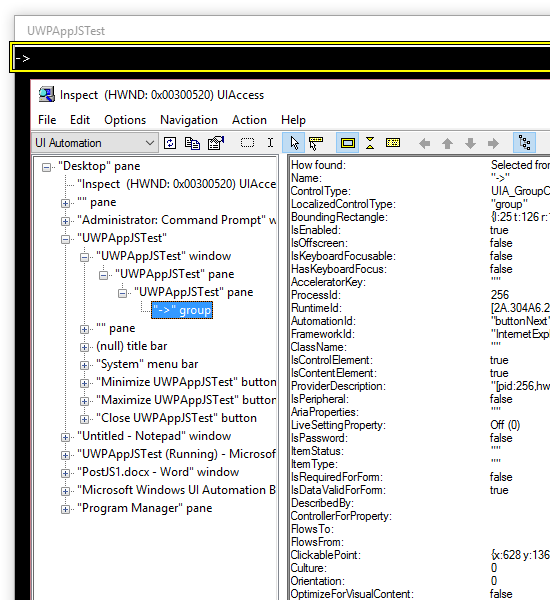

If I point the Inspect SDK tool to the UI, and set the options to show me what’s being exposed through the Raw view of the UIA tree, I don’t see the element being exposed at all. The screenshot below shows that a Pane element is exposed, but no content beneath it.

Figure 1: Inspect shows that a div is not being exposed programmatically though UIA.

So it would be interesting to run through the steps listed in “Guidelines for designing accessible apps”, and to use Inspect to learn how the accessibility of the element changes as a result.

But before I do that, I should point out a couple of things I noticed while experimenting so far. The first relates to the ProviderDescription that Inspect reports for any UI it’s looking at. The ProviderDescription is a long string, and I usually only pay attention to a bit near the end of it. In my test app, the bit I’m interested in is “edgehtml.dll”, which tells me that a Microsoft Edge component is the provider for my UI. I’m running the app on a Windows 10 device. If I were running my test app on a Windows 8.1 device, I’d expect to see something like “MSAA Proxy” in the ProviderDescription. And if I were running a XAML app, I’d probably see “Windows.UI.Xaml.dll”. I’ve found this really helpful at times when I don’t know whether the Windows Store app I’m pointing Inspect at is a XAML app or an HTML app: I can just take a look at the ProviderDescription and know the answer.

(Actually, if I really only want to know whether the app I’m looking at is an HTML app or XAML app, I could look at the FrameworkId property, which would show “InternetExplorer” or “XAML” respectively. But it looks like the FrameworkId doesn’t differentiate between a Win8.1 and Win10 HTML app provider like the ProviderDescription does.)
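The reasoning in the two paragraphs above (FrameworkId to tell HTML from XAML, then ProviderDescription to tell a Windows 10 HTML provider from a Windows 8.1 one) can be written down as a small decision function. This is only a sketch: describeProvider is a hypothetical name, its inputs are the strings you’d copy out of Inspect, and the substrings it looks for are simply the ones observed in this post, not a documented contract.

```javascript
// Hypothetical helper: classify an app from two strings reported by Inspect.
// The substrings below are observations from this post, not a documented API.
function describeProvider(providerDescription, frameworkId) {
  // FrameworkId separates HTML from XAML, but not one Windows version from another.
  if (frameworkId === "XAML") {
    return "XAML app";
  }
  if (frameworkId !== "InternetExplorer") {
    return "unknown";
  }
  // For HTML apps, the end of the ProviderDescription hints at the Windows version.
  const desc = providerDescription || "";
  if (desc.includes("edgehtml.dll")) {
    return "HTML app, Windows 10";
  }
  if (desc.includes("MSAA Proxy")) {
    return "HTML app, probably Windows 8.1";
  }
  return "HTML app, Windows version unknown";
}
```

Checking FrameworkId first matters because “MSAA Proxy” can show up for UI that isn’t an HTML Store app at all.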

The second interesting thing I noticed while experimenting is what happens when I use a click event handler with my div, rather than a pointerdown event handler. When I use a click event handler, the UI framework might take action to make the UI programmatically invokable through UIA. So say I change my HTML and JS to be:

```html
<div id="buttonNext">-></div>
```

```javascript
buttonNext.addEventListener("click", onclickNext, false);
```

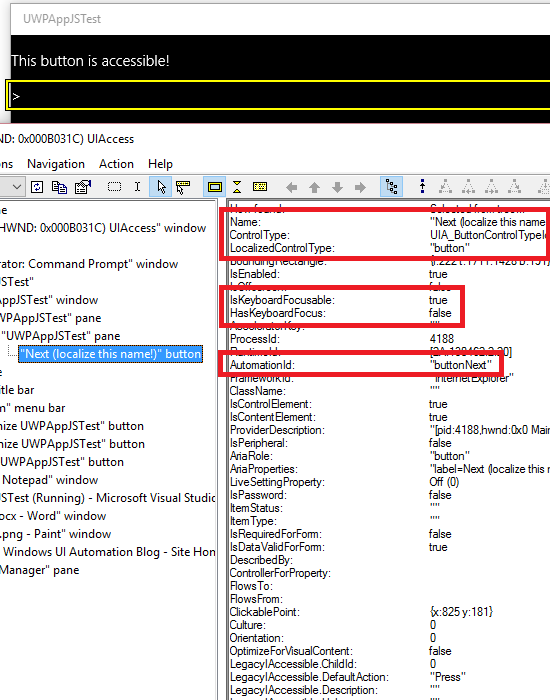

Now when I point Inspect to the UI, I find an element of type “group” being exposed through the Control view of the UIA tree, and Inspect tells me that it supports the UIA Invoke pattern. So the UI claims it can be programmatically invoked.

Figure 2: Inspect showing the div is now being exposed as a “group” in the Control view of the UIA tree.

What’s more, I can use Inspect to programmatically invoke the element, and I find my click event handler does get called in response. The XAML UI framework also sometimes adds support for the UIA Invoke pattern to elements when a click handler is added. I always use click event handlers instead of pointer event handlers because of this. And for this test, I’ll leave the click event handler in place. (Support for the Invoke pattern is essential if a customer using Narrator is to be able to invoke the element through touch input.)

Now, even though the div is represented in the UIA tree, the UI is still far from accessible. For example, even if I could get Narrator to pay attention to the element now, when the string “->” is passed to the text-to-speech (TTS) engine on my machine, the TTS engine won’t say anything. So let’s get back to the steps listed at MSDN on how to make a div accessible.
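One way to catch the “->” problem early is a lint-style check that flags an element whose visible text contains no letters or digits and which has no aria-label. This is a hypothetical helper (needsAccessibleName isn’t part of any framework), and it’s deliberately rough: a close button showing only “X” passes the check even though it still deserves a better name.

```javascript
// Hypothetical check: true if the element probably needs an accessible name
// because its visible text has no letters or digits and it has no aria-label.
// In this post's test, the TTS engine said nothing at all for "->".
function needsAccessibleName(visibleText, ariaLabel) {
  const hasLabel = typeof ariaLabel === "string" && ariaLabel.trim() !== "";
  if (hasLabel) {
    return false;
  }
  // \p{L} matches any letter and \p{N} any number, in any script.
  return !/[\p{L}\p{N}]/u.test(visibleText);
}
```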

From MSDN:

• Set an accessible name for the div element.

• Set the role attribute to the corresponding interactive ARIA role, such as “button”.

• Set the tabindex attribute to include the element in the tab order.

• Add keyboard event handlers to support keyboard activation; that is, the keyboard equivalent of an onclick event handler.

Based on the above points, my updated HTML and JS become:

```html
<div id="buttonNext"
     aria-label="Next (localize this name!)"
     role="button"
     tabindex="0">-></div>
```

```javascript
buttonNext.addEventListener("click", onclickNext, false);
buttonNext.addEventListener("keydown", onkeydownNext, false);
```
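The bodies of onclickNext and onkeydownNext aren’t shown here. A minimal sketch of what they might look like follows, assuming Enter and Space are the keys that should activate the button; showNextItem and currentItem are hypothetical stand-ins for the app’s own “Next” logic.

```javascript
// Hypothetical stand-in for whatever "Next" does in the app.
let currentItem = 0;
function showNextItem() {
  currentItem += 1;
}

// Mouse and touch reach this handler, and in the tests in this post so did
// Inspect's UIA Invoke and a Narrator double tap.
function onclickNext(evt) {
  showNextItem();
}

// Keyboard equivalent of the click handler: Enter or Space activates.
function onkeydownNext(evt) {
  // Current browsers report " " for Space; older engines such as IE11
  // report "Spacebar", so accept both.
  const isActivationKey =
    evt.key === "Enter" || evt.key === " " || evt.key === "Spacebar";
  if (!isActivationKey) {
    return; // leave Tab and every other key alone
  }
  evt.preventDefault(); // stop Space from also scrolling the page
  onclickNext(evt);
}
```

Having the keydown handler forward to the click handler keeps the “Next” logic in one place, whatever the input method.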

And now when I run the app, I can use the Tab key to move keyboard focus to the button, and use the keyboard to invoke it. So thanks to the use of the tabindex markup, the element seems to be keyboard accessible now.
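The rule at work here, that tabindex="0" puts a div into the Tab order while elements such as button are there already, can be sketched as a simplified function. isInTabOrder is a hypothetical helper working on plain { tagName, attributes } objects rather than live DOM elements, and it ignores cases such as disabled, hidden or contenteditable elements.

```javascript
// Simplified, hypothetical check of whether an element is in the sequential
// Tab order. Not a full implementation of the HTML focus rules.
const NATIVELY_FOCUSABLE = new Set(["button", "input", "select", "textarea"]);

function isInTabOrder(element) {
  const attrs = element.attributes || {};
  if ("tabindex" in attrs) {
    const value = Number.parseInt(attrs.tabindex, 10);
    if (!Number.isNaN(value)) {
      // tabindex="0" or higher puts it in the order; a negative value takes it out.
      return value >= 0;
    }
    // An unparseable tabindex falls through to the element's default behavior.
  }
  const tag = element.tagName.toLowerCase();
  if (tag === "a") {
    return "href" in attrs; // a link without an href isn't focusable
  }
  return NATIVELY_FOCUSABLE.has(tag);
}
```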

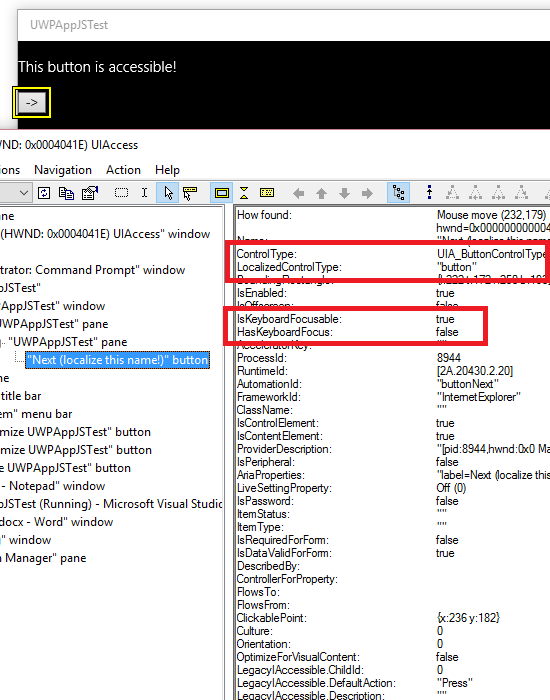

I can use Inspect to learn the full set of useful properties that the element is now exposing, such as:

- Name: “Next (localize this name!)”

- Control type: UIA_ButtonControlTypeId

- IsKeyboardFocusable: true

- AutomationId: buttonNext

- IsInvokePatternAvailable: true

Figure 3: Inspect showing many useful UIA properties being exposed by the UI.

I just put all this to the test by starting Narrator on the Surface Pro 2 I’m using to write this post. I could tab to the element, and when keyboard focus moved to it I heard Narrator announce “Next, localize this name, button”. What’s more, I could use swipe gestures to move Narrator to the element, or just explore the screen through touch, and Narrator said “Next, localize this name, button, double tap to activate”. And indeed, if I then double tap on the screen, my click event handler gets called. This is excellent news, as it means my element can now be invoked through keyboard, mouse, touch and also programmatically.

While the above talks about how to make an existing div accessible, this isn’t exactly the HTML I’d prefer to ship. Rather, if I have UI whose purpose in life is to be a button, I’d declare it to be a button. That way I’m already conveying some meaning about the UI, and I don’t have to make some UI that’s really not a button appear to be one.

So if I use the “button” HTML below, I’m implicitly saying that I want the element to be keyboard accessible and its control type to be exposed as a button. I still have to assign my helpful, concise and localized name to the button, which seems fair enough given that the framework can’t know how I want my button, which shows “->” visually, to be announced.

```html
<button id="buttonNext" aria-label="Next (localize this name!)">-></button>
```
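For comparison with the div version, here’s a sketch that builds the same button from script. createNextButton is a hypothetical helper that takes the document (or any object with a compatible createElement) as a parameter. Because browsers fire “click” when a focused button is activated with Enter or Space, the sketch registers no keydown handler.

```javascript
// Hypothetical helper: create the native-button version of "Next".
function createNextButton(doc, accessibleName, onActivate) {
  const button = doc.createElement("button");
  button.id = "buttonNext";                          // surfaces as the AutomationId
  button.textContent = "->";                         // what sighted users see
  button.setAttribute("aria-label", accessibleName); // the name exposed through UIA
  // One click handler covers mouse, touch and keyboard activation of a button.
  button.addEventListener("click", onActivate, false);
  return button;
}
```

In a page, something like document.body.appendChild(createNextButton(document, "Next", onclickNext)) would add it, with the name localized as before.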

The screenshot below shows that by using the button markup, I get multiple button-appropriate UIA properties for free. These include the control type and keyboard-related properties. (While the use of “button” brings in some default styling for a button, I could override that with my own styling if I wanted to.)

Figure 4: Inspect showing my button has button-appropriate properties.

Summary

Please do consider how your use of divs might be impacting the accessibility of your HTML app’s UI. It’s possible that potential customers who interact with their devices differently from the way you work at yours are blocked from leveraging all your app’s cool features. The Inspect SDK tool can really help you learn how your UI is being exposed programmatically.

By default, I’d use HTML controls, like a “<button>”, to convey as much meaning as possible about my UI. By doing that, the UI will be accessible by default in some very important ways. This reduces the work on my plate to polish up the accessible experience, so that all my customers can access my features in as efficient a way as possible.

If for some reason I have existing inaccessible div UI, and it’s not practical for me to replace it with more accessible HTML controls, I can add markup to address certain accessibility issues (as described in “Guidelines for designing accessible apps”).

And here’s something for you to ponder: if you were asked by someone whose only form of input at their device is a foot switch, “Can I use your app?”...

What would you tell them?

Comments

Anonymous

January 20, 2016

I often see the advice to add the "keydown" listener to react to keyboard. However, wouldn't "keyup" be more appropriate, as that's when a traditional button also fires? (He wrote, before pushing the fake "Post" button here on the MSDN blog which is just an "a" anchor without an actual href, so not keyboard-focusable or anything...)

Anonymous

January 20, 2016

Oh, I should clarify that by "that's when a traditional button also fires", I was referring to the use of the SPACE bar (which only fires the "click" event once the space bar is released again); with ENTER/RETURN it seems that a button is activated right away when the key is down.

Anonymous

January 24, 2016

(Splitting this into two replies, given that apparently a single reply needs to be no more than 3072 characters.)

Hi Patrick,

This is a great point, thanks for raising it. It's really easy for devs to add a keydown event handler, without considering whether a keyup handler would be more appropriate. And that's exactly what I did. As you say, sometimes it really is important which event handler is used.

I know in the past I've used one of these handlers, and then had to change it later once I learned that what I used was not the right choice. I forget all the reasons why I had to do that. I think one time might have related to the fact that I needed to respond to certain key combinations, and reacting to a keydown meant that I couldn't always detect a key combination using that key, (depending on the order that the keys were pressed). I think there were other reasons for me changing my app to detect keyups instead of keydowns, including trying to be consistent with other UI in the app. I do want my customers to feel they're getting a consistent behavior when using the keyboard.

Depending on the UI framework I'm using, I'd need to do some experiments to refresh myself on exactly how controls react to different keys. It sounds like you've encountered rather interesting behavior with the Spacebar key triggering action on keyup, and Enter triggering action on keydown. I wouldn't really have thought this was expected, but as a test I just did Win+R to invoke the Run dlg, tabbed to the Cancel button, and invoked that button through the Spacebar and Enter. It looks like Spacebar triggers the action on keyup and Enter on keydown. So maybe there's some history around this behavior that I'm not aware of.

As it happens, I also once had to change a keyup handler to a keydown handler. I discovered after I'd built my UI, I needed to support press & hold for autorepeat. Reacting to keyup won't allow press & hold, but my keydown handler got repeatedly called as the key stayed depressed, and that’s just what I needed.

Anonymous

January 24, 2016

I've seen different customers have different needs around press & hold. One person used an on-screen keyboard to type into Notepad, and that text was then read by whoever he was having a conversation with. Once the text had been read, the person would then press & hold on the Backspace key on the on-screen keyboard to erase the text, and he'd then type more text. So in this conversational situation, press & hold worked well for him.

Another person I met used a switch on her wheelchair, and the switch would simulate a left mouse button click wherever the mouse pointer was on the screen. (I know I'm now moving from the topic of key down/up to pointer down/up, but I think your point on considering events applies to both keys and pointers.) The person would press the switch to click a key shown on an on-screen keyboard that she was using, and so enter text in whatever the target app was. It was not practical for her to release the switch quickly, and so effectively a mouse pointer was down on the on-screen keyboard key for an extended period, and the on-screen keyboard would start auto-repeating the text. This meant the target app got multiple characters instead of a single character, and that was not the desired result at all. So in this case, the technology had not accounted for the customer's specific way of interacting with her device. Instead the technology should have allowed a single character to be sent to the target app, regardless of how long the switch was depressed.

Interestingly, this topic also relates to an experimental app I built a while back. I wanted to explore a number of ways that customers with mobility impairments and speech impairments could interact with an HTML Windows Store app I'd built for presenting. For example, for customers who can't physically speak and only have limited mobility in their hands, and they're presenting to an audience. I know at some point during this app's development, I intentionally had the app respond to a pointerdown rather than pointerup, because I wanted to support customers who found it a challenge to keep the pointer at roughly the same place between the pointer down and pointer up. So I had the app take action when a button was hit on the pointer down, regardless of where the pointer was when it went up. For anyone interested in this experimental Windows Store app, details are at www.herbi.org/.../8WaySpeaker.htm.

One day I'd like to explore having a phone be a remote control for the app too. So say my customer has very limited hand movement, and wants to present. A tablet could be mounted at the front of the wheelchair, and a phone positioned beneath the customer's hand. The customer would make small touch input at the phone, and the pointer would move to anywhere on the tablet screen. This seems worth exploring at some point.

Anyway, thanks again Patrick for raising this topic. I'll try not to use keydown or pointerdown in the future without commenting on whether those are really the event handlers that should be used.

Guy
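The key timing discussed in the comments above (Space acting on keyup, Enter acting on keydown, and keydown firing repeatedly during press & hold) can be sketched for a div-based button as follows. createKeyboardActivation and its allowAutorepeat option are hypothetical names; the repeat property belongs to the standard KeyboardEvent interface, but it’s worth checking support in whichever engine you target.

```javascript
// Hypothetical helper: keyboard activation for a div with role="button".
// Enter acts on keydown; Space acts on keyup, and only if the Space keydown
// also reached this element.
function createKeyboardActivation(onActivate, { allowAutorepeat = false } = {}) {
  let spaceIsDown = false;
  // Older engines such as IE11 report "Spacebar" instead of " ".
  const isSpace = (key) => key === " " || key === "Spacebar";

  return {
    onKeyDown(evt) {
      if (evt.key === "Enter") {
        // Holding a key fires repeated keydown events with evt.repeat true.
        // Act on those only if press & hold should repeat the action.
        if (!evt.repeat || allowAutorepeat) {
          onActivate(evt);
        }
      } else if (isSpace(evt.key)) {
        evt.preventDefault(); // keep Space from scrolling the page
        spaceIsDown = true;   // act later, on keyup
      }
    },
    onKeyUp(evt) {
      // keyup fires once per press, so Space can't auto-repeat here.
      if (isSpace(evt.key) && spaceIsDown) {
        spaceIsDown = false;
        onActivate(evt);
      }
    },
  };
}
```

The returned functions don’t rely on this, so they can be registered directly, for example kb = createKeyboardActivation(onclickNext) followed by buttonNext.addEventListener("keydown", kb.onKeyDown) and buttonNext.addEventListener("keyup", kb.onKeyUp).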