SDLC – Software Development Lifecycle … testing, the moment of truth (part 8 of many)

Continued from SDLC – Software Development Lifecycle … designing the blueprint (part 7 of many).

Overview

Testing is the big, often ignored unknown whose purpose is to uncover design and construction flaws in a solution. It should be obvious that the earlier we start testing, the better the testing strategy and the better the test coverage … the sooner we can reveal the flaws and address them. A flaw uncovered while the solution is still on the whiteboard or in early construction is reasonably cheap and easy to resolve … a flaw uncovered when end-users are working with the solution in pre-production or, worst case, production is the kind all of us have nightmares about.

So what is a good approach?

- Firstly, we need a testing strategy, which:

- Includes a technical review of the solution … evaluate what has been designed and is being built before the rocket launches.

- Involves both the solution builders (developers) and qualified, experienced testers. Developers can never, ever test their own code effectively … the conflict of interest and the knowledge of back doors are simply too great. However, developers must be involved in testing, as it is not acceptable to throw a missile over the wall for testing … in other words, the developer is responsible for shipping working code, which makes certain unit, system and integration testing essential. The developer can, however, never be the final voter on whether we are building the right product correctly.

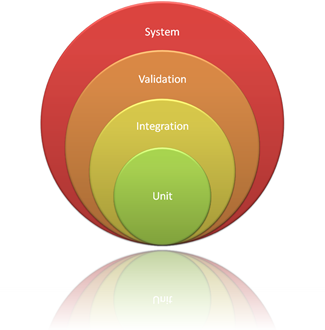

- Starts from the inside, the core of the planet, and works its way outward to the outer layers of the solution. The big bang test approach simply does not work.

- Includes a definition of acceptable quality (the zero quality bar) which, once achieved, means that we can ship. This goal must be defined at the beginning of the project, not when the going gets tough. If we have not reached it, we continue the cycle of testing, development, testing … until we do. Lack of time, bandwidth or budget is not an excuse to reduce quality or change the agreed testing strategy.

- Includes a plan for continuous improvement, as none of us are perfect developers or testers from the word go. We need to improve skills, gain experience and refine the strategy to “evolve”.

- Secondly, we need a testing battle plan, which:

- We must define the types of testing, the test objectives, the quality bar, and when and by whom each type of testing will be performed, at the beginning of the project.

- We must have a well-defined process for documenting test scenarios and test cases and for raising issues found during tests, one that relates the user requirement to the test case, to the identified issue and to the eventual code fix. There is nothing more frustrating than having to spend weeks analysing which code fix resolved a bug or, worse, trying to understand why an issue recurs with every new build.

- We must ensure that “testability” is a core principle throughout the lifetime of our solution.

- We must have a plan, which typically defines:

- Testing phases, i.e. unit, integration, system, performance, stress and user acceptance testing.

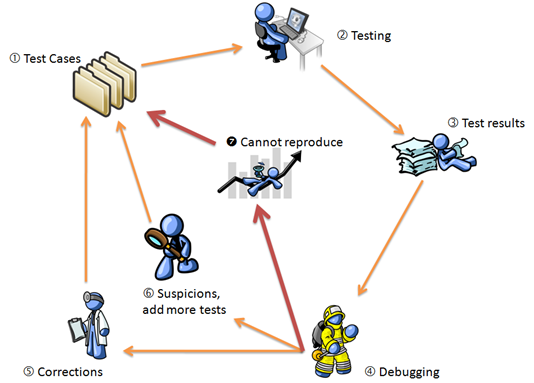

- Testing process, i.e. who tests, how they test and how we report. The basic process is depicted in the following illustration, whereby the route from 4 to 7 is often the most frustrating one and the greatest waste of time and budget during the test phases, caused by developers being unable to reproduce an error due to a lack of information, environment, circumstances or simply a lack of quality mindset.

With Visual Studio Team System 2010, the new test environment, the support for a comprehensive test process and the integrated test capture and reporting tools all but annihilate the previous problem, introducing a healthier process which focuses on the 4-6-1 or 4-5-1 routes in the above diagram. See the reference to VSTS 2010 later on and explore the new features!!!
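The traceability the battle plan asks for, from user requirement to test case to issue to code fix, can be pictured with a small data model. This is only a sketch in Python and not how TFS or VSTS store work items; `CaseRecord`, `IssueRecord`, `trace_fix` and all of the identifiers in the sample data are hypothetical names made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CaseRecord:
    case_id: str
    requirement_id: str  # the user requirement this test case verifies


@dataclass
class IssueRecord:
    issue_id: str
    case_id: str            # the test case that exposed the issue
    repro_steps: list[str]  # what a developer needs to reproduce it
    environment: str        # build and machine the tester used
    fix_ids: list[str] = field(default_factory=list)  # code changes that resolved it


def trace_fix(fix_id, issues, cases):
    """Return (requirement_id, case_id, issue_id) for every issue a fix resolved."""
    by_case = {case.case_id: case for case in cases}
    return [
        (by_case[issue.case_id].requirement_id, issue.case_id, issue.issue_id)
        for issue in issues
        if fix_id in issue.fix_ids
    ]


# Hypothetical sample data: one requirement, one test case, one issue, one fix.
cases = [CaseRecord("TC-7", "REQ-3")]
issues = [
    IssueRecord(
        issue_id="BUG-42",
        case_id="TC-7",
        repro_steps=["open the order form", "submit an empty quantity"],
        environment="build 1.0.118, test lab VM",
        fix_ids=["CHG-901"],
    )
]

print(trace_fix("CHG-901", issues, cases))  # [('REQ-3', 'TC-7', 'BUG-42')]
```

With links like these in place, the question of which code fix resolved which bug becomes a lookup instead of weeks of analysis, and the reproduction steps and environment travel with the issue to the developer.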

Looking at this blog post, which is long and potentially tedious to absorb, testing can be, and often is, perceived as laborious, time wasting and a “we will do it if all else fails” resolution. It is unfortunately not a first aid box or a fire brigade type service which we call when a problem occurs; it is a strategy that we must employ from day one and pursue consistently. While it is a costly part of a project, for which support and funding are often difficult to obtain, the following two “true stories” should give any stakeholder enough to think about:

- Mars Climate Orbiter, quote from https://www.cnn.com/TECH/space/9909/30/mars.metric/: “NASA lost a $125 million Mars orbiter because one engineering team used metric units while another used English units for a key spacecraft operation”.

- Ariane 5 Flight 501, quote from https://en.wikipedia.org/wiki/Ariane_5_Flight_501: “Flight 501, which took place on June 4, 1996, was the first, and unsuccessful, test flight of the European Ariane 5 expendable launch system. Due to a malfunction in the control software, the rocket veered off its flight path 37 seconds after launch and was destroyed by its automated self-destruct system when high aerodynamic forces caused the core of the vehicle to disintegrate. It is one of the most infamous computer bugs in history. The breakup caused the loss of four Cluster mission spacecraft, resulting in a loss of more than US$370 million.” Are the stakeholders and the developers responsible for the 64-bit floating point to 16-bit integer conversion error still in business?

These would have been such simple test cases, which would have identified the simple errors before the spacecraft blew up into worthless fireballs!
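To show how small such test cases can be, here is a minimal sketch in Python. It is not the flight software of either mission; `to_int16` and `lbf_s_to_n_s` are hypothetical helpers written for this post, and the pound-force to newton factor is used purely as one example of an English to metric conversion.

```python
INT16_MIN, INT16_MAX = -32768, 32767
NEWTONS_PER_POUND_FORCE = 4.4482216152605


def to_int16(value: float) -> int:
    """Convert a float to a 16-bit signed integer, refusing out-of-range values."""
    result = int(value)  # truncates toward zero
    if not INT16_MIN <= result <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a 16-bit signed integer")
    return result


def lbf_s_to_n_s(impulse_lbf_s: float) -> float:
    """Convert an impulse from pound-force seconds to newton seconds."""
    return impulse_lbf_s * NEWTONS_PER_POUND_FORCE


def test_value_inside_range_converts():
    assert to_int16(32767.9) == 32767


def test_value_outside_range_is_rejected():
    try:
        to_int16(40000.0)
    except OverflowError:
        return
    raise AssertionError("expected an OverflowError for 40000.0")


def test_units_are_converted_not_assumed():
    assert abs(lbf_s_to_n_s(1.0) - 4.4482216152605) < 1e-12


# Call the tests directly; a test runner such as pytest would also discover them.
test_value_inside_range_converts()
test_value_outside_range_is_rejected()
test_units_are_converted_not_assumed()
```

The point is not the helpers themselves but that each test pins down an assumption, the value range in one case and the unit of measure in the other, which would otherwise stay implicit until launch day.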

What are some of the common test concepts and terminologies we will hear in the testing halls?

A summary for those that do not have a memory like an elephant … aaarrgggnnnnnnnnnnn… those acronyms:

| Acronym | Term | Description |
| --- | --- | --- |
| | Dogfooding | Eating your own dog food, in other words if you are building design or synchronisation tools, use them internally to do designs and to synchronise data. If you do not like your own solution, how can you expect the user to? |
| | Alpha | An alpha release is usually an internal release, available to end-users at the development site for controlled validation testing. It is great for validating understanding and observing the end-user. |
| | Beta | A beta release is usually made available to end-users at their own location, away from development sites. It must be considered a live solution, as users could inadvertently implement and use it … and always remember that first impressions last. If the beta is a disastrous release, the production release can be as good as it wants to be … it will be treated with suspicion and a lack of passion. |
| | Black box testing | Also known as behavioural, functional or surface structure testing, black box testing focuses on the features delivered by the solution, without a view or understanding of the internals. It is primarily focused on finding incorrect or missing features and errors in interfaces, external data exchanges, performance, state (initialisation, termination) and error handling, without knowing anything about the internal implementation. Black box testing is complementary to white box testing and is comparable to the external health check done by a doctor before the internal x-ray and other probing checks of a human. |
| BVA | Boundary value analysis | Testing technique that focuses on the data exchanged at the “boundary” of classes, testing data ranges, maximum and minimum values, and valid and invalid data. See orthogonal array testing. |
| DRT | Disaster recovery testing | A specialised test that ensures that an organisation can recover from a complete system disaster, necessitating a reinstall and recovery from scratch. |
| CST | Client server testing | Client server testing focuses on the multi-layer, client-server solution architecture, testing external interfaces and services, applications, servers, databases, transactions and network environments. |
| IT | Integration testing | Design validation focused testing, which validates solution deliverables against requirements. It is especially important when testing interfaces which are shared and are the basis of some or all of the building blocks. |
| ITG | Independent test group | Introduces specialised testing skills and minimises conflict of interest. |
| OAT | Orthogonal array testing | Orthogonal array testing is used when the input data domain is too large to be tested exhaustively, such as a method that receives five arguments with three possible values each … this would result in 3^5 (243) test cases, which is more than most test environments can achieve. Orthogonal array testing analyses the test data and selects a small, balanced subset of combinations that still provides good test coverage; an L9 orthogonal array of nine test cases, for example, covers every pair of values for up to four three-valued arguments. |
| PT | Performance testing | Performance testing verifies that user performance requirements are met. If the user specified a 5 second response time, the solution performance has to be validated and tuned to achieve this goal and more … because the user will get used to the 5 second latency and regard it as slow. |
| RG | Regression testing | Concerned with re-running the integration tests, which potentially (likely) include unit tests, whenever changes are made to the solution, to ensure that unintended effects do not ripple through the system … the butterfly effect! Consider a deep hierarchy of objects … a developer decides to make a ‘minor’ change to the mother of all base classes, tests the base class and walks away. That “butterfly” flapping of wings could ripple through the rest of the inheritance tree (unintentionally, of course) and cause unexpected failures in the outer layers of the solution. |
| ST1 | System testing | The final integration testing of software, hardware, humanoids … before the missile is launched. In the world of NASA this could be the countdown, doing a complete system and environment test of all pieces of the puzzle. |
| ST2 | Security testing | Security testing verifies that the solution and supporting environment are safe from malicious or unintentional security breaches from the outside and inside. |
| ST3 | Stress testing | The single instance (developer tests his stuff) testing always works and always delivers optimal latency and performance … but will it scale to the 1,000,000 expected concurrent end-users? Stress testing tests the solution's stability under ‘stressful’ situations, such as low memory, low disk or low processor bandwidth availability. |
| TC | Test case | Definition of a test scenario, which is unique and has a high probability of finding an error. Test cases can be created from use cases, state diagrams, code analysis, etc. |
| TDD | Test Driven Development / Test Driven Design | Understand your business requirements, develop a test strategy that tests your understanding and then, and only then, build the solution until all tests succeed. |
| UT | Unit testing | Unit of work focused testing, at the core of the solution, typically done by the developer and through automated testing. Unit tests focus on topics such as initialisation, logic and error handling at a code level. |
| VT | Validation testing | Also known as user acceptance testing, validation testing validates the solution against test requirements, which are based on business requirements. |
| | White box testing | Also known as glass-box testing or deep structure testing, white box testing is focused on the internals of the solution, validating all logic paths, decisions, loops and data structures. Cyclomatic complexity, a metric that defines the complexity of code by analysing the number of independent logic paths, is one of the recipes used to define the white box testing plan. It is important that the test plan focuses on condition, data flow and loop testing, focusing on the internal logic. See “VSTS Code Metrics – Cyclomatic complexity of 24359, yet no warning” and “Code Metrics ... unraveling what it all means” for more information on code metrics, including cyclomatic complexity. White box testing is complementary to black box testing and is comparable to the internal x-ray and other probing done by a doctor after an external health check of a human. |
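
As a minimal sketch of the boundary value analysis entry in the glossary above, the following Python snippet probes an inclusive integer range just below, on and just above each boundary. Both `boundary_values` and `is_valid_percentage` are hypothetical names invented for this illustration; the latter stands in for whatever unit is actually under test.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return the classic boundary probes for an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def is_valid_percentage(value: int) -> bool:
    """Hypothetical unit under test: accepts whole percentages from 0 to 100."""
    return 0 <= value <= 100


# Expected outcome for each probe, written down independently of the code under test.
expected = {-1: False, 0: True, 1: True, 99: True, 100: True, 101: False}

for probe in boundary_values(0, 100):
    assert is_valid_percentage(probe) == expected[probe], f"unexpected result for {probe}"
```

Six probes are enough to catch the typical off-by-one mistakes, such as writing `<` where `<=` was intended, without testing all 101 valid values.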

Team Foundation Server (TFS) and Visual Studio Team System (VSTS) in the world of testing

Again we will assume that we are in a VSTS 2010 world, although VSTS 2008 already has a good set of profiling, analysis and testing tools. Take a peek at the previous post Rosario 2010 CTP2 Testing to get an idea of what is coming or, better still, download the latest Community Technology Preview (CTP) as before and have a critical look yourself.

In fact, the Team Foundation Server (TFS) and Visual Studio Team System (VSTS) 2010 test tools and the associated process are giving us what we need to treat testing as a first class citizen, as everybody seems to quote these days, of the application lifecycle management process from beginning to end!

BUT, you need to have a sound testing strategy, understand the tooling, adhere to the test plan and be prepared to stick to your guns on the quality bar, else you may be adding unnecessary noise to your project. It is important to understand the basics, be prepared to stick to the plan and then start polishing the toolset.

Next

Not sure … but there will be more in the SDLC bucket q;-)
