Deploying Your Dockerized Angular Application To Azure Using VSTS - Part 2

In part 1 I demonstrated how to create a VSTS build pipeline that builds an Angular Docker image and deploys it to an Azure Web App for Containers. That approach helped me achieve consistency across development and production environments. However, it required the build server to have every tool needed to build the application (TypeScript, npm dependencies, Node.js, etc.). Installing and maintaining those tools can be a daunting task and, quite frankly, an unnecessary one. In this post I will demonstrate how to remove the need to install the Angular build tools on the build server by using a Docker image that encapsulates all of them instead.

Building the Docker Image

I won't go into the details of creating a Dockerfile, as the topic has been discussed extensively in the community. Instead, I am basing my Dockerfile on this excellent post, which explains each step of the Dockerfile shown below in detail. It is a multi-stage Docker build. The first stage installs the npm packages and builds the Angular application, and the second stage copies only the final assets from the first stage into the final image. I have also added testing steps to the Docker build to ensure that only valid images are deployed to production. Because the Angular unit and end-to-end tests need a browser, I am using Puppeteer to install headless Chrome inside the container.

```dockerfile
# Stage 0, based on Node.js, to build and compile Angular
FROM node:8 as node

# See https://crbug.com/795759
RUN apt-get update && apt-get install -yq libgconf-2-4

# Install latest chrome dev package and fonts to support major
# charsets (Chinese, Japanese, Arabic, Hebrew, Thai and a few others)
# Note: this installs the necessary libs to make the bundled version
# of Chromium that Puppeteer installs work.
RUN apt-get update && apt-get install -y wget --no-install-recommends \
    && wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | apt-key add - \
    && sh -c 'echo "deb [arch=amd64] https://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list' \
    && apt-get update \
    && apt-get install -y google-chrome-unstable fonts-ipafont-gothic fonts-wqy-zenhei fonts-thai-tlwg fonts-kacst ttf-freefont \
      --no-install-recommends \
    && rm -rf /var/lib/apt/lists/* \
    && apt-get purge --auto-remove -y curl \
    && rm -rf /src/*.deb

# It's a good idea to use dumb-init to help prevent zombie chrome processes.
# ADD https://github.com/Yelp/dumb-init/releases/download/v1.2.0/dumb-init_1.2.0_amd64 /usr/local/bin/dumb-init
# RUN chmod +x /usr/local/bin/dumb-init

# Uncomment to skip the chromium download when installing puppeteer.
# If you do, you'll need to launch puppeteer with:
#     puppeteer.launch({executablePath: 'google-chrome-unstable'})
# ENV PUPPETEER_SKIP_CHROMIUM_DOWNLOAD true

WORKDIR /app

# Copy package.json on its own first so that the npm install layer
# stays cached until the dependencies change
COPY package.json /app/
RUN npm install

# Copy the rest of the source code
COPY ./ /app/

# Run unit tests to ensure that the image being built is valid
# (assumes the "test" script runs once in a headless browser; in watch mode this step would never finish)
RUN npm run test

# Run end-to-end tests to ensure that the image being built is valid
RUN npm run e2e

# Build the Angular application with the production configuration by default
ARG conf=production
RUN npm run build -- --configuration $conf

# Stage 1, based on Nginx, to have only the compiled app, ready for production with Nginx
FROM nginx:alpine
COPY --from=node /app/dist/ /usr/share/nginx/html
COPY ./nginx-custom.conf /etc/nginx/conf.d/default.conf
```

Keep in mind that the order of the Docker instructions shown above matters, because it lets us take advantage of Docker's layer caching. We copy package.json into the container before the rest of the source code because we want to install the npm packages the first time, but not every time the source code changes. The next time we change our code, Docker will reuse the cached layers with everything installed (because package.json hasn't changed) and will only re-run the steps from the source copy onward, namely the tests and the build. I am using a private build agent on VSTS so that the cached Docker layers are not discarded each time the image is built, which would otherwise increase build times.

I am using a multi-stage Docker build because the final image is only a fraction of the size of an image that includes all the build tools and intermediate artifacts. In addition, I am relying on another great Docker feature: the image build fails whenever an instruction returns a non-zero exit code. Basically, when a unit test or end-to-end test fails, so does the build, as shown here:

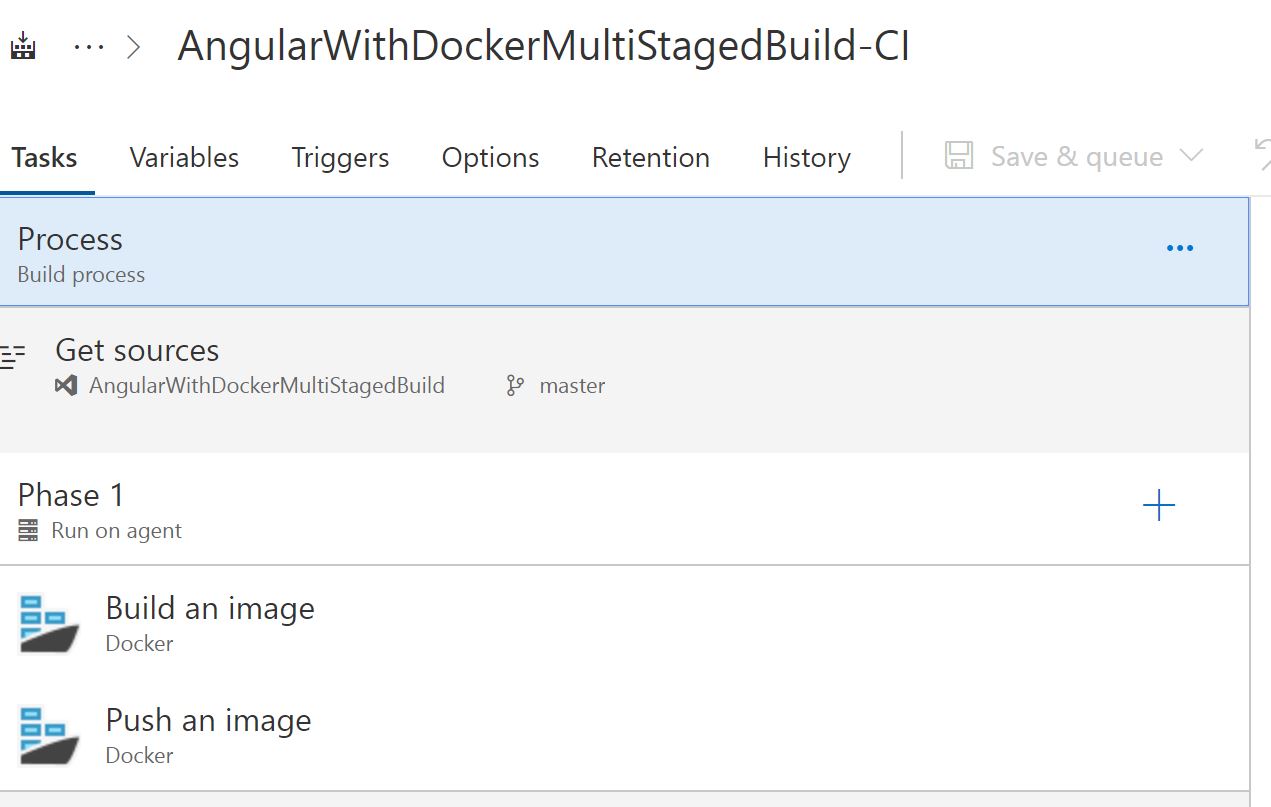
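To make the point about instruction order more concrete, here is a toy Python model of the build cache. It is a simplification, not how Docker actually computes its cache keys: each step gets a key derived from the previous step's key, the instruction text and, for COPY steps, the contents of the copied files, and a step re-runs only when its key has not been seen before. The file names and the `naive_steps` ordering are hypothetical and exist only for comparison.

```python
import hashlib
from typing import Dict, List, Optional, Set, Tuple

# A step is (instruction text, files it copies into the image, or None for RUN steps).
Step = Tuple[str, Optional[List[str]]]


def layer_keys(steps: List[Step], files: Dict[str, str]) -> List[str]:
    """Compute a chained cache key per step (simplified model, not Docker's real algorithm).

    Each key depends on the previous key, the instruction text and, for COPY steps,
    the contents of the copied files, so a change invalidates that step and all later ones.
    """
    keys: List[str] = []
    parent = ""
    for instruction, sources in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode())
        digest.update(instruction.encode())
        for name in sorted(sources or []):
            digest.update(name.encode())
            digest.update(files[name].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def simulate_build(steps: List[Step], files: Dict[str, str], cache: Set[str]) -> List[str]:
    """Return the instructions that miss the cache (and would re-run), then record them."""
    rebuilt: List[str] = []
    for (instruction, _), key in zip(steps, layer_keys(steps, files)):
        if key not in cache:
            rebuilt.append(instruction)
            cache.add(key)
    return rebuilt


def ordered_steps(files: Dict[str, str]) -> List[Step]:
    """Mirror the first stage of the Dockerfile: package.json is copied before the sources."""
    return [
        ("COPY package.json /app/", ["package.json"]),
        ("RUN npm install", None),
        ("COPY ./ /app/", list(files)),
        ("RUN npm run test", None),
        ("RUN npm run e2e", None),
        ("RUN npm run build -- --configuration $conf", None),
    ]


def naive_steps(files: Dict[str, str]) -> List[Step]:
    """Hypothetical alternative that copies every file before running npm install."""
    return [
        ("COPY ./ /app/", list(files)),
        ("RUN npm install", None),
        ("RUN npm run test", None),
        ("RUN npm run e2e", None),
        ("RUN npm run build -- --configuration $conf", None),
    ]


files = {"package.json": '{"dependencies": {}}', "src/app.component.ts": "v1"}
cache: Set[str] = set()
simulate_build(ordered_steps(files), files, cache)  # first build: every step runs
files["src/app.component.ts"] = "v2"                 # edit source code only
print(simulate_build(ordered_steps(files), files, cache))
# ['COPY ./ /app/', 'RUN npm run test', 'RUN npm run e2e', 'RUN npm run build -- --configuration $conf']
```

With the naive ordering, editing a single source file also invalidates the `RUN npm install` layer, which is exactly the cost the Dockerfile avoids by copying package.json first.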

Building a CI/CD Pipeline on VSTS

Compared to the CI/CD pipeline that I built in part 1, you will notice that I no longer include the npm install and ng build tasks, since those steps are now carried out inside the Docker image.
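With those tasks gone, the build step essentially boils down to a `docker build` call. The Python helper below is a rough sketch of that step for reproducing it locally; it is not the VSTS Docker task itself. It passes a value for the Dockerfile's `conf` build argument through `--build-arg` and returns Docker's exit code, which is non-zero when any `RUN` step, including the unit and end-to-end tests, fails. The image tag and the `staging` configuration name are hypothetical placeholders.

```python
import subprocess
from typing import List


def docker_build_command(tag: str, conf: str = "production", context: str = ".") -> List[str]:
    """Assemble the docker CLI arguments for building the Angular image.

    `conf` is forwarded to the Dockerfile's `ARG conf`, which selects the
    Angular build configuration (production by default).
    """
    return ["docker", "build", "--build-arg", f"conf={conf}", "-t", tag, context]


def build_image(tag: str, conf: str = "production", context: str = ".") -> int:
    """Run docker build and return its exit code.

    A failing RUN step (for example `npm run test` or `npm run e2e`) makes
    docker build exit with a non-zero code, which a CI step can propagate.
    """
    completed = subprocess.run(docker_build_command(tag, conf, context))
    return completed.returncode


# Example usage (requires Docker; the tag and "staging" configuration are hypothetical):
#   exit_code = build_image("angular-app:staging", conf="staging")
#   if exit_code != 0:
#       raise SystemExit(exit_code)
```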

The remaining steps, creating an Azure Container Registry and building the release pipeline that deploys to the Web App for Containers service, are exactly the same as demonstrated in part 1.

There you have it: the next time you check your code into VSTS, you won't have to worry about installing and maintaining the various build tools on your VSTS build agent.