Build analytics on social media streams using Power BI and Logic Apps

By Phil Beaumont, Data and AI Solution Architect at Microsoft

There are numerous technologies that can be utilised to stream, analyse and visualise tweets on Azure, including traditional Big Data technologies such as Hadoop, Kafka, Spark, Storm and NoSQL databases. However, a number of PaaS services can achieve the same result with much less coding, configuration and setup effort, and with easier maintenance and better HA/DR capabilities.

One simple pattern for Twitter analytics is this:

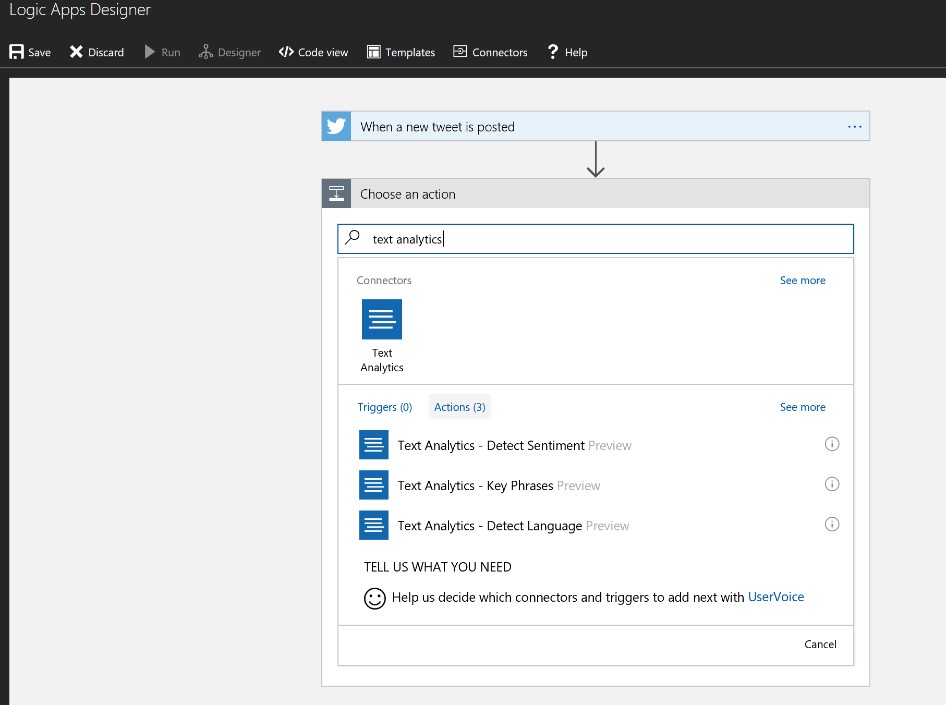

Logic Apps pulls data from Twitter periodically using the built-in Twitter connector. The Cognitive Services text APIs can be called from within the Logic App, the enriched data is inserted into SQL Database, and Power BI is used to generate aggregates, measures and KPIs and to visualise the data. Cognitive Services provides a variety of machine learning services that are extremely useful for social media analytics, such as sentiment analysis, content moderation (fewer blushes if profanity is removed automatically), language translation and intent detection (whether the author is asking a question or making a complaint).

- Logic Apps -> Twitter connector

- Logic Apps -> Cognitive Services

- Logic Apps -> SQL Database

- Power BI -> SQL Database
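To make the flow concrete, here is a minimal Python sketch of what the Logic App does on each polling run: take a batch of tweets, enrich each one with a sentiment score and insert the result into the database. It is not Logic Apps code (a Logic App is built in its designer or as a workflow definition); `score_sentiment` is a hypothetical keyword-based stand-in for the Cognitive Services call, an in-memory SQLite database stands in for SQL Database, and the `tweets` schema with de-duplication on `tweet_id` is an illustrative assumption.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Tweet:
    tweet_id: str
    text: str
    created_at: str  # ISO 8601 timestamp, e.g. "2018-01-01T10:00:00"


# Hypothetical stand-in for the Cognitive Services sentiment call: a simple
# keyword count so the sketch runs offline. The real service uses a trained model.
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "hate", "broken"}


def score_sentiment(text: str) -> float:
    """Return a score in [0, 1], where 0.5 means neutral (no keywords found)."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    pos, neg = len(words & POSITIVE), len(words & NEGATIVE)
    if pos + neg == 0:
        return 0.5
    return pos / (pos + neg)


def create_schema(conn: sqlite3.Connection) -> None:
    # Illustrative schema: tweet_id as primary key lets re-polled tweets be skipped.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS tweets ("
        "tweet_id TEXT PRIMARY KEY, text TEXT, created_at TEXT, sentiment REAL)"
    )


def enrich_and_store(conn: sqlite3.Connection, tweets: list[Tweet]) -> int:
    """Score each tweet, insert it, and return how many new rows were added."""
    before = conn.total_changes
    rows = [(t.tweet_id, t.text, t.created_at, score_sentiment(t.text)) for t in tweets]
    conn.executemany(
        "INSERT OR IGNORE INTO tweets (tweet_id, text, created_at, sentiment) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    return conn.total_changes - before
```

Keying the table on the tweet ID makes re-running an overlapping polling window harmless: tweets that are already stored are skipped rather than duplicated.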

This is an awesome architecture for rapidly prototyping a solution, and it allows business rules and logic to be changed quickly. However, as a production architecture it has weaknesses:

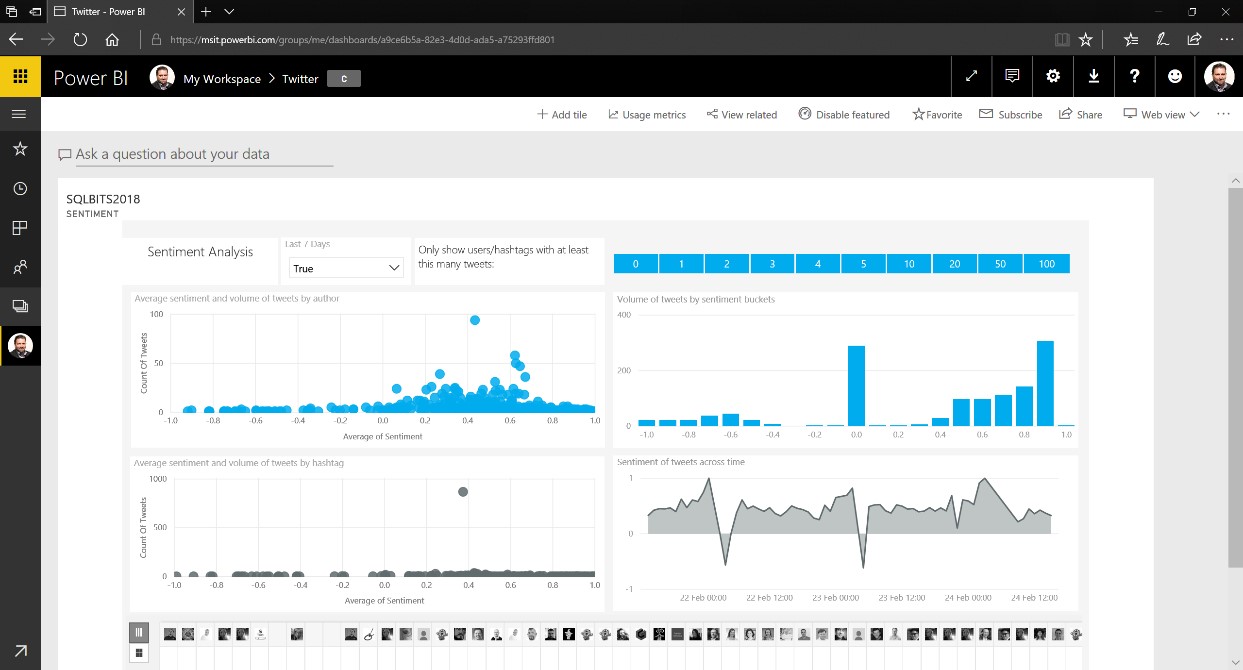

- Generating aggregates in Power BI allows rapid rule changes, but the rules can't be shared across multiple reports and have to be recreated in each one. This raises obvious data governance and quality concerns. These calculations should be done in the database, so that a consistent set of rules is applied across all reports. An example Power BI dashboard is shown below (this is available as a Power BI Solution Template):

- Logic Apps is exposed directly to the Twitter API. While it's possible to add buffering logic and time delays to a Logic App to handle high tweet volumes, other Azure components are better suited to this job, as described below.

- This is hot path analytics only. There’s no provision for cold/batch analytics.
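One way to keep a KPI rule in the database rather than in each report is a view that every Power BI report queries. The sketch below uses SQLite in place of SQL Database so it runs standalone; the `daily_sentiment_kpi` view, its columns and the 0.4 threshold for a "negative" tweet are hypothetical examples, not rules from the original solution.

```python
import sqlite3

SCHEMA = """
CREATE TABLE tweets (
    tweet_id   TEXT PRIMARY KEY,
    created_at TEXT NOT NULL,  -- ISO 8601, e.g. '2018-01-01T10:00:00'
    sentiment  REAL NOT NULL   -- 0 = most negative, 1 = most positive
);

-- The KPI rules live in one place. The 0.4 "negative" threshold is an
-- illustrative assumption.
CREATE VIEW daily_sentiment_kpi AS
SELECT
    substr(created_at, 1, 10) AS day,
    COUNT(*) AS tweet_count,
    AVG(sentiment) AS avg_sentiment,
    SUM(CASE WHEN sentiment < 0.4 THEN 1 ELSE 0 END) * 1.0 / COUNT(*) AS negative_share
FROM tweets
GROUP BY day;
"""


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def report_query(conn: sqlite3.Connection) -> list[tuple]:
    """What each report would run: read the shared view, never re-derive the rules."""
    return conn.execute(
        "SELECT day, tweet_count, avg_sentiment, negative_share "
        "FROM daily_sentiment_kpi ORDER BY day"
    ).fetchall()
```

Changing the threshold then means altering one view definition, and every report that reads from the view picks up the new rule on its next refresh.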

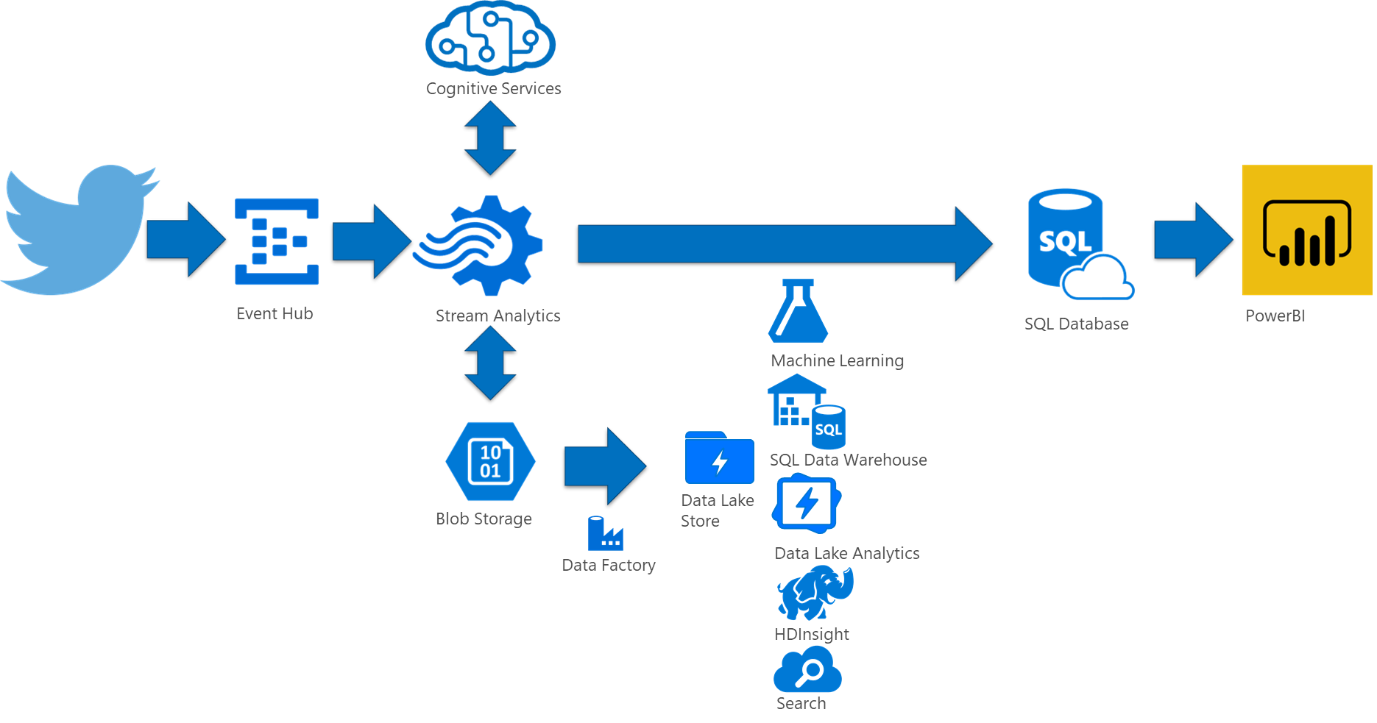

The architecture can be enhanced as per the above, with the addition of Event Hub, Stream Analytics and Blob Storage:

- Event Hub decouples the Twitter connectivity from the rest of the pipeline and acts as a highly scalable buffer for incoming tweets, preventing downstream components from being overloaded. It can stream to multiple outputs, so it could also send a raw feed to Blob Storage, but that feed would lack the enrichment from sentiment analysis and other services.

- Like Logic Apps, Stream Analytics can call Cognitive Services directly to perform sentiment analysis, moderation and so on. However, Stream Analytics can also perform aggregations over multiple criteria and time windows and push the results into SQL DB. It can also stream a raw feed (with or without enrichment) into Blob Storage, and stream data directly into Power BI to provide real-time rather than near real-time reporting.

- In-memory columnar storage can be utilised in SQL DB, with triggers calculating additional measures. This reduces query complexity and the workload on Power BI, significantly decreasing report refresh times, which is critical for near real-time reporting. It also keeps KPI business rules consistent across multiple reports, because the rules are stored in the database rather than in each report.

- Passing the data into Blob Storage enables it to be picked up by other Azure data services, or by on-premises analytical systems, for additional “cold path” processing. However, using Blob Storage is the equivalent of dumping data into files on an on-premises file share; there are more sophisticated, functionally richer services, such as Azure Data Lake or Azure Cosmos DB, that would be better choices. Blob Storage does, however, make an excellent transitional staging area before the data is batch-moved into Azure Data Lake. Just don't try to use Blob Storage as a “Data Lake”, or you will most likely end up with a “Data Swamp” or a “Digital Data Landfill”. Once in the Data Lake, the data can be further analysed by Azure Data Lake Analytics, Machine Learning Studio/Workbench, HDInsight, SQL Data Warehouse, Databricks and Azure Search, depending on the use case. This is illustrated below:

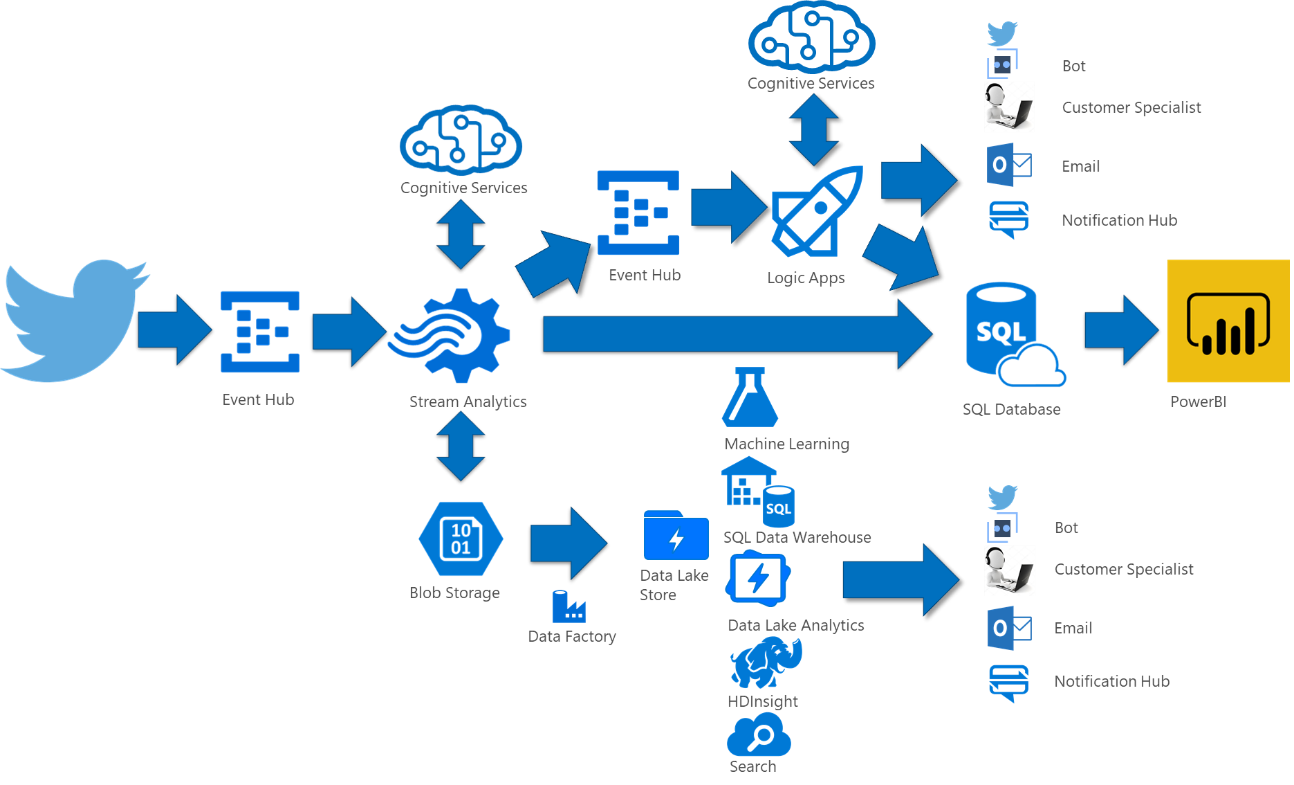
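The time-window aggregation that Stream Analytics performs can be pictured as a tumbling window: fixed-size, non-overlapping intervals that each produce one aggregate row. In the Stream Analytics query language this is expressed with `GROUP BY TumblingWindow(minute, 5)`; the Python below only models the idea over a finite batch. It assumes events arrive as `(timestamp, sentiment)` pairs, that windows are aligned to the Unix epoch and half-open (`[start, end)`), and that each output row is stamped with its window end time; the Stream Analytics documentation should be checked for its exact boundary semantics.

```python
from collections import defaultdict
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)  # windows are aligned to this instant (assumption)


def tumbling_window_avg(events, window: timedelta):
    """Aggregate (timestamp, sentiment) events into fixed, non-overlapping windows.

    Each window is half-open, [start, start + window). Returns a list of
    (window_end, count, avg_sentiment) tuples ordered by window end.
    Windows that receive no events produce no row.
    """
    buckets = defaultdict(list)
    for ts, sentiment in events:
        # Integer index of the window containing ts (timedelta // timedelta -> int).
        idx = (ts - EPOCH) // window
        buckets[idx].append(sentiment)

    results = []
    for idx in sorted(buckets):
        values = buckets[idx]
        window_end = EPOCH + (idx + 1) * window
        results.append((window_end, len(values), sum(values) / len(values)))
    return results
```

A streaming engine emits each window's row as soon as the window closes instead of waiting for the whole batch, but the grouping rule is the same: every event lands in exactly one window.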

Additional workflow processing, for scenarios such as responding to customer complaints and queries, identifying and pursuing leads, modifying marketing campaigns and enhancing event experiences, can be handled by either:

- Hot path processing, using Logic Apps to process a real-time stream from Stream Analytics. This allows the stream to be pre-processed before it reaches Logic Apps, minimising the workload on Logic Apps. Logic Apps can make additional calls to Cognitive Services and other services, provide conditional workflow management, and pass the message on for appropriate downstream processing by, for example, a call handler, a notification hub, email, a tweet or an enhanced bot interaction. A second Event Hub should be used between Stream Analytics and Logic Apps to buffer between the two and allow Logic Apps to consume messages at its own cadence.

And/Or

- Cold path processing, using, for example, Azure Data Lake Analytics to analyse the stored data. Using parameters or machine learning, data can be pulled out of the cold data store and pushed onto a message queue for appropriate downstream processing.

These options are illustrated below:

The choice between the two methods depends on the use case: how quickly a response is needed, and over what time window the tweets are analysed. For instance, altering a marketing campaign based on tweets received over a week is an appropriate cold path use case, whereas responding to a customer complaint or query is an appropriate hot path use case.
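The buffering role of Event Hub, including the second Event Hub placed between Stream Analytics and Logic Apps in the hot path option, comes from its log-based design: events are appended to a partition, and each consumer group tracks its own read position. The toy model below illustrates that with a single in-memory partition. `PartitionLog` and `drain_at_own_cadence` are hypothetical names, not the Azure Event Hubs SDK, and retention, multiple partitions and concurrency are deliberately ignored.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class PartitionLog:
    """Toy model of a single Event Hub partition (hypothetical, not the Azure SDK).

    Events are only ever appended; each consumer group keeps its own checkpoint,
    i.e. the offset of the next event it has not yet processed.
    """
    events: list = field(default_factory=list)
    checkpoints: dict = field(default_factory=dict)

    def append(self, event: Any) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset assigned to the new event

    def read_batch(self, group: str, max_count: int) -> tuple[int, list]:
        start = self.checkpoints.get(group, 0)
        return start, self.events[start:start + max_count]

    def checkpoint(self, group: str, next_offset: int) -> None:
        self.checkpoints[group] = next_offset


def drain_at_own_cadence(log: PartitionLog, group: str, max_count: int,
                         handle: Callable[[Any], None]) -> int:
    """One polling cycle: process up to max_count events, then checkpoint."""
    start, batch = log.read_batch(group, max_count)
    for event in batch:
        handle(event)  # if this raises, the checkpoint below is not moved
    log.checkpoint(group, start + len(batch))
    return len(batch)
```

Because each consumer group keeps its own checkpoint, a slow consumer such as Logic Apps can fall behind and catch up later without slowing the producer or any other reader, such as an archiver writing to Blob Storage.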