Pantry Log: Microsoft Cognitive Services, IoT and Mobile App for Managing Your Fridge Food Stock

We are Ami Zou (CS & Math), Silvia Sapora (CS), and Elena Liu (Engineering), three undergraduate students from UCL, Imperial College London, and Cambridge University respectively.

None of us knew each other before participating in Hack Cambridge Ternary, but we were very fortunate to be matched as a team. We soon discovered that our skills and interests were complementary. All three of us wanted to experiment with a hack that combined software and hardware components.

Our combined areas of expertise would allow us to deploy a complete product, supported by customer insights and an understanding of UI design principles. But most importantly, through our choice of project, we wanted to offer a glimpse into technology’s unlimited potential when it is used to address societal challenges.

The Idea

After hearing MLH announce all the hardware available and watching Microsoft give an impressive demo of its Cognitive Services APIs, we were excited to do a hardware hack. Then came the question: what should we build? We spent some time throwing ideas around, but nothing really caught our interest. So we decided to change our mindset and approach the question in a different way:

“What is something we’ve personally struggled with, or wanted to improve, over the past few days?” We quickly realized that many of our problems were related to grocery shopping: forgetting what food we had already bought and buying duplicates; forgetting expiration dates, eating out, and coming back to find that some food had gone off; having limited ingredients at home and not knowing what to cook, and so on.

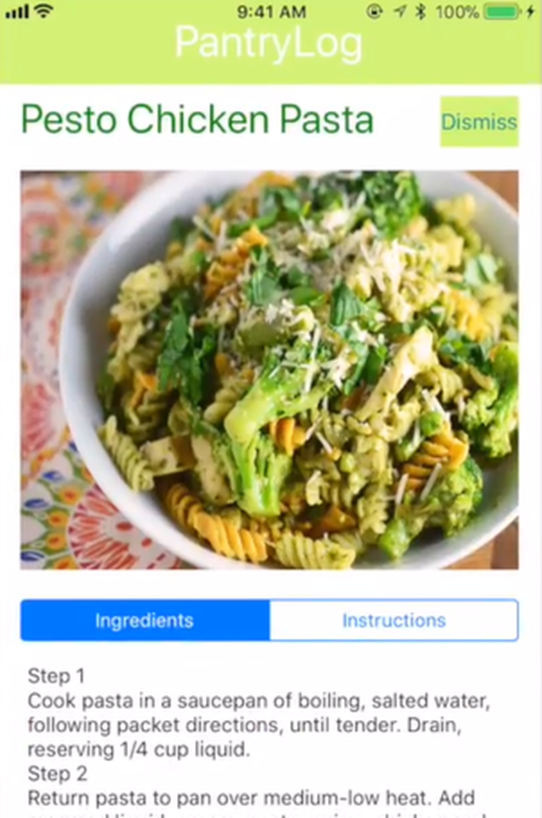

After identifying these issues, we, as problem-solvers, immediately started proposing solutions. We decided to build a device that uses a camera to capture the food items and logs the information on a microcontroller. It then uses Microsoft Azure Cognitive Services, including Computer Vision, to recognize the type of each food item and extract the expiration dates from the pictures. Our mobile app displays all the food items and sends out notifications when an item is close to expiring. It also gives users recipe recommendations based on the food they already have, so they don’t have to go out and buy extra ingredients.
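The two app features described above (expiry notifications and recipe suggestions) come down to simple logic over the logged items. The sketch below is a minimal, hypothetical Python illustration, not the app's actual code (the real app was written for iOS): `FoodItem`, `expiring_soon`, `rank_recipes` and the two-day default window are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class FoodItem:
    # Hypothetical record for one logged item; field names are illustrative.
    name: str
    expires_on: date


def expiring_soon(items, today, within_days=2):
    """Return items expiring between today and today + within_days (inclusive).

    Items that have already expired are excluded here; a real app would
    probably flag them separately.
    """
    cutoff = today + timedelta(days=within_days)
    return [item for item in items if today <= item.expires_on <= cutoff]


def rank_recipes(recipes, pantry):
    """Order recipe names by the fraction of their ingredients already in the pantry.

    recipes maps a recipe name to a set of ingredient names; pantry is a set of
    ingredient names. Ties are broken alphabetically so the order is deterministic.
    """
    def coverage(name):
        needed = recipes[name]
        return len(needed & pantry) / len(needed) if needed else 0.0

    return sorted(recipes, key=lambda name: (-coverage(name), name))


if __name__ == "__main__":
    today = date(2024, 1, 10)
    fridge = [FoodItem("milk", date(2024, 1, 11)), FoodItem("rice", date(2024, 6, 1))]
    print([item.name for item in expiring_soon(fridge, today)])  # ['milk']
    recipes = {"omelette": {"eggs", "milk"}, "pasta al pomodoro": {"pasta", "tomatoes", "basil"}}
    print(rank_recipes(recipes, {"eggs", "milk", "tomatoes"}))  # ['omelette', 'pasta al pomodoro']
```

Ranking by ingredient coverage favours recipes the user can cook right away, which matches the goal of not having to buy extra ingredients.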

Implementation

The ultimate aim of our project was to give people an easy, seamless way to keep track of their food. We didn’t want them to waste time recording each item by hand on their phones or photographing everything they buy. We knew from personal experience that people just don’t do that, especially when they come home after grocery shopping and just want to sit on the couch and relax. We wanted the whole process to be very simple, with a camera automatically recognizing items and their expiration dates and adding all the information to our app.

We chose to build our device using a Qualcomm Dragon Board paired with a camera. The Dragon Board gave us a lot of freedom, as it supported Wi-Fi and had plenty of sensors available if we needed them.

Our plan was to split the work into three parts:

- setting up the Qualcomm Dragon Board and getting the images from the camera

- setting up the Microsoft Cognitive Services API and sending the results over to the app

- the iOS app

Setting up the Qualcomm board involved downloading and installing lots of packages (quite a hard task given how slow the internet was during the hackathon), but thanks to plenty of patience and Python scripts we eventually got everything up and running. We then went on to implement the client side for interacting with the Microsoft Cognitive Services API, which we needed to recognize the food in the pictures we were taking. Unfortunately, we soon realized that the general Computer Vision API was not specific enough for our purpose: a tin of tomatoes was classified as “can”, and a loaf of bread as “food”. This was definitely not what we were looking for, so we decided to try Microsoft’s Custom Vision API. It was surprisingly easy to train and test, and it was an immediate success: after just 10 pictures of each object, it recognized the different items with high precision and reliability. We improved these results further by mounting our camera on top of a box, so we could photograph the food placed inside it. The uniform background helped a lot, and we were very happy with the results.
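Once Custom Vision returns its predictions, the client still has to pick a single label. Here is a minimal sketch of that step, assuming the classification response is JSON with a `predictions` list whose entries carry `tagName` and `probability` (check the exact schema for the API version you call). It keeps the most probable tag and returns `None` below a confidence threshold. The function name and the 0.5 threshold are hypothetical, and the HTTP request itself is omitted.

```python
import json


def top_prediction(response_json, min_probability=0.5):
    """Return the most likely tag name from a classification response, or None.

    Assumes a body like {"predictions": [{"tagName": ..., "probability": ...}, ...]}.
    Returns None when there are no predictions or the best one is below
    min_probability (a hypothetical cut-off for "not confident enough").
    """
    predictions = json.loads(response_json).get("predictions", [])
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p["probability"])
    return best["tagName"] if best["probability"] >= min_probability else None


if __name__ == "__main__":
    sample = json.dumps({"predictions": [
        {"tagName": "bread", "probability": 0.91},
        {"tagName": "tomatoes", "probability": 0.07},
    ]})
    print(top_prediction(sample))  # bread
```

Returning `None` for low-confidence results lets the device retake the picture instead of logging a wrong item.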

Now we just needed a way to send the pictures from the Qualcomm Dragon Board to the API, parse the results and send them over to the app. We decided to write the script in Python, since that was what we were using to take the pictures and we thought it would be easier to write everything in the same language. After some time spent reading the API’s documentation, much more time spent trying to understand the not-so-clear error messages the API was returning, and a bit of help from the Microsoft team, we got our whole pipeline working: we were taking pictures, converting them to base64, sending them to the API and parsing the JSON response. We achieved this by setting up a simple API using Flask and Azure Web App. None of us had any experience with Flask, but it turned out to be quite easy to learn and a perfect fit for what we needed. Once the server was implemented and the calls were defined, we deployed it to Web App. This took some time because of dependencies that Web App did not support and some confusion about which version of Python it should use to run our code (the default was Python 3, while we were using Python 2). Despite this, we had our API up and running by 6am, with 6 more hours to go before the end of the hackathon. At this point, a single HTTP request with a few parameters was enough to get back all the information our app needed, in JSON format.
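The device-to-server leg of this pipeline (base64-encode a picture, decode it on the server, classify it, reply in JSON) can be sketched with the standard library alone. This is a hypothetical illustration written for Python 3, even though the team used Python 2. `encode_image`, `handle_request`, the `image` field and the injected `classify` callable are all assumptions; in the real project this logic would sit behind a Flask route and call Cognitive Services.

```python
import base64
import json


def encode_image(image_bytes):
    """Device side: turn raw image bytes into a base64 string that fits in a JSON body."""
    return base64.b64encode(image_bytes).decode("ascii")


def handle_request(body, classify):
    """Server side: decode the image from a JSON request body and return a JSON reply.

    body is the raw request text, e.g. '{"image": "<base64>"}' (the "image"
    field name is hypothetical). classify is any callable that takes image
    bytes and returns a label; this is where a Cognitive Services call would go.
    """
    try:
        payload = json.loads(body)
        # validate=True rejects characters outside the base64 alphabet.
        image_bytes = base64.b64decode(payload["image"], validate=True)
    except (ValueError, KeyError, TypeError):
        # json.JSONDecodeError and binascii.Error are both ValueError subclasses.
        return json.dumps({"error": "expected JSON with a base64 'image' field"})
    return json.dumps({"label": classify(image_bytes), "size": len(image_bytes)})


if __name__ == "__main__":
    request_body = json.dumps({"image": encode_image(b"\xff\xd8fake-jpeg")})
    print(handle_request(request_body, lambda data: "bread"))
```

Keeping the handler a plain function with the classifier passed in makes it testable without network access; a Flask view would then only read the request body, call it and return the result.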

The next step was adding the ability to recognize expiration dates. To do this, we used Microsoft’s Optical Character Recognition (OCR) API. Before implementing it in code, we tested it on some pictures we had taken, and immediately realized it was unable to detect and correctly return the expiration date in most cases. After a bit of reading online, we tried changing the language setting from “unknown” to “English”; this helped a lot, as the main problem seemed to be recognizing numbers, but it was still not accurate enough in most cases. We were also quite confused because the OCR seemed more reliable on expiration dates that we thought were harder to read, and less reliable on bigger, simpler fonts. After talking with the Microsoft team, we tried modifying the images slightly, turning them black and white and sharpening them. This also helped a lot, but unfortunately we did not have time to implement it in the final version of our project.
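The preprocessing suggested by the Microsoft team (black-and-white conversion plus sharpening) did not make it into the final version. As a hypothetical sketch of what it involves, the pure-Python code below works on an image given as rows of RGB tuples: it converts to grayscale, applies a standard 3x3 sharpening kernel, then thresholds to pure black and white. The function names and the threshold of 128 are assumptions; in practice an imaging library such as Pillow would do this much faster.

```python
def to_grayscale(rgb_rows):
    """Convert rows of (r, g, b) tuples to rows of 0-255 luma values (ITU-R BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_rows]


def sharpen(gray_rows):
    """Apply the kernel [[0,-1,0],[-1,5,-1],[0,-1,0]]; border pixels are copied unchanged."""
    h, w = len(gray_rows), len(gray_rows[0])
    out = [row[:] for row in gray_rows]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            value = (5 * gray_rows[y][x]
                     - gray_rows[y - 1][x] - gray_rows[y + 1][x]
                     - gray_rows[y][x - 1] - gray_rows[y][x + 1])
            out[y][x] = max(0, min(255, value))  # clamp to the valid pixel range
    return out


def binarize(gray_rows, threshold=128):
    """Map each pixel to pure black (0) or pure white (255)."""
    return [[255 if v >= threshold else 0 for v in row] for row in gray_rows]


def preprocess_for_ocr(rgb_rows, threshold=128):
    """Grayscale, then sharpen, then threshold (sharpening a binary image would be pointless)."""
    return binarize(sharpen(to_grayscale(rgb_rows)), threshold)
```

Sharpening before thresholding exaggerates the contrast at character edges, so thin digits are less likely to merge into the background once every pixel is forced to black or white.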

In the end, we implemented some “tricks” to compensate for the unreliability of the OCR. These included matching each month to the closest existing one (“DOC” becomes “DEC”) using the shortest word distance, and doing something very similar for days (“80” becomes “30”) based on the digits the OCR often confused with one another (for example, “8” with “3” or “6”).
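The two OCR “tricks” can be sketched as follows. This assumes that “shortest word distance” means the Levenshtein edit distance, and the digit-confusion table only encodes the examples given above (“8” with “3” or “6”); the table and the function names are hypothetical. `fix_month` snaps a token to the nearest month abbreviation, and `fix_day` swaps at most one confusable digit until the day falls between 1 and 31.

```python
MONTHS = ["JAN", "FEB", "MAR", "APR", "MAY", "JUN",
          "JUL", "AUG", "SEP", "OCT", "NOV", "DEC"]

# Hypothetical confusion table built from the examples in the text;
# a real one would be extended from observed OCR errors.
CONFUSABLE = {"8": "36", "3": "8", "6": "8"}


def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def fix_month(token):
    """Snap an OCR'd month abbreviation to the nearest real one (ties go to the earlier month)."""
    return min(MONTHS, key=lambda m: edit_distance(token.upper(), m))


def fix_day(token):
    """Return a valid day (1-31), swapping one confusable digit if needed; None if nothing works."""
    if token.isdecimal() and 1 <= int(token) <= 31:
        return int(token)
    for i, ch in enumerate(token):
        for replacement in CONFUSABLE.get(ch, ""):
            candidate = token[:i] + replacement + token[i + 1:]
            if candidate.isdecimal() and 1 <= int(candidate) <= 31:
                return int(candidate)
    return None


if __name__ == "__main__":
    print(fix_month("DOC"), fix_day("80"))  # DEC 30
```

When several substitutions would produce a valid day, the first one found wins, so a more careful version might cross-check the result against the month or the purchase date.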

Another problem was finding the expiration date among lots of other text. This often happened when the expiration date was printed close to nutritional values or ingredients. Our “hack” to solve this was to look for phrases like “best before” or “consume by”, parse the words close to them looking for something resembling a date, and ignore everything else. We ended up with a surprisingly accurate system given the limited time we had available.
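A minimal sketch of the keyword-window idea: find “best before” or “consume by” case-insensitively, look only at the next few words, and return the first date-like string there. The regular expressions, the six-word window and the function name are assumptions for illustration; the result could then be passed through month and day fixes like those described above.

```python
import re

# Expiry phrases from the write-up; \s+ tolerates line breaks or extra spaces from the OCR.
KEYWORDS = re.compile(r"\b(?:best\s+before|consume\s+by)\b", re.IGNORECASE)

# Deliberately loose "date-like" pattern (an assumption): either a day followed by a
# 3-character month token such as "30 DEC" or "30 D0C", or dd/mm with an optional year.
DATE_LIKE = re.compile(
    r"\b(\d{1,2}\s+[A-Z0-9]{3})\b|\b(\d{1,2}[./-]\d{1,2}(?:[./-]\d{2,4})?)\b",
    re.IGNORECASE,
)


def find_expiry_text(ocr_text, window=6):
    """Return the first date-like string within `window` words after an expiry keyword.

    Text that is not near a keyword (nutrition tables, ingredient lists) is never
    searched. Returns None if no keyword is followed by something date-like.
    """
    for keyword in KEYWORDS.finditer(ocr_text):
        following = ocr_text[keyword.end():].split()[:window]
        match = DATE_LIKE.search(" ".join(following))
        if match:
            return match.group(0)
    return None


if __name__ == "__main__":
    label = "Energy 250 kcal Fat 12 g BEST BEFORE: 30 DEC 2017 Store in a cool place"
    print(find_expiry_text(label))  # 30 DEC
```

Restricting the search to a short window after the keyword is what keeps numbers from the nutrition table, such as “250 kcal”, from being mistaken for a date.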

Demo

Next Steps

In the future, we plan on adding complementary features such as:

- Goal setting: for example, “25% of the items you purchased this month expired before consumption and ended up being wasted. Do you think you could reduce this proportion to 10% next month?”

- Nutrition advice based on items purchased, diet plans, and calorie count.

We also believe the technology could evolve into a network of sensors deployed in the kitchen, which would make it even easier to automatically track items being added or removed without requiring any additional action from the user.

We are continuing to develop the technology and polish the designs. We will apply for Microsoft’s Imagine Cup and keep moving forward.

Final Thoughts

The Microsoft team was amazingly helpful and very responsive to the feedback we gave on the documentation (which was a bit unclear, not extensive enough, or somewhat inconsistent in some places). This was really appreciated: our feedback went into updating https://docs.microsoft.com, which will hopefully save future developers a lot of time.