Neural networks and deep learning with Microsoft Azure GPU

Guest blog by Yannis Assael from Oxford University

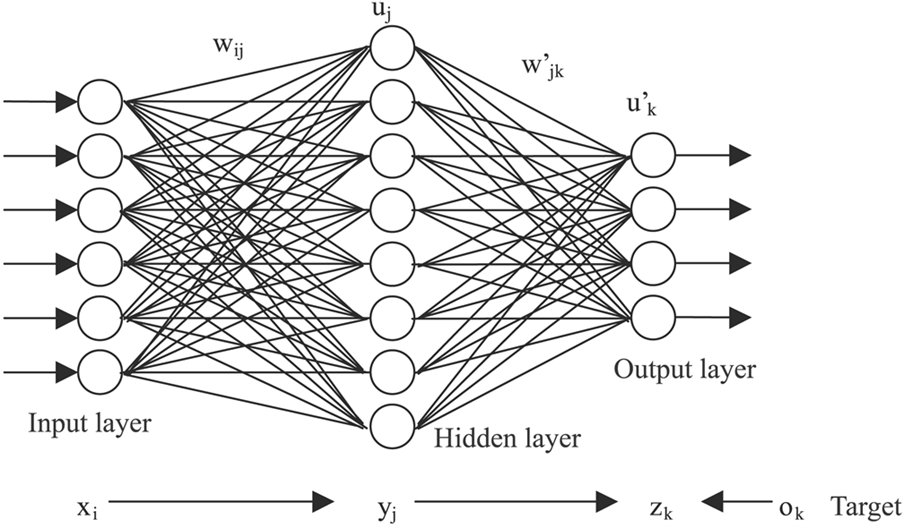

The rise of neural networks and deep learning is closely tied to the increase in computational power brought by general-purpose GPUs. Training a complex statistical model means solving a demanding optimisation problem, so the computational resources available are crucial to the quality of the final solution. On a conventional CPU, one could spend weeks waiting for even a simple neural network to train. The problem is amplified when one runs multiple experiments to select the optimal parameters of a model.

NeuralNetwork, www.extremetech.com

Access to computational resources such as a high-end GPU is important when one begins to experiment with deep learning models, because it allows practical experience to be gained quickly. This was one of the main goals of our Deep Natural Language Processing (NLP) course at the University of Oxford. Rapid feedback lets one experiment with different practical applications and configurations. Combining theoretical background with practical experience is fundamental to building the expertise needed to be creative and solve new problems in the future.

The course had an applied focus on recent advances in analysing and generating speech and text using recurrent neural networks. It was organised by Phil Blunsom and delivered in partnership with the DeepMind Natural Language Research Group. It introduced the mathematical definitions of the relevant machine learning models and derived their associated optimisation algorithms. A range of applications of neural networks in NLP was covered, including analysing latent dimensions in text, transcribing speech to text, translating between languages, and answering questions. These topics were organised into three high-level themes forming a progression: first, the use of neural networks for sequential language modelling; second, their use as conditional language models for transduction tasks; and finally, approaches that combine these techniques with other mechanisms for advanced applications. Throughout the course, the practical implementation of such models on CPU and GPU hardware was also discussed.

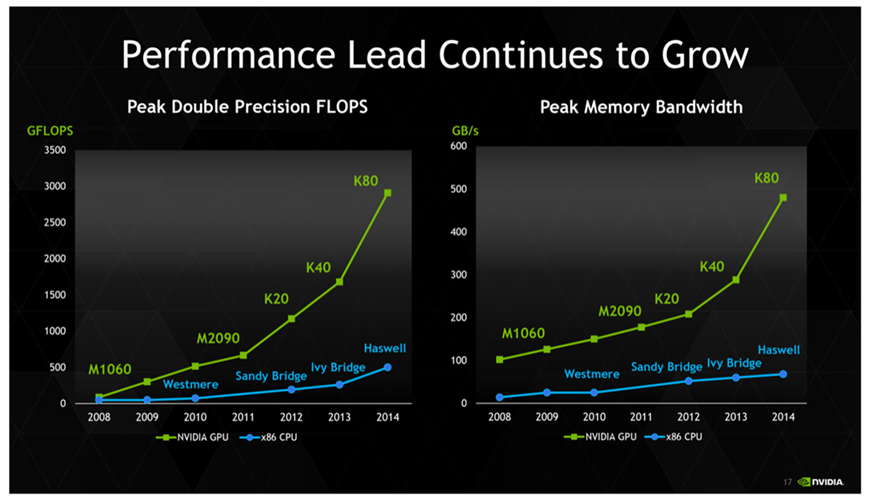

NVIDIA, https://www.nvidia.com/object/tesla-k80.html

The lectures were accompanied by four practical exercises. The first three practicals of the Deep NLP course covered a variety of introductory topics in deep learning for NLP, while the last one was an open-ended project that built on the previous ones and on the students' interests (e.g. machine translation, summary generation, conditional text generation, speaking rate modelling).

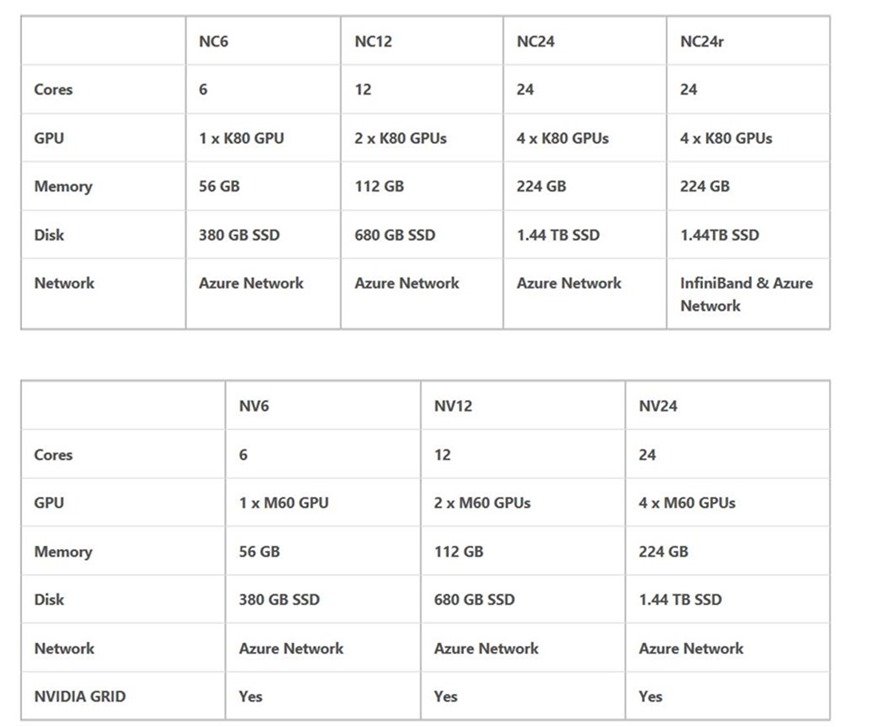

With the GPU computational resources that Microsoft Azure provided to the University of Oxford for this course, we were able to give the students the full "taste" of training state-of-the-art deep learning models in the final practical by spawning an Azure NC6 GPU instance for each student.

For further details, our syllabus is open-source and available online: https://github.com/oxford-cs-deepnlp-2017