Mixed Reality Immersive – A Beginner’s Guide to Building for MR

Guest post by Simon Jackson MVP Windows Development

With the recent release of the Microsoft Mixed Reality headsets, controllers and the awesome Fall Creators Update for Windows 10, there has never been a better time to immerse yourself in the Mixed Reality space.

We say Mixed Reality because, unlike traditional VR products and solutions, these headsets need no external devices or sensors to work: tracking is built directly into the Mixed Reality headset (inside-out tracking). To start, you literally just plug it in and go.

As with the Vive and Oculus, we can extend the experience with handheld controllers or gamepads, but these should be seen as extensions to a Mixed Reality experience rather than requirements.

Through this short course, we will walk through the basic tools and APIs you’ll need to start building your Mixed Reality experiences, plus some common tips and tricks to make them the best they can be.

You will learn:

· How to get set up for Mixed Reality

· Enable the Mixed Reality headset in your Unity project

· Build your first Mixed Reality scene

· Look at the various assets to help you build your Mixed Reality Experience

· Learn how to control the camera and placement in the MR scene

· Interact with objects and Unity UI

Prerequisites

· A Windows 10 PC with the Fall Creators Update

· Unity 3D 2017.2+ (Beta). You can install the latest release, but the Beta version is recommended by Unity Support; see https://forum.unity.com/threads/custom-build-2017-2-rc-mrtp-windows-mixed-reality-technical-preview.498253/

· Visual Studio 2017 version 15.3+

· Windows Insider Preview SDK

· An Xbox controller that works with your PC.

· (Optional) Mixed Reality Controller(s)

Project Files

The project for the lab is located on GitHub here:

https://github.com/ddreaper/MixedReality250/tree/Dev_Unity_2017.2.0

Or you can download the release for the workshop here:

https://github.com/DDReaper/MixedReality250/releases/tag/Dev_Unity_2017.2.0

*Note: this uses the “Dev_Unity_2017.2.0” branch, which is an updated version of the HoloLens workshop for immersive headsets. Make sure you grab the right version if you are cloning it from GitHub.

Table of Contents

Chapter 1 – A whole new world

Chapter 2 – Adding some content

Chapter 3 – Browsing the toolkits available

1: The Mixed Reality Toolkit

2: The Asset Store

3: The Unity VR Sample asset

4: The VRToolKit

Chapter 4 – Adding movement and Teleporting

Chapter 5 – Interaction

Chapter 6 – Wrapping up

Chapter 1 – A whole new world

Out of the box, Unity 2017.2 natively supports the Mixed Reality platform. Without any additional assets or tools, we can enable and run immersive Mixed Reality experiences.

1. Start Unity and create a new 3D project (because VR isn’t flat 2D :D)

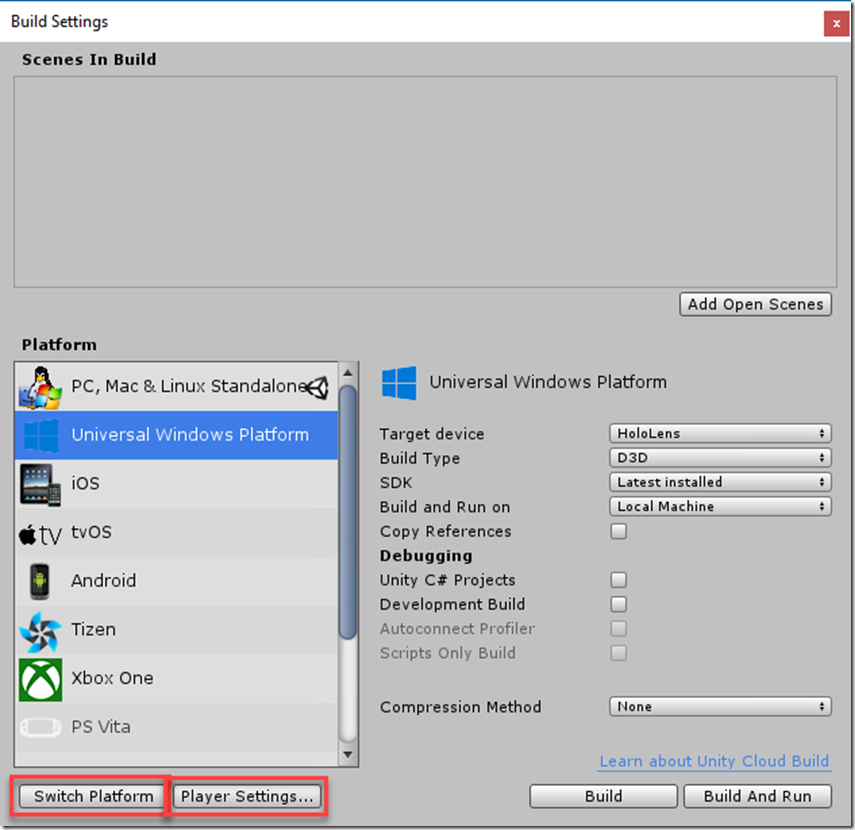

2. Select File -> Build Settings from the Editor menu

3. Select the “Windows Universal” platform and click “Switch Platform”

The Build you are currently targeting can be identified with the small Unity logo next to the platform in the list.

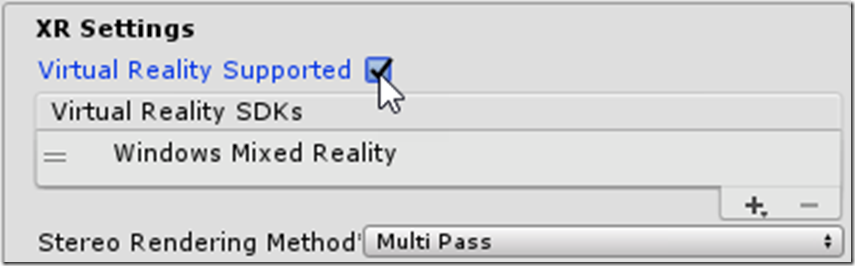

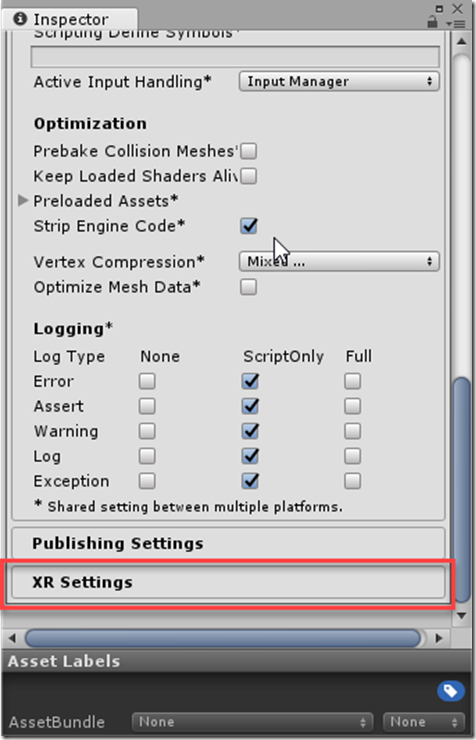

4. Click the “Player Settings” button to bring up the Unity Player Settings in the right-hand Inspector. Scroll to the bottom of the Inspector and click “XR Settings” to expand it.

5. Ensure the “Virtual Reality Supported” option is checked and that “Windows Mixed Reality” is listed in the options.

*Note: this option is only listed for the Universal Windows Platform. If you see Vive or Oculus in the list, then you have not selected the Universal Windows Platform!

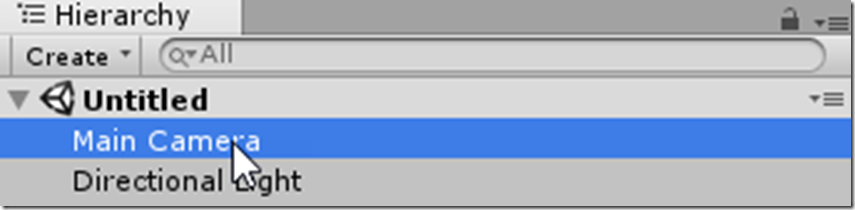

6. Now that your Unity project is set up, return to the Scene view and select the “Main Camera” in the Hierarchy:

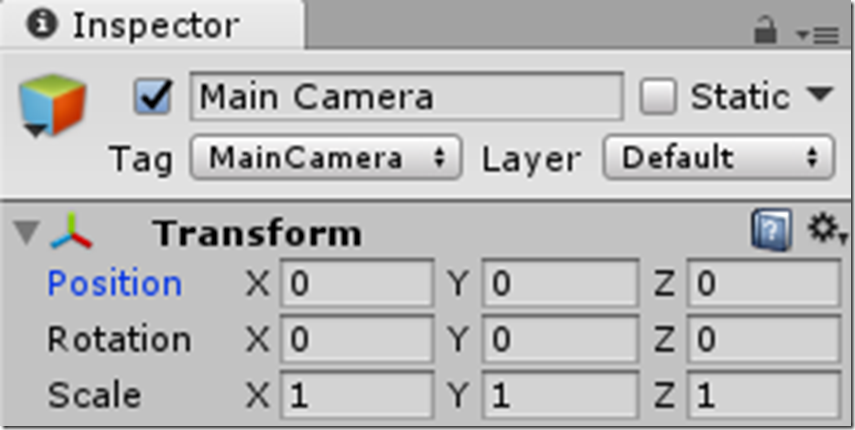

7. For your first scene, let’s place you in the center of the room. The “Main Camera” is your view, so center it in the middle of your scene. Either set the Main Camera’s Transform position to 0 on all axes, or simply click the cog in the Inspector and select “Reset”.

Your Main Camera Transform Position should now look like this:

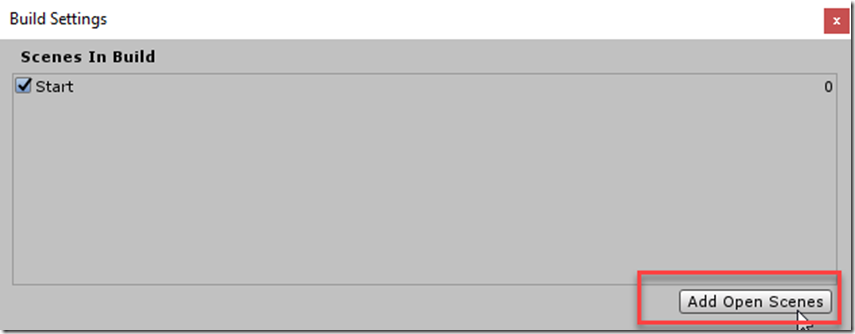

8. Now, save the Scene in your project and add it to the “Build Settings” list using “File -> Build Settings” in the menu and clicking on “Add Open Scenes”
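If you set up new projects often, you can script the manual steps above. The following minimal editor sketch is not part of the workshop project. It assumes the Unity 2017.2 editor APIs `EditorUserBuildSettings.SwitchActiveBuildTarget`, `PlayerSettings.virtualRealitySupported` and `EditorBuildSettings.scenes`. The class name and menu path are hypothetical. The script deliberately leaves the Virtual Reality SDKs list alone, so you should still check that “Windows Mixed Reality” is listed.

```csharp
// Editor-only sketch: save as Assets/Editor/MixedRealityQuickSetup.cs
// (scripts that use UnityEditor must live in a folder named "Editor").
using System.Linq;
using UnityEditor;
using UnityEngine;
using UnityEngine.SceneManagement;

public static class MixedRealityQuickSetup
{
    // Hypothetical menu entry: "Tools > Mixed Reality Quick Setup".
    [MenuItem("Tools/Mixed Reality Quick Setup")]
    private static void Run()
    {
        // Step 3: switch to the Universal Windows Platform (WSA in the scripting API).
        if (EditorUserBuildSettings.activeBuildTarget != BuildTarget.WSAPlayer &&
            !EditorUserBuildSettings.SwitchActiveBuildTarget(BuildTargetGroup.WSA, BuildTarget.WSAPlayer))
        {
            Debug.LogError("Could not switch to the Universal Windows Platform.");
            return;
        }

        // Step 5: tick "Virtual Reality Supported" for the active build target.
        // The Virtual Reality SDKs list is not touched; check it by hand.
        PlayerSettings.virtualRealitySupported = true;

        // Step 8: add the open (saved) scene to "Scenes In Build" if it is not already listed.
        string scenePath = SceneManager.GetActiveScene().path;
        if (string.IsNullOrEmpty(scenePath))
        {
            Debug.LogWarning("Save the scene first, then run this again.");
            return;
        }

        var scenes = EditorBuildSettings.scenes.ToList();
        if (scenes.All(s => s.path != scenePath))
        {
            scenes.Add(new EditorBuildSettingsScene(scenePath, true));
            EditorBuildSettings.scenes = scenes.ToArray();
        }

        Debug.Log("Mixed Reality quick setup complete for " + scenePath);
    }
}
```

Camera placement (step 7) is left out on purpose, because the right start position depends on your scene.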

You are now ready to give your simple setup a test run. With Unity you can do this directly from the editor:

· Start the Mixed Reality Portal (Unity will start it for you but it can time out)

· Connect your headset, or start the Simulator in the MR Portal

· Hit Play in Unity

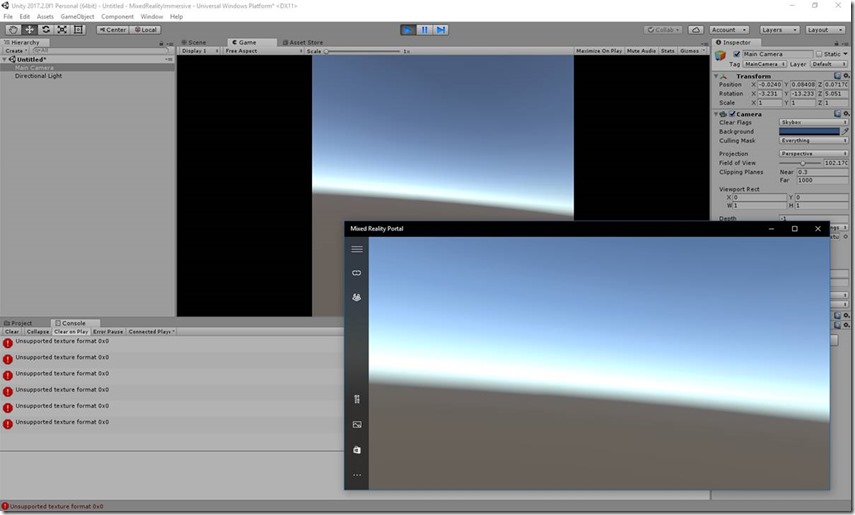

*Note: ignore any “Unsupported Texture Formats” errors in the Unity editor console. They are an editor-only quirk and won’t appear in the final built project.
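If the headset doesn’t seem to respond when you hit Play, a quick runtime check can help narrow things down. This sketch assumes Unity 2017.2, where the VR scripting APIs moved into the `UnityEngine.XR` namespace (`XRSettings`, `XRDevice`). Some of these members were deprecated in later Unity releases. The script name is hypothetical.

```csharp
using UnityEngine;
using UnityEngine.XR;

// Hypothetical diagnostic: attach to any GameObject (e.g. the Main Camera) and press Play.
public class XRStatusLogger : MonoBehaviour
{
    private void Start()
    {
        // Whether XR rendering is active for this session.
        Debug.Log("XR enabled: " + XRSettings.enabled);
        // Name of the XR SDK Unity actually loaded from the Virtual Reality SDKs list.
        Debug.Log("Loaded XR device: " + XRSettings.loadedDeviceName);
        // Whether Unity detects a connected headset (or the simulator).
        Debug.Log("Headset present: " + XRDevice.isPresent);
    }
}
```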

What we learned

In this section, we walked through all the necessary steps to ensure your Unity development environment is up to date and ready to start building a Mixed Reality experience.

Note that once the Mixed Reality Toolkit is installed (in Chapter 4), there are some editor menu commands to help speed things along. These pre-populate most of the setup above to get you there quicker. It’s still important to know what they do, in case you run into issues!

Further Reading

· Holograms 100: Getting started with Unity

· Holograms 101E: Introduction with Emulator

Chapter 2 – Adding some content

Having a blank scene and looking around is all well and good, but let’s add a little content to make the scene a little more interesting.

1. Either open a new scene or use your existing one.

2. Download or clone the MixedReality250 project from the following GitHub repository into your Unity project:

https://github.com/DDReaper/MixedReality250/tree/Dev_Unity_2017.2.0

*Note: you HAVE to use the “Dev_Unity_2017.2.0” branch, as it has been updated for the immersive headset workshop.

3. Reset the “Main Camera” in the inspector (Transform cog -> Reset).

4. Drag the “Skybox” asset into the Scene view from the “AppPrefabs” folder.

(if it doesn’t drag, you are currently looking at the Game view, change tabs!)

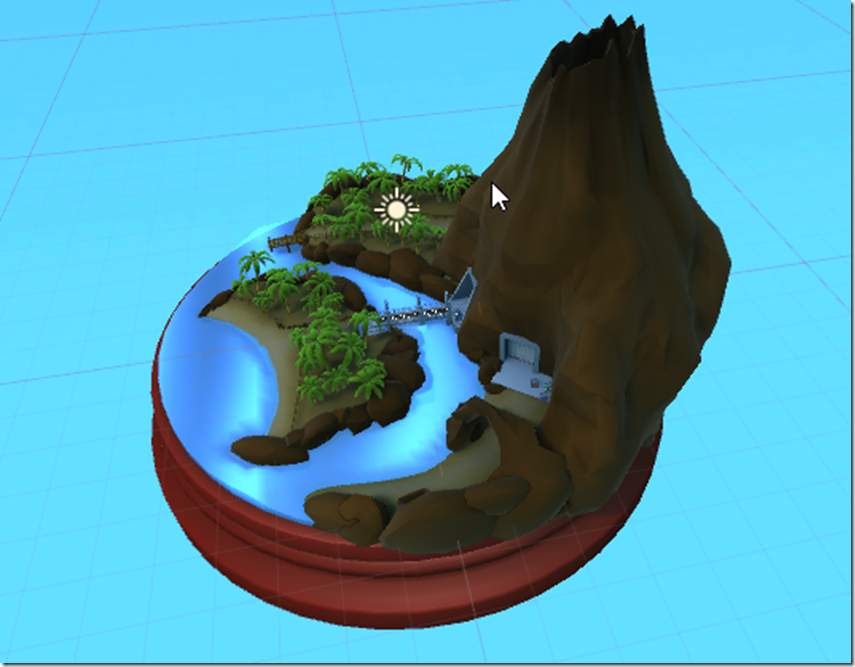

5. Drag the “Island” prefab into the scene, also from the “AppPrefabs” folder.

(best to drop it in the hierarchy to avoid positioning issues)

6. Save your scene and add it to the “Scenes In Build” list in Build Settings.

(refer to Chapter 1, step 8 for details)

7. Start the MR portal (if you haven’t already) and run the project from the editor.

At this point you have a basic graphical environment with things to look at, and now it’s time to properly explore. Here are some things to try to get a feel for how Mixed Reality works:

· Scale the “Island” model up and down and see the effect in the headset.

· Try moving the camera start position and check its effect in the headset.

· Leave the camera alone and try moving the island to set the players start position.

· Place the camera near/inside the mountain and peer in and out (it’s just fun)

This is all well and good, and you start to get a feel for building simple experiences, but there isn’t much to do (unless you’re planning a Mixed Reality animation; yes, those are a thing!).

You may see some errors in the console when running the Island “as is”. Don’t worry about that for now.

So, let’s move on.

What we learned

In this section, we walked through adding some content and experimented with camera and model placement. Unity gives us a lot of power out of the box by abstracting over all the VR manufacturers’ platforms, but it’s worth noting that you can extend it and go further if you wish.

Further Reading

· Holograms 100: Getting started with Unity

· Holograms 101E: Introduction with Emulator

Chapter 3 – Browsing the toolkits available

Now that you have everything you need, it’s time to start adding interactivity to your project. The kinds of things we need to add are:

· The ability to gaze and select

· Move / Teleport around the scene

· Add voice commands (optional)

· Bring controllers into the scene for more fun.

But why go it alone when there is so much stuff out there to help you?

If you do want to go it alone, the “Holograms 100” course works very well and is compatible with both HoloLens and immersive headsets:

https://developer.microsoft.com/en-us/windows/mixed-reality/holograms_100

1: The Mixed Reality Toolkit

Microsoft, being the open-source-friendly company it is, publishes its own toolkit for building Mixed Reality solutions natively. It originally supported only HoloLens, but it has since branched out to include the new immersive Mixed Reality headsets (although you will still see the original HoloToolkit name here and there).

For the purpose of this workshop tutorial, we’ll continue using the MRTK.

2: The Asset Store

There’s no doubt the Unity Asset Store contains a plethora of content, much of it free. However, most of the VR content targets the pre-existing VR platforms such as Oculus and Vive. So when trying any asset, make sure its scripts are generic rather than tied to a specific headset.

3: The Unity VR Sample asset

This works surprisingly well using just the built-in Unity support for Mixed Reality. It provides some very neatly styled VR menus and scenes, as well as some interesting experiments: a shooting range (both seated and 360), a maze puzzle and even a 3D ship-dodging game controlled via gaze.

Worth looking in to for good implementation examples.

4: The VRToolKit

Although it is also listed as a free asset on the Unity Asset Store, it is an open-source project under active development. It also offers the widest range of VR interactions, from bow and arrow to joint-based grabbing and gaze features.

It covers a number of common solutions such as:

· Locomotion within virtual space.

· Interactions like touching, grabbing and using objects

· Interacting with Unity3d UI elements through pointers or touch.

· Body physics within virtual space.

· 2D and 3D controls like buttons, levers, doors, drawers, etc.

· And much more...

Its only downside is that it doesn’t yet have out-of-the-box support for the MR headsets. However, because the toolkit is abstracted across all the other VR headsets, support shouldn’t take long.

What we learned

In this section, we examined some of the major avenues you can take when building your Mixed Reality experience, from going it alone to using assets or the manufacturer’s own APIs.

Unity gives us a lot out of the box, but some games have many different interactions to take into account. In the end, it comes down to what style of game you are building and what is useful to you (pretty much the same as with any game). With VR, however, the interactions are multiplied, especially if you are using VR controllers or speech interaction, both of which are invaluable in a great VR experience. Just remember, there is no physical keyboard in VR!!!

Chapter 4 – Adding movement and Teleporting

Returning to our little island, let’s import the Mixed Reality Toolkit and get the player moving around the environment.

Normally you would need to download the Mixed Reality Toolkit from the GitHub page, but this has been included with the sample project already.

1. Start a new Scene and save it with a new name.

2. Delete the “Main Camera”. We’re going to replace it with one from the MRTK.

3. Drag the “Skybox” and “Island” prefabs into the scene as before.

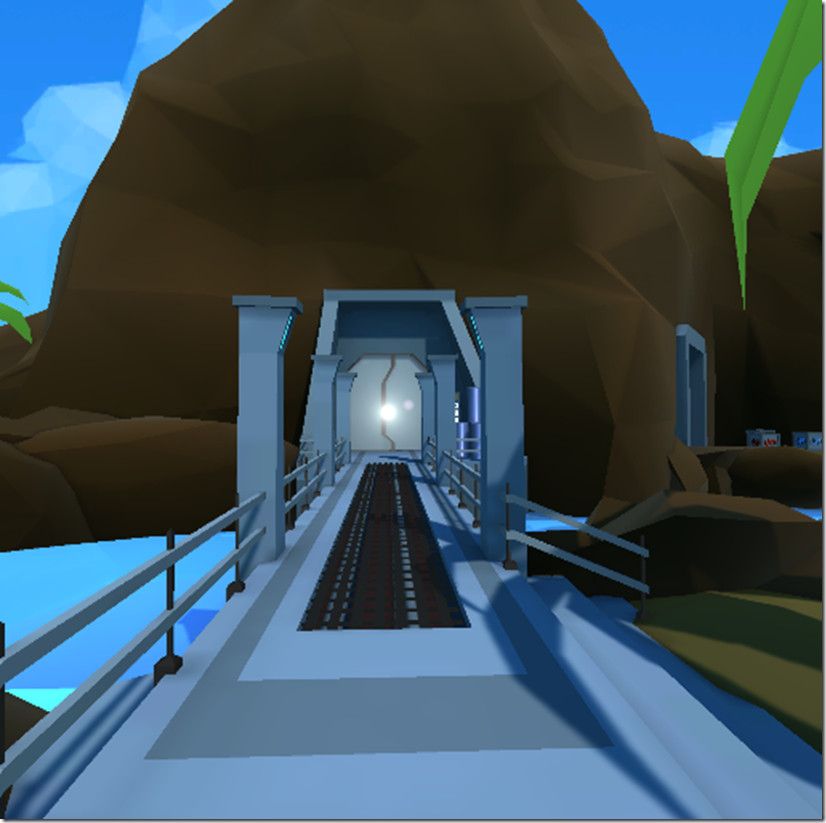

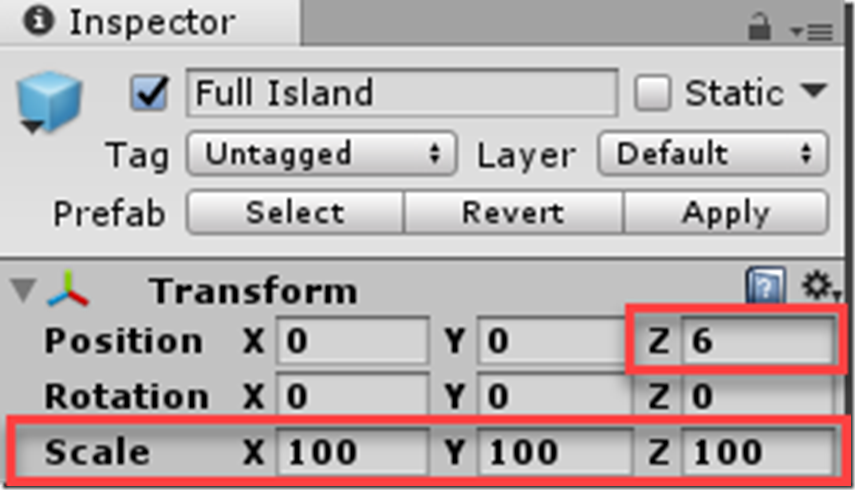

4. As we will be walking around the scene, we need it at a more “human” scale. To achieve this, scale the Island prefab by 100 on all axes, as shown in the images above.

5. Also set the Island’s Z position to 6 (as shown above). This moves the island back in the scene a bit so that the camera start position will be on the beach.

6. Now, navigate to the “HoloToolkit\Input\Prefabs” folder in the Project window and drag the following prefabs into the scene Hierarchy:

· MixedRealityCameraParent – The MRTK VR camera rig (MRCP)

· Managers – Includes the input and control systems

· A Cursor from the Cursor folder, I recommend the Cursor prefab but you can choose.

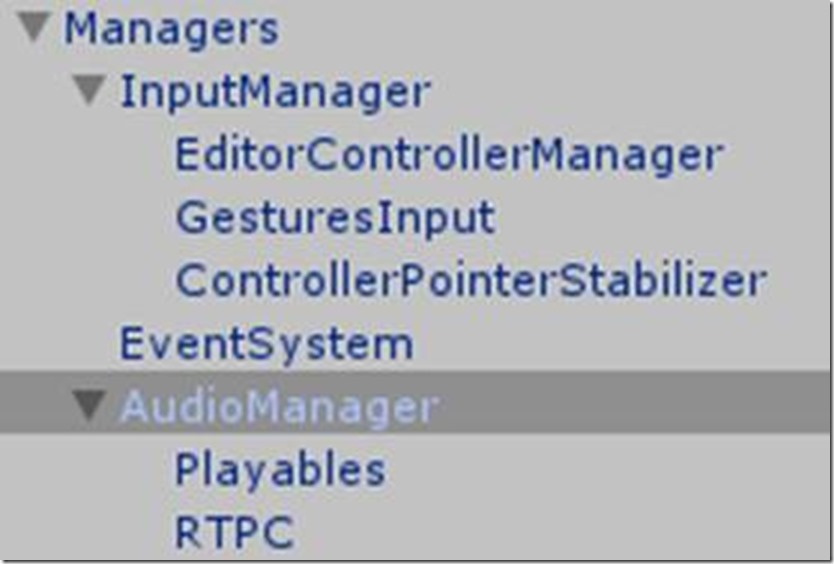

7. Our Island model (courtesy of the MR250 project) already has spatial audio set up, so we also need to include its pre-configured audio manager in the scene. Drag the “AudioManager” from the “Assets\AppPrefabs” folder and place it in the “Managers” group in the Hierarchy, as shown below:

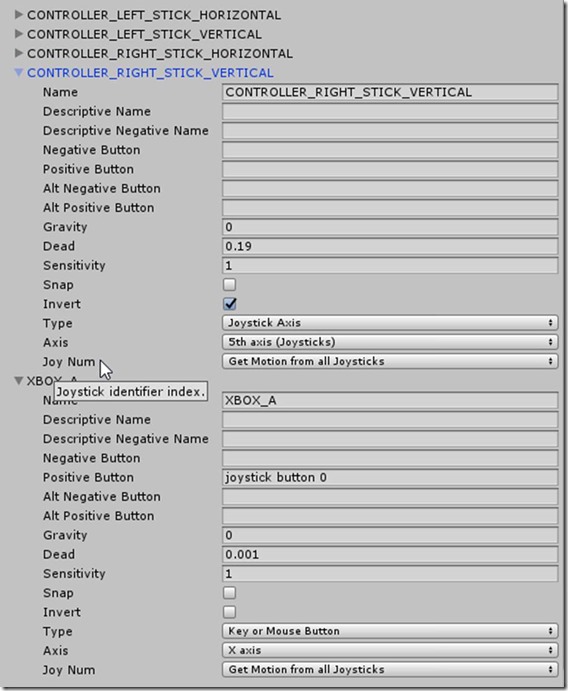

8. Next, we need to add the controller inputs that the Mixed Reality Camera Manager uses for joystick / controller access. Open the project’s Input Manager (Edit -> Project Settings -> Input) and add the following axes (you add an axis by increasing the Axes “Size” count):

· CONTROLLER_LEFT_STICK_HORIZONTAL

Gravity 0, Dead 0.19, Sensitivity 1, Joystick X axis

· CONTROLLER_LEFT_STICK_VERTICAL

Gravity 0, Dead 0.19, Sensitivity 1, Joystick Y axis, Invert True

· CONTROLLER_RIGHT_STICK_HORIZONTAL

Gravity 0, Dead 0.19, Sensitivity 1, Joystick 4th axis

· CONTROLLER_RIGHT_STICK_VERTICAL

Gravity 0, Dead 0.19, Sensitivity 1, Joystick 5th axis, Invert True

· XBOX_A

Positive Button “joystick button 0”, Gravity 0, Dead 0.001, Sensitivity 1

You can also set up the rest of the Xbox inputs for your game if you like, using the patterns above: e.g. the Xbox B, X and Y buttons, triggers, bumpers, etc. *See note below

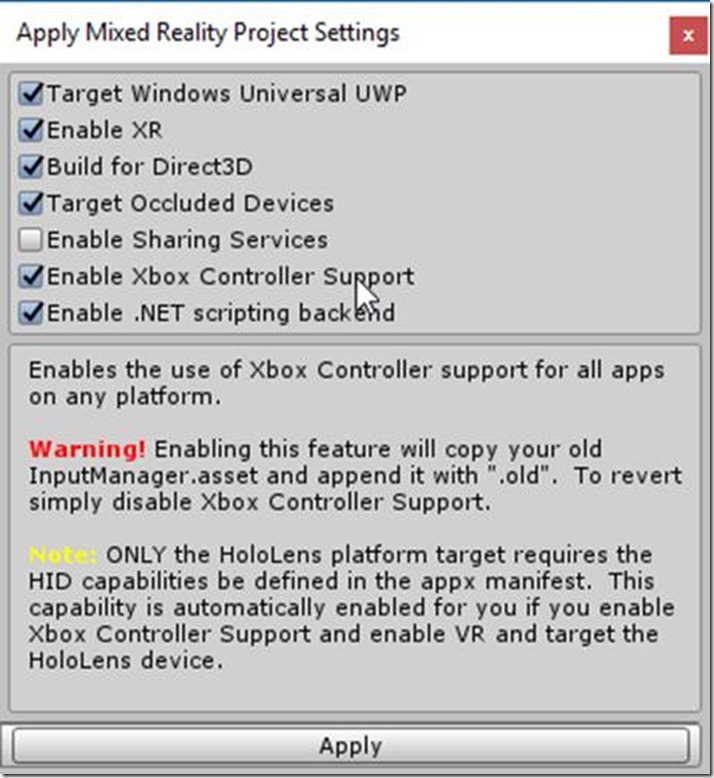

*Note: the Mixed Reality Toolkit editor menu has an option to import all the required controller settings. However, it will overwrite ALL your existing controller setup, so note down any settings you have changed manually before applying it.

To import the settings, select “Mixed Reality Toolkit -> Configure -> Apply Mixed Reality Project Settings” from the editor menu and tick “Enable Xbox Controller Support” in the options.

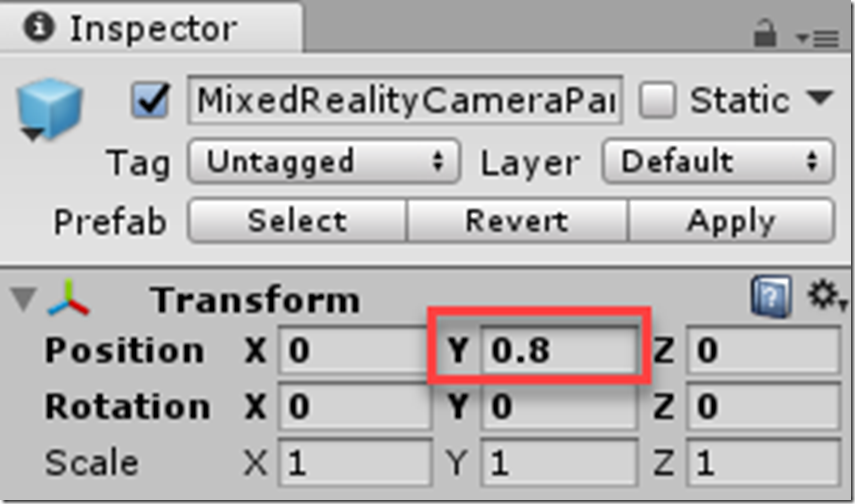

9. To finish off, you need to set the starting position of the MixedRealityCameraParent (MRCP) (or position the world around the camera :D). Ideally, we should keep the camera at 0 on the X and Z axes (although you don’t have to). However, we need to move it up slightly (Y = 0.8) to allow for human height within the scene.

Then once the scene plays, the camera will organize itself to the ground based on that height.

One thing to be aware of: the view you see in Unity’s “Game” view may not be the final view you see in the headset. Once the app is running, the headset’s sensor data will adjust your view position based on the environment data it has recorded.

Always test your view in the headset/simulator.

As we are using the MixedRealityCamera rig, we get Gaze (provided by the cursor and GazeControls) and Teleport (via the Input Managers and rig) by default. You can also update these if you wish.

What you will see is the following:

· You will get a white dot when the gaze is not “touching” anything.

· You will get a small blue circle (angled to the object) when you gaze at something like the ground / tree / door.

· The Xbox Controller or MR Controllers thumbsticks will act the same as in the MR portal (Left stick to step around, right stick to turn).

· A basic teleportation system which will project a teleport cursor on to any suitable surface by holding up on the left thumbstick.

Now the user can move around and explore your environment. Next let’s learn how to interact with it.
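Before moving on, it can be worth confirming that the axes you added in step 8 are wired up correctly. This hypothetical script reads them with Unity’s standard `Input.GetAxis` and `Input.GetButtonDown`. If an axis name is misspelled or missing from the Input Manager, Unity reports an error in the console as soon as `Update` runs, which makes typos easy to spot.

```csharp
using UnityEngine;

// Hypothetical diagnostic: logs the custom controller axes configured in step 8.
public class ControllerInputLogger : MonoBehaviour
{
    private void Update()
    {
        // The 0.19 dead zone keeps resting sticks at zero, so only real movement is logged.
        Vector2 left = new Vector2(
            Input.GetAxis("CONTROLLER_LEFT_STICK_HORIZONTAL"),
            Input.GetAxis("CONTROLLER_LEFT_STICK_VERTICAL"));
        Vector2 right = new Vector2(
            Input.GetAxis("CONTROLLER_RIGHT_STICK_HORIZONTAL"),
            Input.GetAxis("CONTROLLER_RIGHT_STICK_VERTICAL"));

        if (left != Vector2.zero)
            Debug.Log("Left stick: " + left);
        if (right != Vector2.zero)
            Debug.Log("Right stick: " + right);

        // "XBOX_A" was mapped to "joystick button 0" in step 8.
        if (Input.GetButtonDown("XBOX_A"))
            Debug.Log("A button pressed");
    }
}
```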

What we learned

In this section, we started “getting into” our Mixed Reality environment and gave the player the ability to navigate around it. This is great for immersive storytelling or history lessons, but not so great for an actual game (well, unless you count all those shooter games).

A key thing to note is that if you don’t want the player moving of their own accord (a ride or pull-along experience), you can use the “MixedRealityCamera” prefab instead. It’s the same rig but without the teleportation interface.

Further Reading

· Holograms 100: Getting started with Unity

· Holograms 101E: Introduction with Emulator

Chapter 5 – Interaction

Interaction in the Mixed Reality universe is handled through the Unity UI EventSystem and raycasts based on the player’s gaze. As the EventSystem already handles raycasts and scene interactions in both 2D and 3D, it seemed a decent fit.

There are no prefabs or pre-built components for input in the MRTK, simply because everyone’s requirements are so different. This may change as more examples are added in the future, such as the Mixed Reality Design Labs, which demonstrate several different interaction use cases.

Other toolkits and frameworks have taken different approaches to input, some give you pre-built frameworks to “drop in”, others provide scripts readily wired for use. In all cases however, you need to check the implementation works with your gameplay style to avoid adverse reactions in your project.

Interaction through the Unity UI system

Starting with the basics, we can use the Unity UI system to provide quick and easy interaction within a VR environment. However, this only works when the Canvas is in World Space. A Screen Space canvas is drawn fixed to the user’s view, and without a mouse there is no way to “point” at the UI.

You can still use a Screen Space canvas for HUDs and information displays, just not for interaction.

To see this in action, let’s throw something silly into the scene, a BIG “Click Me” button:

1. Add a new Canvas to your scene by right clicking in the hierarchy window and selecting “UI -> Canvas”.

Alternatively, use the “Create -> UI -> Canvas” button in the Hierarchy or “GameObject -> UI -> Canvas” in the editor menu.

Just don’t add UI objects such as Button or Image without a Canvas selected. Otherwise Unity will add the new control to whichever canvas was last selected (or the only canvas) in the scene.

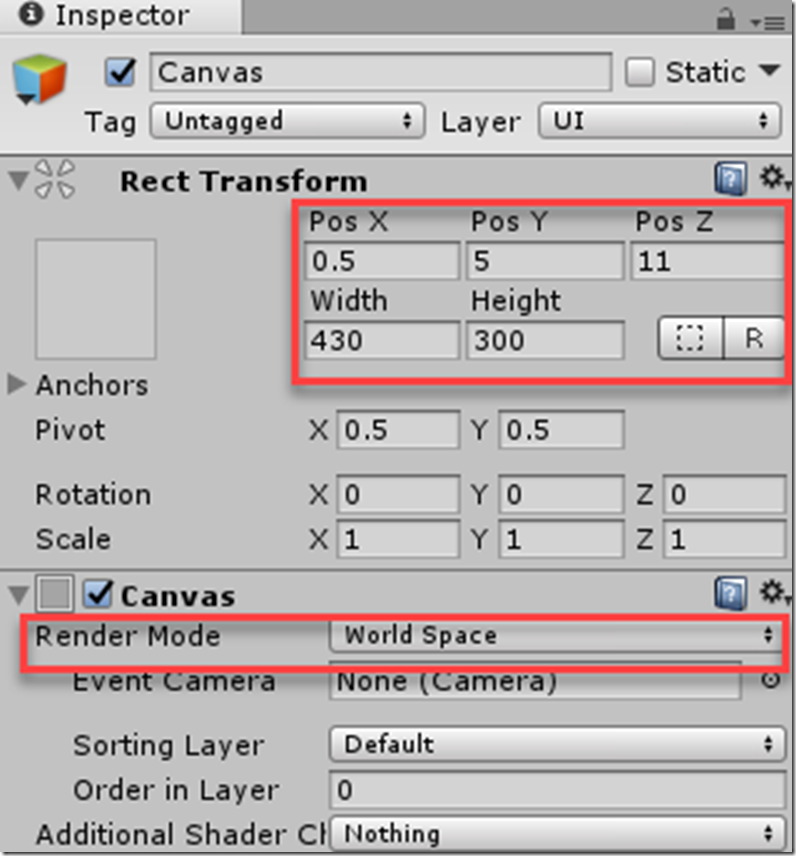

2. Select the canvas and change its Render Mode to “World Space”.

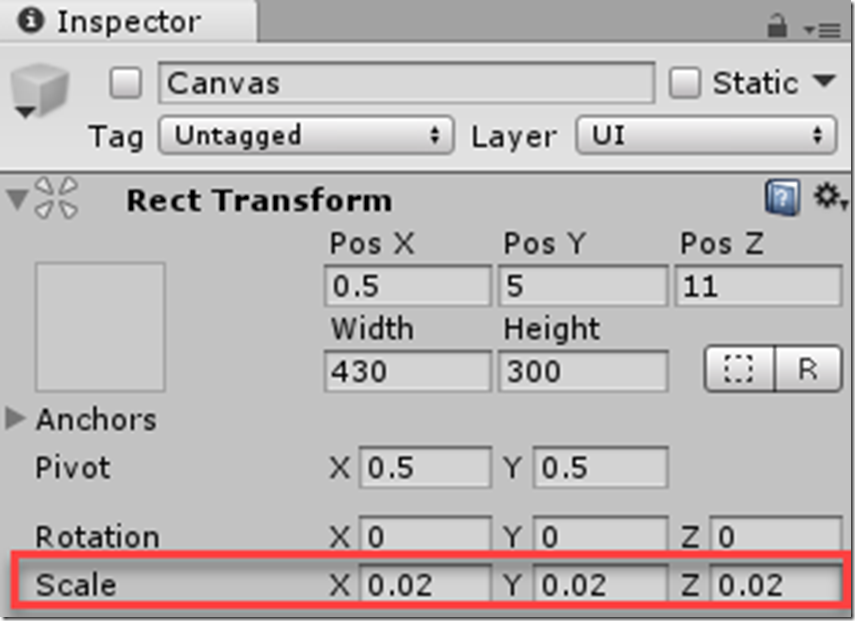

3. Next, we need to size the canvas and place it in the scene. For graphics (and especially text) to render well, we need to oversize the Canvas and then scale it down to fit. Set the following properties:

a. Canvas Pos X = 0.5

b. Canvas Pos Y = 5

c. Canvas Pos Z = 11

d. Canvas Width = 430

e. Canvas Height = 300

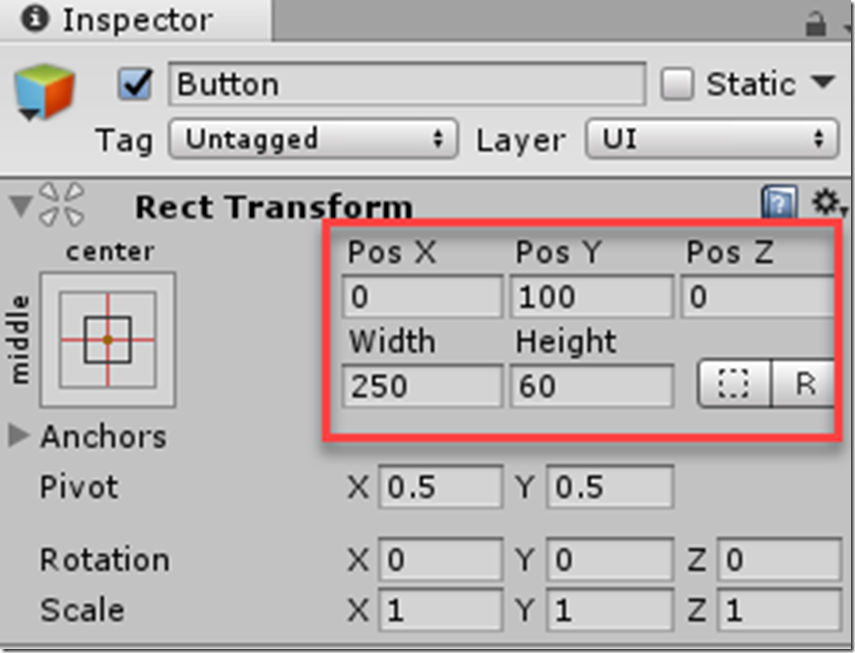

4. Next, we’ll add a button to the Canvas and set its size proportional to the Canvas itself. Right-Click the Canvas and select “UI -> Button”.

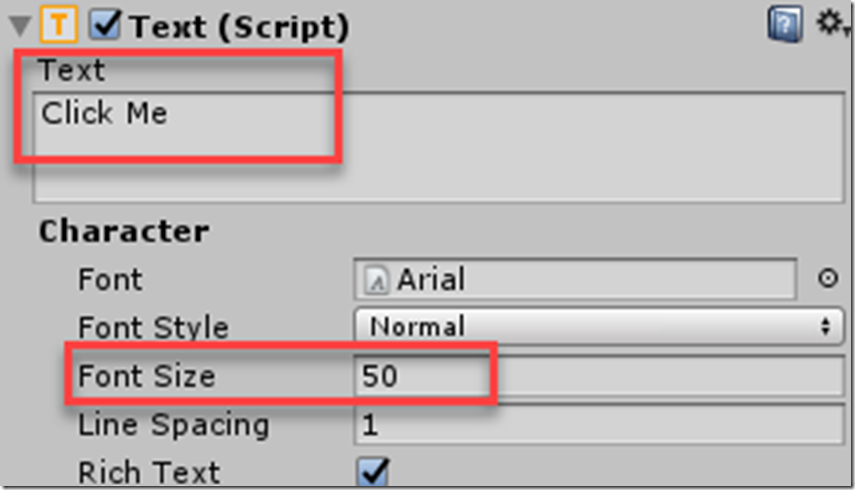

5. Update the Button’s values as shown here:

a. Button Pos X and Pos Z = 0

b. Button Pos Y = 100

c. Button Width = 250

d. Button Height = 60

e. Button -> Child Text component Text = “Click Me”

f. Button -> Child Text component Font Size = 50

6. You should now see a massive button floating in the sky.

A key thing to be aware of when using the Unity UI system is that it is generally ONE-SIDED. This is fine in most cases, but remember that the player can usually walk around the environment. If the player walks behind a UI component, they won’t see anything. To avoid the issue, it’s best to place UI against something, such as walls or 3D planes. That is, unless that is the effect you are going for, but the transition will look very awkward in VR.

7. Which is all well and good if you like REALLY MASSIVE buttons, but let’s scale this down to make it just a HUGE button. We wouldn’t want to go overboard now, would we?

So, select the Canvas and set all its Scale parameters (X, Y, Z) to 0.02.

8. Now when you zoom in to the scene, the button should be nicely scaled down, above the main door to the facility:

![Scaled-down button above the facility door](https://msdntnarchive.z22.web.core.windows.net/media/2017/10/clip_image0351_thumb1.png)

However, if you run the project at this point, it still isn’t accessible. We still need to let the VR system know it’s there and is ready to accept input (to distinguish from other UI Canvases in the scene which are for display only).

To achieve this we simply need to add another script component to the Canvas to register the canvas for Gaze input:

9. Select the Canvas you added in the scene

10. Click on “Add Component” in the inspector and type “Register Pointable Canvas” and select the script that appears with the same name.

Now when you run the project, your gaze cursor will react when it enters the canvas and will let you click the button. Test this if you wish by adding an event to the button to make it do something. At this point it’s just like any other normal button.

If you are familiar with the UI system, you might wonder why we didn’t just set up an Event Camera on the canvas. Surely that would make it work? Except it doesn’t. Event Cameras only take input from the input systems registered with the EventSystem, and the gaze interaction doesn’t currently use that. This may change in the future. For now, it’s more performant to use the gaze system, which is a single raycast running continuously, so there’s no need to add more.
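Hooking up the “Click Me” button from code works exactly as it would for any desktop UI. This sketch uses Unity’s standard `Button.onClick.AddListener`. The script name and log message are hypothetical. Attach the script to any GameObject and drag the button into its `button` field.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical helper: logs each gaze-click on the "Click Me" button.
public class ClickMeResponder : MonoBehaviour
{
    [SerializeField] private Button button; // assign the "Click Me" button in the Inspector
    private int clickCount;

    private void OnEnable()
    {
        if (button != null) button.onClick.AddListener(HandleClick);
    }

    private void OnDisable()
    {
        // Unsubscribe so a disabled or destroyed responder no longer receives clicks.
        if (button != null) button.onClick.RemoveListener(HandleClick);
    }

    private void HandleClick()
    {
        clickCount++;
        Debug.Log("Click Me pressed " + clickCount + " time(s)");
    }
}
```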
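It helps to know how big the canvas actually ends up in the world. A world-space canvas measures its RectTransform in canvas units, and the Transform scale converts those into world units (metres, by the usual Unity convention for Mixed Reality). The sketch below does that arithmetic in plain C# for the values from steps 3, 5 and 7. The `CanvasMath` class is hypothetical. It assumes a uniform scale, an unrotated canvas, and the default centred anchors and pivots.

```csharp
// Hypothetical helper: converts canvas units to world units for a
// uniformly scaled, unrotated world-space canvas.
public static class CanvasMath
{
    public const double CanvasScale = 0.02; // value from step 7

    // World-space size of something measured in canvas units.
    public static double ToWorld(double canvasUnits, double scale = CanvasScale)
    {
        return canvasUnits * scale;
    }

    // World-space Y of a child whose "Pos Y" is given in canvas units,
    // assuming centred anchors/pivots and an unrotated canvas.
    public static double ChildWorldY(double canvasWorldY, double childPosY, double scale = CanvasScale)
    {
        return canvasWorldY + childPosY * scale;
    }
}
```

With the workshop values, the 430 × 300 canvas comes out at about 8.6 m × 6 m, and the 250 × 60 button at roughly 5 m × 1.2 m. `ChildWorldY(5, 100)` puts the button’s centre about 7 m up. Before step 7 (scale 1), the same canvas was 430 m wide, which is why the button first looked so massive.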

Interaction with 3D objects using Gaze

If we aren’t using the UI system and want something more specific to 3D, we need to write our own input-handling scripts.

Several new Interfaces have been created for easier interaction through the toolkit, namely:

· IFocusable for focus enter and exit events. The focus can be triggered by the user's gaze or any other gaze source.

· IHoldHandler for the Windows hold gesture.

· IInputHandler for source that supports up and down interactions, e.g. button pressed, button released. The source can be a hand that tapped, a clicker that was pressed, etc.

· IInputClickHandler for source that is clicked. The source can be a hand that tapped, a clicker that was pressed, etc.

· IManipulationHandler for the Windows manipulation gesture.

· INavigationHandler for the Windows navigation gesture.

· ISourceStateHandler for input source detected and source lost events.

· ISpeechHandler for voice commands.

· IDictationHandler for speech to text dictation.

· IGamePadHandler for generic gamepad events.

· IXboxControllerHandler for Xbox One Controller events.

These interfaces are used in the scripts we attach to objects to make them interactable, depending on how we want the player to interact. Each interface defines event methods that our script implements, so the script can respond when that event happens. Simple.
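As an example of the pattern, here is a sketch of a click handler. It assumes the HoloToolkit-era signature `void OnInputClicked(InputClickedEventData eventData)` from the `HoloToolkit.Unity.InputModule` namespace. Check the interface in your copy of the toolkit, as names have changed between releases. The script name and the recolouring behaviour are hypothetical. The object also needs a Collider (the default cube has one) so the gaze raycast can hit it.

```csharp
using HoloToolkit.Unity.InputModule;
using UnityEngine;

// Hypothetical example: gives the object a random colour each time the
// configured input sources report a click on it while it has focus.
[RequireComponent(typeof(Renderer))]
public class ClickToRecolor : MonoBehaviour, IInputClickHandler
{
    public void OnInputClicked(InputClickedEventData eventData)
    {
        // Reading .material creates a per-object material copy, so other objects are unaffected.
        GetComponent<Renderer>().material.color = Random.ColorHSV();
        eventData.Use(); // mark the event as used (standard Unity BaseEventData behaviour)
    }
}
```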

First, we’ll create a simple debug script that will log to the Unity console then we’ll randomly attach it to “things” in the scene.

The Island scene already comes pre-prepared with some interactions, if you haven’t already, try tapping on the keypad to see if you can unlock the secret code (hint, it has something to do with cake), or wandering over to the far-left hand side of the island to solve the crates puzzle.

1. Create a new Cube and place it in the scene at position X = 1, Y = 1, Z = -6.

This should place it nicely on the beach.

2. Select the Cube and click “Add Component” in the inspector and click on “New Script”

3. Give it an appropriate name, e.g. “WhatHappened”, ensure the language is set to “C Sharp” and click “Create and Add”.

4. Find the script in the Root of your Assets folder and double click it to open it up in Visual Studio.

5. You should have a script that looks something like this now:

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class WhatHappened : MonoBehaviour {

    // Use this for initialization
    void Start () {

    }

    // Update is called once per frame
    void Update () {

    }
}
```

6. Next, we’ll add a new using statement to the top of the script, to be able to find the HoloToolkit Input interfaces. Add the following to the top of the script:

```csharp
using HoloToolkit.Unity.InputModule;
```

7. We then need to add an interface to the script. For simplicity’s sake, we’ll start with the IFocusable interface. Update the class definition as follows:

```csharp
public class WhatHappened : MonoBehaviour, IFocusable {
```

8. Once it’s added, you will notice red squiggles under the new interface. This simply means we haven’t implemented the interface’s methods yet. Either press “Ctrl + .” to bring up Quick Actions and select “Implement Interface”, or simply paste the following code inside your class (you can overwrite the Start and Update functions if you wish, as we’re not using them).

```csharp
public void OnFocusEnter()
{
    Debug.Log("I'm looking at a " + gameObject.name);
}

public void OnFocusExit()
{
    Debug.Log("I'm no longer looking at a " + gameObject.name);
}
```

9. In each of the Enter and Exit functions, I’ve simply added a Debug.Log message to output to the Unity console when each event happens. For your game objects, you’ll want to update this for what you need to happen, like activate the monkey, flip the pancake or take over the world, or whatever.

10. Save the script and return to Unity (provided you weren’t in play mode). Now when you run the scene and gaze at the box on the beach in your headset, the messages will pop up in the Unity console. Simples.

And that’s all there really is to it. It’s the same for all the other interfaces: you simply pick and choose which interfaces / events you want to use per object. Want to pick up objects? Use the IHoldHandler interface. Want speech interactions? Use the ISpeechHandler interface, and so on.

It is worth downloading the MixedRealityToolkit source project (just be sure to select the Dev_Unity_2017.2.0 branch) and examining the example scenes provided with it.

If you see “HoloLensCamera” in a scene, you simply need to replace it with “MixedRealityCameraParent” for it to work with the newer immersive headsets. Eventually, all the examples will be updated to work with both HoloLens and the immersive headsets using the newer camera controller.
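As an example of going beyond `Debug.Log`, here is a sketch of a hypothetical variant that tints the object while it has gaze focus. It uses the same `IFocusable` methods as `WhatHappened`. It assumes the object has a Renderer whose material exposes a main colour, which is true for the default cube.

```csharp
using HoloToolkit.Unity.InputModule;
using UnityEngine;

// Hypothetical variant of WhatHappened: tints the object while it has gaze focus.
[RequireComponent(typeof(Renderer))]
public class FocusHighlight : MonoBehaviour, IFocusable
{
    public Color highlightColor = Color.yellow;

    private Renderer targetRenderer;
    private Color originalColor;

    private void Awake()
    {
        targetRenderer = GetComponent<Renderer>();
        // Accessing .material gives this object its own material copy,
        // so tinting it doesn't affect other objects sharing the original.
        originalColor = targetRenderer.material.color;
    }

    public void OnFocusEnter()
    {
        targetRenderer.material.color = highlightColor;
    }

    public void OnFocusExit()
    {
        targetRenderer.material.color = originalColor;
    }
}
```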
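For instance, here is a speech sketch. It assumes the HoloToolkit `ISpeechHandler` signature `void OnSpeechKeywordRecognized(SpeechEventData eventData)` and a `SpeechInputSource` in the scene configured with the keywords “Bigger” and “Smaller”. The keywords and script name are hypothetical. As with the other interfaces, the toolkit normally routes the event to the object under gaze, so look at the object while speaking. On UWP you will also need the Microphone capability enabled in the Player Settings.

```csharp
using HoloToolkit.Unity.InputModule;
using UnityEngine;

// Hypothetical example: resizes the focused object when a keyword is recognised.
public class SpeechResize : MonoBehaviour, ISpeechHandler
{
    [SerializeField] private float step = 1.5f; // scale factor applied per command

    public void OnSpeechKeywordRecognized(SpeechEventData eventData)
    {
        switch (eventData.RecognizedText.ToLower())
        {
            case "bigger":
                transform.localScale *= step;
                break;
            case "smaller":
                transform.localScale /= step;
                break;
        }
    }
}
```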

What we learned

The input experience is usually the biggest difference between titles. Whether you let the user interact directly with the environment or just provide pointers to shoot or highlight things will dramatically change your implementation. This section has walked you through most of the interaction methods available in the MRTK. For simple pointer-style games, look at the Unity VR Samples pack, as it just uses basic raycasting.

There are many other patterns provided through the Mixed Reality API, most of which are already integrated with Unity itself, such as Voice, Dictation, Gamepads and so on. The MRTK does have some extensions of these Unity behaviors to make these easier to use, so they are also worth looking at.

Further Reading

Chapter 6 – Wrapping up

This workshop is by no means meant to be the complete guide to building Mixed Reality projects, just enough to whet your appetite and get you started. We’ve covered:

· Spinning up Unity for Mixed Reality Development

· Adding content, models, placement and scaling

· Enabling users to navigate through a scene (although you could do a standing / on-rails project if you wish)

· Giving the player something to do and interact with (or, alternatively, throwing stuff at the player to shoot)

There is a world of choice out there and so many different kinds of Mixed Reality experiences you can build.

Here are a few links to go even further:

· Holograms 220: Spatial sound

Sound and audio are important, especially when linked to something in the scene. Both Unity and the UWP platform have a lot to offer for sound, plus a few helpers / managers in the MRTK.

· Holograms 212: Voice

Without a keyboard, you need to offer Mixed Reality players more ways to interact, and nothing is more natural than voice. Whether it’s dictation, simple commands or more, there are powerful things you can do with voice.

Also think about users who can’t hear: use speech-to-text systems to give those players feedback as well, from dialogue to environmental cues.

The MRTK also includes examples and APIs for voice handling.

· Holograms 240: Sharing holograms

A single-player experience is fine, but wouldn’t it be better to play together? There are several ways to share position, placement and tracking data with fairly low overhead: the Unity networking system (UNET) or other frameworks such as Photon. The MRTK also includes APIs for sharing.

· Cognitive Services / Microsoft Cognitive Toolkit

Cognitive services / AI play an increasing part in everyday systems and applications, and this is especially true for Mixed Reality. You need to think beyond the realms of the normal and reach for the stars to stand out. Whether you are doing simple voice recognition, something more complex like an in-game AI chat bot, or even shape and drawing recognition, there is a world of power out there.

· Mixer

Mixer is Microsoft’s new streaming platform, like Twitch or YouTube Live Streaming. Mixed Reality experiences shouldn’t be limited to the player and what they are doing. You should be able to share what you are doing and even let streaming viewers interact with your play. This is what developer integration through the extensive Mixer API enables. Why play alone when you can play with the world, whether they have a headset or not!

Don’t be normal!

Go beyond with your creation, start small and then keep adding, with Mixed Reality the world is finally your playground, without the confines of your desk.
