Image Based Motion Analysis with Kinect V2 and OpenCV

Guest blog by Edward Hockedy, 3rd year computer science student

Introduction and project background

I am a third year computer science undergraduate at Durham University, and recently finished my year-long project on image based motion analysis.

Hand and body motion is becoming an ever more common form of human-computer interaction. Analysing images provides a lot of information for identifying the movements a user is making, which in turn allows the user to interact with on-screen objects.

The main focus of the project was to investigate the use of image analysis for hand motion and pose recognition in real time. It also examined the feasibility of using human motion as a method of computer interaction. This was done by creating an interactive application that allows a user to control objects and menus in both 2D and 3D using only the poses and motions made by their hands. It uses readily available hardware and places only a few restrictions on the user.

To gather information about the user, a Microsoft Kinect v2 is used, which can generate depth data over a wide area. This data can then be examined per pixel or interpreted as a whole image to obtain useful information about the pose and position of a user's hands. Depth data was chosen because it works regardless of the colour of the user, the environment or the lighting, and because it makes the position of an object in 3D space easy to obtain.

Development was done using OpenCV and the Kinect SDK, which Microsoft provides for free.

The main applications of this project are in gaming, specifically VR (virtual reality). Allowing a user to have control without any physical equipment in their hands can provide an even more immersive experience than is currently offered. Other applications could be in security (monitoring human movements) or in general human-computer interaction.

Hand tracking

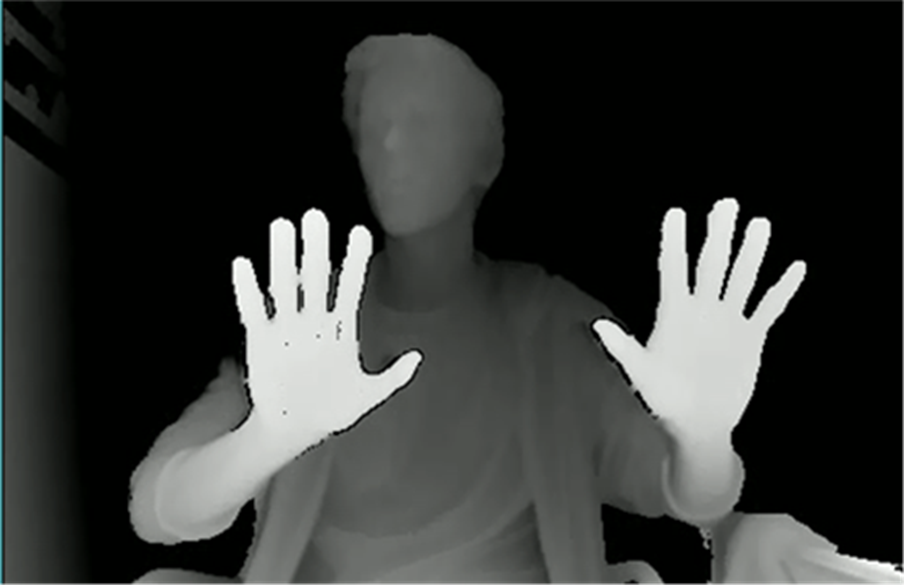

While the Kinect has very robust hand tracking capabilities, I decided to implement my own version that specifically used the depth image data as input. Once the depth information is generated, the depth value can be mapped to a colour intensity, as seen in figure 1.

Figure 1, the depth from the Kinect. The higher the intensity, the closer to the camera.

A single hand can easily be found in a depth image. The system assumes that the closest object to the camera is the user’s hand, and so takes this to be the hand. In the case of two hands, the second closest object that is out of range of the first is identified as the second hand.
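As a rough illustration of the closest-object idea, the sketch below marks every pixel within a fixed depth band of the nearest valid pixel. It is not the project's code: the function name `segment_nearest`, the `band_mm` threshold, the use of millimetres, and the convention that a depth of 0 means "no reading" are all assumptions made for the example.

```python
def segment_nearest(depth, band_mm=150):
    """Return (nearest_depth, mask) for a 2D list of depth values.

    Assumptions for this sketch: depths are in millimetres, 0 marks an
    invalid pixel, and the hand is everything within band_mm of the
    nearest valid pixel.
    """
    valid = [d for row in depth for d in row if d > 0]
    if not valid:
        # Nothing in view: return an empty mask.
        return None, [[False] * len(row) for row in depth]
    nearest = min(valid)
    mask = [[0 < d <= nearest + band_mm for d in row] for row in depth]
    return nearest, mask


# Tiny hypothetical depth frame: a hand at about 700 mm, background at 2000 mm.
depth_frame = [
    [0, 2000, 2000, 2000],
    [2000, 700, 720, 2000],
    [2000, 710, 900, 2000],
]
nearest, hand_mask = segment_nearest(depth_frame)
```

A second hand could be handled in the same spirit by masking out the first region and repeating the search, although how "out of range" was defined in the project is not stated here.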

However, finding just the hand area is not particularly useful on its own; a standardised point on the hand, such as the centre of the palm, is more useful. To find it, a Gaussian blur is applied to the image, which accentuates the parts of the image with the highest pixel density, in this case the hand (or hands). Requiring the hands to be the closest object to the camera is a slight limitation of the system, but for an application specifically designed with hand control in mind it is not too much of a burden. An example of the blurred image can be seen in figure 2.

Figure 2, the blurred image. Notice the points of highest intensity correspond to the hand centres.
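To show why blurring pulls the brightest point towards the palm, here is a minimal sketch that uses a simple box blur as a stand-in for the Gaussian blur, written in plain Python so it runs without any libraries. With OpenCV one would more likely call `cv2.GaussianBlur` followed by `cv2.minMaxLoc`. The helper names `box_blur` and `brightest_pixel` and the toy hand image are assumptions for illustration only.

```python
def box_blur(image, radius=1):
    """Average each pixel with its neighbours (a crude stand-in for a Gaussian blur)."""
    h, w = len(image), len(image[0])
    area = (2 * radius + 1) ** 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
            out[y][x] = total / area  # pixels outside the image count as 0
    return out


def brightest_pixel(image):
    """Return (row, col) of the first pixel holding the maximum value."""
    best, best_val = (0, 0), image[0][0]
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value > best_val:
                best, best_val = (y, x), value
    return best


# Hypothetical hand mask: a 3x3 "palm" with a one-pixel "finger" on top.
hand = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
palm_centre = brightest_pixel(box_blur(hand))
```

The finger pixel is bright in the unblurred image, but after blurring only the middle of the palm is surrounded by bright neighbours on all sides, so it becomes the maximum.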

So with the hand centre(s) taken to be the points of highest intensity on the blurred image, the hands can be tracked. In order to speed up processing, once a hand is found in the first frame the next frame does not need to be checked in its entirety. Since the hand will not move a large amount between frames, only a small area around the current hand location needs to be checked. This also allows the hand to move backwards such that it is no longer the closest thing to the camera.
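The windowed search described above might look something like the following sketch. The function name `track_in_window` and the `half_size` parameter are hypothetical, and the window size used in the project is not stated here.

```python
def track_in_window(blurred, prev, half_size=2):
    """Find the brightest pixel within a square window around the previous hand position.

    blurred is a 2D list of intensities, prev is the (row, col) found in the
    previous frame, and half_size is an assumed window half-width in pixels.
    """
    h, w = len(blurred), len(blurred[0])
    py, px = prev
    best, best_val = prev, float("-inf")
    for y in range(max(0, py - half_size), min(h, py + half_size + 1)):
        for x in range(max(0, px - half_size), min(w, px + half_size + 1)):
            if blurred[y][x] > best_val:
                best, best_val = (y, x), blurred[y][x]
    return best


# Hypothetical frame: the hand has moved slightly and is now less bright
# than another object that sits outside the search window.
frame = [[0.0] * 8 for _ in range(6)]
frame[3][4] = 0.9   # the hand, near its previous position
frame[0][7] = 1.0   # a closer object elsewhere in the image
new_pos = track_in_window(frame, prev=(2, 3))
```

Because only the window is examined, the brighter object elsewhere in the frame is ignored, which is what lets the hand drop behind other objects without being lost.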

Pose identification

The pose identification is done using machine learning, specifically random forests. This method was chosen because it can classify unseen hand poses, doing its best to identify the pose based on previously seen poses. It also guarantees an identification (not necessarily a correct one) and is fast to evaluate. Finally, if further poses are needed, they can easily be added and the classifier re-trained.

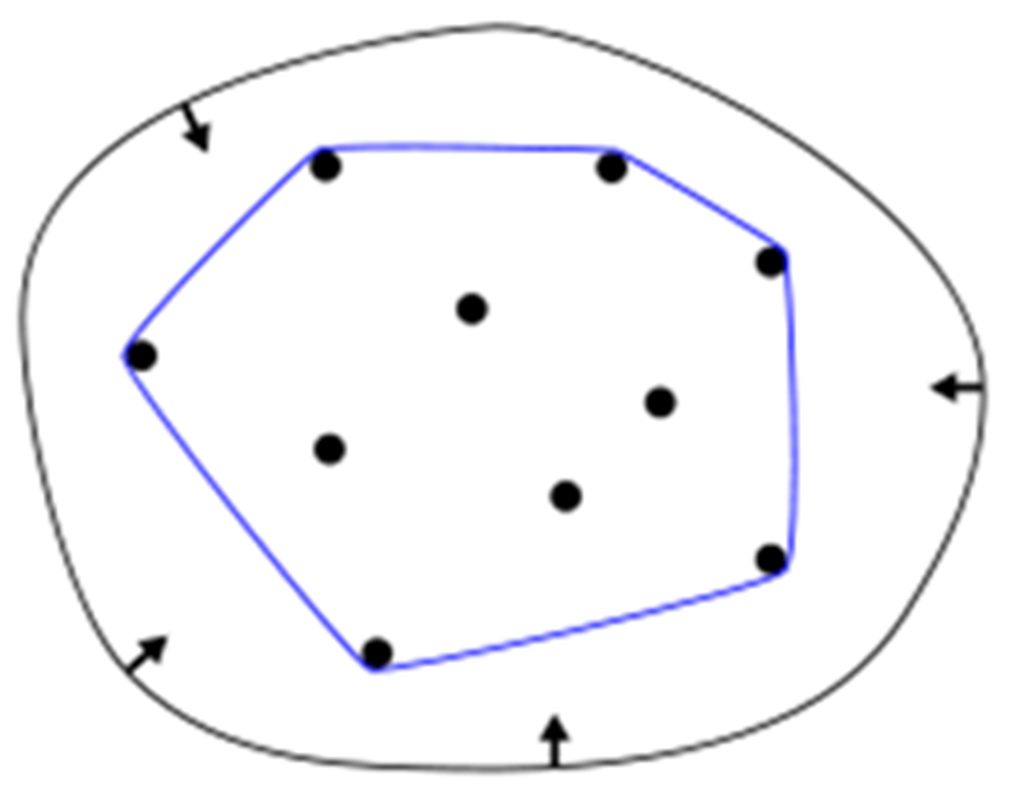

Machine learning requires the input data to be in a certain format in order to draw relationships between the samples. In the case of hands, one of the best ways to represent them is through an estimation of the shape outline. This can be done with a convex hull, which is the smallest convex shape enclosing a set of points, where convex means that any line drawn between two points of the shape stays within it; see figure 3.

Figure 3, visual representation of a convex hull. Source: https://en.wikipedia.org/wiki/Convex_hull#/media/File:ConvexHull.svg

Once the convex hull set has been calculated for the hand, the distance between each point and the hand centre is calculated. A total of 25 feature points are used for each hand.
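The sketch below shows one way such a feature vector could be built: compute the hull with the monotone chain algorithm, measure each hull point's distance to the hand centre, and resample to a fixed length of 25. The hull algorithm and the resampling step are assumptions made for this example (the project may well have used OpenCV's `cv2.convexHull` and a different way of arriving at 25 values), and the function names are hypothetical.

```python
import math


def convex_hull(points):
    """Monotone chain convex hull; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each half is the first point of the other half.
    return lower[:-1] + upper[:-1]


def hull_distance_features(points, centre, n_features=25):
    """Distances from hull points to the centre, resampled to n_features values.

    Resampling by index is an assumption; the source only states that
    25 feature points are used per hand.
    """
    hull = convex_hull(points)
    dists = [math.hypot(x - centre[0], y - centre[1]) for x, y in hull]
    return [dists[i * len(dists) // n_features] for i in range(n_features)]


# Hypothetical outline: a square with two interior points that the hull discards.
outline = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2), (1, 3)]
hull = convex_hull(outline)
features = hull_distance_features(outline, centre=(2, 2))
```

A fixed-length vector matters because a random forest expects every sample to have the same number of features, whereas the number of hull points varies from frame to frame.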

A total of three different poses can be identified: open hand, closed hand, and index finger up. Only a small number of poses were chosen because this allows for higher identification accuracy. A large amount of functionality can also be obtained from just a few poses in the final application. The poses are shown in figure 4.



Figure 4, the three possible poses

Final application

The end product of the project is a real time application where a user can interact with objects in a 3D environment as well as with a 2D menu. The user can use both their hands at the same time. It was built using OpenGL for the graphics, and the physics engine was Bullet.

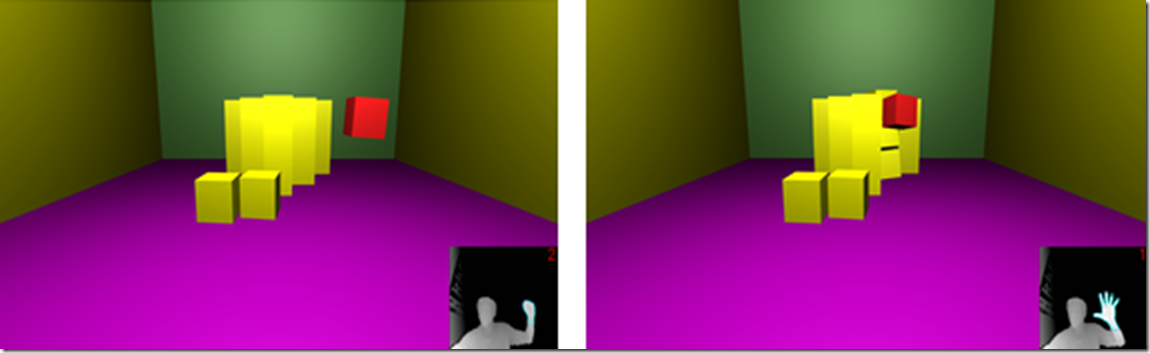

The first part of the interactive application is a 3D environment. Here a user can interact with cubes depending on the hand poses they are making. The user selects the cube they want to interact with by moving their hand towards the desired cube in 3D space. Once the cube is selected (it is highlighted in red while the user makes the open hand pose), they can pick it up by grabbing (making the closed hand pose) and moving their hand. A force is applied to the cube in the physics engine using SUVAT equations. Figure 5 shows a user throwing a cube.

Figure 5, picking up and throwing a cube

If the user lets go of a cube they are holding, it will fall down. If they let go while moving their hand, the cube will fly off in the direction they are moving, with a force dependent on their hand speed.
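As a rough sketch of how a hand-speed-dependent force could be derived from the SUVAT equations, the example below estimates the hand velocity from two tracked positions, uses v = u + at to get the acceleration needed to bring the cube to that velocity in one frame, and then applies F = ma. This is one possible reading and not necessarily how the project computed it; the function name, the units (metres, seconds, kilograms) and the sample numbers are assumptions.

```python
def release_force(prev_pos, curr_pos, frame_dt, mass, cube_velocity=(0.0, 0.0, 0.0)):
    """Force that would bring the cube to the hand's velocity within one frame.

    prev_pos and curr_pos are hand positions (x, y, z) in consecutive frames,
    frame_dt is the time between frames, and mass is the cube's mass.
    """
    # Hand velocity from displacement over time: v = s / t.
    hand_velocity = tuple((c - p) / frame_dt for p, c in zip(prev_pos, curr_pos))
    # From v = u + at: a = (v - u) / t, with u the cube's current velocity.
    acceleration = tuple((v - u) / frame_dt for v, u in zip(hand_velocity, cube_velocity))
    # Newton's second law: F = m * a.
    return tuple(mass * a for a in acceleration)


# Hypothetical throw: the hand moves 5 cm along x in one 0.1 s frame, cube mass 2 kg.
force = release_force((0.0, 1.0, 0.0), (0.05, 1.0, 0.0), frame_dt=0.1, mass=2.0)
```

A faster hand gives a larger displacement per frame and therefore a larger force, which matches the behaviour described above.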

If the user is using two hands, they can control two cubes independently in the same way they would control one.

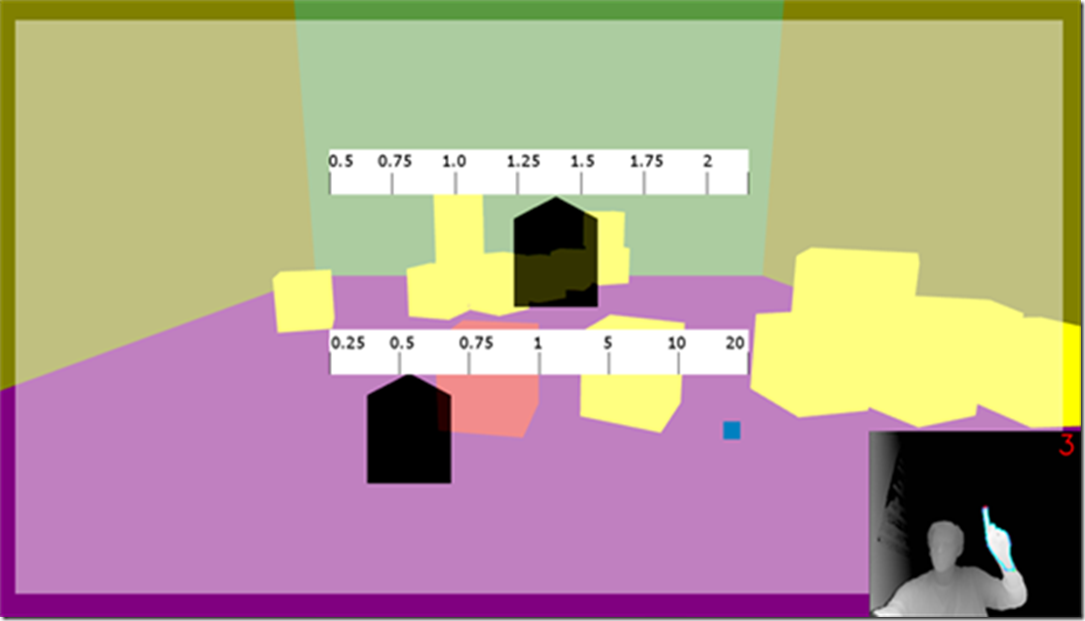

The user can change a number of parameters of the cubes, specifically size and mass. There are multiple ways of doing this. One way is with the 2D menu, which is opened by making the third pose, index finger up. The user can then move sliders, as seen in figure 6. An alternative way to change a cube's size is to grab it with two hands, then move the hands apart.

Figure 6, 2D menu

Results

To evaluate the effectiveness of the system, 14 test users were asked to have a play with the application, and then perform a few tasks which were timed. They also had to fill in a questionnaire where they scored statements from 1 to 5 based on how much they agreed. The questionnaire results can be seen in table 1.

| Statement | Mean |
| --- | --- |
| It was easy to select a cube | 3.86 |
| It was easy to pick up a cube | 4.07 |
| It was easy to throw a cube | 4.14 |
| The system responded when I wanted it to | 3.36 |
| The system often did things I didn’t want | 3.00 |
| The menu was easy to open | 4.50 |
| It was easy to change cube size in the menu | 4.36 |
| The actions were intuitive | 3.29 |
| My hand/arm did not get tired | 4.36 |
| It was easy to select 2 cubes | 3.00 |
| It was easy to pick up 2 cubes | 3.00 |
| It was easy to pick up a cube with 2 hands | 2.15 |

Table 1, the mean score for each statement.

As can be seen in the responses, all but one statement got a score of neutral (3) or above. This shows the system generally worked as planned, and that users were satisfied with how easy it was to use.

There were four timed tasks to do. The average times are shown in table 2.

| Test | Mean |
| --- | --- |
| Build tower of 2 | 21.51 |
| Build tower of 3 | 93.99 |
| Open menu + enlarge cube (1 hand) | 12.00 |
| Enlarge cube (2 hands) | 91.12 |

Table 2, the mean time for each task.

As can be seen, the times to perform a task are much longer than if they were performed in the real world (such as building a tower of blocks). This is to be expected, as it takes a while to get used to the movement required to be precise with the system. Occasional misclassifications of pose and the difficulty of viewing a 3D environment on a 2D monitor also contribute.

In general, however, users found that the more they used the system, the easier tasks became. In particular, I became very proficient at using the system, since I had not only been developing and testing it for a long time but also knew how best to position my hands to allow the system to work with the highest accuracy.

Comparing different demographics showed that gamers and computer-literate users had faster times and gave higher scores. This suggests the system is not fully intuitive, and is easier for those with more experience in similar tasks.

A few other results are as follows:

· 2D interactions were easier than 3D ones, which suggests 2D should be used where possible, provided it doesn’t reduce immersion.

· The application runs at 10 fps. This is lower than planned, but still allows for instant interaction.

· Pose classification accuracy was 100% on the test data set.

· For test users, pose classification accuracy ranged from 63% for some poses to 100% for others.

Conclusion

I really enjoyed my time working on the project. It was fun to have a very interactive and visual application to develop, and to use a wide array of techniques to make the final system work. Results show that people generally agreed that the system worked as designed, or at least as well as they expected it to. Pose classification was generally accurate, and hand tracking didn’t pose too many issues in the right settings. There are numerous possible extensions to the project, such as adding more functionality or refining the pose estimation or hand tracking methods.

A demonstration of the final working system can be seen here: