3D Reconstruction of Fire-Damaged Parchments

Guest post by Sergio Hernandez, Microsoft Student Partner at University College London

When you think of a document, you generally think of a piece of paper with writing on it. On this piece of paper, a story can be written, a new law created, a discovery made public or an event described. We generally think of writing as a description of the present state of the world in which we live, or of the world in which someone’s mind lives. However, documents can also describe the future or, even more importantly (and usually far more accurately), the past. Writing materials have allowed humans to share their knowledge, skills and thoughts in a way that transcends time and space, and it is fair to say that being able to review and learn from the ideas, victories and defeats of others in this way has been a major factor in our development as a species.

You need not worry if, this morning, a gust of wind swept your daily newspaper away as you commuted through the streets, coffee in hand, to start another day of work. You will not miss the day’s news. All major newspapers now have a website where you can access the same information, which certainly cannot be so easily swept away, by nature or otherwise. Even before the Internet age, you could simply buy another printed copy of the newspaper, or borrow your neighbour’s. The historically peculiar times we live in make us forget that such an enabling flow of information has not always been the norm. In fact, for most of history, it has not been.

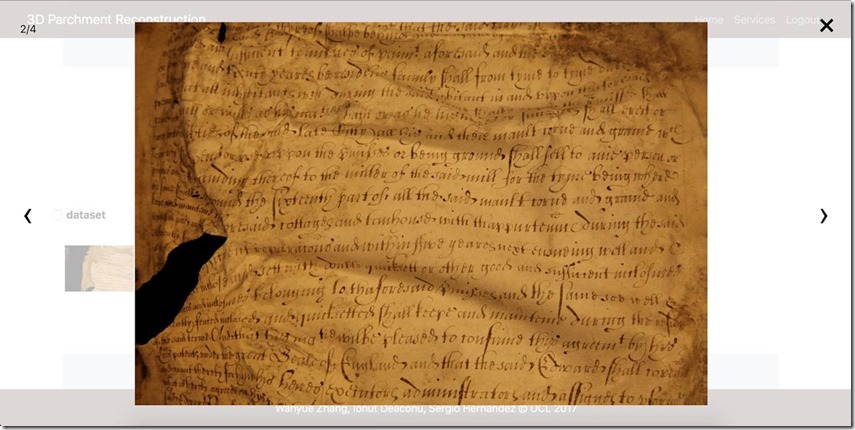

Now, close your eyes and imagine yourself in medieval clothing, in a medieval building, reading medieval documents. If your imagination is accurate, you are most likely reading a parchment under a wooden roof (the clothing I leave entirely to the reader’s imagination). Parchment is a writing material made from the untanned skin of animals, and it has been used to record history for over two millennia. It only began to be replaced by paper in the late Middle Ages, around 500 years ago. As you may infer, given that wood is highly flammable and that precautions against urban fires were far less thorough in the past than they are now, many documents written only on parchment were lost in such fires. Unlike paper, however, parchment can survive high heat. Nonetheless, it hardens and wrinkles, becoming barely legible. As a result, many important historical events and records remain hidden from our understanding in the folds of the wavy, and in some cases even sharp, landscapes these parchments take on. The current approaches to fire-damaged parchments are manual examination and invasive chemical processes. Both can be helpful on certain occasions, but neither achieves consistently good results.

During the 2017-2018 academic year, as a Computer Science student at UCL, I was teamed with two classmates: Rosetta Zhang and Ionut Deaconu. They are both talented and hard-working students, and in retrospect I feel compelled to mention what a pleasure it was to work with them. Starting in October, our team would develop a year-long project offering an alternative solution to the problem described above, as part of a UCL Computer Science module that pairs teams of students with industry clients to solve real-world problems.

During the fall of 2017, my new teammates and I were introduced to Professor Tim Weyrich, a researcher in the UCL Computer Science Department. Professor Weyrich presented us with what at first seemed like magic: a set of algorithms that could flatten a 3D representation of a fire-damaged parchment (or any other kind of wrinkled parchment, for that matter) and, to our surprise, make it legible again. I still remember the moment he showed us a video of the algorithms at work, in which a wrinkled parchment slowly became a flat one. After getting us excited about the solution, he presented us with the problem: using the algorithms required a tech-savvy user who could compile and configure them, and correctly feed the outputs of one process into the inputs of the next. He proposed that we create a website (https://parchment.cloudapp.net/) where non-technical users could access these services, backed by a server automated to respond to users’ requests. That was the moment our journey began.

Our home page (https://parchment.cloudapp.net/)

During the first weeks after that meeting, we familiarised ourselves with the technologies and theory behind the project, as well as with our prospective users and the problem itself. We researched many of the techniques our client used in the algorithms, as well as technologies we might use for the overall architecture of our product (such as Microsoft Azure Cloud Services to host our servers and website). We also interviewed archivists from the British Library to understand how familiar they were with our client’s technological approach, as well as their main concerns and workflows. We established from the beginning that a defining feature of our product would be its user-centred design.

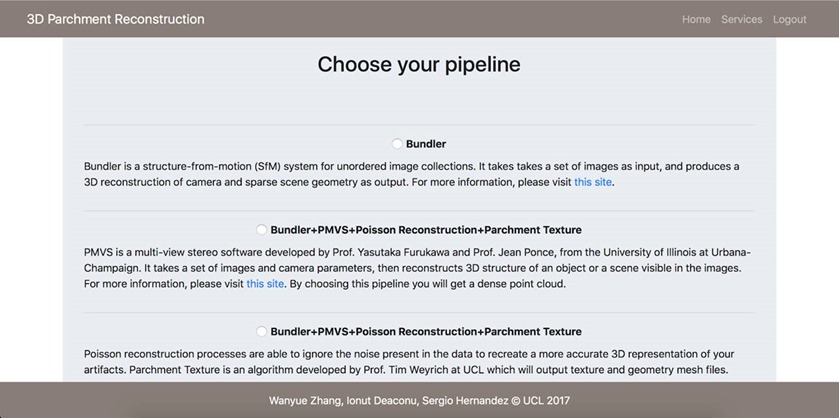

Once we had a deeper understanding of the project, we began designing the overall system while starting to compile the algorithms. The system was divided into two main components, our web application and our back-end services, tightly integrated with each other. The web application would have a minimal design and guide users through a sequential set of actions to obtain their results. It would allow users to register and log in, upload sets of images linked to their accounts, select a set of algorithms to run on these images, and finally download the results when available. The back-end services, running on a virtual machine hosted in Azure, would then retrieve the images from the web application and start the algorithmic pipeline for each user request. Ideally, different requests could run concurrently on the server, so that users would not need to wait for other users’ requests to finish. Additional features would include email notifications alerting users when a request starts and finishes, and an image gallery to display the uploaded pictures.
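The sequential flow described above (upload, submit, process, download) can be modelled as a small state machine. The Python sketch below is illustrative only: the state names, the `advance` helper and the `NOTIFY_ON` set are hypothetical and are not taken from the project’s code.

```python
from enum import Enum, auto


class RequestState(Enum):
    """Hypothetical lifecycle of a single user request."""
    UPLOADED = auto()   # images uploaded and linked to the user's account
    SUBMITTED = auto()  # user selected algorithms and submitted the request
    RUNNING = auto()    # back-end pipeline is processing the images
    FINISHED = auto()   # results are ready for download


# Each state may only advance to the next one, mirroring the sequential UI.
NEXT_STATE = {
    RequestState.UPLOADED: RequestState.SUBMITTED,
    RequestState.SUBMITTED: RequestState.RUNNING,
    RequestState.RUNNING: RequestState.FINISHED,
}

# States whose entry would trigger an email (hypothetical hook).
NOTIFY_ON = {RequestState.RUNNING, RequestState.FINISHED}


def advance(state: RequestState) -> RequestState:
    """Return the next state, or raise if the request is already finished."""
    if state not in NEXT_STATE:
        raise ValueError(f"{state.name} is a final state")
    return NEXT_STATE[state]
```

Keeping the transitions in one table makes it easy to check that the interface never offers a step out of order.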

While we were compiling, I focused largely on Bundler and PMVS2, two software packages that together convert images of an object taken from different angles into a 3D reconstruction of that object. They were developed by researchers at Cornell University and the École Normale Supérieure de Lyon, respectively. At this point, we did not yet have access to the virtual machine we would later use to store and run the algorithms and to host our web application, so we had to compile and configure them on our personal machines. I soon realised that compiling these programs required installing a large number of dependencies and configuring the paths in the algorithms’ main programs to point to them. Furthermore, we were using a different operating system from the one we planned to use on our virtual machine. Together, these facts meant that once we gained access to the virtual machine, we would need to recompile and reconfigure the algorithms. We therefore set up a Docker container to host our algorithmic services: Docker gave us a highly customisable and flexible virtual environment in which we could work independently of our own computers, since the container could later be exported to a different machine. We also configured the container to share specific folders with the host computer it ran on, so that we could easily move data in and out of it. When we gained access to the virtual machine in Microsoft Azure, we configured it and migrated the Docker container to it.
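As an illustration of the folder sharing described above, the Python sketch below assembles a `docker run` command that bind-mounts host folders into a container using Docker’s `-v host_path:container_path` option. The image name `parchment-pipeline` and the paths are hypothetical, and the sketch assumes a fresh container per command (`--rm`), which may differ from how the project actually ran its container.

```python
import shlex


def docker_run_command(image: str, shared: dict[str, str], command: list[str]) -> list[str]:
    """Build a `docker run` argument list that bind-mounts host folders.

    `shared` maps host paths to paths inside the container; each pair becomes
    a `-v host:container` option, so files written on either side are visible
    on the other.
    """
    # --rm discards the container when the command exits; mounted data persists.
    args = ["docker", "run", "--rm"]
    for host_path, container_path in shared.items():
        args += ["-v", f"{host_path}:{container_path}"]
    args.append(image)
    args += command
    return args


if __name__ == "__main__":
    cmd = docker_run_command("parchment-pipeline", {"/home/me/data": "/data"}, ["ls", "/data"])
    print(shlex.join(cmd))  # docker run --rm -v /home/me/data:/data parchment-pipeline ls /data
```

Building the argument list rather than a single shell string avoids quoting problems when it is later passed to `subprocess.run`.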

After much work, I compiled both programs in the Docker container and obtained valid output from each. At this point, we started working on a pipeline, initially consisting of Bundler, designed to be extensible and flexible so that more algorithms could easily be added in the future. I also began automating the process triggered once images were uploaded to our servers, including preparing the output for the user to download once it was ready. We designed several scripts that run when images are uploaded: they start the pipeline (in this case Bundler, several commands to manipulate the input and output, and other tools such as “mogrify”) and define the steps needed to produce the output and make it available for download. In the future, these scripts can easily be edited to include new algorithms that use Bundler’s output, and new scripts following similar logic can be written to automate new pipelines.
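The extensibility described above can be sketched as an ordered list of named steps, each operating on a request’s working directory. This is a minimal Python sketch, not the project’s actual scripts: the `Pipeline` class and the placeholder steps are hypothetical, and in practice each step would wrap a command such as Bundler or `mogrify`.

```python
from pathlib import Path
from typing import Callable

# A step receives the request's working directory and does its work there.
Step = Callable[[Path], None]


class Pipeline:
    """Ordered, extensible list of processing steps (hypothetical sketch)."""

    def __init__(self) -> None:
        self.steps: list[tuple[str, Step]] = []

    def add_step(self, name: str, step: Step) -> "Pipeline":
        self.steps.append((name, step))
        return self  # allow chaining

    def run(self, workdir: Path) -> list[str]:
        """Run every step in order; return the names of the completed steps."""
        completed = []
        for name, step in self.steps:
            step(workdir)
            completed.append(name)
        return completed


if __name__ == "__main__":
    import tempfile

    def prepare_images(workdir: Path) -> None:
        # Placeholder for input manipulation (e.g. resizing with mogrify).
        (workdir / "prepared.txt").write_text("ok")

    def package_results(workdir: Path) -> None:
        # Placeholder for packaging outputs for download.
        (workdir / "results.txt").write_text("ok")

    with tempfile.TemporaryDirectory() as tmp:
        pipeline = Pipeline().add_step("prepare", prepare_images).add_step("package", package_results)
        print(pipeline.run(Path(tmp)))  # ['prepare', 'package']
```

Adding a new algorithm then means appending one more step that reads the previous step’s output from the same working directory.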

Pipeline selection step

For many users to be able to request our services at the same time, we needed to accomplish three things: first, to run our pipeline in the background, so that many algorithmic processes could run concurrently; second, a well-organised file system that could distinguish and locate the input files, each algorithm’s outputs and the download files for every individual request; and third, a way to make each algorithm write its output to the location reserved for its request. We solved the first task by adapting the scripts that start the pipeline so that its algorithms run as background processes, which supports concurrent processing. We solved the second by naming each request’s files after a unique alphanumeric identifier generated for that request, and placing them inside a folder named after the owner’s username.
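The first two tasks can be illustrated together. The Python sketch below assumes a `<root>/<username>/<request_id>/` layout with hypothetical `input`, `output` and `download` subfolders, uses `uuid.uuid4().hex` as the unique alphanumeric identifier, and starts each command with `subprocess.Popen` so the caller does not wait for it to finish. The folder names and helper functions are illustrative, not the project’s actual code.

```python
import subprocess
import sys
import uuid
from pathlib import Path


def create_request_dirs(root: Path, username: str) -> tuple[str, Path]:
    """Create <root>/<username>/<request_id>/{input,output,download}."""
    request_id = uuid.uuid4().hex  # 32-character alphanumeric identifier
    request_dir = root / username / request_id
    for sub in ("input", "output", "download"):
        (request_dir / sub).mkdir(parents=True, exist_ok=False)
    return request_id, request_dir


def start_in_background(command: list[str], request_dir: Path) -> subprocess.Popen:
    """Launch a pipeline command in request_dir without waiting for it."""
    with open(request_dir / "pipeline.log", "wb") as log:
        # The child process keeps its own copy of the log file descriptor,
        # so closing the parent's handle here is safe.
        return subprocess.Popen(command, cwd=request_dir, stdout=log, stderr=subprocess.STDOUT)


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        procs = []
        for user in ("alice", "bob"):
            request_id, request_dir = create_request_dirs(Path(tmp), user)
            # Placeholder command standing in for the real pipeline script.
            cmd = [sys.executable, "-c", "import time; time.sleep(1)"]
            procs.append(start_in_background(cmd, request_dir))
        # Both requests run at the same time; wait for them before cleanup.
        print([p.wait() for p in procs])  # [0, 0]
```

Because every path is derived from the username and request identifier, two concurrent runs never write to the same folder.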

The last step towards concurrent processing was to configure Bundler to write its output files to a given output directory. To do this, we created symbolic links between the desired output directory and Bundler’s default working and output directories. In other words, Bundler runs in, and writes to, the directory we specify (one named after the request identifier, so that many processes can run concurrently), “believing” it is running in its default directory because the two are symbolically linked. As a result, it creates its default output folder in the request-specific directory instead of in the main Bundler folder.
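One way to realise the symbolic-link trick described above is sketched below in Python: selected entries of the Bundler installation are linked into the request directory, and Bundler is then launched with that directory as its working directory (for example with `subprocess.Popen(..., cwd=request_dir)`), so any folder it creates relative to its working directory lands there. The `link_bundler_into` helper and the list of entries to link are hypothetical assumptions, not the project’s actual configuration.

```python
import os
from pathlib import Path


def link_bundler_into(request_dir: Path, bundler_dir: Path, entries: list[str]) -> None:
    """Symlink selected entries of the Bundler installation into request_dir.

    `entries` (e.g. Bundler's scripts and binary folders) is an assumption
    about which files Bundler expects to find in its working directory.
    Running Bundler with cwd=request_dir then makes it create its default
    output folder inside request_dir rather than inside bundler_dir.
    """
    request_dir.mkdir(parents=True, exist_ok=True)
    for name in entries:
        link = request_dir / name
        # lexists also detects broken links, so repeated calls are harmless.
        if not os.path.lexists(link):
            link.symlink_to(bundler_dir / name)
```

Linking individual entries, rather than the whole Bundler folder, keeps each request’s outputs physically separate while sharing a single installation.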

We developed the website front end using CSS and JavaScript (with libraries such as Dropzone) and hosted it on an Azure virtual machine using PHP and Apache2. The website has a minimalist design: users are first presented with the project information and an introduction to our client’s research, and a second page lets them use the services. On the services page, after registering and logging in, users can upload their set of pictures, review them in our image gallery, select a set of algorithms to apply, and request that these processes be started on our back end. Users then receive an email confirming that their request has been accepted (including the date and time the algorithms began processing the images) and, once the pipeline finishes, a second email notifying them that their results are available. Each email includes a request identifier, which users can then use to download their results on our website. The whole process is presented visually as a sequence of steps, implicitly telling users what to do next.
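The two notification emails described above can be sketched with Python’s standard `email.message.EmailMessage`. The sender address, subject lines and wording are hypothetical, and the site itself was built with PHP, so this only illustrates the idea of embedding the request identifier in each message.

```python
from email.message import EmailMessage

SENDER = "noreply@example.org"  # hypothetical sender address


def build_notification(to_addr: str, request_id: str, finished: bool, timestamp: str) -> EmailMessage:
    """Compose a start or finish notification carrying the request identifier."""
    msg = EmailMessage()
    msg["From"] = SENDER
    msg["To"] = to_addr
    if finished:
        msg["Subject"] = f"Request {request_id} finished"
        body = (f"Your request {request_id} finished at {timestamp}.\n"
                "Enter this identifier on the website to download your results.\n")
    else:
        msg["Subject"] = f"Request {request_id} started"
        body = f"Your request {request_id} was accepted and processing began at {timestamp}.\n"
    msg.set_content(body)
    return msg
```

Assuming an SMTP server is reachable, a message built this way could be sent with `smtplib.SMTP(host).send_message(msg)`.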

Image gallery

We have created a solution that allows archivists and other professionals who cannot easily use complex algorithms and software packages to read and work with their historic material. The system leaves ample room for future growth, since different algorithms and pipelines can be added that reuse our website interface and back-end structure. We therefore believe our work can have a considerable impact on the way history is recovered from damaged parchments, and we trust future generations to improve the system we have devised and to extend its functionality and reach.

Our team

From left to right: Ionut, Rosetta, Sergio (me)
