Get Alerts as you approach your Azure resource quotas

Updated 16/06/2017 to also return Network usage

Each Azure subscription has a bunch of limits and quotas. Most of these are "soft" limits, meaning that they can be raised on your request--the limits exist to help with data centre capacity planning and to avoid "bill shock" if you accidentally deploy a lot more than you should have.

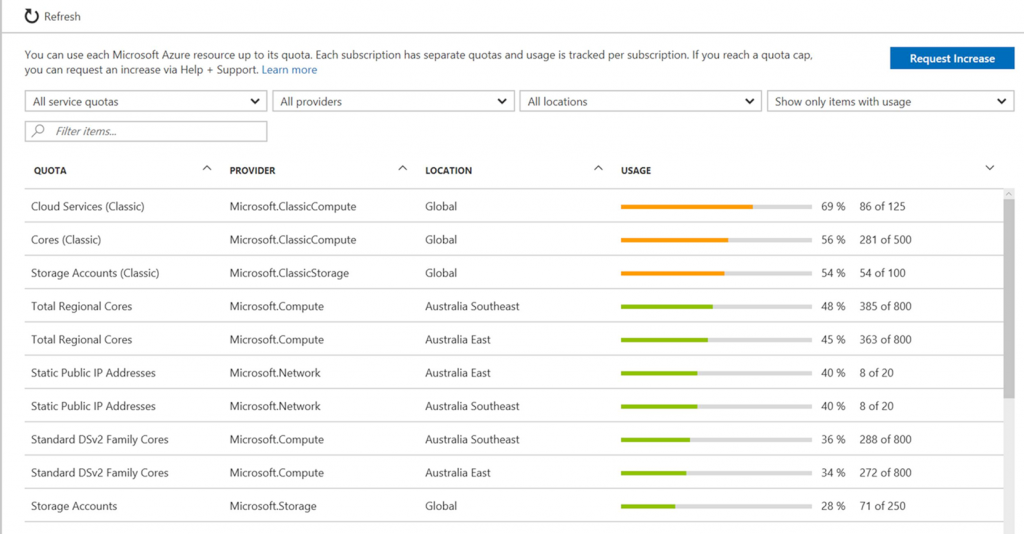

You can view your current quotas, usage against those quotas, and request quota increases by logging on to the Azure Portal, selecting your subscription and choosing "Usage and Quotas":

While you can check this page whenever you want, when you're working on a project you probably have other things to worry about besides checking how you're going against your quotas. But sometimes your deployments can grow quickly, and finding out you've hit a limit just as you need to deploy more resources can be a pain, as getting quota increases isn't instantaneous. Wouldn't it be great if you could find out when you're close to hitting your quota limits before it's too late?

Luckily this is pretty easy to do, at least for most of your quotas. Azure PowerShell includes the cmdlets Get-AzureRmVMUsage and Get-AzureRmStorageUsage, which report your current usage and quotas for a range of different resource types. Networking is a bit trickier as it's not included in the PowerShell commands, but the same info can be pulled directly out of the REST API. But you don't want to be responsible for calling these functions to check the quotas yourself; you want to be notified proactively. You could build your own notification engine, but a better option is to use something that's already good at alerting. There are a bunch of tools that you could use, but in this post I'll be using OMS Log Analytics.

Before you can use Log Analytics to send alerts based on your Azure quotas, you need to get data into its store. Azure is able to automatically send a bunch of data into OMS, but quota details are not included (at least not as of June 2017). Luckily there is a Log Collector API which lets you write your own data into the Log Analytics repository. I took the PowerShell sample from this page and modified it to call the Get-AzureRmVMUsage and Get-AzureRmStorageUsage cmdlets and the Network Usage REST API to populate the events. Finally I put this into Azure Automation, which lets me easily authenticate to Azure and configure the script to run on a schedule (e.g. once per day). The final script looks like this:

[powershell]
Param(
    [string]$omsWorkspaceId,
    [string]$omsSharedKey,
    [string[]]$locations
)

# To test outside of Azure Automation, replace this block with Login-AzureRmAccount
$connectionName = "AzureRunAsConnection"
try
{
    # Get the connection "AzureRunAsConnection"
    $servicePrincipalConnection = Get-AutomationConnection -Name $connectionName

    "Logging in to Azure..."
    Add-AzureRmAccount `
        -ServicePrincipal `
        -TenantId $servicePrincipalConnection.TenantId `
        -ApplicationId $servicePrincipalConnection.ApplicationId `
        -CertificateThumbprint $servicePrincipalConnection.CertificateThumbprint
}
catch {
    if (!$servicePrincipalConnection)
    {
        $ErrorMessage = "Connection $connectionName not found."
        throw $ErrorMessage
    } else {
        Write-Error -Message $_.Exception
        throw $_.Exception
    }
}

$LogType = "AzureQuota"
$json = ''

# Credit: s_lapointe https://gallery.technet.microsoft.com/scriptcenter/Easily-obtain-AccessToken-3ba6e593
function Get-AzureRmCachedAccessToken()
{
    $ErrorActionPreference = 'Stop'

    if(-not (Get-Module AzureRm.Profile)) {
        Import-Module AzureRm.Profile
    }
    $azureRmProfileModuleVersion = (Get-Module AzureRm.Profile).Version

    # refactoring performed in AzureRm.Profile v3.0 or later
    if($azureRmProfileModuleVersion.Major -ge 3) {
        $azureRmProfile = [Microsoft.Azure.Commands.Common.Authentication.Abstractions.AzureRmProfileProvider]::Instance.Profile
        if(-not $azureRmProfile.Accounts.Count) {
            Write-Error "Ensure you have logged in before calling this function."
        }
    } else {
        # AzureRm.Profile < v3.0
        $azureRmProfile = [Microsoft.WindowsAzure.Commands.Common.AzureRmProfileProvider]::Instance.Profile
        if(-not $azureRmProfile.Context.Account.Count) {
            Write-Error "Ensure you have logged in before calling this function."
        }
    }

    $currentAzureContext = Get-AzureRmContext
    $profileClient = New-Object Microsoft.Azure.Commands.ResourceManager.Common.RMProfileClient($azureRmProfile)
    Write-Debug ("Getting access token for tenant " + $currentAzureContext.Subscription.TenantId)
    $token = $profileClient.AcquireAccessToken($currentAzureContext.Subscription.TenantId)
    $token.AccessToken
}

# Network Usage not currently exposed through PowerShell, so need to call REST API
function Get-AzureRmNetworkUsage($location)
{
    $token = Get-AzureRmCachedAccessToken
    $authHeader = @{
        'Content-Type'='application/json'
        'Authorization'="Bearer $token"
    }
    $azureContext = Get-AzureRmContext
    $subscriptionId = $azureContext.Subscription.SubscriptionId

    $result = Invoke-RestMethod -Uri "https://management.azure.com/subscriptions/$subscriptionId/providers/Microsoft.Network/locations/$location/usages?api-version=2017-03-01" -Method Get -Headers $authHeader
    return $result.value
}

# Get VM quotas
foreach ($location in $locations)
{
    $vmQuotas = Get-AzureRmVMUsage -Location $location
    foreach($vmQuota in $vmQuotas)
    {
        # Usage is the fraction of the limit consumed (0 when no limit applies)
        $usage = 0
        if ($vmQuota.Limit -gt 0) { $usage = $vmQuota.CurrentValue / $vmQuota.Limit }
        $json += @"
{ "Name":"$($vmQuota.Name.LocalizedValue)", "Category":"Compute", "Location":"$location", "CurrentValue":$($vmQuota.CurrentValue), "Limit":$($vmQuota.Limit),"Usage":$usage },
"@
    }
}

# Get Network Quota
foreach ($location in $locations)
{
    $networkQuotas = Get-AzureRmNetworkUsage -location $location
    foreach ($networkQuota in $networkQuotas)
    {
        $usage = 0
        if ($networkQuota.limit -gt 0) { $usage = $networkQuota.currentValue / $networkQuota.limit }
        $json += @"
{ "Name":"$($networkQuota.name.localizedValue)", "Category":"Network", "Location":"$location", "CurrentValue":$($networkQuota.currentValue), "Limit":$($networkQuota.limit),"Usage":$usage },
"@
    }
}

# Get Storage Quota (subscription-wide, so Location is left empty)
$storageQuota = Get-AzureRmStorageUsage
$usage = 0
if ($storageQuota.Limit -gt 0) { $usage = $storageQuota.CurrentValue / $storageQuota.Limit }
# This is the last record, so it has no trailing comma
$json += @"
{ "Name":"$($storageQuota.LocalizedName)", "Location":"", "Category":"Storage", "CurrentValue":$($storageQuota.CurrentValue), "Limit":$($storageQuota.Limit),"Usage":$usage }
"@

# Wrap in an array
$json = "[$json]"

# Create the function to create the authorization signature
Function Build-Signature ($omsWorkspaceId, $omsSharedKey, $date, $contentLength, $method, $contentType, $resource)
{
    $xHeaders = "x-ms-date:" + $date
    $stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource

    $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)
    $keyBytes = [Convert]::FromBase64String($omsSharedKey)

    $sha256 = New-Object System.Security.Cryptography.HMACSHA256
    $sha256.Key = $keyBytes
    $calculatedHash = $sha256.ComputeHash($bytesToHash)
    $encodedHash = [Convert]::ToBase64String($calculatedHash)
    $authorization = 'SharedKey {0}:{1}' -f $omsWorkspaceId,$encodedHash
    return $authorization
}

# Create the function to create and post the request
Function Post-OMSData($omsWorkspaceId, $omsSharedKey, $body, $logType)
{
    $method = "POST"
    $contentType = "application/json"
    $resource = "/api/logs"
    $rfc1123date = [DateTime]::UtcNow.ToString("r")
    # $body is passed in as a byte array, so Length is the byte count used in the signature
    $contentLength = $body.Length
    $signature = Build-Signature `
        -omsWorkspaceId $omsWorkspaceId `
        -omsSharedKey $omsSharedKey `
        -date $rfc1123date `
        -contentLength $contentLength `
        -method $method `
        -contentType $contentType `
        -resource $resource
    $uri = "https://" + $omsWorkspaceId + ".ods.opinsights.azure.com" + $resource + "?api-version=2016-04-01"

    $headers = @{
        "Authorization" = $signature;
        "Log-Type" = $logType;
        "x-ms-date" = $rfc1123date;
    }

    $response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing
    return $response.StatusCode
}

# Submit the data to the API endpoint

Post-OMSData -omsWorkspaceId $omsWorkspaceId -omsSharedKey $omsSharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($json)) -logType $logType

[/powershell]
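
If you want to sanity-check the signing logic without posting anything, the steps inside Build-Signature can be run on their own. The sketch below assumes a made-up workspace ID and a dummy key (neither is real) and simply shows how the string to sign is laid out and how the Authorization header value is assembled from it:

```powershell
# Dummy values for illustration only; not a real workspace ID or shared key.
$workspaceId = '00000000-0000-0000-0000-000000000000'
$sharedKey   = [Convert]::ToBase64String([Text.Encoding]::UTF8.GetBytes('not-a-real-key'))

# A tiny sample payload, encoded to bytes the same way the script does before posting.
$body = [Text.Encoding]::UTF8.GetBytes('[{"Name":"Sample","Usage":0.5}]')
$date = [DateTime]::UtcNow.ToString('r')

# String to sign: method, body length in bytes, content type, x-ms-date header, resource path.
$stringToSign = "POST`n$($body.Length)`napplication/json`nx-ms-date:$date`n/api/logs"

# HMAC-SHA256 over the string, keyed with the Base64-decoded shared key.
$hmac = New-Object System.Security.Cryptography.HMACSHA256
$hmac.Key = [Convert]::FromBase64String($sharedKey)
$hash = $hmac.ComputeHash([Text.Encoding]::UTF8.GetBytes($stringToSign))
$hmac.Dispose()

$authorization = 'SharedKey {0}:{1}' -f $workspaceId, [Convert]::ToBase64String($hash)
$authorization
```

The date in the string to sign has to match the x-ms-date header sent with the request, which is why the script computes it once and passes it to both places.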

When you configure the script to run, make sure you populate your OMS Workspace ID and Shared Key (which you can get by logging into your OMS portal and then going to Settings > Connected Sources) and the list of Azure locations that you're interested in (formatted as a JSON array, e.g. ["australiaeast", "australiasoutheast"] ).
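
The script builds its JSON payload by concatenating strings, which works but is easy to break if a name ever contains a quote. As an alternative, here is a minimal sketch that builds records with the same fields as objects and lets ConvertTo-Json do the escaping. New-QuotaRecord is a hypothetical helper (it is not part of the script above), and the sample values are made up rather than real cmdlet output:

```powershell
# Hypothetical helper: builds one quota record with the same fields the script posts.
function New-QuotaRecord($name, $category, $location, $currentValue, $limit)
{
    # Usage is the fraction of the limit consumed; 0 when there is no limit.
    $usage = 0
    if ($limit -gt 0) { $usage = $currentValue / $limit }

    [pscustomobject]@{
        Name         = $name
        Category     = $category
        Location     = $location
        CurrentValue = $currentValue
        Limit        = $limit
        Usage        = $usage
    }
}

# Made-up sample values standing in for the cmdlet and REST API results.
$records = @(
    New-QuotaRecord 'Total Regional Cores' 'Compute' 'australiaeast' 16 20
    New-QuotaRecord 'Virtual Networks' 'Network' 'australiaeast' 3 50
    New-QuotaRecord 'Storage Accounts' 'Storage' '' 0 0
)

# -InputObject keeps the collection as a single JSON array.
$json = ConvertTo-Json -InputObject $records
$json
```

Collecting objects first also removes the need to track which record is last in order to avoid a trailing comma.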

Once you have a few points of data in OMS, you can use the Log Analytics search and alerting capabilities to query, visualise or alert on the Azure quota data. Note that as per the Log Collector API documentation, OMS will suffix both your event type and fields. So your events will have a type of AzureQuota_CL and the fields will be suffixed with _s (for strings) and _d (for numbers). The following query will give you your raw events:

* Type=AzureQuota_CL

Then you can do some fancier queries to get more insights. For example, to graph your % utilisation in a specific region:

* Type=AzureQuota_CL Location_s=australiaeast | measure avg(Usage_d) by Name_s interval 1MINUTE

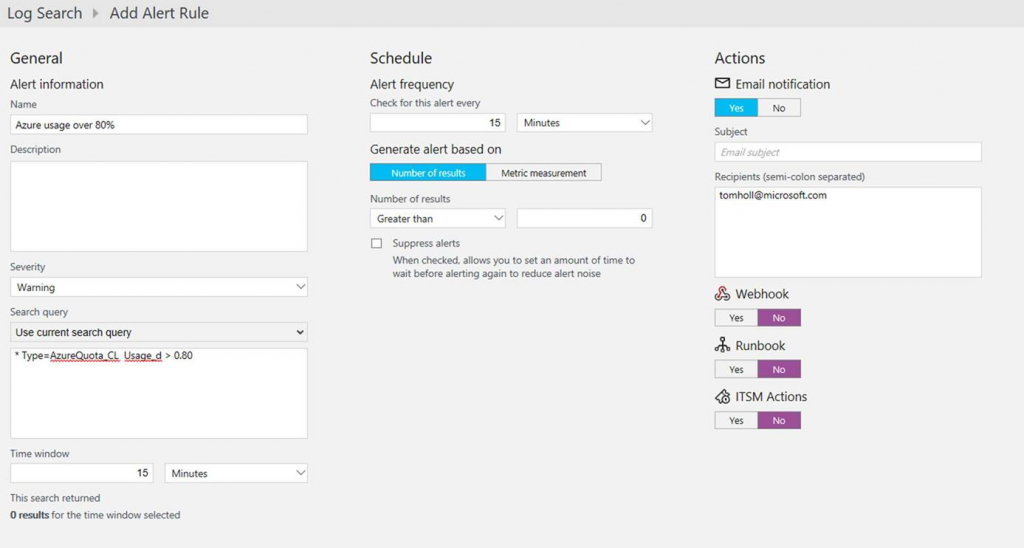

But remember, our real goal was to get notified when your usage starts getting close to your limit. You can find any instances above a threshold (say, 80%) with the following query:

* Type=AzureQuota_CL Usage_d > 0.80

With this, you can use the OMS Alert capabilities to send an email, invoke a WebHook, call a runbook or call an ITSM system.
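
If you'd like to see which quotas would trip the alert before any data reaches OMS, the same 80% check can be applied locally. This is only a sketch: the records below are made-up sample values in the shape the script posts, and $threshold is an illustrative name rather than something the script defines:

```powershell
# Made-up sample records in the shape the script posts.
$records = @(
    [pscustomobject]@{ Name = 'Total Regional Cores'; Location = 'australiaeast'; CurrentValue = 18; Limit = 20 }
    [pscustomobject]@{ Name = 'Virtual Networks'; Location = 'australiaeast'; CurrentValue = 3; Limit = 50 }
    [pscustomobject]@{ Name = 'Public IP Addresses'; Location = 'australiasoutheast'; CurrentValue = 49; Limit = 60 }
)

# Same threshold as the alert query (80% of the limit).
$threshold = 0.80

# Keep only records with a real limit whose usage fraction exceeds the threshold.
$nearLimit = @($records | Where-Object {
    $_.Limit -gt 0 -and ($_.CurrentValue / $_.Limit) -gt $threshold
})

# Print one summary line per quota that is close to its limit.
$nearLimit | ForEach-Object {
    '{0} in {1}: {2} of {3} ({4:P0})' -f $_.Name, $_.Location, $_.CurrentValue, $_.Limit, ($_.CurrentValue / $_.Limit)
}
```

With these sample values, the cores and public IP quotas are over the threshold and the virtual networks quota is not.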

So with a few lines of PowerShell and Azure Automation used as glue between the Azure APIs and OMS Log Analytics, you'll never be surprised by hitting a quota again. Happy alerting!

Comments

- Anonymous

June 15, 2017

When dealing with multiple subscriptions, it would be nice to add a subscription name property using "SubscriptionName":"$($(Get-AzureRMContext).Subscription.SubscriptionName)" to JSON object. Also locations can be auto populated using $locations = (Get-AzureRMLocation | Select-Object Location).Location

- Anonymous

June 18, 2017

Thanks Mohit. Adding the subscription is a good idea. You don't necessarily care about quotas in all regions, so specifying the locations manually isn't necessarily a bad idea.

- Anonymous

June 29, 2017

Hi Tom, can you please share any command or script that shows who created the VM and when, location too in Azure? When I try to check the activity log, those logs only existed for 3 months in custom.

- Anonymous

June 29, 2017

Hi Surya - yes I've actually been working on something that does exactly this - see https://github.com/tomhollander/AzureSetCreatedTags. This will set tags on the resource specifying who created the resource and when, eliminating the need to go through the activity log. Also note that you can retain your activity log for over 3 months by exporting it to a storage account.
