Using SSIS to get data out of Oracle: A big surprise!

Since late last year, Microsoft has made the Attunity connectors to Oracle databases available to Enterprise Edition customers. We all recognized right away that these connectors were faster than the pre-existing options from either Microsoft or Oracle, when moving data into or out of an Oracle database. It wasn’t immediately obvious what speeds we could expect from the connectors, so I did some experimenting to see. This posting summarizes some findings from those experiments done earlier this year, but rather than report all the details I will then zero in on the key lessons and one big surprise that came out of the work.

Before getting in to my findings, let me give a little anecdote heard from a colleague: There is at least one SSIS customer that now uses SSIS and the Attunity connectors to move data from an Oracle database to an Oracle database, because SSIS with Attunity outperforms any of the Oracle options! While I can’t corroborate that, the information to follow is from my own measurements.

To do this work, I used two machines each with 24 cores (64-bit, 2.4 GHz), one for SSIS and one for Oracle. The machines were practically an embarrassment of riches for this simple benchmark. The SSIS machine had flat files to read (when loading data into Oracle) or write (when extracting data from Oracle). The SSIS packages were super simple, just a flat file source and an Oracle destination, or an Oracle source and a flat file destination. The data was 75 million rows, 133 bytes per row, of the LINEITEM table from the TPC-H benchmark, as generated by the DBGEN utility.

Some basic findings (remember, you mileage will vary):

- Putting data into an Oracle database using the Attunity connectors clocked 30,000 rows per second, on the order of 20 times faster than using the OLE DB connectors to Oracle.

- Extracting data from Oracle moved 36,000 rows per second, about 20% faster than using the OLE DB connectors.

- The above measurements were taken using “mixed” data types: Numbers were put in NUMBER fields, dates were put in DATE fields, etc. A funny thing happened though when all the data was put in string fields (VARCHAR2 use used for everything). Now we could hit 42,000 rows per second loading data into Oracle, and 76,000 rows per second extracting from Oracle!

- The Fast Load option is supposed get higher performance through the use of the DirectPath API. In my experiments, I didn’t see a consistent advantage of Fast Load over non-Fast Load. The thing that Fast Load did seem to do was shift more of the CPU time from the Oracle process to the SSIS process. This could mean that if you have multiple concurrent SSIS packages sending data to Oracle, using Fast Load might let Oracle receive the data faster. Given my experience with Fast Load, I can only recommend that you check its performance in your own situation. Note: I’ve been told that Fast Load will be fixed in a maintenance release later this calendar year. So while I’m not promising anything, it’s likely that this will change.

- The default batch size for the Oracle destination connector is 100 rows. Setting the batch size to 10,000 rows gave a boost of 10% to 50%, depending on other elements of the configuration. (When using Fast Load, you specify the buffer size instead of the row count. So estimate the buffer size needed to hold the number of rows you want, and use that number.)

- When using the Oracle source, setting batch size to 10,000 rows gave a boost of around 10%, depending on other elements of the configuration.

- I wanted to know how important it was for SSIS to be on a separate machine from the Oracle database. There was a good network connecting the source and destination servers, and also plenty of CPUs and memory on the servers. What I saw was a negligible difference between the case where SSIS and Oracle were on the same server and the case where SSIS and Oracle were on separate systems. My recommendation: Look at what resource is the most loaded in your environment, and configure to lighten the load on that resource.

The idea that performance with string data would be so different from performance with natural data types was a big surprise. The difference was especially pronounced when extracting data from Oracle. Now let’s face it, we would prefer to see data extracted from Oracle and placed in SQL Server databases! Given the big speed disparity and the fact that most real-world data needs to be in natural data types, I wondered if the same thing would happen if data was cast to string types in the query that SSIS issues against Oracle. So instead of having SSIS simply read the table, I gave it this query to run:

select

TO_CHAR(L_SHIPDATE),

TO_CHAR(L_ORDERKEY),

TO_CHAR(L_DISCOUNT),

TO_CHAR(L_EXTENDEDPRICE),

TO_CHAR(L_SUPPKEY),

TO_CHAR(L_QUANTITY),

L_RETURNFLAG,

TO_CHAR(L_PARTKEY),

L_LINESTATUS,

TO_CHAR(L_TAX),

TO_CHAR(L_COMMITDATE),

TO_CHAR(L_RECEIPTDATE),

L_SHIPMODE,

TO_CHAR(L_LINENUMBER),

L_SHIPINSTRUCT,

L_COMMENT

from ATTUSER.LINEITEM

Then before inserting the data into SQL Server using the SQL Server destination, I put in a data conversion task to get all the data into the correct types.

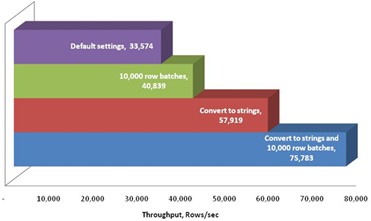

At this point you must be thinking, “Surely converting the data twice can’t be the fastest way!” Well, here are the results: The first run below read the mixed data types using the Attunity Oracle source with default settings, converted to SQL Server types, then wrote to the SQL Server destination. The second run was like the first, with the addition of setting the batch size larger. The third run was like the first, but on reading from Oracle all the columns were converted to text as discussed above. The last test was like the third, with the addition of setting the batch size larger. Using the string conversion and larger batches, the run was over two times faster than the obvious out-of-the-box configuration.

Overall, the Attunity connectors for Oracle really were fast, as expected. In doing this work a few lessons turned up that hopefully help you get optimal performance.

- Len Wyatt

Comments

Anonymous

August 17, 2009

Can we use Attunity to replace our linked server?Anonymous

August 17, 2009

The Attunity connectors are for use with SSIS. If you are using linked servers to Oracle and then using that as an SSIS source or destination, it might very well make sense to replace that with the Attunity connector to connect your SSIS data flow directly to your Oracle database.Anonymous

August 20, 2009

Is the Oracle Attunity connector available for SQL Server enterprise customers the CDC (continuous data change) version (i.e. the one that hooks into the logs)?Anonymous

August 20, 2009

The connectors you can download from Microsoft at http://www.microsoft.com/downloads/details.aspx?FamilyID=d9cb21fe-32e9-4d34-a381-6f9231d84f1e&DisplayLang=en do not contain the CDC functionality. Attunity Oracle-CDC for SSIS is available from Attunity. Information is available at http://www.attunity.com/oracle_cdc_for_ssis.Anonymous

August 22, 2009

One drawback I saw with this is that you can not use an expression for the SQL command on the object. I have a package that I dynamically build a SQL statement to only pull in rows with certain criteria. I suppose I could pull in the entire table and use some of the filtering data flow objects to only take the records i need, but that would somewhat defeat the purpose of limiting the selected rows. Anyone have the same experience with this tool? Hopefully it can be built into a newer version, because I would love to use it. SteveAnonymous

August 25, 2009

Thanks for Input , we will look into this request for the update . Ramakrishnan PM SSIS TeamAnonymous

September 05, 2010

A year later, Attunity still does not support SQL variable command. Transfer 10 M records from Oracle to SQL using Attunity takes about 5 mins. Other OLE providers takes over an hour.Anonymous

September 21, 2010

This is true and also note that you cannot use variables in dynamic sql like you can in ado.net. However you CAN use an expression in your oledb source query - just build the sql in a variable (say using vbscript in previous command task) and then set the property SqlCommand for your Oracle source in the expressions editor to that variable. Works like a charm albeit a bit of work to do something you'd think would be simpler.Anonymous

September 21, 2010

Set variable to the sql and then use in expression for the oledb sourceAnonymous

October 18, 2010

thanks for providing this info ..it helped me Regards nwebsolution W:www.nwebsoluiton.comAnonymous

June 03, 2011

Does the Attunity support Windows Server 2008 R2 (SP1) environment?Anonymous

February 22, 2012

The comment has been removedAnonymous

February 23, 2012

Hi Bjorn - make sure you've installed the 32bit provider on the machine that is running SQL Agent. If you're sure you've installed the provider, restart the SSIS Service and try again.Anonymous

July 09, 2012

How can we perform testing on SQL data base while everything data will be reached r notAnonymous

February 01, 2013

The comment has been removedAnonymous

January 23, 2014

There is an advantage of using variable via expression to derive SQL statement - that is you can see the actual SQL issued via the value change in the variable if you use BiXpress audit framework - that's an incredible debugging capability to have when needed. I like the use of parameter substitution for source sql and use it because it's easy but since BiXpress doesn't yet monitor package parameter values, I lose the simple exposure that I get with the use in combination of variable/expression derived SQL and BiXpressAnonymous

March 11, 2015

The comment has been removed