BigData! Hype or Reality?

I have recently had the opportunity to present on the topic of BigData at several forums, including the DW 2.0 Asia-Pac Summit and the Microsoft Big Picture event. At both events there were sceptics of BigData, so I thought I would write this blog post.

I must partly agree with some of the audience members that “BigData” is a buzzword, or at least that is how it is often portrayed. It can be a convenient way of getting bigger hardware budgets signed off, especially for storage. However, if we take some time to understand the origins of the term, it does make sense. BigData came about for organisations working with amounts of data beyond the realm of typical relational data warehouses (beyond terabytes), such as the Internet search engines Yahoo, Google and Bing (many PB across 40000 compute nodes). They not only have to store the index of the Internet, but also the activity/log information that users produce when using their services. Petabytes of information have to be stored and queried quickly, so the relational paradigm, with its strict ACID guarantees, doesn't suit; these systems are instead designed around the trade-offs described by the CAP theorem. I won't go into detail on either here, but a quick Wikipedia search will cover them.

One of the popular implementations of this non-relational, or noSQL (not only SQL), movement is Hadoop, an open source project developed largely at Yahoo and based on papers Google published describing its distributed file system and MapReduce. Its foundations are a storage layer, the highly distributed file system HDFS, and a querying/processing methodology known as MapReduce. These noSQL implementations were needed because of the following characteristics of the data:

- Volume – lots of data, beyond terabytes

- Variety – unstructured data: not just rows and columns, but text, log files, videos, images, audio, etc.

- Velocity – speed of data: data generated very rapidly by sources such as factory floor sensors, logs and other machine-generated data

- Variability – a row of data does not necessarily have the same structure as the previous one
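The map, shuffle and reduce steps behind MapReduce can be sketched in a single Python process. This is a minimal illustration, not Hadoop's API: it counts hits per URL in a few made-up web log lines, and the log format, the sample data and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `run_job`) are all hypothetical. Hadoop runs the same three steps distributed across many nodes, reading its input from HDFS, which this sketch does not attempt.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical web log lines in a made-up format: "<timestamp> <client_ip> <url>".
LOG_LINES = [
    "2011-12-01T10:00:01 10.0.0.1 /search",
    "2011-12-01T10:00:02 10.0.0.2 /images",
    "2011-12-01T10:00:03 10.0.0.1 /search",
    "malformed line",
    "2011-12-01T10:00:05 10.0.0.3 /search",
]


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit (url, 1) for each well-formed log line; skip anything else."""
    parts = line.split()
    if len(parts) == 3:
        yield parts[2], 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group emitted values by key (Hadoop does this across nodes)."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: aggregate all values collected for one key."""
    return key, sum(values)


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    """Chain map -> shuffle -> reduce over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    print(run_job(LOG_LINES))  # {'/search': 3, '/images': 1}
```

Because each map call looks at a single line and each reduce call looks at a single key, both steps can run in parallel on different machines, which is what lets the pattern scale out. The deliberately malformed line also hints at the variability point: records don't all share one shape, so the mapper has to cope with that.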

I have also recorded a video covering some of the points above, if you would prefer to listen:

Big Data

Hopefully that gives you some insight into BigData. The next question that usually arises is: what is the use case in my organisation? BigData clearly suits Internet startups that generate large web logs or use lots of media (audio and video files), but what about a bricks & mortar organisation? In response I give many examples. A simple one is the ability to harvest the vast amount of social media data that may exist about a company's brand, products, services, etc., and mine it for insights. I also refer to a survey conducted by Avanade on the sources of data within organisations. Below is the graph for your reference:

From this you can see that a lot of data comes from unstructured sources such as email, documents and spreadsheets, as opposed to line-of-business application databases and other structured sources. I illustrate this with my own day-to-day experience as a member of the sales team at Microsoft. If I were to analyse the lifecycle of a sales opportunity, it would be wrong to take data only from the CRM/ERP system, because that only captures the opportunity from the point at which I entered it. Before then, there would have been many emails exchanged between me, the account team and the customer, as well as spreadsheets with models reflecting the opportunity size, etc. So if I analysed only the structured data, I would miss the beginnings of the opportunity entirely. In some cases this might be acceptable, as that data could be noise, but having access to it could reveal, for example, a best practice my account team uses that could be replicated across the organisation. Contracts are another example: most are PDF or Word documents, and being able to extract key metrics from them could help you manage them better.
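The sales-opportunity argument can be illustrated with a small, hypothetical Python sketch: it works out when an opportunity actually started, first from the CRM record alone and then after also considering related emails. The record layouts, the field names, the sample addresses and the rule for linking an email to an opportunity (a naive match on the customer's email domain) are all assumptions made for illustration, not how any particular CRM or mail system works.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class CrmOpportunity:
    # Hypothetical structured record, as entered into a CRM system.
    opportunity_id: str
    customer_domain: str
    created_on: date


@dataclass
class Email:
    # Hypothetical unstructured source, reduced to the fields this sketch needs.
    sent_on: date
    participants: List[str]


def first_activity(opp: CrmOpportunity, emails: List[Email]) -> date:
    """Earliest known activity: the CRM date or any related email, whichever is first.

    An email counts as related if any participant's address ends with
    "@" + the customer's domain (a deliberately naive matching rule).
    """
    suffix = "@" + opp.customer_domain
    related = [
        e.sent_on
        for e in emails
        if any(p.lower().endswith(suffix) for p in e.participants)
    ]
    return min([opp.created_on, *related])


if __name__ == "__main__":
    opp = CrmOpportunity("OPP-1", "contoso.com", date(2011, 11, 1))
    emails = [
        Email(date(2011, 9, 12), ["seller@example.com", "cio@contoso.com"]),
        Email(date(2011, 10, 3), ["seller@example.com", "am@example.com"]),
    ]
    start = first_activity(opp, emails)
    print("CRM only:", opp.created_on)  # CRM only: 2011-11-01
    print("With emails:", start)  # With emails: 2011-09-12
    print("Days missed:", (opp.created_on - start).days)  # Days missed: 50
```

The internal-only email is ignored because no participant belongs to the customer's domain; in practice, linking unstructured data to structured records reliably is the hard part, and a domain match is only a stand-in for it.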

Below is a table of other scenarios and uses of BigData across different industries/verticals. Hopefully you find something that resonates with your situation.

| Industry/Vertical | Scenario |
|---|---|
| Financial Services | |
| Web & E-tailing | |
| Retail | |
| Telecommunications | |
| Government | |
| General (cross vertical) | |

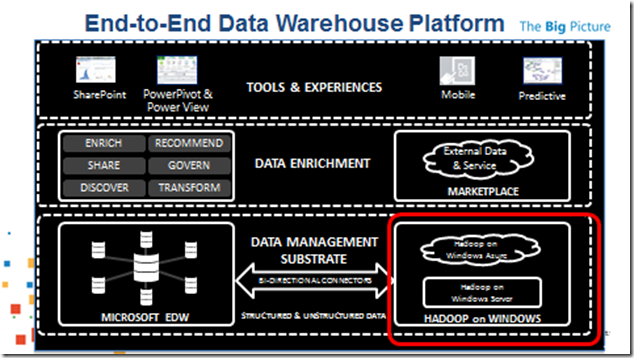

So what is Microsoft doing in the BigData space? I am glad you asked: we have been doing quite a lot, and we made some major announcements at the 2011 SQL PASS Summit. These were as follows:

- Microsoft will ship an Apache Hadoop™ based distribution for Windows Server and Windows Azure to help accelerate its adoption in the Enterprise.

- Broader access to Hadoop for end users, IT professionals and developers

- Enterprise-ready Hadoop distribution with greater security, performance and ease of management

- Breakthrough insights with the use of familiar tools such as PowerPivot for Excel, SSAS and SSRS

- Two Hadoop connectors for SQL Server and Parallel Data Warehouse now available – https://www.microsoft.com/download/en/details.aspx?id=27584

- Excel Hadoop connector via Hive - https://social.technet.microsoft.com/wiki/contents/articles/how-to-connect-excel-to-hadoop-on-azure-via-hiveodbc.aspx

What does this all mean? Basically, we will continue to give end users access to their data in familiar tools such as Excel, Power View and SharePoint, so they can gain insight from it, whether it resides in the structured world of relational databases such as SQL Server or is unstructured data stored in Hadoop. Below is a high level architecture:

To get a better understanding of our strategy and roadmap, I would also like to refer you to the following Channel 9 video:

To see the Windows Azure Hadoop CTP implementation in action refer to:

- Blog post by Lynn Langit on her experience: https://lynnlangit.wordpress.com/2011/12/15/trying-out-hadoop-on-azure/

- Video: https://t.co/3qnnaqPQ

Lastly, I think this is an exciting time to be involved with data, with technologies like Hadoop and its implementation in Azure making it very easy to store and then consume BigData and come up with new insights and analytics. Below is a cartoon from geek&poke about how to leverage the NoSQL boom:
