A walkthrough of Loan Classification using SQL Server 2016 R Services

Joseph Sirosh, Data Group Corporate Vice President, had shown during his keynote session how customers are able to achieve a scale up of 1 million predictions/sec using SQL Server 2016 R Services. We will get down to the nuts and bolts of how you can emulate a similar setup using Lending Club data using SQL Server 2016. My colleague, Ram, has documented the use Lending Club data and SQL Server 2016 R Services to perform Loan Classification and basic concepts involved in using R Services. I am going to talk about how to create a pipeline which can help ingest the data from the flat files, re-run the predictions for the incremental data. I will be using the Azure Data Science VM comes pre-installed with SQL Server 2016 Developer edition and can be used readily for our scoring experiment.

At Ignite, we had Jack Henry & Associates on the stage with Joseph. They provide more than 300 products and services to over 10,000 credit unions and enable them to process financial transactions plus automate their services. Using SQL Server as a Scoring Engine, enabled their vision of building an intelligent enterprise data warehouse which would help their customers increase their productivity. They have been working with Microsoft to leverage SQL Server with built-in R capability to build intelligence into their current data warehousing service portfolio. Such an intelligent data warehouse helps credit unions and financial services become more flexible and react to situations in a data-driven manner. We see opportunities in applying such models within the database to customer churn predictions, predicting loan portfolio changes and a host of other scenarios. Several banking and insurance companies rely on very complex architectures to do predictive analytics and scoring today. Using the architecture outlined in this blog, businesses can do this in a dramatically simpler and faster manner.

The scripts used for creating this sample is available on theMicrosoft SQL Server Samples GitHubrepository. In this blog post, I will make references to the specific script files which have the sample code to achieve what I talking about in this walkthrough.

Data Preparation

The first thing that you will need to do is download the Lending Club loan data in CSV format. After that you can create a database and the associated objects using the T-SQL [1 - Create Database.sql] script. This script creates the database, adds a SCHEMA ONLY in-memory table which will act as the staging table along with all other objects required to get this sample up and running.

One of the roadblocks that you will have is the lines at beginning and end of the CSV files in the Lending Club data which will throw errors if you put them through an Import/Export package without any error handling. With some amount of PowerShell automation, you can work out a way to ignore the rows which do not have valid data or are not part of the data. The PowerShell script [2 – ImportCSVData.ps1] on the GitHub samples will provide some respite from the import woes. Once you import all the data, you would have processes over a million valid records. I won’t be spending too much time on automating the data preparation pipeline as the source and destination will vary across systems and businesses. The above section is just an example to get you setup with the Lending Club data.

Creating the Data pipeline

The first step was ingesting the data into a staging table which can be done using multiple different ways through PowerShell, scheduled SQL Agent jobs using PowerShell or T-SQL scripts or SSIS Packages or a combination of all of these. The next step is to process the data in the staging table and import the data into table which will store the final data. This is done using a stored procedure dbo.PerformETL available in the GitHub sample in the 1 - Create Database.sql script.

Once the data is imported, we found that it was beneficial to have a non-clustered columnstore index defined on the columns that would be used as the attributes in the scoring. This can be found in the 3 - Create Columnstore Index.sql script. The script also populates one of the columns. Ram had explained how to perform feature selection in his previous blog post which you can reference here. I will not repeat the same concepts in this post.

Resource Governor Configuration

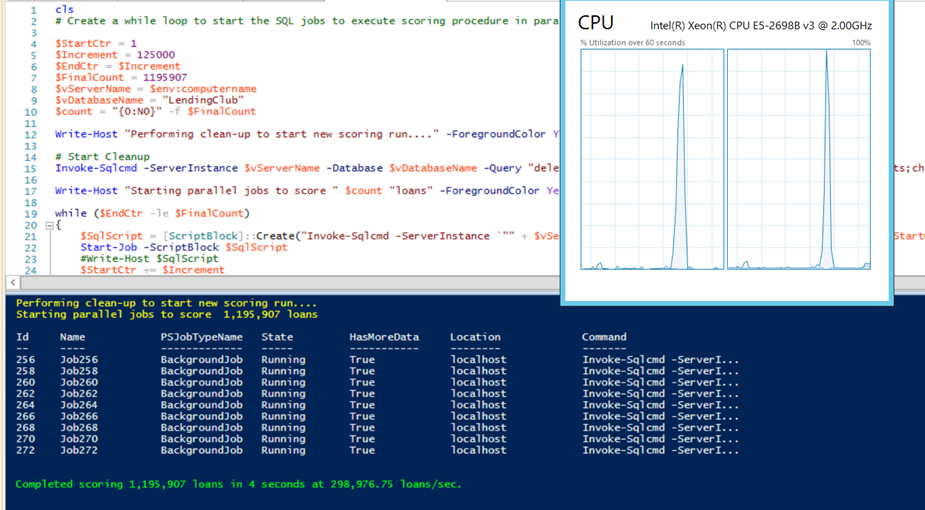

If you are dealing with a high number of parallel threads on multi-node NUMA machine, then you will need to use external resource pools to ensure that the threads are being equally distributed across the NUMA nodes or if you need to allocate more memory to the resource pools. You can use resource pools to manage external script processes. In some builds, the maximum memory that could be allocated to the R processes was 20%. Therefore, if the server had 32GB of RAM, the R executables (RTerm.exe and BxlServer.exe) could use a maximum 6.4GB in a single request. For my Azure Data Science VM, I am using the resource governor configuration shown below (available in 5 - Resource Governor Config.sql). You will see from the screenshot below that both NUMA nodes are pegged at nearly 100% CPU during the parallel scoring process.

create external resource pool "lcerp1" with (affinity numanode = (0));

create external resource pool "lcerp2" with (affinity numanode = (1));

create resource pool "lcrp1" with (affinity numanode = (0));

create resource pool "lcrp2" with (affinity numanode = (1));

create workload group "rg0" using "lcrp1", external "lcerp1";

create workload group "rg1" using "lcrp2", external "lcerp2";

USE [master]

GO

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE function [dbo].[assign_external_resource_pool]()

returns sysname

with schemabinding

as

begin

return concat('rg', @@SPID%2);

end;

GO

Setting up the loan scoring automation

You will now need a scoring model which can be created using 75% of the training dataset. An example of using 75% of the dataset as a training dataset is shown below.

CREATE TABLE [dbo].[models](

[model] [varbinary](max) NOT NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY];

GO

INSERT INTO [dbo].[models]

EXEC sp_execute_external_script

@language = N'R',

@script = N'

randomForestObj <- rxDForest(is_bad ~ revol_util + int_rate + mths_since_last_record + annual_inc_joint + dti_joint + total_rec_prncp + all_util, InputDataSet)

model <- data.frame(payload = as.raw(serialize(randomForestObj, connection=NULL)))

',

@input_data_1 = N'SELECT revol_util, int_rate, mths_since_last_record, annual_inc_joint, dti_joint, total_rec_prncp, all_util,is_bad FROM [dbo].[LoanStats] WHERE (ABS(CAST((BINARY_CHECKSUM(id, NEWID())) as int)) % 100) < 75',

@output_data_1_name = N'model';

You can import the model from a different location i.e. your development environment. For the demo purpose, we are keeping things simple. The model creation script is available in 4 - Create Model.sql.

Once you have resource governor configured, you can create a PowerShell script which will spawn parallel threads to call the loan scoring stored procedures using an increment specified by you. The 6 - Score Loans.ps1 and 7 - WhatIf.ps1 PowerShell scripts available in the repository on GitHub spawn parallel threads using a while loop to executing the loan scoring stored procedure. The loan scoring stored procedure fetches data using ranges provided by the PowerShell script using the non-clustered columnstore index. Then it uses sp_execute_external_script to score the loans using the model which was created earlier. The scoring results are then stored in an in-memory schema-only table to minimize the transaction logging overhead associated with multiple parallel threads writing into the same database at a very high rate. Since the loan scoring rate is quite high, you can afford to store the results in an in-memory table provided you have sufficient RAM available.

The ScoreLoans stored procedure and the PowerShell script calling this stored procedure is available below.

-- Stored procedure for scoring loans for the base predictions

CREATE PROCEDURE [dbo].[ScoreLoans]

@start bigint,

@end bigint

AS

BEGIN

-- Declare the variables to get the input data and the scoring model

DECLARE @inquery nvarchar(max) = N'SELECT id,revol_util, int_rate, mths_since_last_record, annual_inc_joint, dti_joint, total_rec_prncp, all_util, is_bad FROM [dbo].[LoanStats] where [id] >= ' + CAST(@start as varchar(255)) + 'and [id] <= ' + CAST(@end as varchar(255));

DECLARE @model varbinary(max) = (SELECT TOP 1 [model] FROM [dbo].[models])

-- Log beginning of processing time

INSERT INTO [dbo].[RunTimeStats] VALUES (@@SPID, GETDATE(),'Start')

-- Score the loans and store them in a table

INSERT INTO [dbo].[LoanStatsPredictions]

EXEC sp_execute_external_script

@language = N'R',

@script = N'

rfModel <- unserialize(as.raw(model));

OutputDataSet<-rxPredict(rfModel, data = InputDataSet, extraVarsToWrite = c("id"))

',

@input_data_1 = @inquery,

@params = N'@model varbinary(max)',

@model = @model

-- Log end of processing time

INSERT INTO [dbo].[RunTimeStats] VALUES (@@SPID, GETDATE(),'End')

END

GO

# Create a while loop to start the SQL jobs to execute scoring procedure in parallel

$StartCtr = 1

$Increment = 250000

$EndCtr = $Increment

$FinalCount = 1195907

$vServerName = $env:computername

$vDatabaseName = "LendingClub"

$count = "{0:N0}" -f $FinalCount

Write-Host "Performing clean-up to start new scoring run...." -ForegroundColor Yellow

# Start Cleanup

Invoke-Sqlcmd -ServerInstance $vServerName -Database $vDatabaseName -Query "delete from [LoanStatsPredictions];delete from Runtimestats;checkpoint;"

Write-Host "Starting parallel jobs to score " $count "loans" -ForegroundColor Yellow

while ($EndCtr -le $FinalCount)

{

$SqlScript = [ScriptBlock]::Create("Invoke-Sqlcmd -ServerInstance `"" + $vServerName + "`" -Query `"EXEC [dbo].[ScoreLoans] " + $StartCtr + "," + $EndCtr + "`" -Database `"$vDatabaseName`"")

Start-Job -ScriptBlock $SqlScript

$StartCtr += $Increment

$EndCtr += $Increment

}

# Wait till jobs complete

while (Get-Job -State Running)

{

Start-Sleep 1

}

# Find out duration

$duration = Invoke-Sqlcmd -ServerInstance $vServerName -Database $vDatabaseName -Query "select DATEDIFF(s,MIN (Runtime), MAX(Runtime)) as RuntimeSeconds from dbo.RuntimeStats;"

Write-Host "`n"

$rate = "{0:N2}" -f ($FinalCount/$duration.RuntimeSeconds)

Write-Host "Completed scoring" $count "loans in" $duration.RuntimeSeconds "seconds at" $rate "loans/sec." -ForegroundColor Green

# Remove Jobs

Get-Job | Remove-Job

The WhatIf scenario is actually a very common scenario for a business user for modeling various scenarios and checking what the possible outcome will be. In this sample, the user is allowed to increase the interest rate of all the loans and check what the charge-off probability would be. Such WhatIf scenarios can be made to handle complex business scenarios and provides business users the capability to run various models using the power of SQL Server and R-Services and make informed decisions about their business. These type of implementations can turn the data in your data warehouse into a gold mine of business insights waiting to harnessed!

The above sample is one way of setting up a parallel workload for scoring ingested loans from a table using columnstore indexes to speed up data fetch/aggregation and using parallel processing to get a high throughput. On a machine with 32 logical processors with two NUMA nodes, I was able to get a throughput of ~298K loans/sec with only 9 parallel processes.The screenshot above shows a sample output.

REFERENCES

Lending Club Statistics Machine Learning for Predicting Bad Loans Variable Importance Plot and Variable Selection Machine Learning Templates with SQL Server 2016 R Services SQL Server R Services Tutorials Provision the Microsoft Data Science Virtual Machine sp_execute_external_script (Transact-SQL) Explore and Visualize the Data (In-Database Advanced Analytics Tutorial) Selecting Rows Randomly from a Large Table Receiver operating characteristic Area under the curve The Area Under an ROC Curve

Amit Banerjee (@banerjeeamit)

Comments

- Anonymous

January 11, 2017

Very nicely structured and presented example. It is very much appreciated.