Dissecting SQL Server physical reads with Extended Events and Process monitor

In this blog post I’ll (re)introduce you to a really neat tool to use alongside of SQL Server and use this tool to show you how SQL Server is handling IO under the covers. We will view the IOs as they occur, and then tie the IO back to the pages that we pull into the buffer pool.

First, I’ll create a table named NumbersTable that I’ll use for the subsequent demos, which I’ll create in a database named NumbersDB. I’ll then load 500K worth of records into it.

CREATE TABLE NumbersTable

(

NumberValue BIGINT NOT NULL

)

GO

INSERT INTO NumbersTable (NumberValue)

SELECT TOP 500000 NumberValue

FROM

( SELECT NumberValue = row_number() over(order by newid() asc)

FROM master..spt_values a

CROSS APPLY master..spt_values b

WHERE a.type = 'P' AND a.number <= 710 AND a.number > 0 AND

b.type = 'P' AND b.number <= 710 AND b.number > 0

) a

ORDER BY NumberValue ASC

GO

Next, make sure is a clustered index on the table and that it isn’t fragmented after the rebuild. I use MAXDOP to ensure that the operation is single threaded, which removes any opportunity for fragmentation. Sometimes when a rebuild operation uses multiple worker threads the index can end up very slightly fragmented. This removes that possibility.

CREATE CLUSTERED INDEX ixNumbersTable ON NumbersTable(NumberValue)

WITH(MAXDOP=1)

GO

Next, to setup for the following demo, run the following to clear out the buffer pool and flush anything necessary to the log file. This will ensure that when I query the table later I’ll need to go out to disk to read the data pages.

DBCC DROPCLEANBUFFERS

CHECKPOINT

DBCC FREEPROCCACHE

GO

OK, now for the really cool stuff. If you don’t have it downloaded already, navigate to https://technet.microsoft.com/en-us/sysinternals/bb896645.aspx and download Process Monitor. This is a great tool that allows you to view real-time process & thread activity – including SQL Server activity! Once you download the file click on the Filter menu and enter the following filters.

Run the following query and watch the activity come through in Process Explorer. Because we just cleared out all pages from the buffer pool, we will have to go out to the data file to pull data back in. We can see these IOs as they occur through Process Monitor. We care mainly about the Length portion of the Detail column for our purposes today.

SELECT COUNT(*) FROM NumbersTable

We can see that in most cases, the IO requests above are in 512KB blocks. This is read-ahead at work. The read-ahead mechanism allows the Database Engine to read up to 64 contiguous pages (512KB) from one file in a single IO. The read is performed as a single scatter-gather read (see this blog post for more information on IO basics - https://blogs.msdn.com/b/sqlblog/archive/2006/09/20/whitepaper-sql-server-i-o-basics.aspx) to the appropriate number of (probably non-contiguous) buffers in the buffer cache. If any of the pages in the range are already present in the buffer cache, the corresponding page from the read will be discarded when the read completes. The range of pages may also be "trimmed" from either end if the corresponding pages are already present in the cache. This makes the scanning of data very efficient in cases where the data on disk is not fragmented. For more information on how fragmentation affects scan performance in SQL Server 2012, check out my post here: https://blogs.msdn.com/b/timchapman/archive/2012/12/03/visualizing-index-fragmenation.aspx.

You’ll also notice that the smallest IO is 64K in size. In this case we are reading extents because my buffer pool hadn’t ramped up quite yet. During the Ramp up phase which is before the buffer pool committed memory reaches the target memory, in order to expedite the ram up process every single page read is aligned into 8 page read requests. If my buffer pool had been ramped up, then a single page ready would be an 8K IO. We don’t always perform IOs on data files in 64K increments. Log files are a bit different in that we will write very smaller units of work to the log file – as small as 512 bytes in size (up to a max of 60K).

At this point, if you’ve loaded up the public symbols into Process Monitor (https://blogs.msdn.com/b/vijaysk/archive/2009/04/02/getting-better-stack-traces-in-process-monitor-process-explorer.aspx ), then you could view the functions calls by right clicking one of the ReadFile operations. I’m not going to show this here because I’ve got the private symbols loaded up, but it is something to play with if you really want to geek out.

If I fire up Excel, I can post the values from the Process Monitor capture and do some quick math to see how many pages were actually read into SQL Server. From my calculation, I can see that my scan query read in 1067 pages.

Another way to view this same information is through Extended Events (https://msdn.microsoft.com/en-us/library/bb630354(v=sql.105).aspx ) . In 2012, the file_read_completed event includes the size field, which equates to (as you can guess) the size of the IO. If I’ve done everything correctly, this should relate to the IOs that we captured in Process Monitor above. Notice that I am using a histogram (previously called a Bucketizer in 2008) target, which will allow me to aggregate data based on fields (or actions) retrieved by the event. This is a bit different than the event_counter target, which only counts occurrences of an event.

-- replace the Database ID by the appropriate DBID

CREATE EVENT SESSION [Reads] ON SERVER

ADD EVENT sqlserver.file_read_completed(

WHERE ([database_id]=(8)))

ADD TARGET package0.histogram(SET filtering_event_name=N'sqlserver.file_read_completed',

source=N'size',source_type=(0))

WITH

( MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,

MAX_DISPATCH_LATENCY=30 SECONDS,

MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,TRACK_CAUSALITY=OFF,

STARTUP_STATE=ON

)

GO

And, here is what it looks like to configure the event through the fancy new Extended Events UI in SSMS 2012. Notice that for an apples-to-apples comparison, I have to set the database_id value as a predicate. If I didn’t, it would capture file_read_completed events for all databases. I set a similar predicate using a filter to the file path in Process Monitor.

Looking at the number of pages captured in my Extended Event, after I do some quick math I can see that my xEvent caught the same number of page reads as my Process Monitor capture did. Awesome!

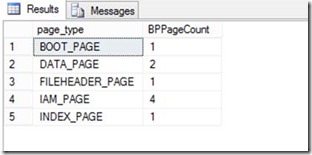

So, let’s do some investigation to see what pages were read in. First, I’ll somewhat redo my test and clear out my buffer pool. I’ll then query sys.dm_os_buffer_descriptors to see what pages are immediately read back into the BP (this is done for misc. engine purposes).

DBCC DROPCLEANBUFFERS

CHECKPOINT

DBCC FREEPROCCACHE

SELECT page_type, count(*) as BPPageCount

FROM sys.dm_os_buffer_descriptors d

WHERE database_id = DB_ID()

GROUP BY page_type

Cool, looks like we have around 9 pages. Now, let’s scan the table again to see how many we read into the buffer pool.

SELECT COUNT(*) FROM NumbersTable

If we compare the before and after page counts, we can see that the difference in pages is 1067 – which is the exact number we calculated from the IO reads from Process Monitor. Really cool!

Hope you think this is as cool as I do!

Tim Chapman

Comments

Anonymous

March 14, 2013

Yes it is cool, lots of stuff happening internally, if you run the events feature.Anonymous

April 02, 2013

Thank you! This is an excellent and nicely documented way of verifying read-ahead behaviour. The only comment I'd make is that the procmon filter does not work if the file(s) concerned are on a mount point - it will have to be modified so that it 'Ends with' the file name(s) concerned or to replace the path name with a value of e.g. DeviceHarddiskVolume10MSSQLDataNumbersAnonymous

April 30, 2013

Very cool and detailed post! Dissecting sql server to its finest is a very challenging task and you put fun and humor into it.