Improving the performance of web services in SL3 Beta

Silverlight 3 Beta introduces a new way to improve the performance of web services. Many of you have probably used the Silverlight-enabled WCF Service item template in Visual Studio to create a WCF web service, and then used the Add Service Reference command in your Silverlight application project to access it. In SL3, the item template has undergone a small change: it now turns on the binary message encoder, which significantly improves the performance of the WCF services you build. Note that this is the same binary encoder that has been available in .NET since WCF was first released, so WCF developers will find the object model very familiar.

The best part is that the change is made entirely in the service configuration file (Web.config) and does not affect how you use the service. (Check out this post for a brief description of exactly what the change is.)

I wanted to share some data that shows exactly how noticeable this performance improvement is, and perhaps convince some of you to consider migrating your apps from SL2 to SL3.

When Silverlight applications use web services, they exchange XML-based messages in the SOAP format. In SL2, those messages were always encoded as plain text for transmission; you could open an HTTP traffic logger and read them. However, plain text is far from a compact encoding for sending across the wire, and far from fast to decode on the server side. With the binary encoder, messages are encoded using the WCF binary encoding, which provides two main advantages: increased server throughput and decreased message size.

Increased server throughput

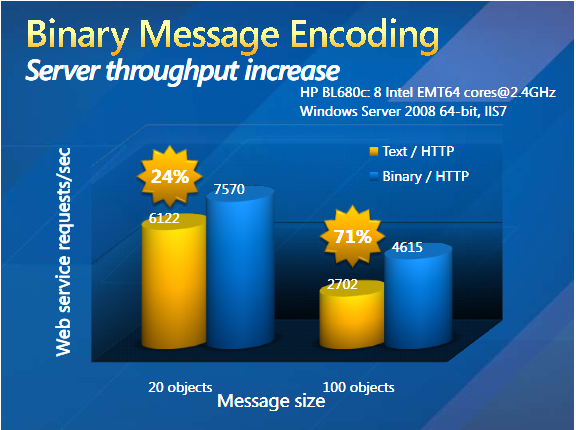

Let’s examine the graph above (hat tip to Greg Leake of StockTrader fame for collecting this data). Here is the scenario we measure: the client sends a payload, and the server receives it and sends it back to the client. Many clients are used to load the service up to its peak throughput. We run the test once using the text encoding and once using the new binary encoding, and compare the peak throughput at the server. We do this for two message sizes: in the smaller one the payload is an array of 20 objects, and in the larger one the payload is an array of 100 objects.

Some more details for the curious: the service is configured to ensure that no throttling happens, and a new instance of the service is created for every client call (known as PerCall instancing). Ten physical clients drive the load, each running many threads that hit the service in a tight loop (with a small 0.1-second think time between requests) and use a shared channel to reduce client load. The graph measures peak throughput on the service at 100% CPU saturation. Note that this test used regular .NET clients rather than Silverlight clients; since we are measuring server throughput, the type of client does not matter.
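The load pattern described above, where each client thread sends a payload, waits for the echo, pauses for a short think time, and repeats, is a closed-loop load generator. The Python sketch below illustrates only that loop; it is not the harness used for these measurements. `echo_service` is a hypothetical in-process stand-in for the WCF echo operation, and `run_load` and its parameters (thread count, duration, think time, payload size) are illustrative assumptions.

```python
import threading
import time


def echo_service(payload):
    # Hypothetical stand-in for the WCF echo operation:
    # the "server" receives the payload and returns it unchanged.
    return payload


def run_load(num_threads=8, duration_s=1.0, think_time_s=0.1, payload_size=20):
    """Closed-loop load test: each thread sends a request, waits for the
    reply, sleeps for the think time, and repeats until the deadline."""
    payload = [{"id": i, "name": f"item{i}"} for i in range(payload_size)]
    completed = [0] * num_threads  # one counter slot per thread, so no lock is needed
    deadline = time.monotonic() + duration_s

    def worker(index):
        while time.monotonic() < deadline:
            reply = echo_service(payload)
            assert reply == payload  # the echo must round-trip the payload intact
            completed[index] += 1
            time.sleep(think_time_s)  # think time between requests

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    start = time.monotonic()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    elapsed = time.monotonic() - start

    total = sum(completed)
    return total, total / elapsed  # (requests completed, requests per second)


if __name__ == "__main__":
    total, rps = run_load(num_threads=8, duration_s=1.0)
    print(f"{total} requests, {rps:.1f} requests/s")
```

Because each thread waits for its reply and then sleeps, the load offered by one thread is capped by the think time; pushing a real service to 100% CPU therefore takes many clients and threads, as in the test described above.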

When sending the smaller message we see a 24% increase in server throughput, and with the larger message we see a 71% increase. We expect the gains from the binary encoder to grow further as message complexity increases.

What does that mean to you? If you run a service that is used by Silverlight clients and you exchange non-trivial messages, you can support significantly more clients if those clients use SL3’s binary encoding. As usage of your service grows, that could mean buying and deploying fewer additional servers.
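To make the percentages concrete, here is a back-of-the-envelope sketch in Python. If binary encoding multiplies per-server peak throughput by (1 + gain), a fixed load needs roughly 1 / (1 + gain) as many servers. Only the 24% and 71% gains come from the measurements above; the total load and the per-server text-encoding throughput are hypothetical numbers chosen for illustration, and real capacity planning would also account for headroom and non-CPU bottlenecks.

```python
import math


def servers_needed(target_rps, per_server_rps):
    """Smallest whole number of servers that can sustain target_rps."""
    return math.ceil(target_rps / per_server_rps)


def compare_server_counts(target_rps, text_rps_per_server, throughput_gain):
    """Server counts for text vs. binary encoding.

    throughput_gain is fractional: 0.24 means binary gives +24% per server.
    """
    binary_rps_per_server = text_rps_per_server * (1 + throughput_gain)
    return (
        servers_needed(target_rps, text_rps_per_server),
        servers_needed(target_rps, binary_rps_per_server),
    )


# Hypothetical inputs: 20,000 requests/s in total, and one server
# peaking at 1,000 requests/s with text encoding.
for label, gain in [("20-object payload", 0.24), ("100-object payload", 0.71)]:
    text_servers, binary_servers = compare_server_counts(20_000, 1_000, gain)
    print(f"{label}: {text_servers} servers with text, {binary_servers} with binary")
```

With these hypothetical inputs it prints 20 versus 17 servers for the 20-object payload and 20 versus 12 for the 100-object payload; before rounding up, that is roughly 19% and 42% fewer servers.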

Decreased message size

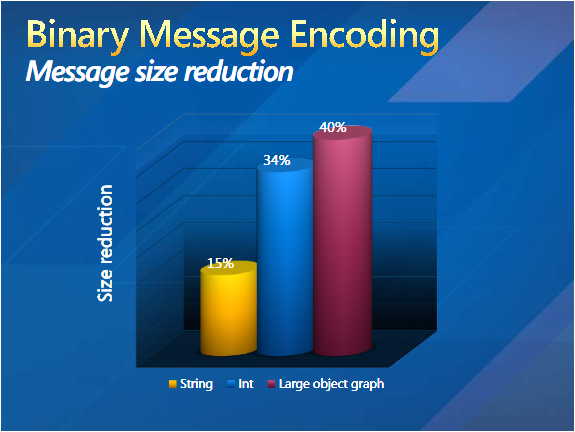

Another benefit of the binary encoder is that, because messages sent on the wire are no longer plain text, their average size decreases. Let’s be clear on this point: the main reason to use the binary encoding is to increase service throughput, as discussed in the previous section. The smaller message size is a nice side effect, but let’s face it: you can accomplish the same effect by turning on compression at the HTTP level.
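The following Python sketch illustrates the HTTP-compression point: it builds a text-encoded SOAP 1.1 envelope carrying an array of objects and compares its size before and after gzip, the compression commonly applied through HTTP content encoding. The `EchoResponse` and `Item` elements and their fields are hypothetical, and the sketch does not reproduce WCF's binary encoder; it only shows how much redundancy plain-text XML carries.

```python
import gzip


def build_soap_message(num_items):
    """Build a text SOAP 1.1 envelope whose body holds an array of objects.

    The element names inside the body are hypothetical examples.
    """
    items = "".join(
        f"<Item><Id>{i}</Id><Name>Product {i}</Name><Price>{i * 1.5:.2f}</Price></Item>"
        for i in range(num_items)
    )
    return (
        '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">'
        "<s:Body><EchoResponse><EchoResult>"
        f"{items}"
        "</EchoResult></EchoResponse></s:Body></s:Envelope>"
    ).encode("utf-8")


for n in (20, 100):
    text = build_soap_message(n)
    compressed = gzip.compress(text)  # what HTTP-level gzip would send
    print(f"{n} items: {len(text)} bytes as text, {len(compressed)} bytes gzipped")
```

The repeated tag names compress very well, which is why HTTP compression can rival a binary encoding on size alone; the throughput argument in the previous section is a separate matter.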

This test was far less comprehensive than the previous one; we ran it ad hoc on my co-worker’s office machine. We took various objects inside a Silverlight control and turned them into the same kind of SOAP messages that are sent to the service, once with the plain-text encoding and once with the binary encoding, and then compared the message sizes in bytes. The results are shown in the chart above.

The takeaway here is that the reduction of message size depends on the nature of the payload: sending large instances of system types (for example a long String) will result in a modest reduction, but the largest gains occur when complex object graphs are being encoded (for example objects with many members, or arrays).
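One way to see why complex object graphs shrink more than long strings: much of a text XML message is markup (repeated element names and closing tags), while a string's characters must be transmitted in full under any encoding. The Python sketch below is a toy, not WCF's actual binary format: it writes each element name once, refers to it afterwards by a small dictionary index, encodes each end element as a single byte, and length-prefixes text. The record markers, payload shapes, and `toy_binary_encode` function are all hypothetical, and the toy ignores attributes, namespaces, and mixed content.

```python
import xml.etree.ElementTree as ET

# Arbitrary toy record markers (NOT the real WCF binary XML values).
END, DICT_NAME, NEW_NAME, TEXT = 0x01, 0x02, 0x03, 0x04


def varint(n):
    """Encode a non-negative int in 7-bit groups, low group first."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # more groups follow
        else:
            out.append(byte)
            return bytes(out)


def toy_binary_encode(xml_text):
    """Toy binary XML encoder: element names go into a dictionary on first
    use and are referenced by index afterwards; text is length-prefixed."""
    root = ET.fromstring(xml_text)
    dictionary = {}
    out = bytearray()

    def write_string(s):
        data = s.encode("utf-8")
        out.extend(varint(len(data)))
        out.extend(data)

    def encode(elem):
        if elem.tag in dictionary:
            out.append(DICT_NAME)  # name already seen: emit its index only
            out.extend(varint(dictionary[elem.tag]))
        else:
            dictionary[elem.tag] = len(dictionary)
            out.append(NEW_NAME)  # first occurrence: emit the name in full
            write_string(elem.tag)
        if elem.text:
            out.append(TEXT)
            write_string(elem.text)
        for child in elem:
            encode(child)
        out.append(END)  # closing tag is one byte, no repeated name

    encode(root)
    return bytes(out)


long_string = "<Echo><Text>" + "x" * 2000 + "</Text></Echo>"
object_array = "<Echo>" + "".join(
    f"<Item><Id>{i}</Id><Name>N{i}</Name><Qty>{i % 7}</Qty></Item>" for i in range(100)
) + "</Echo>"

for label, xml_text in [("long string", long_string), ("object array", object_array)]:
    text_size = len(xml_text.encode("utf-8"))
    binary_size = len(toy_binary_encode(xml_text))
    print(f"{label}: {text_size} -> {binary_size} bytes ({binary_size / text_size:.0%} of text)")
```

In this toy, the long string barely shrinks while the 100-object array comes out at a little under half its text size, which mirrors the pattern described above.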

What does this mean to you? If you run a web service and you pay your ISP for the traffic your service generates, using binary encoding will reduce the size of messages on the wire, and hopefully lower your bandwidth bills as traffic to your service increases.

Conclusion

We are confident that binary encoding is the right choice for most backend WCF service scenarios: you should never see a regression over text encoding when it comes to server throughput or message size; hopefully you will see performance gains in most cases. This is why the binary encoder is the new default in the Silverlight-enabled WCF Service item template in Visual Studio.

An important note: binary encoding is only supported by WCF services and clients, and so it is not the right choice if you aren’t using WCF end-to-end. If your service needs to be accessed by non-WCF clients, using binary encoding may not be possible. The binary AMF encoding used by Adobe Flex is similarly restricted to services that support it.

Comments

Anonymous

June 07, 2009

PingBack from http://blogs.msdn.com/silverlightws/archive/2009/06/07/improving-the-performance-of-web-services-in-sl3-beta.aspx

Anonymous

June 08, 2009

Hi guys, it's rather strange to measure message size in objects. Maybe you could provide some kilobyte equivalents. Thanks, Alexey Zakharov.

Anonymous

June 08, 2009

How does this compare to using compression in IIS? From what we saw, an uncompressed WCF XML message of, let's say, 1 MB compressed down to about the same size as the binary result (and sometimes smaller). The compressed XML message also seemed faster to process (perceived). The question is: is it more efficient to gzip a very compressible string than to serialize the binary message? It seems to me that the consideration is not only the server serving it up, but also the strain on the client to process the message.

Anonymous

June 08, 2009

Thanks for your questions folks. @lexer - the complexity of the object is what will cause the difference in message size reduction, this is why we use that as a measure. For example, we could have two messages with the exact same size in KB, but due to the complexity of the objects serialized inside those messages, we could see very different sizes when encoded using the binary encoder. @bmalec - great point, we should look at GZIP-compressed text-encoded XML alongside binary XML. Like I mentioned in the post, I would not be surprised if compressed text might beat binary purely for size, but again the main reason for using binary is to increase the server throughput. Although we haven't explicitly measured this, server throughput should always be better using binary. We haven't looked at client-side but you shouldn't see regressions when you use binary. Thanks, -Yavor