Top 14 Updates and New Technologies for Deploying SAP on Azure

Below is a list of updates, recommendations and new technologies for customers moving SAP applications onto Azure.

At the end of the blog is a checklist we recommend all customers follow when planning to deploy SAP environments on Azure.

1. High Performance & UltraPerformance Gateway

The UltraPerformance Gateway has been released.

This gateway supports only ExpressRoute links and has a much higher maximum throughput.

The UltraPerformance Gateway is very useful on projects where a large volume of R3load dump files or database backups needs to be uploaded to Azure.

https://azure.microsoft.com/en-us/documentation/services/vpn-gateway/

2. Accelerated Networking

A new feature to drastically increase the bandwidth between DS15v2 VMs running on the same VNet has been released. The latency between VMs with Accelerated Networking is greatly reduced.

This feature is available in most data centers already and is based on the concept of SR-IOV.

Accelerated networking is particularly useful when running SAP Upgrades or R3load migrations. Both the DB server and the SAP Application servers should be configured for Accelerated networking.

Large 3 tier systems with many SAP Application servers will also benefit from Accelerated Networking on the Database server.

For example a highly scalable configuration would be a DS15v2 database server running SQL Server 2016 with Buffer Pool Extension enabled and Accelerated Networking and 6 D13v2 application servers:

Database Server: DS15v2 database server running SQL Server 2016 SP1 with Buffer Pool Extension enabled and Accelerated Networking. 110GB of memory for SQL Server cache (SQL Max Memory) and another ~200GB of Buffer Pool Extension

Application Server: 6 * D13v2 each with two SAP instances with 50 work processes and PHYS_MEMSIZE set to 50%. A total of 600 work processes (6 * D13v2 VMs * 50 work processes per instance * 2 instances per VM = 600)

The SAPS value for such a 3 tier configuration is around 100,000 SAPS: 30,000 SAPS for the DB layer (DS15v2) plus 70,000 SAPS for the app layer (6 x D13v2).
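The arithmetic behind this example can be checked with a short sketch. The figures come from the configuration described above; the variable names are illustrative only.

```python
# Figures from the example configuration above; names are illustrative.
app_vms = 6                       # D13v2 application server VMs
instances_per_vm = 2              # SAP instances per VM
work_processes_per_instance = 50  # work processes per SAP instance

total_work_processes = app_vms * instances_per_vm * work_processes_per_instance

db_saps = 30_000   # approximate SAPS for the DB layer (DS15v2)
app_saps = 70_000  # approximate SAPS for the app layer (6 x D13v2)
total_saps = db_saps + app_saps

print(total_work_processes)  # 600
print(total_saps)            # 100000
```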

3. Multiple ASCS or SQL Server Availability Groups on a Single Internal Load Balancer

Prior to the release of multiple frontend IP addresses for an ILB each SAP ASCS required a dedicated 2 node cluster.

Example: a customer with SAP ECC, BW, SCM, EP, PI, SolMan, GRC and NWDI would need 8 separate 2 node clusters = total of 16 small VMs for the SAP ASCS layer.

With the release of the multiple ILB frontend IP address feature only 2 small VMs are now required.

A single Internal Load Balancer can now bind multiple frontend IP addresses. These frontend IP addresses can be listening on different ports such as the unique port assigned to each AlwaysOn Availability Group listener or the same port such as 445 used for Windows File Shares.

A script with the PowerShell commands to set the ILB configuration is available here

Note: It is now possible to assign a Frontend IP address to the ILB for the Windows Cluster Internal Cluster IP (this is the IP used by the cluster itself). Assigning the IP address of the Cluster to the ILB allows the cluster admin tool and other utilities to run remotely.

Up to 30 Frontend IP addresses can be allocated to a single ILB. The default Service Limit in Azure is 5. A support request can be created to get this limit increased.
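As a rough illustration of the saving, the sketch below counts the VMs needed before and after the multiple frontend IP feature for the eight-system example. It assumes one frontend IP per ASCS and ignores any extra frontend IP for the cluster itself; the variable names are hypothetical.

```python
# SAP systems from the example above, each with its own ASCS.
sap_systems = ["ECC", "BW", "SCM", "EP", "PI", "SolMan", "GRC", "NWDI"]
nodes_per_cluster = 2

# Before: one dedicated 2 node cluster per ASCS.
vms_before = len(sap_systems) * nodes_per_cluster

# After: all ASCS instances share a single 2 node cluster.
vms_after = nodes_per_cluster

# Assumption: one ILB frontend IP per ASCS.
frontend_ips_needed = len(sap_systems)
default_frontend_ip_limit = 5  # default service limit quoted in this blog
max_frontend_ips = 30          # maximum quoted in this blog

needs_limit_increase = frontend_ips_needed > default_frontend_ip_limit
fits_on_one_ilb = frontend_ips_needed <= max_frontend_ips

print(vms_before, vms_after)                  # 16 2
print(needs_limit_increase, fits_on_one_ilb)  # True True
```

In this example the eight frontend IPs exceed the default limit of 5, so a support request would be needed before deployment.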

The following PowerShell commands are used

New-AzureRmLoadBalancer

Add-AzureRmLoadBalancerFrontendIpConfig

Add-AzureRmLoadBalancerProbeConfig

Add-AzureRmLoadBalancerBackendAddressPoolConfig

Set-AzureRmNetworkInterface

Add-AzureRmLoadBalancerRuleConfig

4. Encrypted Storage Accounts, Azure Key Vault for SQL TDE Keys & Azure Disk Encryption (ADE)

SQL Server supports Transparent Data Encryption (TDE). SQL Server keys can be stored securely inside the Azure Key Vault. SQL Server 2014 and earlier can retrieve keys from the Azure Key Vault with a free Connector utility. SQL Server 2016 onwards natively supports the Azure Key Vault. It is generally recommended to encrypt a database before loading data with R3load, as the overhead involved is only ~5%. Applying TDE after an import is possible, but this will take a lot of time on large databases. The recommended cipher is AES-256. Backups are encrypted on TDE systems.

Azure Disk Encryption (ADE) is a technology like BitLocker. It is preferable not to use ADE on disks holding DBMS datafiles, temp files or log files. The recommended technology to secure SQL Server (or other DBMS) datafiles at rest is TDE (or the native DBMS encryption tool).

It is strongly recommended not to use both SQL Server TDE and ADE disks in combination. This may create a large overhead and is a scenario that has not been tested. ADE is useful for encrypting the OS boot disk.

The Azure Platform now supports Encrypted Storage Accounts. This feature encrypts at-rest data on a storage account.

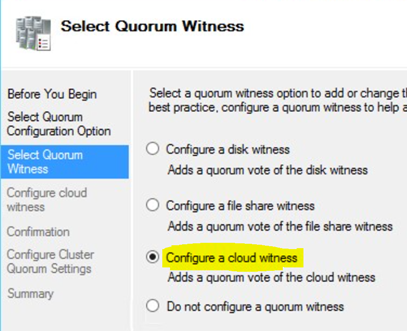

5. Windows Server 2016 Cloud Witness

Windows Server 2016 will be generally available for SAP customers in Q1 2017 based on current planning.

One very useful feature is the Cloud Witness.

A general recommendation for Windows Clusters on Azure is:

1. Use Node & File Share Witness Majority with Dynamic Quorum

2. The File Share Witness should be in a third location (not in the same location as either primary or DR)

3. There should be independent redundant network links between all three locations (primary, DR, File Share Witness)

4. Systems that have a very high SLA may require the File Share Witness share to be highly available (thus requiring another cluster)

Until Windows 2016 there were several problems with this approach:

1. Many customers compromised the DR solution by placing the FSW in the primary site

2. Often the FSW was not Highly Available

3. The FSW required at least one additional VM leading to increased costs

4. If the FSW was Highly Available this required 2 VMs and software shared disk solutions like SIOS. This increases costs

Windows Server 2016 resolves this problem with the Cloud Witness:

1. The FSW is now a Platform as a Service (PaaS) role

2. No VM is required

3. The FSW is now automatically highly available

4. There is no ongoing maintenance, patching, HA or other activities

5. After the Cloud Witness is set up it is a Managed Service

6. The Cloud Witness can be in any Azure datacenter (a third location)

7. The Cloud Witness can be reached over standard internet links (avoiding the requirement for redundant independent links to the FSW)

6. Azure Site Recovery Azure-2-Azure (ASR A2A)

Azure Site Recovery is an existing technology that replicates VMs from a source to a target in Azure.

A new scenario will be released in Q1. The new scenario is Azure to Azure ASR.

Scenarios that replicate Hyper-V, physical or VMware servers to Azure are already Generally Available.

The key differentiators between Azure Site Recovery (ASR) and competing technologies:

Azure Site Recovery substantially lowers the cost of DR solutions. Virtual Machines are not charged for unless there is an actual DR event (such as fire, flood, power loss or test failover). No Azure compute cost is charged for VMs that are synchronizing to Azure. Only the storage cost is charged.

Azure Site Recovery allows customers to perform non-disruptive DR Tests. ASR Test Failovers copy all the ASR resources to a test region and start up all the protected infrastructure in a private test network. This eliminates any issues with duplicate Windows computer names. Another important capability is that Test Failovers do not stop, impair or disrupt VM replication from on-premises to Azure. A test failover takes a "snapshot" of all the VMs and other objects at a particular point in time.

The resiliency and redundancy built into Azure far exceeds what most customers and hosters are able to provide. Azure blob storage stores at least 3 independent copies of data, thereby eliminating the chances of data loss even in the event of a failure on a single storage node.

ASR "Recovery Plans" allow customers to create sequenced DR failover / failback procedures or runbooks. For example, a customer might create an ASR Recovery Plan that first starts up Active Directory servers (to provide authentication and DNS services), then executes a PowerShell script to perform a recovery on DB servers, then starts up SAP Central Services and finally starts SAP application servers. This allows "Push Button" DR.

Azure Site Recovery is a heterogeneous solution and works with Windows and Linux and works well with SQL Server, Oracle, Sybase and DB2.

Additional Information:

1. To set up an ASCS on more than 2 nodes review SAP Note 1634991 - How to install an ASCS or SCS instance on more than 2 cluster nodes

2. SAP Application Servers in the DR site are not running. No compute costs incurred

3. Costs can be reduced further by decreasing the size of the DR SQL Servers. Use a smaller cheaper VM sku and upgrade to a larger VM if a DR event occurs

4. Services such as Active Directory must be available in DR

5. SIOS or BNW AFSDrive can be used to create a shared disk on Azure. SAP requires a shared disk for the ASCS as at November 2016

6. Costs can be reduced by removing HA from DR site (only 1 DB and ASCS node)

7. Careful planning around cluster quorum models / voting should be done. Explain cluster model to operations teams. Use Dynamic Quorum

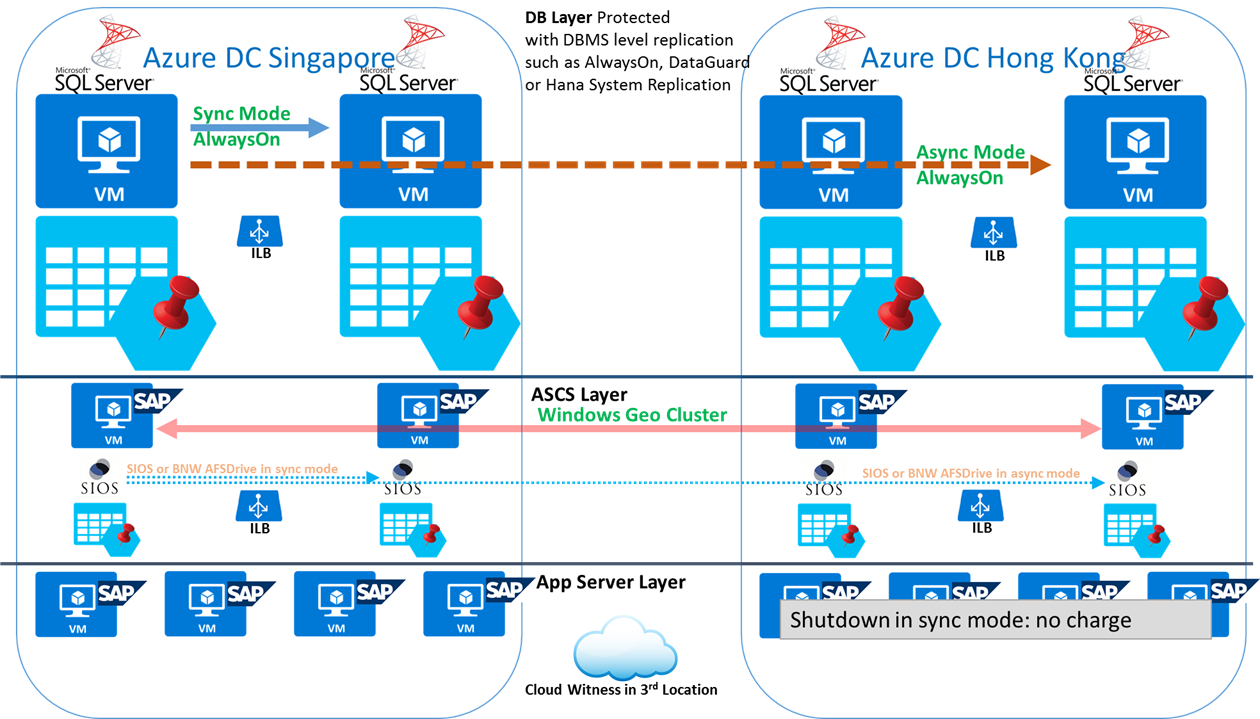

Diagram showing DR with HA

7. Pinning Storage

Azure storage accounts can be "affinitized" or "pinned" to specific storage stamps. A stamp can be considered a separate storage device.

It is strongly recommended to use a separate storage account for the DBMS files of each database replica.

In the example below the following configuration is deployed:

1. SQL Server AlwaysOn Node 1 uses Storage Account 1

2. SQL Server AlwaysOn Node 2 uses Storage Account 2

3. A support request was opened and Storage Account 1 was pinned to stamp 1, Storage Account 2 was pinned to stamp 2

4. In this configuration the failure of the underlying storage infrastructure will not lead to an outage.

8. Increase Windows Cluster Timeout Parameters

When running Windows Cluster on Azure VMs it is recommended to apply a hotfix to Windows 2012 R2 to increase the Cluster Timeout values to the defaults that are set on Windows 2016.

To increase the Windows 2012 R2 cluster timeouts to those defaulted in Windows 2016 please apply this KB https://support.microsoft.com/en-us/kb/3153887

No action is required on Windows 2016. The values are already correct

More information can be found in this blog

9. Use ARM Deployments, Use Dv2 VMs, Single VM SLA and Use Premium Storage for DBMS only

Most of the new enhanced features discussed in this blog are ARM only features. These features are not available on old ASM deployments. It is therefore strongly recommended to only deploy ARM based systems and to migrate ASM systems to ARM.

Azure D-Series v2 VM types have fast powerful Haswell processors that are significantly faster than the original D-Series.

All customers should use Premium Storage for the Production DBMS servers and for non-production systems.

Premium Storage should also be used for Content Server, TREX, LiveCache, Business Objects and other IO intensive non-Netweaver file based or DBMS based applications

Premium Storage is of no benefit on SAP application servers

Standard Storage can be used for database backups or storing archive files or interface files

More information can be found in SAP Note 2367194 - Use of Azure Premium SSD Storage for SAP DBMS Instance

Azure now offers a financially backed SLA for single VMs. Previously an SLA was only offered for VMs in an availability set. Improvements in online patching and reliability technologies allow Microsoft to offer this feature.

10. Sizing Solutions for Azure – Don't Just Map Current VM CPU & RAM Sizing

There are a few important factors to consider when developing the sizing solution for SAP on Azure:

1. Unlike on-premises deployments there is no requirement to provide a large sizing buffer for expected growth or changed requirements over the lifetime of the hardware. For example when purchasing new hardware for an on-premises system it is normal to purchase sufficient resources to allow the hardware to last 3-4 years. On Azure this is not required. If additional CPU, RAM or Storage is required after 6 months, this can be immediately provisioned

2. Unlike most on-premises deployments on Intel servers, Azure VMs do not use Hyper-Threading as at November 2016. This means that the thread performance on Azure VMs is significantly higher than on most on-premises deployments. D-Series v2 VMs deliver more than 1,500 SAPS/thread

3. If the current on-premises SAP application server is running on 8 CPUs and 56GB of RAM, this does not automatically mean a D13v2 is required. Instead it is recommended to:

a. Measure the CPU, RAM, network and disk utilization

b. Identify the CPU generation on-premises – Azure infrastructure is renewed and refreshed more frequently than most customer deployments.

c. Factor in the CPU generation and the average resource utilization. Try to use a smaller VM

4. If switching from 2-tier to 3-tier configurations it is recommended to review this blog

5. Review this blog on SAP on Azure Sizing

6. After go live monitor the DB and APP servers and determine if they need to be increased or decreased in size
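One way to make step 3 concrete is sketched below. It is only an illustration: the function name, the 65% target utilization and the on-premises figures are hypothetical, and the ~1,500 SAPS per thread value for D-Series v2 is the only number taken from the text above. A real sizing should use measured data and published SAPS values.

```python
import math

DV2_SAPS_PER_THREAD = 1500  # from the text: D-Series v2 delivers more than 1,500 SAPS/thread


def estimate_azure_threads(onprem_threads, onprem_saps_per_thread,
                           peak_cpu_utilization, target_utilization=0.65):
    """Rough estimate of Dv2 threads needed for a measured on-premises load.

    All inputs are supplied by the caller. target_utilization is a
    hypothetical planning value, not a Microsoft or SAP figure.
    """
    # SAPS actually consumed at peak on the existing server.
    consumed_saps = onprem_threads * onprem_saps_per_thread * peak_cpu_utilization
    # Capacity needed so that the same load runs at the target utilization.
    required_saps = consumed_saps / target_utilization
    return math.ceil(required_saps / DV2_SAPS_PER_THREAD)


# Hypothetical server: 8 threads at ~1,000 SAPS/thread, peaking at 40% CPU.
threads = estimate_azure_threads(8, 1000, 0.40)
print(threads)  # 4
```

Under these assumed inputs an 8 CPU server maps to roughly 4 Dv2 threads, which illustrates why a like-for-like mapping to a D13v2 may be oversized.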

11. Fully Read & Review the SAP on Azure Deployment Guides

Before starting a project all technical members of the project team should fully review the SAP on Azure Deployment Guides

These guides contain the recommended deployment patterns and other important information

Ensure the Azure VM monitoring agents for ST06 are installed as documented in SAP Note 2015553 - SAP on Microsoft Azure Support prerequisites

SAP systems are not supported on Azure until this SAP Note is fully implemented

12. Upload R3load Dump Files with AzCopy, RoboCopy or FTP

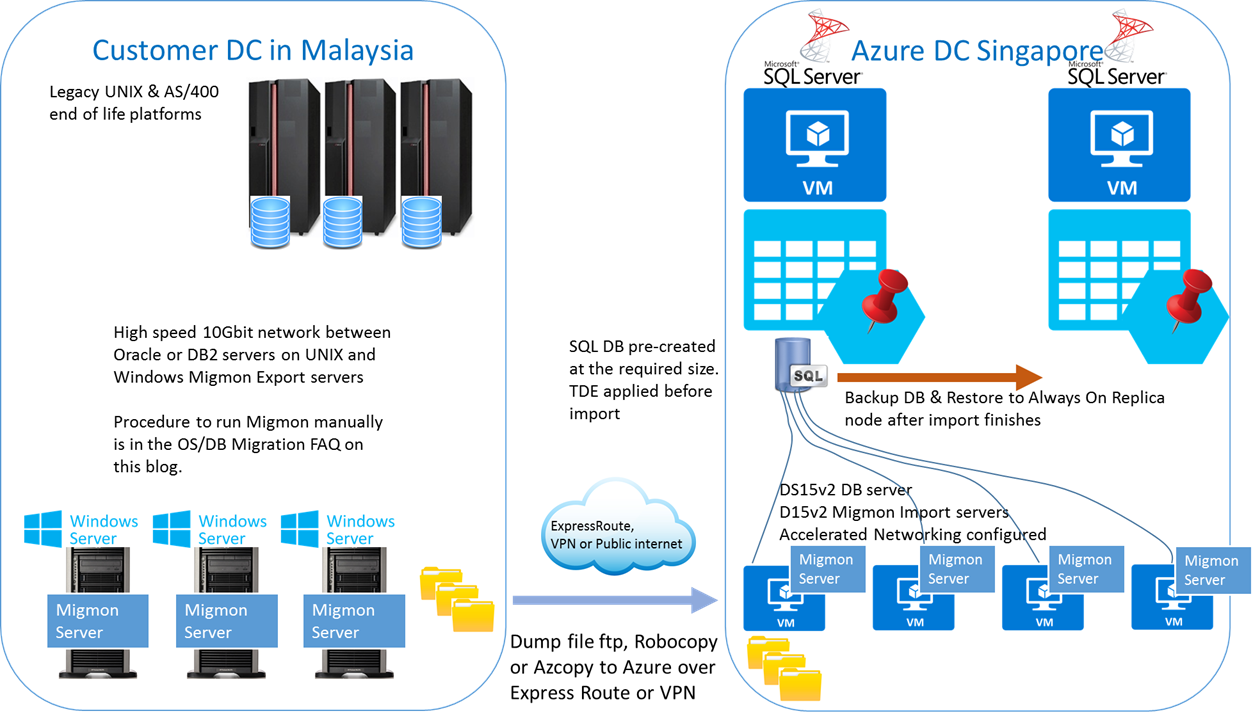

The diagram below shows the recommended topology for exporting a system from an existing datacenter and importing on Azure.

SAP Migration Monitor includes built in functionality to transfer dump files with FTP.

Some customers and partners have developed their own scripts to copy the dump files with Robocopy.

AzCopy can also be used. This tool does not need a VPN or ExpressRoute to be set up, because AzCopy transfers data directly to the storage account.

13. Use the Latest Windows Image & SQL Server Service Pack + Cumulative Update

The latest Windows Server image includes all important updates and patches. It is recommended to use the latest Windows Server OS image available in the Azure Gallery.

The latest DBMS versions and patches are recommended.

We do not generally recommend deploying SQL Server 2008 R2 or earlier for any SAP system. SQL Server 2012 should only be used for systems that cannot be patched to support more recent SQL Server releases.

SQL Server 2014 has been supported by SAP for some time and is in widespread deployment amongst SAP customers already both on-premises and on Azure

SQL Server 2016 is supported by SAP for SAP_BASIS 7.31 and higher releases and has already been successfully deployed in Production at several large customers including a major global energy company. Support for SQL 2016 for Basis 7.00 to 7.30 is due soon.

The latest SQL Server Service Packs and Cumulative updates can be downloaded from here.

Due to a change in incremental servicing policies, only the very latest SQL Server CU will be available for download.

Previous Cumulative Updates can be downloaded from here.

Related SAP Notes:

1966681 - Release planning for Microsoft SQL Server 2014

2201059 - Release planning for Microsoft SQL Server 2016

The Azure platform fully supports Windows, SUSE 12 or higher and RHEL 7 or higher. Oracle, DB2, Sybase, MaxDB and HANA are all supported on Azure.

Many customers utilize the move from on-premises to Cloud to switch to a single support vendor and switch to Windows and SQL Server

Microsoft has released a Database Trade In Program that allows customers to trade in DB2, Oracle or other DBMS and obtain SQL Server licenses free of charge (conditions apply).

The Magic Quadrant for Operational Database Management Systems places SQL Server in the lead in 2015. This lead was further extended in the 2016 Magic Quadrant

14. Migration to Azure Pre-Flight Checklist

Below is a recommended Checklist for customers and partners to follow when migrating SAP applications to Azure.

1. Survey and Inventory the current SAP landscape. Identify the SAP Support Pack levels and determine if patching is required to support the target DBMS. In general the Operating Systems Compatibility is determined by the SAP Kernel and the DBMS Compatibility is determined by the SAP_BASIS patch level.

Build a list of SAP OSS Notes that need to be applied in the source system, such as updates for SMIGR_CREATE_DDL. Consider upgrading the SAP Kernels in the source systems to avoid a large change during the migration to Azure (e.g. if a system is running an old 7.41 kernel, update to the latest 7.45 on the source system before the migration).

2. Develop the High Availability and Disaster Recovery solution. Build a PowerPoint that details the HA/DR concept. The diagram should break up the solution into the DB layer, ASCS layer and SAP application server layer. Separate solutions might be required for standalone solutions such as TREX or Livecache

3. Develop a Sizing & Configuration document that details the Azure VM types and storage configuration. How many Premium Disks, how many datafiles, how are datafiles distributed across disks, usage of storage spaces, NTFS Format size = 64kb. Also document Backup/Restore and DBMS configuration such as memory settings, Max Degree of Parallelism and traceflags

4. Network design document including VNet, Subnet, NSG and UDR configuration

5. Security and Hardening concept. Remove Internet Explorer, create an Active Directory Container for SAP Service Accounts and Servers and apply a Firewall Policy blocking all but a limited number of required ports

6. Create an OS/DB Migration Design document detailing the Package & Table splitting concept, number of R3loads, SQL Server traceflags, Sorted/Unsorted, Oracle RowID setting, SMIGR_CREATE_DDL settings, Perfmon counters (such as BCP Rows/sec & BCP throughput kb/sec, CPU, memory), RSS settings, Accelerated Networking settings, Log File configuration, BPE settings, TDE configuration

7. Create a "Flight Plan" graph showing progress of the R3load export/import on each test cycle. This allows the migration consultant to validate whether tunings and changes improve R3load export or import performance. X axis = number of packages complete. Y axis = hours. This flight plan is also critical during the production migration so that the planned progress can be compared against the actual progress and any problems identified early.

8. Create performance testing plan. Identify the top ~20 online reports, batch jobs and interfaces. Document the input parameters (such as date range, sales office, plant, company code etc) and runtimes on the original source system. Compare to the runtime on Azure. If there are performance differences run SAT, ST05 and other SAP tools to identify inefficient statements

9. SAP BW on SQL Server. Check this blogsite regularly for new features for BW systems including Column Store

10. Audit the deployment and configuration. Ensure cluster timeouts, kernels, network settings and NTFS format size are all consistent with the design documents. Set perfmon counters on important servers to record basic health parameters every 90 seconds. Audit that the SAP Servers are in a separate AD Container and that the container has a Policy applied to it with Firewall configuration.
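The flight plan from item 7 can be tracked with very little tooling. The sketch below is one possible approach and is not part of any SAP or Microsoft tool: it compares planned and actual cumulative package counts at each elapsed hour and reports the first hour at which the actual run falls behind the plan by more than a tolerance. The data, the function name and the 10% tolerance are hypothetical.

```python
def first_slippage(planned, actual, tolerance=0.10):
    """Return the first elapsed hour at which actual progress lags the plan.

    planned and actual map elapsed hours to cumulative packages completed.
    Returns None if the actual run stays within tolerance of the plan.
    """
    for hour in sorted(actual):
        target = planned.get(hour)
        if target is None:
            continue  # no planned value recorded for this hour
        if actual[hour] < target * (1 - tolerance):
            return hour
    return None


# Hypothetical figures: cumulative packages completed by elapsed hour.
planned = {1: 40, 2: 90, 3: 150, 4: 200}
actual = {1: 42, 2: 85, 3: 120}

print(first_slippage(planned, actual))  # 3
```

With these sample figures the import is on plan for the first two hours and falls more than 10% behind in hour 3, which is the point at which the migration consultant would investigate.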

Content from third party websites, SAP and other sources reproduced in accordance with Fair Use criticism, comment, news reporting, teaching, scholarship, and research