Moving from SAP 2-Tier to 3-Tier configuration and performance seems worse

Recently we were involved in a case where a customer moved an SAP ERP system from a 2-Tier configuration to a 3-Tier configuration in a virtualized environment. The customer had virtualized the system and could not provide VMs large enough on the new host hardware to continue with a 2-Tier SAP setup. Hence the ASCS and the only dialog instance were moved into one VM, whereas the DBMS server ran in another VM hosted in the same private cloud. Functional testing of the new configuration was positive. Performance-wise, however, some of the batch processes were running factors slower. Not a few percentage points, but really factors apart from the run time those batch jobs needed in the 2-Tier configuration.

So investigations went in several directions. Did something change in the DBMS settings? No, not really. The DBMS got a bit more memory, but no other parameters changed. The ASCS and the primary application server were also configured the same way. Nothing really changed in the configuration. Since the tests ran a single batch job, issues around network scalability could be excluded as well.

So the next step was to look into the SAP single record statistics (transaction STAD). They showed rather high database times for the selects that one of the slowest running batch jobs issued against the database. It looked like a lot of the run time accumulated while selecting data from the SQL Server DBMS. On the other hand, there was no reason why the DBMS VM should run slowly. We are talking about one single batch job that was running against the system during the tests. In the end we found out what the issue was. Correct, it had to do with the network latency that was added by moving from a 2-Tier configuration to a 3-Tier configuration. That got us thinking about investigating and documenting this a bit. Below you can read some hopefully interesting facts.

You got all the tools in SAP NetWeaver to figure it out

What does that mean? With the functionality built into SAP NetWeaver you can measure the response time of a query as the DBMS sees it and, on the other side, the response time the application instance experiences. At least for SQL Server. The two functionalities we are talking about specifically are:

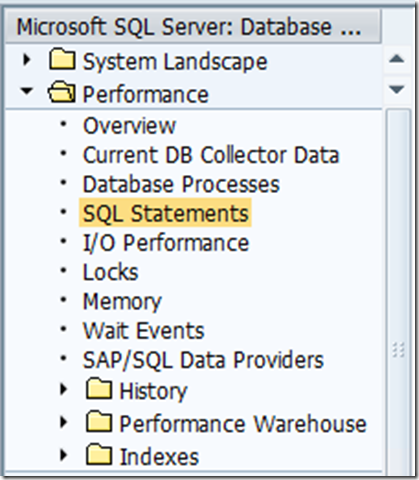

- DBACockpit – SQL Statements

- ST05 – Performance Trace

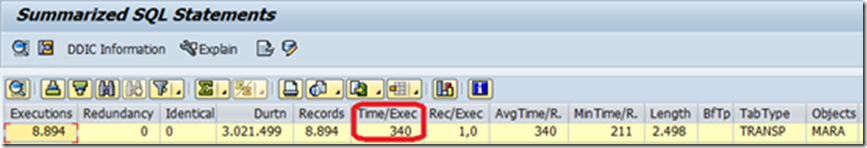

As implemented in DBACockpit for SQL Server, the data shown here:

is taken directly from the SQL Server instance. Or, to be more precise, from a DMV called sys.dm_exec_query_stats. SQL Server measures a lot of data about every query execution and stores it in the DMV. However, all of this is measured within the SQL Server instance. So, the time starts ticking when the query enters the SQL Server instance and stops when the results are available to be fetched by the SQL Server client used by the application (like ODBC, JDBC, etc.). Hence the elapsed time is recorded and measured purely within SQL Server, usually without taking network latency into consideration.
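To make the DMV side a bit more tangible, here is a minimal sketch of how an average elapsed time per execution can be derived from sys.dm_exec_query_stats. The DMV, sys.dm_exec_sql_text and the columns used are real SQL Server objects. The constant `QUERY_STATS_SQL`, the helper `avg_elapsed_us` and the sample numbers are assumptions made for illustration, and this is not necessarily the query DBACockpit itself issues.

```python
# Sketch only: QUERY_STATS_SQL and avg_elapsed_us are hypothetical names.
# The T-SQL text could be run with any SQL Server client; it is kept as a
# plain string here so the example needs no database connection.
QUERY_STATS_SQL = """
SELECT TOP (20)
       st.text AS statement_text,
       qs.execution_count,
       qs.total_elapsed_time,                          -- microseconds
       qs.total_elapsed_time / qs.execution_count AS avg_elapsed_us
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
ORDER BY qs.total_elapsed_time DESC;
"""


def avg_elapsed_us(total_elapsed_time_us: int, execution_count: int) -> float:
    """Average elapsed time per execution as seen inside SQL Server."""
    if execution_count <= 0:
        raise ValueError("execution_count must be positive")
    return total_elapsed_time_us / execution_count


# Illustrative numbers (not taken from a real trace): 9000 executions that
# add up to 801000 microseconds of elapsed time inside SQL Server.
print(avg_elapsed_us(801_000, 9_000))
```

Keep in mind that these values never include the network path or the client stack, which is exactly why they have to be compared with an ST05 trace.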

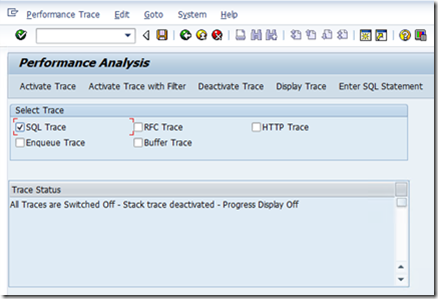

On the other side, the SAP ST05 Performance Trace:

is measuring query execution times at the point where the query leaves the application server instance and the result set returns. This means the time spent in network communication between the SAP application instance and the DBMS server is fully included in the measurement. It is the time the SAP application instance experiences. In the case of our batch job, it is the time that largely determines the run time. Especially for batch jobs that spend large amounts of time interacting with the DBMS.

Let’s measure a bit

In order to measure a bit and demonstrate the effects, we went a bit extreme by creating a job that spent nearly 100% of its time interacting with the DBMS. We then measured by performing the following steps:

- We flushed the SQL Server procedure cache with the T-SQL command dbcc freeproccache. Be aware that this command also drops the content of the SQL Server DMV sys.dm_exec_query_stats.

- We then started ST05 with the appropriate filters to trace the execution of our little job

- We then started our little job. The run time was just around 10 seconds

Our little job read around 9000 primary keys of the SAP ERP material master table MARA into an internal table. As the next step, it looped over the internal table and selected row by row, fully specifying the primary key. However, only the first 3 columns of every row were read.
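The access pattern of the job can be sketched roughly as follows. This is not the ABAP report we used and it does not talk to SQL Server. It is a stand-in that uses Python's built-in sqlite3 module with an in-memory table so that it runs anywhere. The function name `run_job`, the table layout and the column names are assumptions made for illustration.

```python
import sqlite3
import time


def run_job(n_rows: int = 9000):
    """Read all primary keys first, then select row by row via the full key."""
    if n_rows <= 0:
        raise ValueError("n_rows must be positive")
    conn = sqlite3.connect(":memory:")
    # Simplified stand-in for MARA: a two-column key plus two more columns.
    conn.execute(
        "CREATE TABLE mara (mandt TEXT, matnr TEXT, ersda TEXT, ernam TEXT, "
        "PRIMARY KEY (mandt, matnr))"
    )
    conn.executemany(
        "INSERT INTO mara VALUES (?, ?, ?, ?)",
        [("100", f"{i:018d}", "20170101", "TESTER") for i in range(n_rows)],
    )

    # Step 1: read the primary keys into an "internal table".
    keys = conn.execute("SELECT mandt, matnr FROM mara").fetchall()

    # Step 2: loop over the keys and issue one single-row SELECT per key,
    # reading only the first 3 columns.
    start = time.perf_counter()
    rows = []
    for mandt, matnr in keys:
        row = conn.execute(
            "SELECT mandt, matnr, ersda FROM mara "
            "WHERE mandt = ? AND matnr = ?",
            (mandt, matnr),
        ).fetchone()
        rows.append(row)
    elapsed_s = time.perf_counter() - start
    conn.close()

    # Number of rows read and average time per query in microseconds.
    return len(rows), elapsed_s / len(rows) * 1e6


if __name__ == "__main__":
    count, avg_us = run_job()
    print(f"{count} single-row selects, {avg_us:.1f} microseconds each on average")
```

The point of such a job is that every loop iteration is one full round trip to the database, so any per-query latency is paid thousands of times.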

Our first setup was to test with VMs that were running on rather old server hardware, a processor generation around 6 or 7 years old. But the goal of this exercise is not really to figure out the execution times as such, but the ratio between what we measure on the DBMS side and on the SAP side in the different configurations.

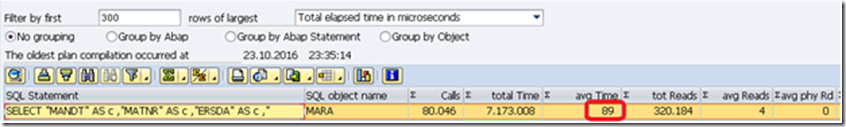

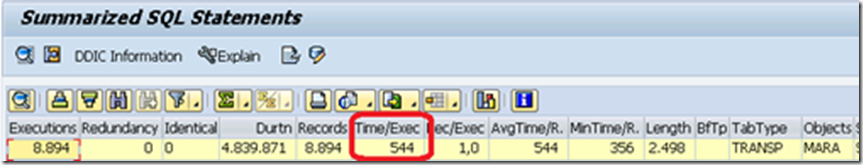

Hence in a first test using our older hosts with VMs we saw this after a first run:

So, we see 89 microseconds average execution time per query on the SQL Server side. Well, the data, as expected, was in memory. The query is super simple since it accesses a row by the primary key, just reading the first 3 columns of a relatively wide table. So yes, very well doable.

As we are going to measure the timing of running this report on the SAP application side, we are going to introduce the notion of an ‘overhead factor’. We define:

‘Overhead factor’ = The factor of time that is spent on top of the pure SQL Server execution time for network transfer of the data and processing in the ODBC client until the time measurement in the SAP logic is reached. So, if it takes x microseconds elapsed time in SQL Server to execute the query and we measure 2 times x in ST05, the ‘overhead factor’ is 2.
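Expressed as a small calculation, the definition looks like the sketch below. The function names `overhead_factor` and `overhead_time_us` are our own choice for illustration, and the input values are simply the x and 2 times x from the definition with x set to 100.

```python
def overhead_factor(sql_server_us: float, st05_us: float) -> float:
    """Ratio of the time seen by the SAP instance (ST05) to the time
    measured inside SQL Server (sys.dm_exec_query_stats)."""
    if sql_server_us <= 0:
        raise ValueError("sql_server_us must be positive")
    return st05_us / sql_server_us


def overhead_time_us(sql_server_us: float, st05_us: float) -> float:
    """Absolute time per query spent outside SQL Server (network, client)."""
    return st05_us - sql_server_us


# x = 100 microseconds in SQL Server, 2 * x measured in ST05.
print(overhead_factor(100.0, 200.0))   # 2.0
print(overhead_time_us(100.0, 200.0))  # 100.0
```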

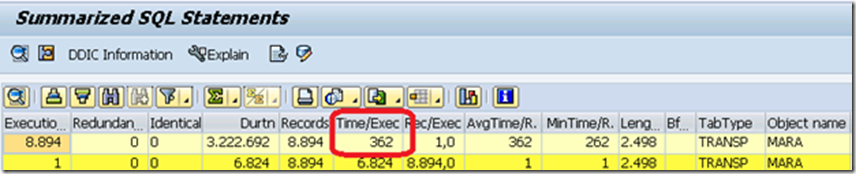

As we are running in a 2-Tier configuration, expectation would be that we add a few microseconds into this network and processing time. Looking at the summarized ST05 statistics of our trace we were a bit surprised to see this:

We measure 362 microseconds in our 2-Tier configuration. Compared to the time SQL Server requires to execute our super simple query, the response time as measured from the SAP side is roughly 4 times larger. In other words, our ‘overhead factor’ is 4.

The interesting question is now going to be how the picture is going to look in case of a 3-Tier system. The first test we are going to do is involving a DBMS VM and an SAP application server VM that are running on the same host server.

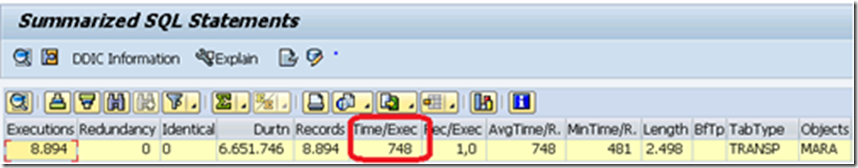

In this case the measurements in ST05 looked like:

With 544 microseconds on average. Means the ‘overhead factor’ increased to around 6.

In the second step let’s consider a scenario where the VMs are not hosted on the same server. The servers of the same type and same processor that we used for the next test were actually sitting in two different racks. Means we had at least two or three switches to have the traffic flowing between the VMs. This very fact was reflected in the ST05 trace immediately where we looked at this result:

Means our ‘overhead factor’ basically increased to a bit over 8.

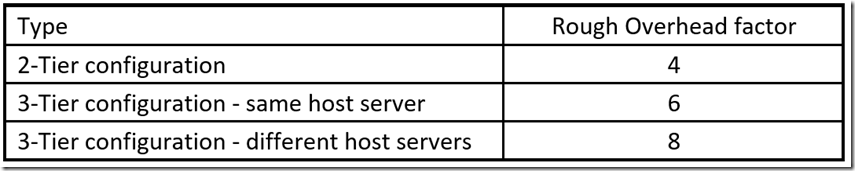

So, let’s summarize the results in this table:

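| Configuration | Average time per query | ‘Overhead factor’ |
| --- | --- | --- |
| Measured within SQL Server (sys.dm_exec_query_stats) | 89 microseconds | 1 |
| 2-Tier, measured in ST05 | 362 microseconds | around 4 |
| 3-Tier, both VMs on the same host, measured in ST05 | 544 microseconds | around 6 |
| 3-Tier, VMs on hosts in different racks, measured in ST05 | see ST05 trace result | a bit over 8 |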

Thus, we can summarize that the move from 2-Tier to 3-Tier can add significant overhead time, especially if more and more network components get involved. For SAP jobs this can mean that the run time can increase by factors in extreme cases as we tested them here. The more resource consuming a SAP logic is on the application layer, the less the impact of the network will be.
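A very simple model helps to see why the impact depends on how much work the job does on the application layer. The sketch below assumes that the run time is the time spent in the application logic plus the number of database round trips multiplied by the per-query time seen by the SAP instance. The function names, the one million round trips and the 1000 seconds of application time are hypothetical values chosen for illustration. Only the 362 and 544 microseconds come from the measurements above.

```python
def job_runtime_s(app_time_s: float, n_queries: int, per_query_us: float) -> float:
    """Run time = application-layer time + round trips * time per query."""
    return app_time_s + n_queries * per_query_us / 1e6


def slowdown(app_time_s: float, n_queries: int,
             before_us: float, after_us: float) -> float:
    """Factor by which the job gets slower when the per-query time changes."""
    before = job_runtime_s(app_time_s, n_queries, before_us)
    after = job_runtime_s(app_time_s, n_queries, after_us)
    return after / before


N_QUERIES = 1_000_000  # hypothetical number of single-row selects

# Job that does nothing but talk to the DBMS: the full ratio shows up.
db_bound = slowdown(0.0, N_QUERIES, before_us=362, after_us=544)

# Job that also spends 1000 s in application logic: the effect is diluted.
app_heavy = slowdown(1000.0, N_QUERIES, before_us=362, after_us=544)

print(f"DB-bound job:  {db_bound:.2f}x slower")
print(f"App-heavy job: {app_heavy:.2f}x slower")
```

With these assumed inputs the purely database-bound job slows down by roughly a factor of 1.5 when moving from the 2-Tier to the same-host 3-Tier value, while the application-heavy job only slows down by roughly 13%.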

What can you do, especially in case of virtualization?

- Try to leverage hardware for your private cloud that optimizes network traffic through architectures like SR-IOV (https://msdn.microsoft.com/en-us/windows/hardware/drivers/network/overview-of-single-root-i-o-virtualization--sr-iov- ). SR-IOV is also supported by VMware in more recent releases. Please check the documentation of your vSphere version.

- Especially for larger VMs of 16vCPUs or above use vRSS with the NIC drivers (https://technet.microsoft.com/en-us/library/dn383582(v=ws.11).aspx and https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/whitepaper/vmware-vsphere-pnics-performance-white-paper.pdf )

That assumes that the design principles from an infrastructure point of view are to have the compute infrastructure of the SAP DBMS layer and the application layer as close as possible together. ‘Together’ in the sense of distance, but also in the sense of having the least amount of network switches/routers and gateways in the network path that ideally is not miles in length.

Testing on Azure

The tests in Azure were conducted exactly with the same method and same report.

Tests within one Azure VNet

For the first test on Azure, we created a VNet and deployed our SAP system into it. Since the intent as well was to test a new network functionality of Azure, we used the DS15v2 VM type for all our exercises. The new functionality we wanted to test and demonstrate is called Azure Accelerated Networking and is documented here: https://azure.microsoft.com/en-us/documentation/articles/virtual-network-accelerated-networking-portal/

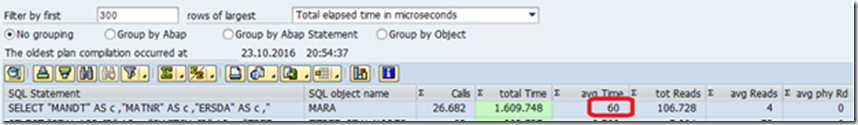

Executing our little report, in a 2-Tier configuration we measured:

60 microseconds to execute a single query in SQL Server. Looking at the ST05 values, we are experiencing 177 microseconds:

This is a factor of around 3.5 times between the pure time it takes on SQL Server and the time measured on the SAP instance that ran on the same VM as SQL Server.

For the first 3-Tier test, we deployed the two VMs, the VM running the database instance and the VM running the SAP application instance, without the ‘Azure Accelerated Networking’ functionality. As mentioned, the VMs are in the same Azure VNet.

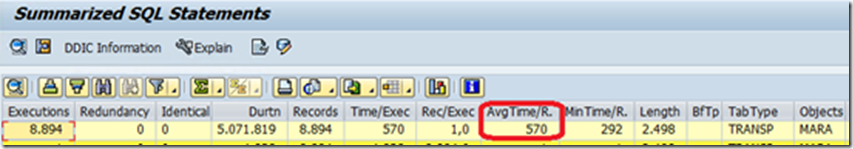

As we perform the tests, the results measured in ST05 on the dedicated SAP instance look like:

This means we are looking at 570 microseconds, which is nearly 3 times the time measured in the 2-Tier configuration and around 9.5 times the time SQL Server took to work on a single query execution.

As a next step, we configured the new ‘Azure Accelerated Networking’ functionality in both VMs. The database VM and the VM running the SAP instance were both using the acceleration. Repeating the measurements, we were quite positively surprised to see a result like this:

We are basically down to 340 microseconds per execution of the query, measured on the SAP application instance. A dramatic reduction of the impact the network stack introduces in virtualized environments.

The factor of overhead compared to the pure time spent on the execution on SQL Server is reduced to a factor of under 6. Or you cut out a good 40% of communication time.
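The arithmetic behind these statements can be checked with a few lines. The sketch below uses the values reported in this section: 60 microseconds inside SQL Server, 570 microseconds in ST05 without acceleration and 340 in ST05 with acceleration, taking the 340 figure as microseconds like the other measurements. The variable and function names are our own choice for illustration.

```python
SQL_SERVER_US = 60          # elapsed time per query inside SQL Server
ST05_PLAIN_US = 570         # ST05, 3-Tier, without Accelerated Networking
ST05_ACCELERATED_US = 340   # ST05, 3-Tier, with Accelerated Networking


def relative_reduction(before: float, after: float) -> float:
    """Share of the 'before' value that was cut away."""
    return (before - after) / before


factor_plain = ST05_PLAIN_US / SQL_SERVER_US
factor_accelerated = ST05_ACCELERATED_US / SQL_SERVER_US

# Reduction of the total per-query time as seen by the SAP instance.
total_cut = relative_reduction(ST05_PLAIN_US, ST05_ACCELERATED_US)

# Reduction of the pure overhead (ST05 time minus SQL Server time).
overhead_cut = relative_reduction(ST05_PLAIN_US - SQL_SERVER_US,
                                  ST05_ACCELERATED_US - SQL_SERVER_US)

print(f"overhead factor without acceleration: {factor_plain:.1f}")
print(f"overhead factor with acceleration:    {factor_accelerated:.1f}")
print(f"total time per query cut by:          {total_cut:.0%}")
print(f"time outside SQL Server cut by:       {overhead_cut:.0%}")
```

Depending on whether you look at the total time per query or only at the part spent outside SQL Server, the saving comes out at roughly 40% or roughly 45%.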

Additionally, we performed some other tests in 3-Tier SAP configurations in Azure where:

- We put the SAP instance VM and DBMS VM into an Azure Availability Set

- Where we deployed the SAP instance VM into a VM type that forced that VM onto another hardware cluster (SAP instance VM = GS4 and DBMS VM = DS15v2). See also this blog on Azure VM Size families: https://azure.microsoft.com/en-us/blog/resize-virtual-machines/

In all those deployments, without the ‘Azure Accelerated Networking’ functionality, we did not observe any significant change in the ST05 measurements. In all cases the single execution values measured with ST05 ended up between 530 and 560 microseconds.

So in essence, we showed for the Azure side:

- Azure, compared to on-premise virtualization, does not add any more overhead in communications.

- The new accelerated networking feature has a significant impact on the time spent in communications and data transport over the network.

- There is no significant impact having two Azure VMs communicating that run on different hardware clusters.

What are we missing? Yes, we are missing pure bare-metal to bare-metal measurements. The reason we left that scenario out is that most of our customers have at least one side of the 3-Tier architecture virtualized, or even both sides. Take, e.g., the SAP landscape at Microsoft. Except for the DBMS side of the SAP ERP system, all other components are virtualized. This means the Microsoft SAP landscape has a degree of virtualization of 99%, with a good portion of the virtualized systems already running on Azure. Since the tendency of customers is to virtualize their SAP landscapes more and more, we did not see any need to perform these measurements on bare-metal systems anymore.

Comments

- Anonymous

February 22, 2017

Thank you Jürgen, for another excellent insight! My questions:

1) In a concrete VM configuration (as ours) can I determine "my overhead" by repeating your test? And will it be specific to my landscape?

2) You took a very small query with very little data to transfer over the network. How about the overhead of more expensive queries? Does the factor for tiny queries also apply? Or would the "overhead formula" be more complex?

3) For clarification: The DB request times in the STAD records reflect the DB time PLUS the overhead. Right?

With this new knowledge my old problem persists - and now it's even worse: What I can see in the DBACockpit is the "SQL Server truth". But the query times are "wrong" from the ABAP program's perspective. So I only can tell the programmer:

* This ABAP SELECT consumes n milliseconds in the Database. This is not good ... (Many reasons possible ...)
* But the impact on the response time of the application of this not good query is much more,
* BUT I CANNOT TELL YOU - unless you can reproduce it.
* And then we will see the ABAP truth in the ST05 trace.

Things keep being complex...

Kind regards, Rudi