SQL Server Log Shipping – Bandwidth Analysis for Content Database Updates

A question that often comes up when talking about SharePoint and SQL Server log-shipping is “how much bandwidth will I need to make log-shipping work between my two farms?” It’s certainly a sensible question given these things need to be planned to avoid over-congestion preventing the Disaster-Recovery (DR) site from being able to restore logs in time, which would obviously break the entire DR farm concept.

Well, as should become apparent fairly quickly there’s no short question to this – you could just as well ask “how long is a piece of string?” – neither question on its own makes sense to ask but that’s not to say we can’t make some intelligent calculations that’ll help us figure out how much bandwidth we’ll need.

Obviously the amount of bandwidth is going to depends a lot of how many updates you make to the content-database – if you’re adding several hundred gigabytes of data a day then all those same transactions will need to be applied to the DR site too. So perhaps a more appropriate question is “how much transaction-log data does SharePoint generate for concrete data operations performed on a SharePoint application?”. That is a good question; from there we can work backwards to know how much bandwidth will be needed at a minimum if our assumptions on # of edits are correct. Let’s take a look.

Bandwidth Analysis Testing Methodology

For this analysis the testing was fairly simple; run then immediately suspend log-shipping backups before each test, then re-running the backup again immediately after each test to make sure the next backup/TRN file will only contain the transactions generated by each test. The file-size is then measured to estimate the bandwidth needed for that operation.

Testing was done in SharePoint 2013 and 2010 the same – the 2010 results are at the end of this article.

No analysis article is complete without a clever word-art diagram or graph of some kind, so here’s what we’re going to do:

Tests to Run in SharePoint

- Add – enter x10 files of 10mb each one.

- Edit (field) – edit each item & change a field value in each item added.

- Edit (file) – edit the file-contents of the 10mb attachments in each item added.

- Delete (to recycle-bin) – delete the items from a list to the recycle-bin.

- Delete (from recycle-bin) – permanently purge the items from the content-database/application.

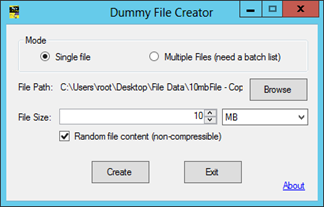

For these tests, a source file was generated with this tool, the excellent “Dummy File Creator” and then copied x10 times to give us x10 identical files of exactly 10 megabytes.

Here’s an example file:

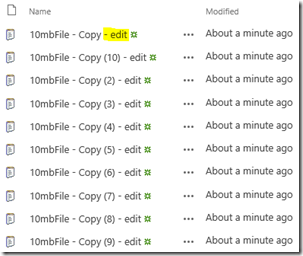

Here are the full set of files:

Tests in SharePoint 2013

Inserts

Let’s upload these files to a SharePoint documents library.

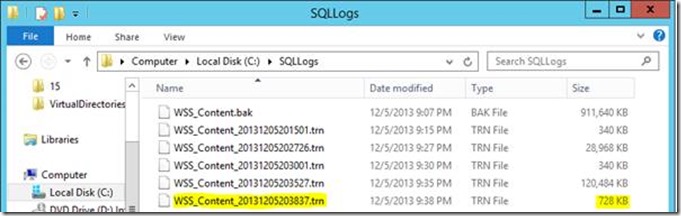

Now we start the suspended backup job to generate new transaction-log backups since the last backup.

Enable & trigger an incremental backup.

Resulting transaction-log file is 120mb.

Edits

Let’s update all 10 title fields and see what happens.

Force another backup.

Resulting file is < 1mb.

Edit Random File

Instead of editing the fields we now want to try editing the file itself.

Here we make a quick, dirty edit & save.

Resulting file is > 4mb. Why exactly its 4mb isn’t clear without deeper investigation.

Edit Word Document

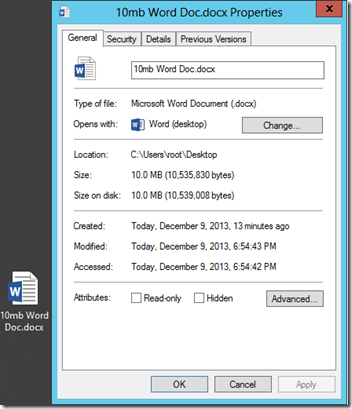

Just to compare, let’s do the same with a Word document in Word 2013. In this example I create a blank document and just embed the dummy file so it’s basically a container for the data.

Here’s the file contents – barely anything except the dummy-file too. We make an edit & upload again and get a very small update.

Yep, it’s 10mb. Now we’ll upload it & edit it from SharePoint.

Deletes

Now we delete the records to and from the recycle-bin.

Delete to recycle-bin:

After deleting from the recycle-bin, the resulting file was very small.

SharePoint 2013 Results Summary

Action |

TRN File Size |

Add 10mb file |

Approximately the size of each file added since last backup. |

Edit field |

Kilobytes per updated item. |

Edit above file. |

4 mb |

Edit 10mb Word Doc (2013) |

0.5mb |

Delete to recycle-bin |

< 100 Kilobytes per deleted item. |

Delete from recycle-bin. |

Almost nothing. |

Most of these results are fairly unsurprising; adding data is the most expensive operation. Editing too is also quite expensive although it’s interesting to see how Office edits are much more efficient in 2013 – that because only the delta between the old & new is saved to DB, rather than the entire file each time. Clever stuff.

Tests in SharePoint 2010

Many customers are still on 2010 so it would be unfair to leave it out. Almost all tests were the same except where the file-data was edited which gives us a 100% separate copy each save in 2010 whereas in 2013 we have a new feature called “shredded storage” for files, meaning (in short) we save the file then only the changed parts thereafter (instead of a whole new file). This makes updates much smaller in storage costs and therefore we see the 2013 bandwidth requirements are much lower.

Anyway, here’s the results.

Action |

TRN File Size |

Add 10mb file |

Same as 2013 |

Edit field |

Same as 2013. |

Edit above file. |

12mb |

Edit 10mb Word Doc (Word 2013) |

12.5mb |

Delete to recycle-bin |

Same as 2013. |

Delete from recycle-bin. |

Same as 2013. |

Calculating Bandwidth Needed

Expected Rate of Updates/Inserts a Day/Hour

This guide was done on the basis of a set number of adds/updates/deletes. As it turns out, only the additions & updates are really costly (less so for updates in 2013). So with this figure we know that if 10 users edit or add approximately 10mb of data an hour, we’ll need to be able to ship 100mb within an hour before the logs start backing up at the primary site.

Our pipe between sites therefore needs to be capable of delivering 1.6mb a minute, minimum, in order to maintain this 100mb of updates before another 100mb is backed-up. If we don’t hit this minimum bandwidth then our DR site will get further & further “behind” our primary site and cease to be an effective disaster recovery site anymore, until the transfer could catch-up again. How quickly we generate another 100mb of log-data is of course dependant on how many users insert or update data, so again, researching expected data updates from users is critical here.

What type of users will be on the site? If they’re anonymous they’ll almost certainly generate very little content so you can take that into account. How many editors will there be making changes? How many content contributors will be adding new material and how big will it be? Now we’re starting to get an idea of how much content we’ll be generating an hour and therefore how much bandwidth we’ll need.

Transferring of Data across a Network

What we’ve looked at in this article is just the size of the log-file generated by each action. That doesn’t of course necessarily directly translate into bandwidth needed because a file of 100mb may be compressed to 50mb if the compression algorithm used is good enough (and the data is compressible). For shipping log-files, a recommended practise is to use Distributed File System that comes in Windows Server as it does come with built-in compression for reducing bandwidth costs. Quite how much reduction however isn’t covered here for now; I suspect the TRN files are already quite compressed so I doubt the reduction in size for transfer would be much but it would be some. More info on DFS @ https://technet.microsoft.com/en-us/library/jj127250.aspx

Conclusion

That’s it; with this information you should be able to figure out how much bandwidth will be needed once you also have a rough figure on how many updates/inserts you’re expecting. Good luck!

// Sam Betts