The case of the server who couldn’t join a cluster – operation returned because the timeout period expired

Hi everyone! Today I am going to present a very interesting case which we recently handled in EMEA CSS. The customer wanted to build a Windows Server 2008 SP2 x64 Failover Cluster but wasn’t able to add any nodes to it. The “Add Node” wizard timed out and the following error was visible in the “addnode.mht” file in the %SystemRoot%\Cluster\reports directory:

The server “nodename” could not be added to the cluster. An error occurred while adding node “nodename” to cluster “clustername”. This operation returned because the timeout period expired.

First of all let me give you some details of the setup:

- Windows Server 2008 SP2 x64 Geospan Failover Cluster

- Node + Disk majority quorum model

- EMC Symmetrix storage

- Powerpath 5.3 SP1

- EMC Cluster Extender 3.1.0.20

- Mpio.sys 6.0:6002.18005 / Storport.sys 6.0:6002.22128 / Qlogic QL2300 HBA with v 9.1:8.25

- Broadcom NetXtreme II NICs v. 4.6:55.0

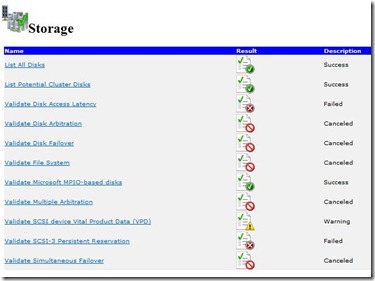

We initially saw some Physical Disk related errors/warnings in the validation tests:

- SCSI page 83h VPD descriptors for the same cluster disk 0 from different nodes do not match

- Successfully put PR reserve on cluster disk 0 from node “nodename” while it should have failed

- Cluster Disk 0 does not support Persistent Reservation

- Cluster Disk 1 does not support Persistent Reservation

- Failed to access cluster disk 0, or disk access latency of 0 ms from node “nodename” is more than the acceptable limit of 3000 ms status 31

The following was also noticed in the cluster log file:

00000f94.00001690::10:21:48.502 CprepDiskFind: found disk with signature 0xa34c96dc

00000f94.00001690::10:21:48.504 CprepDiskPRUnRegister: Failed to unregister PR key, status 170

00000f94.00001690::10:21:48.504 CprepDiskPRUnRegister: Exit CprepDiskPRUnRegister: hr 0x800700aa

As described in the “Microsoft Support Policy for Windows Server 2008 Failover Clusters”, this behavior can be ignored for this particular (geographically dispersed) cluster due to the data replication model in use.

"Geographically dispersed clusters - Failover Cluster solutions that do not have a common shared disk and instead use data replication between nodes may not pass the Cluster Validation storage tests. This is a common configuration in cluster solutions where nodes are stretched across geographic regions. Cluster solutions that do not require external shared storage failover from one node to another are not required to pass the Storage tests in order to be a fully supported solution. Please contact the data replication vendor for any issues related to accessing data on failover"
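As a side note on the storage log excerpt above, the "status 170" and "hr 0x800700aa" values are the same error in two encodings. The following is a minimal sketch of the standard HRESULT bit layout; the function name `decode_hresult` is our own and not part of any cluster tooling.

```python
FACILITY_WIN32 = 7  # facility used when a Win32 error is wrapped in an HRESULT


def decode_hresult(hr: int) -> dict:
    """Split a 32-bit HRESULT into failure flag, facility and code."""
    hr &= 0xFFFFFFFF
    return {
        "failure": bool(hr >> 31),        # top bit set means failure
        "facility": (hr >> 16) & 0x7FF,   # bits 16-26
        "code": hr & 0xFFFF,              # bits 0-15
    }


fields = decode_hresult(0x800700AA)
print(fields)
if fields["facility"] == FACILITY_WIN32:
    # 170 is ERROR_BUSY ("The requested resource is in use")
    print("Win32 error code:", fields["code"])
```

So the HRESULT in the log simply wraps Win32 error 170 (ERROR_BUSY), which matches the status reported one line earlier.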

From this point on, what we needed to focus on was a pure connectivity issue between the nodes. As we cross referenced several cluster related log files, we noticed this particular error from the Cluster Server, approximately at the same time the wizard gave up due to timeouts:

000012f8.00000758::2010/04/09-13:15:44.518 INFO [NETFT] Disabling IP autoconfiguration on the NetFT adapter.

000012f8.00000758::2010/04/09-13:15:44.518 INFO [NETFT] Disabling DHCP on the NetFT adapter.

000012f8.00000758::2010/04/09-13:15:44.518 WARN [NETFT] Caught exception trying to disable DHCP and autoconfiguration for the NetFT adapter. This may prevent this node from participating in a cluster if IPv6 is disabled.

000012f8.00000758::2010/04/09-13:15:44.518 WARN [NETFT] Failed to disable DHCP on the NetFT adapter (ethernet_7), error 1722.

[…]

00001460.00001038::2010/04/09-13:16:44.987 ERR [CS] Service OnStart Failed, WSAEADDRINUSE(10048)' because of '::bind(handle, localEP, localEP.AddressLength )'
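When cross-referencing logs like these, it can help to filter out everything except warnings and errors. The sketch below assumes the line layout visible in the excerpt above (process.thread IDs, timestamp, level, component in brackets, message); the regular expression and helper names are our own illustration, not an official log parser.

```python
import re

# Layout assumed from the excerpt: pid.tid::timestamp LEVEL [COMPONENT] message
LINE_RE = re.compile(
    r"^(?P<pid>[0-9a-f]{8})\.(?P<tid>[0-9a-f]{8})::"
    r"(?P<timestamp>\S+)\s+"
    r"(?P<level>INFO|WARN|ERR|DBG)\s+"
    r"\[(?P<component>[^\]]+)\]\s+"
    r"(?P<message>.*)$"
)


def parse_line(line):
    """Return the fields of one cluster log line, or None if it does not match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


SAMPLE = [
    "000012f8.00000758::2010/04/09-13:15:44.518 INFO [NETFT] Disabling DHCP on the NetFT adapter.",
    "000012f8.00000758::2010/04/09-13:15:44.518 WARN [NETFT] Failed to disable DHCP on the NetFT adapter (ethernet_7), error 1722.",
    "00001460.00001038::2010/04/09-13:16:44.987 ERR [CS] Service OnStart Failed, WSAEADDRINUSE(10048)' because of '::bind(handle, localEP, localEP.AddressLength )'",
]

# Keep only warnings and errors
problems = [e for e in map(parse_line, SAMPLE) if e and e["level"] in ("WARN", "ERR")]
for entry in problems:
    print(entry["timestamp"], entry["level"], entry["component"], entry["message"])
```

To use it on a real log, replace `SAMPLE` with the lines of the generated cluster log file.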

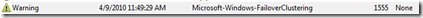

In addition, we caught this warning Event ID 1555 from Failover Clustering in the system event log of the node:

A good description of this event can be found on TechNet:

Event ID 1555 – Cluster Network Connectivity

https://technet.microsoft.com/en-us/library/cc773407(WS.10).aspx

A failover cluster requires network connectivity among nodes and between clients and nodes. Problems with a network adapter or other network device (either physical problems or configuration problems) can interfere with connectivity. Attempting to use IPv4 for '%1' network adapter failed. This was due to a failure to disable IPv4 auto-configuration and DHCP. IPv6 will be used if enabled; else this node may not be able to participate in a failover cluster.

We implemented the following troubleshooting steps and checked the related settings, but unfortunately the behavior did not improve:

· IPV6 was un-checked as a protocol -> re-enabled it

· Disable Teredo as per KB969256 -> doesn’t apply to SP2

· Disable Application Layer Gateway service as per KB943710 –> done

· Stop / Disable service & uninstall Sophos Antivirus software from both nodes –> done

· Virtual MAC of the NetFT adapter was different on both nodes (they are identical when the nodes are sysprepped after installing the Failover Cluster feature)

· Disabled all physical NICs except one on both nodes & configured it as “allow cluster to use this network” as well as “allow clients to connect over this network”

· Disabled TCP Chimney, RSS and NetDMA manually via netsh as per KB951037

netsh int tcp set global RSS=Disabled

netsh int tcp set global chimney=Disabled

netsh int tcp set global autotuninglevel=Disabled

netsh int tcp set global congestionprovider=None

netsh int tcp set global ecncapability=Disabled

netsh int ip set global taskoffload=disabled

netsh int tcp set global timestamps=Disabled

[Dword] EnableTCPA=0 in “HKLM\SYSTEM\CCS\Services\Tcpip\Parameters\”

· Rebooted several times in the process and even disabled the HBAs in Device Manager to completely cut off from the storage.

At this point we decided to bump the cluster debug log level to 5, reproduce the problem and hope we get more details than with default logging:

· Stopped the cluster service

· Renamed the “%SystemRoot%\Cluster\reports” directory to “reports_old”

· Created a new reports directory

· Restarted the cluster service

· Enabled log level 5 with cluster . /prop clusterloglevel=5

· Added a node with cluster . /add /node:"nodename"

· Generated the cluster log with cluster log /g after the add node operation timed out.
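The steps above can be sketched as a small dry-run helper that only prints the command sequence. The three `cluster` commands are taken from the list as written; `net stop clussvc`, `net start clussvc`, `ren` and `mkdir` are our assumption of how the service and directory steps could be done from a command prompt, and the function name is hypothetical.

```python
from typing import List


def build_debug_steps(node: str, log_level: int = 5) -> List[str]:
    """Return the command sequence for reproducing the join with verbose logging."""
    reports = r"%SystemRoot%\Cluster\reports"
    return [
        "net stop clussvc",                         # assumption: stop the cluster service
        f'ren "{reports}" reports_old',             # assumption: keep the old reports aside
        f'mkdir "{reports}"',                       # assumption: fresh reports directory
        "net start clussvc",                        # assumption: start the service again
        f"cluster . /prop clusterloglevel={log_level}",
        f'cluster . /add /node:"{node}"',
        "cluster log /g",                           # run after the add node attempt times out
    ]


steps = build_debug_steps("nodename")
for number, command in enumerate(steps, start=1):
    print(f"{number}. {command}")
```

Nothing is executed here; the output is meant to be reviewed and then run by hand on the node.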

What we saw was that the counter went up to 100% and remained stuck at this last phase, which was somewhat expected. The important thing was to catch the timeout in more detailed logs:

“Waiting for notification that node “nodename” is a fully functional member of the cluster”

“This phase has failed for cluster object “nodename” with an error status of 1460 (timeout)".

ERR [IM] Unable to find adapter[….]

DBG [HM] Connection attempt to node failed with WSAEADDRNOTAVAIL(10049): Failed to connect to remote endpoint
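The numeric codes quoted throughout this case are standard Win32, RPC and Winsock error codes. Here is a minimal sketch that annotates them in a log message; the table only covers the codes that appear in this article, and the helper name `annotate` is our own.

```python
import re

# Standard Windows error codes that appear in this article
KNOWN_CODES = {
    31: "ERROR_GEN_FAILURE",
    170: "ERROR_BUSY",
    1460: "ERROR_TIMEOUT",
    1722: "RPC_S_SERVER_UNAVAILABLE",
    10048: "WSAEADDRINUSE",
    10049: "WSAEADDRNOTAVAIL",
}

# Skip digits that are glued to a word, timestamp or date (e.g. ethernet_7, 13:15:44)
NUMBER_RE = re.compile(r"(?<![\w:./-])\d+\b")


def annotate(text):
    """Return (code, symbolic name) pairs for the known codes found in text."""
    found = []
    for token in NUMBER_RE.findall(text):
        code = int(token)
        if code in KNOWN_CODES and (code, KNOWN_CODES[code]) not in found:
            found.append((code, KNOWN_CODES[code]))
    return found


print(annotate("Failed to disable DHCP on the NetFT adapter (ethernet_7), error 1722."))
print(annotate("This phase has failed with an error status of 1460 (timeout)"))
```

The lookup is deliberately naive: any standalone number that happens to equal a known code is reported, so it is a reading aid rather than a diagnosis.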

This information didn’t bring any groundbreaking clues to the case, so we decided to sit back for a minute, recap everything we did and knew so far and try to find the “missing link”:

· The customer had other Windows Server 2008 x64 SP2 clusters in production with no issues

· They used storage from the same

· IPv6 is enabled by default on every adapter in Windows Server 2008, but the customer decided to “unclick” it because they didn’t really have the infrastructure ready for it

· IPv4 IPs were static on both nodes, gateway and DNS servers manually assigned

· Everything looked fine and yet…the NetFT adapter seemed to have a problem.

The fact that they used a static configuration, coupled with their tendency to disable features that were not implemented or needed, led us to check the DHCP Client service. Well, it was actually stopped and disabled! We also took a look at the TCP/IP parameters in the registry and noticed that IPv6 was also (globally) disabled.

We used the information in KB929852, removed the DisabledComponents value under HKLM\SYSTEM\CCS\Services\Tcpip6\Parameters\ and rebooted the servers. Before the reboot we had also started the DHCP Client service manually and changed its start type to Automatic. As soon as the nodes were up and running, we initiated another node join and the other node joined successfully in less than 10 seconds!
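To check a node for the same condition, the DisabledComponents value can be read and decoded. The sketch below assumes the bit meanings documented in KB929852 and the Tcpip6 service key where that KB places the value; the function names are our own, and the registry read is skipped when not running on Windows.

```python
import sys

# Bit meanings of DisabledComponents, as documented in KB929852
FLAGS = {
    0x01: "all tunnel interfaces disabled",
    0x02: "6to4 disabled",
    0x04: "ISATAP disabled",
    0x08: "Teredo disabled",
    0x10: "IPv6 disabled on non-tunnel interfaces (LAN, PPP)",
    0x20: "IPv4 preferred over IPv6",
}


def describe_disabled_components(value):
    """Return a description for every known bit set in value."""
    return [text for bit, text in FLAGS.items() if value & bit]


def read_disabled_components():
    """Read the value from the registry; None if absent or not on Windows."""
    if sys.platform != "win32":
        return None
    import winreg
    path = r"SYSTEM\CurrentControlSet\Services\Tcpip6\Parameters"
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, path) as key:
            value, _value_type = winreg.QueryValueEx(key, "DisabledComponents")
            return value
    except FileNotFoundError:
        return None


value = read_disabled_components()
if value is None:
    print("DisabledComponents is not set (or this is not a Windows machine)")
else:
    print(hex(value), describe_disabled_components(value))
```

The state of the DHCP Client service can be checked separately with `sc query Dhcp`, and its start type can be set back to automatic with `sc config Dhcp start= auto`.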

The customer was thrilled to have his cluster ready in time for production go-live and we were happy to have played the decisive role in reaching the deadline.

As always feel free to comment/rate this article if you think this information was helpful to you as well.

Bogdan Palos

- Technical Lead / Enterprise Platforms Support (Core)

Comments

Anonymous

January 01, 2003

In our case the same thing was applicable. We are not using DHCP in our server farm. Still, DHCP is a MUST just for giving the random IP address to the virtual NICs on both nodes (which get created automatically at the time of Windows cluster configuration). This keeps both nodes in communication for heartbeats. Please reply for any clarification.

Anonymous

January 01, 2003

Dear All, We have gone through this error... We have spent almost 6 Hrs to resolve this issue. Solution : We enabled DHCP Services, which were disabled at the time of Hardening. Everything is working fine now. We have created cluster successfully. Thanks, .::[ashX]::.

Anonymous

January 01, 2003

In my case, the problem arose because the cluster network Live Migration was off

http://blog.it-kb.ru/2014/10/31/server-could-not-be-added-to-the-cluster-hyper-v-waiting-for-notification-that-node-is-a-fully-functional-member-of-the-cluster-error-code-is-0x5b4-unable-to-successfully-cleanup/

Anonymous

January 01, 2003

Hello Everyone , Just wanted to appreciate the efforts you put in to troubleshoot this issue , Just wanted to share my Ideas on this .

- The DHCP Client service needs to be started and running on the nodes, because when we add a node or bring a node up in a cluster, the NetFT driver uses APIPA to assign a non-routable IP address to itself, and this driver is responsible for mapping the cluster networks and creating the routing between them. Since the DHCP Client service was stopped, the adapter never got an IP address and didn't function. By default the cluster uses IPv6 to establish connectivity between the nodes, so if you do not want to use IPv6, please make sure you disable it completely on all nodes; otherwise it may cause communication delays and transient flapping in cluster networks. Please feel free to ask if you have any doubts or concerns. Thank you

Anonymous

August 18, 2011

but if you're NOT using DHCP for anything, does it still need to be enabled? thx.

Anonymous

November 23, 2011

This document really helped to troubleshoot the same cluster node addition timeout issue...thank u so much

Anonymous

September 02, 2012

Spent some frantic moments looking at the exact same issue, wanted to tell you what fixed my issue. A little known bug with LANMAN: support.microsoft.com/.../971277. Under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\LanmanServer\DefaultSecurity, delete the value ‘SrvsvcDefaultShareInfo’ and restart the Server service. Hope this saves someone else some time.

Anonymous

April 18, 2013

The information in KB929852 works in my case, win2k8 r2 sp1 DC hyper v, great and thnks for share your knowledge..

Anonymous

December 03, 2014

Same issue here, DHCP client was stopped and disabled as part of hardening, and was found to be the cause.

Anonymous

December 16, 2014

I am facing the same issue, but I am unable to find DisabledComponents registry key in HKLM\SYSTEM\CCS\Services\Tcpip\Parameters

I have verified that DHCP Client service is running on both nodes. I have unchecked the IPv6 in network adapter properties on both nodes.

Anonymous

August 06, 2015

I had the DisabledComponents on only 1 node. Removed that, started DHCP client (set to automatic) and restarted the service and the node added like a charm. Thank u so much!

Anonymous

September 28, 2015

So is IPv6 needed or not, to allow implementation of Windows Failover Clustering for SQL AlwaysOn Availability Groups?