Automating Capture, Save and Analysis of HTTP Traffic – Part I

Capturing HTTP traffic is a common task that most of us perform while analyzing various aspects of a website. The ultimate goal may vary, but to achieve it you need some tooling to capture the traffic, and you may also want to do it in an automated fashion so that productivity does not suffer. In this blog post I will describe how to use PowerShell to capture and save the details of the communication that takes place between a passive client (a browser, to be exact) and a server (the website that hosts the pages). The next part will describe how to analyze the captured data and derive meaningful results from it, again in an automated fashion using PowerShell.

- Capture Traffic + Save To Log Files (Covered in this post)

- Perform Analysis On Logs (Covered in the next post here)

The diagram below illustrates this:

I have chosen to use HttpWatch as the primary tool for capturing and analyzing HTTP traffic. If you are not familiar with this product, take a look at their website for details and download the basic version, which is available at no cost. I also recommend reading their online API documentation, although it's not necessary for following this blog post, as I will explain the API calls used in the scripts. If you choose the free basic version of HttpWatch, I recommend using the "csv" format for log output (explained later in this post); this ensures that you can read the log files later without using the HttpWatch API.

For the record, I am not affiliated with HttpWatch in any way.

Apart from HttpWatch, the only software you need is PowerShell v2 or above and Internet Explorer (IE 8 or 9 will work). The scripts will not run on PowerShell v1, because they rely on features introduced in v2 such as [CmdletBinding()], parameter attributes and <# #> block comments.

Before you begin, please download the files here. The download contains the following:

- Capture Traffic + Save Log files (GatherPerfCountersForPages.ps1)

- Globals.ps1 (This is a global script file containing some utility functions but nothing related to automation)

- Input.csv (A sample CSV file containing the URLs and the log file name to use for each. More on it later in this post)

- Output folder: Contains sample output log files in ".csv" and ".hwl" formats.

I have kept the capture and analysis parts separate for maximum flexibility. The first script, GatherPerfCountersForPages.ps1, produces HttpWatch log files, which the second script reads as input for its analysis. Because the log files are not specific to our scripts, you can also feed in any other HttpWatch log files captured outside the script (e.g. by browsing to a website manually and then saving the log with the ".hwl" extension). I will come back to this point when we explore the analysis part; for now, let's focus on capturing the traffic and storing it on the file system.

Capturing Traffic & Saving It To Log Files

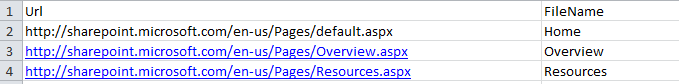

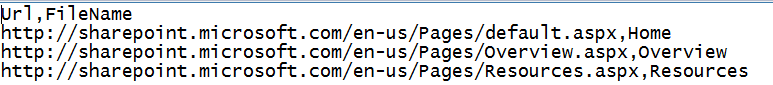

The first task is to create a list of URLs for the script to browse. You can save this list in a CSV file (take a look at Input.csv, which is provided as part of the download). Along with each URL you also need to provide a friendly name for the log file that will store the detailed trace for that URL; the script reads these from the Url and FileName columns. Once done, your CSV file should look like the following screenshots, which show the file being edited in Excel and in Notepad, but you can use whichever editor you prefer. I am using https://sharepoint.microsoft.com as an example because it's a publicly available anonymous site. The URLs are for the Home, Overview and Resources pages.
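Since the script reads each row's Url and FileName columns, a mistake in the file (an empty cell, or a name containing a character that is not allowed in file names) only surfaces once the browser is already running. A quick pre-flight check can catch this earlier. The helper below is hypothetical and not part of the download; it assumes the column headers are exactly Url and FileName.

```powershell
# Hypothetical pre-flight check (not part of the download): verify that every row
# of the input CSV has a Url and a FileName that is usable as a log file name.
function Test-UrlListCsv {
    param([Parameter(Mandatory=$true)][string] $Path)

    $problems = @()
    $invalidChars = [System.IO.Path]::GetInvalidFileNameChars()
    $row = 1                      # row 1 is the header line
    foreach ($entry in Import-Csv $Path) {
        $row++
        if ([string]::IsNullOrEmpty($entry.Url)) {
            $problems += "Row ${row}: Url is empty"
        }
        if ([string]::IsNullOrEmpty($entry.FileName)) {
            $problems += "Row ${row}: FileName is empty"
        } elseif ($entry.FileName.IndexOfAny($invalidChars) -ge 0) {
            $problems += "Row ${row}: FileName '$($entry.FileName)' contains invalid characters"
        }
    }
    # The leading comma keeps the result an array, even when it is empty
    return ,$problems
}
```

Running Test-UrlListCsv "C:\Script\Automation\Input.csv" before the capture script should return nothing when the file is well formed.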

Input.csv (Excel 2010)

Input.csv (Notepad)

With the CSV file ready, let's look at the script GatherPerfCountersForPages.ps1. This script reads the URLs from the CSV file and then opens one page at a time, logs its trace, saves the log to the file system and moves on to the next page. The pages are browsed in the same order as they appear in the CSV file, so if the sequence is important, make sure the page URLs are in the correct order. I strongly recommend downloading all the scripts from the link provided at the beginning of the post rather than copying and pasting from the blog itself, because unsupported characters may be copied as part of the script.

```powershell
# ******************************************************************************
# THIS SCRIPT AND ANY RELATED INFORMATION ARE PROVIDED "AS IS" WITHOUT WARRANTY
# OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE
# IMPLIED WARRANTIES OF MERCHANTABILITY AND/OR FITNESS FOR A PARTICULAR PURPOSE.
# ******************************************************************************

<#
.SYNOPSIS
    Capture the complete page request/response cycle and save the details to
    the file system for later analysis. The URLs are read from a CSV file and
    visited one at a time, in sequential order.
.DESCRIPTION
    args[0] --> <csvFile>: Path to the CSV file containing the URL(s) and the corresponding friendly log file name
    args[1] --> <filePath>: Location to save the log files
    args[2] --> <fileExtension>: Extension of the log file (".csv" or ".hwl"; by default the log file is saved with the .csv extension)
    args[3] --> <OutputToFile>: $true | $false. If $true, output is written to a file; otherwise text is displayed on the console.
.EXAMPLE
    .\GatherPerfCountersForPages.ps1 "C:\Files\Urls.csv" "C:\Files\" ".hwl" $false
.EXAMPLE
    .\GatherPerfCountersForPages.ps1 "C:\Files\Urls.csv" "C:\Files\" ".csv" $true | Out-File "C:\Files\Results.txt"
.LINK
    https://blogs.msdn.com/b/razi/archive/2013/01/27/automating-capture-save-and-analysis-of-http-traffic-part-i.aspx
.NOTES
    File Name    : GatherPerfCountersForPages.ps1
    Author       : Razi bin Rais
    Prerequisite : PowerShell V2 or above, HttpWatch, Globals.ps1
#>


Function GatherPerfCountersForPages {
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory=$true,Position=0)][string] $csvFile,
        [Parameter(Mandatory=$true,Position=1)][string] $filePath,
        [Parameter(Mandatory=$true,Position=2)][string] $fileExtension,
        [Parameter(Mandatory=$false,Position=3)][bool] $OutputToFile
    )

    # Only ".hwl" and ".csv" are supported; a value that matches neither
    # (hence -and) falls back to ".csv"
    if ($fileExtension -ne ".hwl" -and $fileExtension -ne ".csv") {
        $fileExtension = ".csv"
    }

    # Create the HttpWatch controller and attach it to a new IE instance
    $httpWatchController = New-Object -ComObject "HttpWatch.Controller"
    $plugin = $httpWatchController.IE.New()
    $plugin.Log.EnableFilter($false)   # log everything, no filtering

    foreach ($csv in Import-Csv $csvFile) {
        WriteToOutPut ("Browsing:- " + $csv.Url) "Yellow" $OutputToFile

        # Empty the log, clear the cache, start recording and browse to the page
        $plugin.Clear()
        $plugin.ClearCache()
        $plugin.Record()
        $plugin.GotoURL($csv.Url)
        [void]$httpWatchController.Wait($plugin, -1)   # -1: wait until the page is fully loaded

        # Build the full log file name and then save it
        $fileName = Join-Path $filePath ($csv.FileName + $fileExtension)
        WriteToOutPut ("Saving the log at " + $fileName) "Yellow" $OutputToFile

        switch ($fileExtension.ToUpper()) {
            ".HWL"  { $plugin.Log.Save($fileName) }
            ".CSV"  { $plugin.Log.ExportCSV($fileName) }
            default { $plugin.Log.ExportCSV($fileName) }
        }

        $plugin.Stop()
    }

    $plugin.CloseBrowser()
}

# Dot-source Globals.ps1 (expected in the current directory) so that its
# utility functions, such as WriteToOutPut, are available in this scope
. .\Globals.ps1
GatherPerfCountersForPages $args[0] $args[1] $args[2] $args[3]
```


Let's go through the core functionality of the GatherPerfCountersForPages function. It takes four parameters: the first three are mandatory and the last one is optional. The following table explains their purpose:

| Parameter | Description |
|---|---|
| csvFile | Full path to the input CSV file containing the URLs and the file name for each log file. The execution context must have read access to this file. |
| filePath | Path to the folder where the log files are saved. The execution context must have write access to this folder. |
| fileExtension | Extension to use for the log files. The currently supported extensions are ".csv" and ".hwl". The default is ".csv". |
| OutputToFile | A Boolean flag ($true or $false). When $true, the verbose output is saved to an output file. This is an optional parameter. |
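Because the script falls back to ".csv" for any unsupported value, a mistyped extension such as ".hw1" still runs but produces the wrong format. One alternative is to let PowerShell's own parameter validation reject unsupported values. The sketch below shows the idea on a hypothetical helper that only builds the log file name; it also uses Join-Path so that the trailing backslash on filePath becomes optional. ValidateSet is available from PowerShell v2 onwards.

```powershell
# Hypothetical helper: PowerShell itself rejects unsupported extensions
# instead of the script silently falling back to ".csv".
function Get-LogFileName {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory=$true,Position=0)][string] $filePath,
        [Parameter(Mandatory=$true,Position=1)][string] $logName,
        [Parameter(Position=2)][ValidateSet(".csv", ".hwl")][string] $fileExtension = ".csv"
    )
    # Join-Path works whether or not $filePath ends with a path separator
    Join-Path $filePath ($logName + $fileExtension)
}
```

For example, Get-LogFileName "C:\Script\Automation" "Home" ".hwl" returns C:\Script\Automation\Home.hwl on Windows, while passing ".txt" fails at parameter binding, before any browser is started.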

The first step is to create a new object based on the COM type "HttpWatch.Controller". This is the standard way of creating a COM object, and it gives you programmatic access to HttpWatch functionality. Next, a new instance of Internet Explorer is created and assigned to $plugin. I create a new instance because I find it useful to have a clean instance every time I execute the script, with no unknowns. Filtering is also switched off to ensure that the log file does not leave anything out. Filtering in HttpWatch hides parts of the trace based on conditions; when accessed programmatically it can only be enabled or disabled, while the conditions themselves can only be set through the UI.
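In the script, CloseBrowser is only reached when the loop runs to completion, so if a run stops part-way (Ctrl+C, or an error that terminates the script) the IE instance stays open. A minimal sketch of one way to guarantee cleanup is a try/finally wrapper. The function name and its -Action scriptblock are hypothetical; the controller is passed in as a parameter so the wrapper can be exercised without HttpWatch, and in real use you would pass the object created with New-Object -ComObject "HttpWatch.Controller".

```powershell
# Hypothetical wrapper: open a new IE instance through HttpWatch, run the
# supplied work against its plugin, and always close the browser afterwards.
function Invoke-WithHttpWatchPlugin {
    param(
        [Parameter(Mandatory=$true)] $Controller,            # e.g. New-Object -ComObject "HttpWatch.Controller"
        [Parameter(Mandatory=$true)][scriptblock] $Action    # receives the plugin as its only argument
    )
    $plugin = $Controller.IE.New()
    try {
        $plugin.Log.EnableFilter($false)   # keep every request in the log
        & $Action $plugin
    } finally {
        $plugin.CloseBrowser()             # runs even if $Action throws or the user presses Ctrl+C
    }
}
```

The foreach loop from the script would then become the body of the -Action scriptblock, using the plugin it receives as its argument.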

With the $plugin object ready, a foreach loop reads the CSV file using the Import-Csv cmdlet, which makes it easy to use the Url and FileName columns of each row as properties. Next come the calls to Clear(), ClearCache() and Record(). Clear() clears any traffic already captured in the current session, ClearCache() clears the browser cache, and Record() starts the recording that captures the traffic.
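The order of these three calls is what gives each page a clean measurement: the log is emptied and the cache cleared before recording starts, so each log contains only that page's traffic, loaded with an empty browser cache. If you want to reuse this step, it can be factored into a small helper like the hypothetical one below; $Plugin is the object returned by $httpWatchController.IE.New().

```powershell
# Hypothetical helper: reset the HttpWatch log and the browser cache, then start recording.
# $Plugin is the object returned by $httpWatchController.IE.New().
function Start-CleanRecording {
    param([Parameter(Mandatory=$true)] $Plugin)
    $Plugin.Clear()        # discard traffic captured for the previous page
    $Plugin.ClearCache()   # so the next page load starts with an empty cache
    $Plugin.Record()       # start capturing traffic
}
```

Inside the loop, the three calls would then collapse to Start-CleanRecording $plugin.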

With recording activated, the GotoURL method is called with $csv.Url to browse to the URL from the CSV file. At this point you will notice a new IE instance open and start loading the page. One important consideration is to give the page enough time to render completely before moving on to the next page. This is achieved with the Wait method of the controller object. Wait takes two parameters: the first is $plugin, which is responsible for interacting with the IE instance, and the second is a timeout in seconds after which Wait returns, regardless of whether the page has finished loading. A value of -1 means wait until the page has been completely processed by the browser. Keep in mind that in this case the call to Wait is blocking (synchronous) and won't return until the page is fully processed. I advise against interacting with the browser UI while the page is loading, as it may affect the default behavior of the Wait method.
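With a timeout of -1, a page that never finishes loading blocks the script indefinitely. Wait returns a Boolean, which the script discards with [void]; as I understand the HttpWatch API, it is $false when the timeout expires before the page has finished loading. The sketch below uses a finite timeout and warns instead of hanging. The function name and the 120-second default are assumptions, not values from the original script.

```powershell
# Hypothetical helper: browse to a URL and wait with a finite timeout instead of -1.
function Invoke-PageLoad {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory=$true)] $Controller,     # the HttpWatch.Controller object
        [Parameter(Mandatory=$true)] $Plugin,         # the object returned by IE.New()
        [Parameter(Mandatory=$true)][string] $Url,
        [int] $TimeoutSeconds = 120                   # assumed default; tune for your pages
    )
    $Plugin.GotoURL($Url)
    # Wait is expected to return $false if the timeout expires first
    $completed = [bool]$Controller.Wait($Plugin, $TimeoutSeconds)
    if (-not $completed) {
        Write-Warning "Timed out after $TimeoutSeconds seconds waiting for $Url; its log may be incomplete."
    }
    return $completed
}
```

In the loop, the GotoURL and Wait lines would be replaced by a call such as Invoke-PageLoad $httpWatchController $plugin $csv.Url, and the returned value could decide whether the log is saved at all.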

Once the page is fully processed, the trace is saved to the file system either in ".hwl" format or as a ".csv" file: the Save method of the Log object writes a ".hwl" file, and its ExportCSV method writes a ".csv" file. The Stop method then ends the recording session, since everything that needed to be logged has already been captured and saved. Finally, after the foreach loop has enumerated all the entries in the CSV file, a call to CloseBrowser closes the IE instance.
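The extension switch can also live in its own helper, which keeps the loop short and makes the save step easy to check in isolation. This is a hypothetical refactoring rather than part of the download; it calls only the two Log methods the script already uses, Save and ExportCSV, and takes the extension from the file name.

```powershell
# Hypothetical helper: write the HttpWatch log in the format implied by the file extension.
function Save-HttpWatchLog {
    param(
        [Parameter(Mandatory=$true)] $Log,               # e.g. $plugin.Log
        [Parameter(Mandatory=$true)][string] $FileName
    )
    switch ([System.IO.Path]::GetExtension($FileName).ToUpper()) {
        ".HWL"  { $Log.Save($FileName) }        # native HttpWatch format
        default { $Log.ExportCSV($FileName) }   # CSV, readable without HttpWatch
    }
}
```

In the script, the whole switch statement would then become Save-HttpWatchLog $plugin.Log $fileName.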

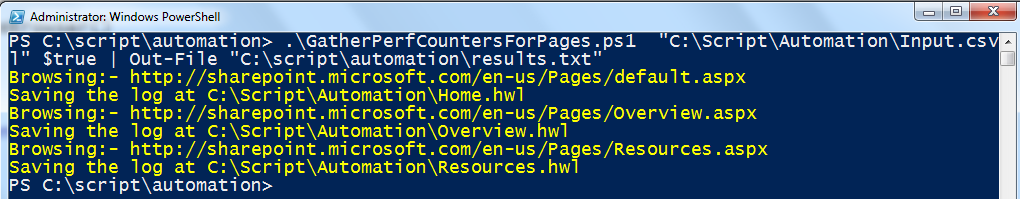

Now that you have a basic understanding of how the script works, let's run it from the PowerShell console. I have placed all my scripts in the "C:\Script\Automation" folder along with the "Input.csv" file.

```powershell
PS C:\script\automation> .\GatherPerfCountersForPages.ps1 "C:\Script\Automation\Input.csv" "C:\Script\Automation\" ".hwl" $true | Out-File "C:\script\automation\results.txt"
```

As I press "enter" a message in my PowerShell console appears indicating that the script is browsing to the https://sharepoint.microsoft.com/en-us/Pages/default.aspx page. At the same time new IE browser instance is launched by the script and start loading the page, once the page is rendered it will display the log file details. It will then transition to the next page and performs the same steps. As the script will eventually loop through the entire URL list from the csv file it keeps updating the console (as well as output file if you have asked for it) with that information. Once it finishes processing all the URLs you should have following output in the console and in the output file (results.txt). If there are any errors they will appear on the console and will also be part of output file (if you have opted for one).

PowerShell Console Output

Results.txt (Output file)

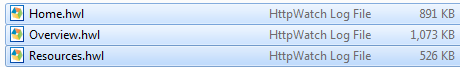

The log files are saved with the ".hwl" or ".csv" extension; notice that every page in the input CSV file has been captured and logged.

Log files as generated by the script

The download includes an "Output" folder that contains logs in both ".hwl" and ".csv" format. Your logs will likely differ because the website has changed in the meantime, but the samples give a good idea of the log files the script generates.
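Since the ".csv" logs can be read without the HttpWatch API, a quick way to get a feel for the sample Output folder is to list each exported log with its row count and column headers. The helper below is hypothetical and deliberately assumes nothing about HttpWatch's column names (check a real export for those); it only assumes that each file is a regular CSV with a header row.

```powershell
# Hypothetical helper: summarise exported .csv logs without using the HttpWatch API.
# Assumes each file is a regular CSV file with a header row.
function Get-CsvLogSummary {
    param([Parameter(Mandatory=$true)][string] $Folder)

    foreach ($file in Get-ChildItem -Path $Folder -Filter *.csv) {
        $rows = @(Import-Csv $file.FullName)
        $columns = @()
        if ($rows.Count -gt 0) {
            # Property names of an imported row are the CSV headers, in file order
            $columns = @($rows[0].PSObject.Properties | ForEach-Object { $_.Name })
        }
        New-Object PSObject -Property @{
            File    = $file.Name
            Rows    = $rows.Count
            Columns = ($columns -join ", ")
        }
    }
}
```

For example, Get-CsvLogSummary "C:\Script\Automation\Output" | Format-List gives one entry per ".csv" log; ".hwl" files are skipped because they need HttpWatch to open.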

A Word About Authenticated Sites

So what happens if you run the script against a site that requires authentication before you can browse its pages? Technically, the answer depends on the type of authentication (e.g. Windows, claims, etc.) set up on the target site, since that dictates the authentication flow. Typically, your experience will be the same as when you browse to the authenticated page manually and provide credentials: when the browser instance opened by the script prompts you, you should be able to provide the credentials, and page browsing should work as expected. However, depending on your needs, you may not want the authentication trace in your log files, and it will automatically be added to the log of the first page you browse (because authentication is triggered by the server, and the browser, as a passive client, responds to it). The easiest way to keep the log for the first page free of the authentication flow is to add, as the first entry in Input.csv, any authenticated page within the site, and then discard or ignore that log file during analysis.
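If you would rather not edit Input.csv by hand, the warm-up entry can be added by generating a copy of the file. The helper and the "_warmup" log name below are hypothetical; the log file produced for that first row is the one to ignore during analysis.

```powershell
# Hypothetical helper: write a copy of the URL list with a warm-up row in front,
# so the authentication traffic lands in a throwaway log instead of the first real page.
function Add-WarmUpEntry {
    param(
        [Parameter(Mandatory=$true)][string] $InputCsv,
        [Parameter(Mandatory=$true)][string] $OutputCsv,
        [Parameter(Mandatory=$true)][string] $WarmUpUrl,     # any authenticated page on the site
        [string] $WarmUpFileName = "_warmup"
    )
    $warmUp = New-Object PSObject -Property @{ Url = $WarmUpUrl; FileName = $WarmUpFileName }
    $rows = @($warmUp) + @(Import-Csv $InputCsv)
    # Select-Object fixes the column order (hashtable order is not guaranteed in v2)
    $rows | Select-Object Url, FileName | Export-Csv -Path $OutputCsv -NoTypeInformation
}
```

The generated file is then passed to GatherPerfCountersForPages.ps1 in place of Input.csv.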

A related concern arises when you want to browse an authenticated site using different user accounts. One possible solution for this scenario is to create multiple user profiles, an option available in many browsers, and use a different profile for each account. As far as I know, to get multiple user profiles with Internet Explorer you have to create multiple Windows accounts (e.g. UserProfile2) and then run the script under the specific account (e.g. UserProfile2). If you are using Firefox with multiple user profiles enabled, you can simply change the call from $httpWatchController.IE.New() to $httpWatchController.Firefox.New("ProfileName") to open a new Firefox instance with a specific user profile.
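If you move between Internet Explorer and Firefox profiles often, the browser choice can become a parameter instead of an edit to the script. The sketch below is hypothetical and relies only on the two calls mentioned above, IE.New() and Firefox.New("ProfileName"); the profile name is whatever you created in Firefox.

```powershell
# Hypothetical helper: start IE by default, or Firefox with a named user profile.
function New-HttpWatchPlugin {
    param(
        [Parameter(Mandatory=$true)] $Controller,   # e.g. New-Object -ComObject "HttpWatch.Controller"
        [string] $FirefoxProfile                     # leave empty to use Internet Explorer
    )
    if ([string]::IsNullOrEmpty($FirefoxProfile)) {
        return $Controller.IE.New()
    }
    return $Controller.Firefox.New($FirefoxProfile)
}
```

For example, New-HttpWatchPlugin $httpWatchController "UserProfile2" would open Firefox with that profile, while omitting the profile keeps the original IE behavior.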

Authentication is a broad topic, and handling it within automation is worthy of a separate discussion, so I will leave it for a future post.

Cheers!