Floating-point arithmetic intricacies in C++ AMP

In this blog post I am going to describe a few discrepancies between floating-point behavior on the host-side and that on the accelerator-side in C++ AMP. This post will be of interest to anyone who pays attention to the floating-point accuracy and correctness in little more exotic scenarios involving floating-point numbers.

The first section gives an overview of floating-point format and is a base for all remaining sections – feel free to skip it if you consider yourself a floating-point expert. Subsequent sections describe specific discrepancies. Please note that these discrepancies are specific to Microsoft’s implementation of C++ AMP open spec and other implementations of C++ AMP might not exhibit them.

Basics of floating-point format

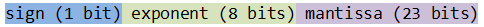

The IEEE Standard for Floating-Point Arithmetic (IEEE 754) is a document that provides a discipline for performing floating-point computations in computer systems. Floating-point numbers defined by that standard use a fixed number of bits to represent real numbers. The format is divided into 3 fields: the sign, the exponent and the mantissa. Here is a visual depiction of single precision floating-point type (float):

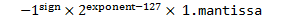

The general formula to decode a value from this format is:

The left most bit signifies whether the floating point is negative (bit set to 1) or positive (bit set to 0). Next is 8 bit exponent. It is biased by 127, which allows it to swing between -127 when all bits are zero, to 128 when all exponent bits are set to 1. With negative exponent we achieve floating-point numbers of small magnitude and with positive exponent numbers of large magnitude. The last field in floating-point format is mantissa. Mantissa encodes the precision of floating-point number. That portion of the formula has implicit leading 1 and the bits in mantissa field represent the fraction. Each bit represents subsequent negative power of 2. Here is an example of how value 5 is stored as float:

Notice that leftmost bit in mantissa is 0, therefore 1/2 is not added to the fraction. The next mantissa bit is 1, and it would contribute next negative power to the fraction which is 1/4. Another important thing to realize is that implicit leading 1 gives us extra bit of precision. Imagine for a moment that the formula does not have implicit leading 1. We would then have to explicitly encode that information in our mantissa. However since it is always set to 1, we can make it implicit and add one more bit to represent 1/2^23. In result we can actually say that our mantissa has 24 bits of precision, with only 23 bits explicitly encoded.

Double precision floating-point format (double) is very similar to what I described above. It has twice as many bits (64bits) and the format is divided into 1 bit sign, 11 bit exponent and 52 bit mantissa. The formula has bias of 1023 as we have 11 bit exponent, instead of 8 bits in float. There is implicit leading 1 as in formula described above and we commonly said that such type offers 53 bits of precision (1 more bit on top of actual storage space for mantissa).

OK, now that we got the basics, we can talk about specific discrepancies that you may find while working with C++ AMP.

The sign of negative zero can be dropped

Zero in floating-point is a special value. When all bits of the exponent and the mantissa are set to zero, then the formula is not used and we interpret such number as zero. The sign bit can still be either 1 or 0, both numbers are zeros, one is negative zero and the other is positive zero. Here are their binary representations in single precision floating-point format:

In many scenarios you would not care about the sign on your zero, in fact the standard requires those two values to return true when they are logically compared to each other. The following snippet will print “yes, equal” on the output:

if (0.0f == -0.0f)

cout << "yes, equal" << endl;

else

cout << "not equal" << endl;

That being said, there are a few circumstances in which sign on zero matters and cannot be ignored (for complete list please see "The sign bit" chapter inside IEEE 754-2008 standard). One of those examples is division by zero. The result of division by zero when the dividend is a finite non-zero number has to result in infinity with a sign that is exclusive OR of the signs of division operands. e.g.

(-5.0f/0.0f) // -infinity

(5.0f/-0.0f) // -infinity

(-5.0f/-0.0f) // +infinity

(5.0f/0.0f) // +infinity

In C++ AMP the code marked with restrict(amp) that executes on the accelerator does not guarantee to preserve the sign bit on zero. This applies for both single and double precision floating-point numbers. Here is an example in which C++ AMP drops the sign bit of negative zero:

float x = -0.0f;

unsigned int signx = (*reinterpret_cast<unsigned int*>(&x) & 0x80000000) >> 31;

The signx variable should contain 1, as first bit in x is set to 1, however when compiled with /O1 or /O2 (in any of the Visual C++ floating point models) the code is aggressively optimized and the value of signx would be 0. The possible workaround for testing the sign of floating-point value is to use signbit() function from C++ AMP math library.

Single precision denormal numbers are flushed to zero

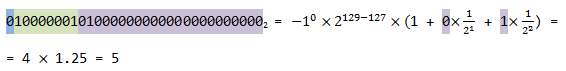

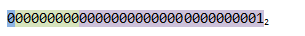

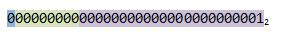

Let me start with a question: Given the single precision floating-point format from our introductory section what is the smallest positive value that we can represent? Well, let’s calculate this together. The question is about the positive number, so we keep the bit sign set to zero. Then we want the smallest possible exponent, so we choose all zeros, this will give us the exponent of 2^-127. Next is the mantissa, to have the smallest value we also fill this field of the format with zeros. Oh, wait we cannot! If we would do that we would create positive zero, we want smallest positive value, but something greater than zero, so we put 1 on the last bit in mantissa. Here is what we have:

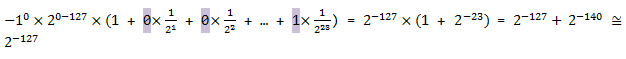

Using our general formula, this value would equal to:

The important thing to notice is that the implicit leading 1 in the formula is hurting our ability to achieve as small floating-point number as we could without it. We would get a similar result if we would set our explicit bit anywhere else in the mantissa as there is implicit 1 in the formula that is dominating the final result.

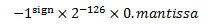

The standard allows us to represent even smaller numbers by removing the implicit one when exponent is set to 0. The new formula would be:

As I described in introductory section, the implicit leading 1 adds extra bit of precision. We therefore want to keep general formula for majority of cases and use our new formula only for very small floating-point numbers. The new formula would be used only when all bits in the exponent field are set to zero.

Notice that to achieve smooth transition of values between previous formula and our new formula the implicit exponent bias is set to -126. Let's see how this continuity between these two formulas is achieved. The smallest number in normalized format would be therefore:

Now the largest number in our new format (the one without implicit leading 1) is:

Please notice that if we would choose the exponent of -127 for our new formula then we would create a gap in floating-point numbers between 2^-126 and 2^-127. With the bias of -126 in our new formula we achieved continuity when switching from one formula to the other.

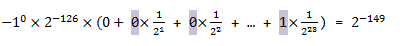

OK, having our new formula let's check what is the smallest number possible with our new format:

Can be now interpreted as:

The answer to the question that I asked at the beginning of this section is therefore 2^-149. Any number that is calculated with formula that has no implicit leading 1, is called denormal (or subnormal). The same approach works for negative numbers, we would simply flip the sign bit and same logic applies. In effect we have increased the overall range of representable floating-point values in the area (called underflow) around zero:

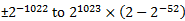

- The general formula can represent a value in a range of

.

. - With denormal numbers we can additionally represent values between

.

.

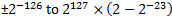

In C++ AMP single precision floating-point denormal numbers are not supported. We say they are flushed to zero, as any arithmetic operation that results in denormal would be rounded to zero. If zero as approximation is not good enough for your computation then your only option is to switch to double precision type. You would not only have higher precision (53bits, instead of 24bits) but also much wider range of values that you can represent:

Moreover, if you will push this type to the limits and try to underflow it, then you are covered as denormal numbers for double precision-floating point in C++ AMP are supported, which gives you the following extra range:

Please keep in mind that precision comes with its cost. Double precision floating-point numbers would negatively impact the performance (as it takes more cycles to perform operations on them), they occupy twice as much memory (32 bit -> 64bit) and they require your accelerator to support doubles which is an optional feature. On the host side (in CPU code) support for denormal numbers is turned on by default for both single and double precision in all Visual C++ floating-point models and can be controlled with _controlfp_s function.

Always round to nearest, tie even

As floating-point numbers represent an infinite number of real numbers into a finite number of bits, there has to be a situation when we have a real number that is not directly representable in floating-point format and it has to be rounded to its most acceptable bit representation. There are various approaches for rounding floating-point numbers. The default in Visual C++ is round to nearest, tie even. It means that when the real number cannot be adequately represented by a floating-point format then it is represented by the nearest number in the destination format. For the case where it falls exactly between two floating point numbers (we have a tie) then the floating-point number with an even least significant digit is chosen.

The other possible rounding modes are: round to positive infinity, negative infinity and zero. To modify the default round to nearest, tie even behavior you need to turn on fenv_access and use _controlfp_s function.

In C++ AMP the code that runs on the accelerator is always rounded to nearest, ties to even (same as the default behavior of host side). Any modifications with _controlfp_s affect only host side code and have no effect on your C++ AMP computations.

No support for floating-point exceptions or status bits to query

A floating-point calculation which results in an error such us underflow or invalid operation sets a special flag for the programmer to query. An alternative approach to querying for errors is to throw exceptions in such occurrences. Visual C++ offers a floating point exception when you compile your program with /fp:except or use float_control in your program to turn on except mode.

Both these error handling methods are available on the host and not available in C++ AMP. Possible workaround in this case is to explicitly test your results with math functions such as fpclassify, isnan, isinf, isfinite or isnormal.

Single floating point model per parallel_for_each

Visual C++ compiler since VS2005 offers the following floating-point models:

- precise – the default: prefer precision over speed, disables some of the optimization that can perturb precision of floating-point operations.

- fast – prefer faster code, optimization that involve floating-point types are allowed.

- strict – use same rules as precise, but also enables floating-point exceptions and disables contractions. The intermediate results are kept in their source precision.

- except - enables floating-point exceptions, it can be used with precise and is on by default for strict.

The default floating-point model can be controlled with /fp switch at compile-time, or explicitly specified with #pragma float_control per function level.

The HLSL language on which C++ AMP is built has corresponding strict (except there are no floating-point exceptions available) and fast floating point models. Our translation of C++ AMP source code to HLSL source code however does not support mixing of the floating-point models per function. It will be one of the two floating-point models for entire parallel_for_each invocation. If all of your functions in the call graph including parallel_for_each are in the fast floating-point model, then the computation would be translated into the corresponding fast model for the accelerator. If however any of your functions in the call graph including parallel_for_each are in precise, strict or except then your computation would be in the corresponding strict model on the accelerator.

Math functions from the concurrency::precise_math are exception to above rule, their precision remains unaffected regardless of floating-point model in which your computation defined.

The differences between floating-point results

The same sequence of floating-point arithmetic can yield different results across different CPU-architectures, when compiling with /fp:fast or /fp:precise. Here is blog post that discusses how to test for floating-point equivalency. C++ AMP accelerators are no different, but aforementioned discrepancies may result in divergence even when compiling with /fp:strict.

In closing

In this blog post I described the differences that you might encounter between the floating-point behavior on the host side (in CPU code) and the one in parallel_for_each kernels:

- dropping sign bit of negative zero,

- flushing single precision floating-point denormal numbers to zero,

- always rounding to nearest,

- no support for floating-point exceptions,

- single floating-point model for entire parallel_for_each computation,

- difference in results across architectures.

I hope awareness of these discrepancies will save time consuming investigations into any divergence of floating point arithmetic results between the CPU and C++ AMP kernels. Any feedback or comments are welcome either below or at the forum.

Comments

- Anonymous

November 21, 2012

what is the output of floating point arithmetic if input is nan