Getting Started with .NET and Docker

Editor’s note: The following post was written by Microsoft Azure MVP Elton Stoneman as part of our Technical Tuesday series with support from his technical editor, Microsoft Azure MVP Richard Seroter.

Docker lets you build and run apps more quickly, with less hardware. That's why application container technology (and Docker in particular) is so popular. You can run hundreds of containers on a server which could only run a handful of VMs. Containers are also fast to deploy, and they can be versioned like the software they run.

If you work in the Microsoft space, you might think containers are The Other Guy's technology (think LAMP, Node or Java), but actually you can run .NET apps in Docker containers today. In this post, I'll show you how.

Application Containers

Application containers are a fast, lightweight unit of compute that let you run very dense loads on a single physical (or virtual) server. A containerized app is deployed from a disk image which contains everything it needs, from a minimal OS to the compiled app. Those images are small - often just a couple of hundred megabytes - and they start up in seconds.

Docker have led the way in making containers easy to build, share and use. Containers have been the hot topic for the last couple of years, and with good reason. They're so light on resources that you can run hundreds or thousands of containers on a single server. They're so fast to ship and run that they're becoming a core part of dev, test and build pipelines. And the ease of linking containers together encourages stateless architectures which scale easily.

If the current pace of adoption continues, it's likely we'll see application containers as the default deployment model in the next few years. Container technology is coming to Windows soon, but with .NET Core you can build containers that run .NET apps right now, so it's a great time to start looking into an exciting technology.

Not Just for the Other Guy

Containers make use of core parts of the Linux kernel, which lets apps inside containers make OS calls as quickly as if they were running on the native OS. For that you need to be running Linux inside the container, and using Linux to host the container.

But the Linux host can actually be a virtual machine running on Windows or OS X. The Docker Toolbox wraps all that up in a neat package and makes it easy to get started with containers on Windows. It's a single download that installs in a few minutes; it uses VirtualBox under the hood, but it takes care of the Linux VM for you.

Microsoft is working hard to bring containers to Windows without needing a Linux VM in between. With Windows Server 2016 we will be able to run Docker containers natively on Windows, and with Windows Nano Server we'll have a lightweight OS to run inside containers, so we can run .NET apps on their native platform.

.NET Core

Right now, we can make use of the cross-platform .NET Core project to package apps and run them in Linux containers. Docker has a public registry of images, and I've pushed some sample .NET Core apps there. Once you've installed Docker, you can try out a basic .NET Core app with a single command:

docker run sixeyed/coreclr-hello-world

When you first run that command, the container image gets downloaded from the Docker Hub, which will take a few minutes. The next time you run it, you'll already have the image saved locally and it will run in seconds. The output tells you the current date and time:

Which might not seem very impressive - but it's using Console.WriteLine() in a .NET app, and the container which runs the app is running Ubuntu Server. So we have a .NET app which runs on Linux, packaged as a Docker container, that you can run just about anywhere.

.NET Core is the open-source version of .NET which is available now. It has a different focus from the full .NET and it's a modular framework, so you only include the parts you need - the framework itself is composed from NuGet packages. The ASP.NET 5 docs give a good overview of the different frameworks in Choosing the Right .NET for you on the Server.

Before you can run .NET Core apps on a Linux (or OS X, or Windows) machine, you need to install DNX, the .NET Execution Environment. This isn't the full .NET runtime that we have on Windows; it's a slimmed-down runtime. You can read the Book of the Runtime to find out how it all fits together, but you don't need a deep understanding to start packaging .NET Core apps for Docker.

When you define a Docker image, you start from an existing base image, and the sixeyed/coreclr-base image which is publicly available on the Hub already has DNX installed and configured. To containerize your own .NET Core app, just use that base image and add in your own code. In the next part of the post, we'll walk through doing that.

The Uptimer App

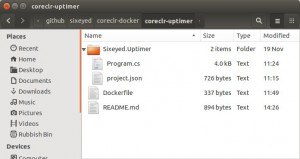

On GitHub I have a simple .NET Core app which pings a URL and records some key details about the response in an Azure blob - like the response code and the time it took to get the response. The code is available from my coreclr-uptimer repository.

This is a .NET Core app, so it has a different structure to a classic Visual Studio solution. There's no solution file; the Sixeyed.Uptimer folder is the solution, and there's a project.json file which defines how the app runs and its dependencies. Here's a snippet from that JSON:

    "frameworks": {
      "dnxcore50": {
        "dependencies": {
          "Microsoft.CSharp": "4.0.1-beta-23516",
          "WindowsAzure.Storage": "6.1.1-preview",
          "System.Net.Http": "4.0.1-beta-23516"
          ...

Here we're saying that the app runs under dnxcore50, the latest DNX framework version, and those dependencies are all NuGet packages. .NET Core apps use NuGet just like normal apps, but you can only reference packages which are built for .NET Core - like these preview versions of the WindowsAzure.Storage and System.Net.Http packages.

The code which makes use of those packages is standard C#, and that includes all the usual good stuff like timers, disposables and AAT (Async, Await and Tasks), so I ping the URL like this:

    var request = new HttpRequestMessage(HttpMethod.Get, url);
    using (var client = new HttpClient())
    {
        return await client.SendAsync(request, HttpCompletionOption.ResponseHeadersRead);
    }

And then write the output to Azure, appending it to a blob like this:

    var blockId = Convert.ToBase64String(Guid.NewGuid().ToByteArray());
    using (var stream = new MemoryStream(Encoding.UTF8.GetBytes(content)))
    {
        stream.Position = 0;
        await blockBlob.PutBlockAsync(blockId, stream, md5, access, options, context);
    }

The project.json file means we can build and run this app from source code on any machine with a DNX - we don't need to build it into a platform-specific executable. So this code will run on Windows, OS X or Linux, and that means we can package it up as a Docker container.

Containers are defined with a Dockerfile which encapsulates all the steps to build and run the image. Typically, you create a folder for the container definition, which has the Dockerfile and all the files you need to build the image - like the Sixeyed.Uptimer folder which has the source code for the .NET Core app we want the container to run:

When we build the container, we'll produce a single image which contains the compiled app, ready to run. So in the container definition I need to tell Docker to use the .NET Core base image and copy in the source files for my own app. The syntax for the Dockerfile is pretty self-explanatory - the whole thing only takes 7 lines:

    FROM sixeyed/coreclr-base:1.0.0-rc1-final
    MAINTAINER Elton Stoneman <elton@sixeyed.com>

    # deploy the app code
    COPY /Sixeyed.Uptimer /opt/sixeyed-uptimer

When Docker builds the image, at this point we'll just have an Ubuntu Server image with .NET Core installed (which is what the base image gives us), and the app files copied but not built. To build a .NET Core app, you first need to run dnu restore, which fetches all the dependencies from NuGet. We can do that with a RUN statement in the Dockerfile:

    WORKDIR /opt/sixeyed-uptimer
    RUN dnu restore

(The WORKDIR directive sets the working directory for the instructions that follow it and for the running container, so dnu will restore all the packages listed in the project.json file in the app folder.)

At this point in the build, the image has everything it needs to run the app, so the final part of the Dockerfile is to tell it what to run when the container gets started. I add the location of DNX to the path for the image with the ENV directive. Then the ENTRYPOINT directive tells Docker to execute dnx run in the working directory when the container is run:

    ENV PATH /root/.dnx/runtimes/dnx-coreclr-linux-x64.1.0.0-rc1-final/bin:$PATH
    ENTRYPOINT ["dnx", "run"]

That's it for defining the container. You can build that locally, or you can download the version which I've published on the Docker Hub - sixeyed/coreclr-uptimer - using the docker pull command:

docker pull sixeyed/coreclr-uptimer
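If you'd rather build the image locally than pull it, a small wrapper around docker build can check the build context first. This is only a sketch: the function name, the DRY_RUN switch and the default tag are my own illustrative choices, and it assumes the folder layout described earlier (a Dockerfile next to the Sixeyed.Uptimer source folder).

```shell
# Sketch: build the uptimer image from a local build context.
# Assumes <context>/Dockerfile and <context>/Sixeyed.Uptimer/ exist.
# build_uptimer_image, DRY_RUN and the default tag are illustrative names.
build_uptimer_image() {
    context="$1"
    tag="${2:-coreclr-uptimer:local}"

    # Fail early if the build context is incomplete.
    if [ ! -f "$context/Dockerfile" ]; then
        echo "missing $context/Dockerfile" >&2
        return 1
    fi
    if [ ! -d "$context/Sixeyed.Uptimer" ]; then
        echo "missing $context/Sixeyed.Uptimer" >&2
        return 1
    fi

    if [ -n "${DRY_RUN:-}" ]; then
        # Print the command instead of running it.
        echo "docker build -t $tag $context"
    else
        docker build -t "$tag" "$context"
    fi
}
```

Running build_uptimer_image . from the folder that holds the Dockerfile should produce an image you can use in place of sixeyed/coreclr-uptimer in the commands that follow.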

The .NET app takes two arguments: the URL to ping and the frequency to ping it. It also needs the connection string for the Azure Storage account where the results are stored, and it looks for that in an environment variable called STORAGE_CONNECTION_STRING.

The connection string is obviously sensitive, so I keep that out of the image I build. Using an environment variable means we can pass the information to Docker directly when we run a container, or put it in a separate, secure file.

I can run an instance of the container to ping my blog every 10 seconds and store the output in my storage container with this command:

docker run -e STORAGE_CONNECTION_STRING='connection-string' sixeyed/coreclr-uptimer https://blog.sixeyed.com 00:00:10

And I can run that from any Docker host - whether it's a development laptop, a VM in the local network, or a managed container service in the cloud. Whatever the host, it will run exactly the same code.
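The frequency argument in that command is written as hours, minutes and seconds. If your intervals live somewhere as plain seconds, a tiny helper can format them. This is a sketch which assumes the app reads the argument as hh:mm:ss, as the 00:00:10 example suggests, and that intervals stay under 24 hours; to_timespan is a name I've made up for illustration.

```shell
# Sketch: format a whole number of seconds as hh:mm:ss.
# to_timespan is an illustrative helper, not part of Docker or the app.
# Assumes a non-negative integer below 86400 (24 hours).
to_timespan() {
    secs="$1"
    printf '%02d:%02d:%02d\n' \
        $((secs / 3600)) \
        $((secs % 3600 / 60)) \
        $((secs % 60))
}
```

With that in place, the last argument of the docker run command above could be written as "$(to_timespan 10)".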

Architecting Solutions with Docker in Mind

That app does one small piece of work, so what value does it really have? Recording a response time for one website hit every few seconds isn't much use. But this project evolved from a real problem where I wasn't happy with the original solution, and Docker provided a much better approach.

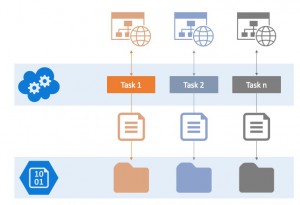

I delivered a set of public REST APIs for a client, and a large number were business critical so we wanted them pinged every few seconds, to get instant feedback if there were any problems. The commercial ping providers don't offer that frequency of service so we wrote our own:

The main code running in the tasks is the same as the .NET Core app from this post - but when you write it as a monolithic app for handling multiple URLs, you add a whole lot of complexity that isn't really about solving the problem.

You need storage to record the schedule for pinging each URL; you need a scheduler; you need to manage multiple concurrent tasks; you need a mechanism to know if the app has failed. Suddenly the monitoring component is big and complex, and it needs to be reliable which makes it bigger and more complex.

Enter the Docker option, which is a much cleaner alternative. I extracted the core code to a simple .NET app which just pings one URL and records the responses - which is all it needs to do. Now we have a minimal amount of custom code (about 100 lines), and we can use the right technologies to fill in the other requirements.

To ping multiple URLs, we can fire up as many concurrent instances as we need and Docker will manage resource allocation. And if we use a cloud-based host for the Docker machine, we can scale up and scale down depending on the number of containers we need to run. Technologies like Mesos and Kubernetes provide a management layer which integrates well with Docker.

These lines from a sample shell script fire up container instances which run in the background, each responsible for monitoring a single domain. This will ping a.com every 10 seconds, b.com every 20 seconds and c.com every 30 seconds:

    docker run -i -d --env-file /etc/azure-env.list sixeyed/coreclr-uptimer https://a.com 00:00:10
    docker run -i -d --env-file /etc/azure-env.list sixeyed/coreclr-uptimer https://b.com 00:00:20
    docker run -i -d --env-file /etc/azure-env.list sixeyed/coreclr-uptimer https://c.com 00:00:30

The --env-file flag tells Docker where to find the file with the storage connection string, so we can keep that safe. The -i and -d flags tell Docker to run the container in the background, but keep the input channel open, which keeps the .NET app running.
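An env file is just a list of VAR=value lines. Here's a minimal sketch of one way to create it so that only the owner can read it; the function name and its arguments are illustrative, and the value is written without surrounding quotes because Docker takes env file values literally.

```shell
# Sketch: write a Docker env file that only its owner can read.
# write_env_file is an illustrative name; the VAR=value line format
# is what docker run --env-file expects.
write_env_file() {
    path="$1"
    connection_string="$2"
    (
        # Restrict permissions on newly created files to the owner.
        umask 077
        printf 'STORAGE_CONNECTION_STRING=%s\n' "$connection_string" > "$path"
    )
    # Tighten permissions too, in case the file already existed.
    chmod 600 "$path"
}
```

For the script lines above, that would be called once (typically as root) as write_env_file /etc/azure-env.list followed by the real connection string.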

The work to check one domain is very simple, as it should be. To run more checks, we just need to add more lines to the shell script, and each check runs in its own container. The overhead of running multiple containers is very small, but with the first RC release for .NET Core, there is a startup delay when you run an app.
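Rather than maintaining those lines by hand, you could generate them from a list of URLs and intervals. The following is a sketch, not the script I actually used: uptimer_commands and the input format (one "url interval" pair per line) are assumptions for illustration, while the docker run flags are the same ones shown above.

```shell
# Sketch: read "url interval" pairs from stdin and print one
# docker run command per pair. uptimer_commands and the default
# env file path are illustrative.
uptimer_commands() {
    env_file="${1:-/etc/azure-env.list}"
    while read -r url interval; do
        # Skip blank lines and comment lines.
        case "$url" in
            ''|'#'*) continue ;;
        esac
        echo "docker run -i -d --env-file $env_file sixeyed/coreclr-uptimer $url $interval"
    done
}
```

Given a hypothetical domains.txt with lines like https://a.com 00:00:10, running uptimer_commands < domains.txt prints the commands so you can review them, and piping that output to sh starts the containers.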

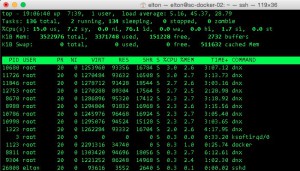

To see how far it scales, I fired up a VM in Azure, using the Docker on Ubuntu Server image from the gallery, which already has the latest version of Docker installed and configured. I used a D1-spec machine which has a single core and 3.5GB of memory allocated.

Then I pulled the coreclr-uptimer image and ran a script to start monitoring 50 of the most popular domains on the Internet. The containers all started in a few seconds, but then it took a few minutes for the dnx run commands in each container to start up.

When the containers had all settled, I was monitoring 50 domains, pinging each one at 10-30 second intervals, with the machine averaging 10-20% CPU:

This is a perfect use-case for containers, where we have a lot of isolated work to run, each of which is idle for much of the time. We could potentially run hundreds of these containers on a single host and get a lot of work done for very little compute cost.

Developing .NET Core Apps

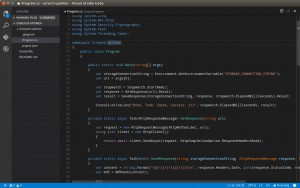

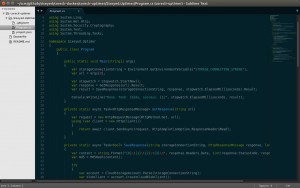

You can build and run .NET apps on Mac and Linux machines, and you can develop on those platforms too. Visual Studio Code is a cut-down alternative to the full Visual Studio which can work with the .NET Core project structure, and gives you nice formatting and syntax highlighting for .NET and other languages, like Node and Dockerfiles:

You can even use a standard text editor for developing .NET Core. The OmniSharp project adds formatting and IntelliSense for .NET projects using popular cross-platform editors like Sublime Text:

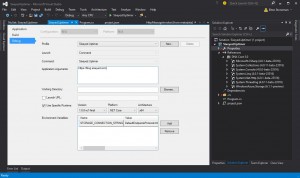

But both of those projects are in their early days, and I've had mixed results with IntelliSense. Fortunately, when you install ASP.NET 5 on a Windows machine with Visual Studio, you can use the full VS to build, debug and run .NET Core code.

Visual Studio 2015 can work with project.json files and load the source files into a solution. You get all the usual IntelliSense, debugging and class navigation for references, but at the moment the NuGet Package Manager doesn't filter available packages by the runtime. So it will let you try to add a standard .NET package to a .NET Core project, and then give you an error like this:

NU1002: The dependency CommonServiceLocator 1.3.0 in project Sixeyed.Uptimer does not support framework DNXCore,Version=v5.0.

The full Visual Studio is still my preferred IDE for .NET Core apps though. The alternatives are attractively lightweight, but they need a few more releases to be stable enough for real development work.

Visual Studio 2015 does a good job of making .NET Core development feel like ordinary .NET development. It's all familiar, down to the properties page for the project, where you can configure how to run the project in Debug mode - with useful cross-platform features, like specifying the DNX runtime version and values for environment variables:

Docker and .NET in the Future

Windows Server 2016 is coming soon and it will support running Docker containers without an intermediate VM layer, so the sixeyed/coreclr-uptimer image will run natively on Windows.

The Windows equivalent of the Linux base image to run inside containers is Windows Nano Server. That technology is also modular, aimed at creating images with very small footprints (currently under 200MB - much smaller than the 1.5GB for Windows Server Core, but still much bigger than the Ubuntu base image, at 44MB). For that approach, .NET Core would still be preferred to the full framework, even if the container could run it.

And we'll have to wait and see if you'll be able to define a Docker image that starts:

FROM windowsnanoserver.

The Dockerfile approach doesn't fit with how Microsoft currently favor defining images, which is using PowerShell and Desired State Configuration. Also, for Windows-based images to be publicly available on the Docker Hub (or something similar), Windows Nano Server would effectively need to be free.

But if the Dockerfile format isn't supported natively in Windows Server, there's a good chance that a translator emerges from the community which takes a Dockerfile and generates a PowerShell DSC script.

Application containers are changing the way software is designed, built and deployed and now is a great time to start taking advantage of them with .NET projects.

About the author

Elton is a Software Architect, Microsoft MVP and Pluralsight Author who has been delivering successful solutions since 2000. He works predominantly with Azure and the Microsoft stack, but realises that working cross-platform creates a lot of opportunities. His latest Pluralsight courses follow that trend, covering Big Data on Azure and the basics of Ubuntu. He can be found on Twitter @EltonStoneman, and blogging at blog.sixeyed.com.

Comments

- Anonymous

December 18, 2015

Potential typo on the Run command? docker run sixeyed/coreclr-hello-world instead of docker run sixeyed/coracle-hello-world?

- Anonymous

December 19, 2015

Hello and thank you for sharing your time and knowledge. When attempting to "docker run sixeyed/coracle-hello-world" I receive Error: image sixeyed/coracle-hello-world:latest not found

- Anonymous

December 19, 2015

Using the Pull command seems to have worked

- Anonymous

January 06, 2016

Hi Elton, Having that the .Net Core is multiplatform (will run on Linux) and self-contained (all runtime is included with a web app), why should I use containers? I can guess they also give some isolation similar to VMs but lighterweight, but this gives advantages to infrastructure/platform providers (like MS Azure). What that gives to app developers?

- Anonymous

February 04, 2016

I get the following trying to run that command:

docker run sixeyed/coracle-hello-world

docker: An error occurred trying to connect: Post http://127.0.0.1:2375/v1.22/containers/create: dial tcp 127.0.0.1:2375: connectex: No connection could be made because the target machine actively refused it..

See 'docker run --help'.

- Anonymous

June 06, 2016

This has some great information! I'm running into a bit of an issue, though. I am working on a project very similar to this and I need to be able to run c# code within containers. I've pulled from your image and copied the coreclr-uptimer Dockerfile with the only modification being made for locating the correct file paths. Once "dnu restore" is hit the script fails and the terminal states: /bin/sh: 1: dnu: not found \n The command '/bin/sh -c dnu restore' returned a non-zero code: 127. I believe it is not successfully installing dnx. Any ideas?

- Anonymous

August 05, 2016

You make a good point on your comment regarding the host OS having to be free in order to publish the containers in an online repo. Interesting times ahead. Also, I suppose Nano will have to be redesigned with a modular architecture in order to see any decent drop in base OS size for the images..