Build Your First Planet-Scale App With Azure Cosmos DB

Editor's note: The following post was written by Data Platform MVP Johan Åhlén as part of our Technical Tuesday series. Daron Yondem of the Technical Committee served as the Technical Reviewer of this piece.

Azure Cosmos DB is Microsoft’s distributed, multi-model cloud database service for managing data at planet-scale. It started in 2010 as “Project Florence,” to address developer pain-points that were faced by large Internet-scale applications inside Microsoft. In 2015, the first API was made available externally in the form of Azure DocumentDB. Finally, Azure Cosmos DB was launched in May 2017 and was recently honored in InfoWorld’s 2018 Technology of the Year Awards.

This article introduces Azure Cosmos DB and then walks through a sample scenario, Project Planetzine: building a globally scalable web application using Azure Cosmos DB and Azure Traffic Manager.

But first, let’s delve into Azure Cosmos DB features and pricing

Azure Cosmos DB comes with multiple APIs:

- SQL API, a JSON document database service that supports SQL queries. This is compatible with the former Azure DocumentDB.

- MongoDB API, compatible with existing MongoDB libraries, drivers, tools, and applications.

- Cassandra API, compatible with existing Apache Cassandra libraries, drivers, tools, and applications.

- Azure Table API, a key-value database service compatible with existing Azure Table Storage.

- Gremlin (graph) API, a graph database service supporting Apache TinkerPop’s graph traversal language, Gremlin.

This means that if you have an existing app that already uses one of these APIs, for example an app that talks to MongoDB through a standard driver, you can point the app to your Azure Cosmos DB account instead.

What also makes Azure Cosmos DB stand out from other distributed database services is that it offers five different consistency models: Strong, Bounded Staleness, Session, Consistent Prefix, and Eventual.

The most popular one, the Session consistency model, comes with a read-your-own-writes guarantee, which means that a client will never get anything older than the last version of information that the client wrote.
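To make the read-your-own-writes guarantee more concrete, here is a toy in-memory sketch. It is not how Azure Cosmos DB is implemented; the `Replica` and `SessionClient` types and the simple version counter are hypothetical names used only for illustration. The idea is that the client remembers the version of its last write and only accepts a read from a replica that has caught up to at least that version.

```csharp
using System;

// Toy model of a replica: a value plus the version it has applied so far.
public class Replica
{
    public long Version;
    public string Value;
}

// Hypothetical client that tracks a session token (the version of its last write).
public class SessionClient
{
    private readonly Replica _primary;
    private long _sessionToken; // 0 means nothing has been written in this session

    public SessionClient(Replica primary)
    {
        _primary = primary;
    }

    // Writes go to the primary; the client remembers the resulting version.
    public void Write(string value)
    {
        _primary.Version++;
        _primary.Value = value;
        _sessionToken = _primary.Version;
    }

    // Reads use the preferred replica only if it has caught up with this
    // session's writes; otherwise they fall back to the primary.
    public string Read(Replica preferred)
    {
        Replica source = preferred.Version >= _sessionToken ? preferred : _primary;
        return source.Value;
    }
}
```

In this sketch, a client that has just written a new value never sees the older value from a lagging replica, while other clients (with their own session tokens) may still read it.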

Some other great features of Azure Cosmos DB are:

- Stored Procedures

- User Defined Functions

- Triggers

- ACID support (within a single stored procedure or trigger)

- Designed for very high performance and scalability.

- Service Level Agreement that guarantees high availability and low latency.

- Automatic maintenance. Will handle growth or expansion to new geographic regions seamlessly in the background.

- Fully elastic. Scale up or down at any time.

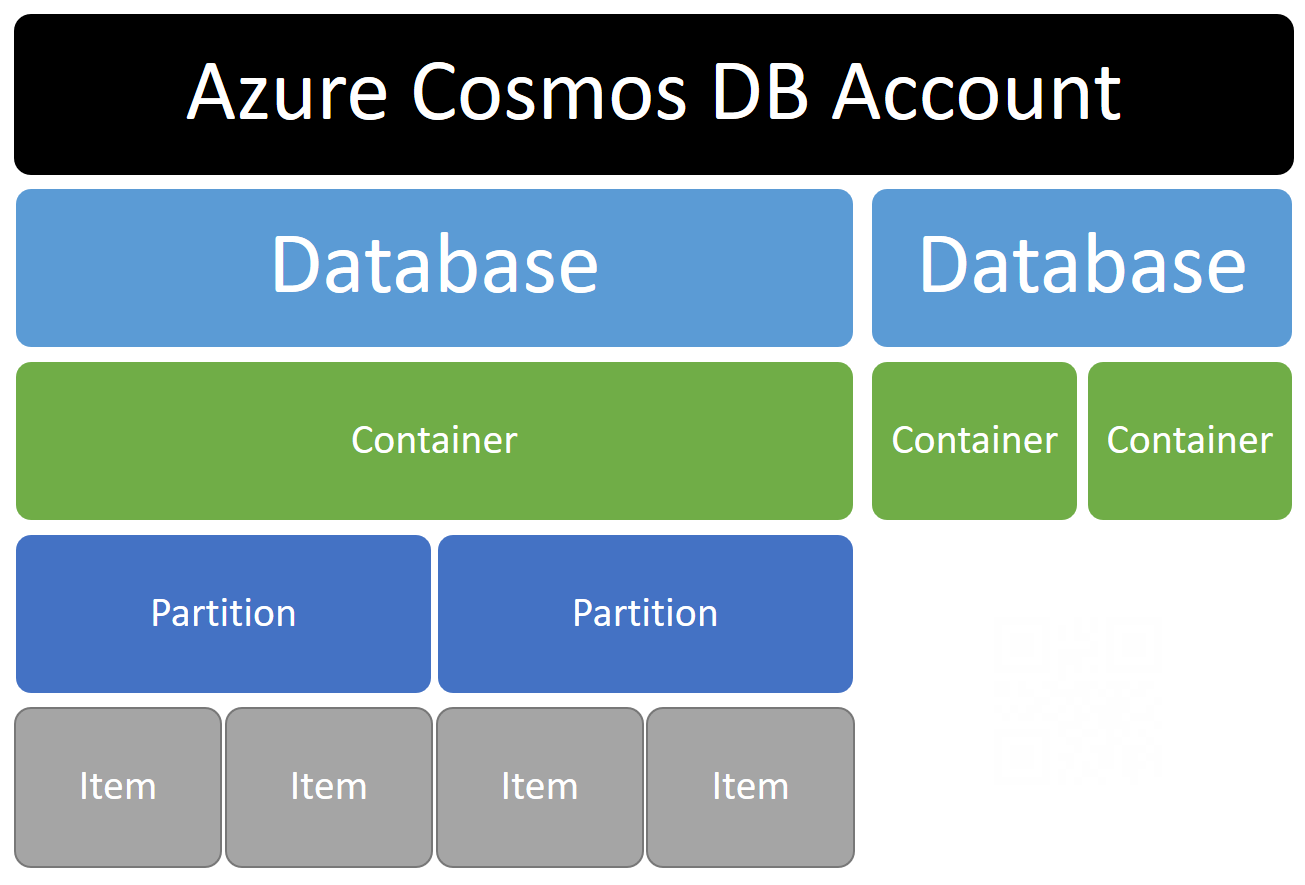

Azure Cosmos DB Architecture

To start using Azure Cosmos DB, you need at least one account. Each account can have multiple databases (and each database can be of any model). Depending on the database model, the objects stored in the database are projected into containers/partitions/items. For instance, in the SQL API, the containers are called “Collections,” and the items are called “Documents.”

Partitioning is optional, but without a partition key, a collection is limited to 10 GB of storage and its throughput is capped. I strongly recommend that you always use partitioning.

Pricing

The pricing has two components:

- Storage (per GB per month)

- Reserved RUs per second

Performance (or “throughput”) is measured in RUs (“Request Units”). Each operation has an RU cost. Reading a 1 KB item costs about 1 RU, while writing and changing data costs more. The RUs represent a weighted measure of the CPU, disk, and network cost of your operations. If your requests demand more RUs per second than you have reserved, they will be rate-limited (throttled). Note that, currently, the minimum billing is 400 RUs per second per container (or a minimum of 1000 RUs per second if you want to enable unlimited growth). Since the price is per container, you could keep costs down by letting multiple data types share the same container.
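As a rough illustration of how you might size the reserved throughput, here is a minimal sketch. The read cost (about 1 RU per 1 KB item) and the 400 RU/s minimum come from the text above; the write cost of 5 RUs is purely an assumption for the example, since the real cost depends on item size and indexing. `ThroughputEstimator` is a hypothetical helper, not part of any SDK.

```csharp
using System;

public static class ThroughputEstimator
{
    // From the text: reading a 1 KB item costs about 1 RU.
    public const double ReadCostRu = 1.0;

    // Assumption for illustration only: measure the real write cost
    // for your own items and indexing policy.
    public const double AssumedWriteCostRu = 5.0;

    // From the text: the minimum that can be reserved per container.
    public const int MinimumRuPerSecond = 400;

    // Estimates the RU/s to reserve for a given peak request rate.
    public static int EstimateRuPerSecond(double readsPerSecond, double writesPerSecond)
    {
        double needed = readsPerSecond * ReadCostRu + writesPerSecond * AssumedWriteCostRu;
        return Math.Max(MinimumRuPerSecond, (int)Math.Ceiling(needed));
    }

    // True when the demanded RU/s exceeds the reservation, which is
    // when requests would start to be rate-limited.
    public static bool WouldBeThrottled(double demandedRuPerSecond, int reservedRuPerSecond)
    {
        return demandedRuPerSecond > reservedRuPerSecond;
    }
}
```

For example, under these assumptions 500 reads and 100 writes per second would call for about 1000 RU/s, while a small site with 100 reads and 10 writes per second would still be billed for the 400 RU/s minimum.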

If you distribute your data geographically, you will have to pay storage costs and reserved RUs for all the regions, so the total cost becomes approximately proportional to the number of regions where your data is distributed. The exact price details for your selected regions are available on the Azure pricing page.
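The proportionality to the number of regions can be written down as a small calculation. This is only a sketch: `CostEstimator` is a hypothetical helper, it assumes the same unit prices in every region, and the prices are inputs that you would look up on the Azure pricing page.

```csharp
using System;

public static class CostEstimator
{
    // Monthly cost = regions * (storage cost + reserved throughput cost).
    // Both unit prices are parameters; no real prices are hard-coded here.
    public static decimal EstimateMonthlyCost(
        int regions,
        decimal storageGb,
        decimal pricePerGbMonth,
        int reservedRuPerSecond,
        decimal pricePer100RuMonth)
    {
        decimal storage = storageGb * pricePerGbMonth;
        decimal throughput = (reservedRuPerSecond / 100m) * pricePer100RuMonth;
        return regions * (storage + throughput);
    }
}
```

With made-up prices of 0.25 per GB and 6 per 100 RU/s per month, 10 GB and 400 RU/s would come to 26.50 in one region and 79.50 when replicated to three regions.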

For development purposes, there are free options, but they, of course, have limitations. You can also download the Azure Cosmos DB Emulator and test your application locally.

Now let’s get to work with ‘Project Planetzine’

Azure Cosmos DB is useful for a wide range of applications, but it is especially beneficial in the following cases:

- Mobile applications, web applications or client applications that run globally

- Internet of Things

- Applications that contain existing code that already uses any of the supported APIs (MongoDB, Cassandra, or Gremlin)

- When the application or service has to be globally scalable

- When there is a need for elasticity (to handle quick growth or bursts)

- When low latency, high availability, and high throughput are required (and have to be guaranteed by an SLA)

- When data consistency has to be clearly defined and guaranteed by an SLA

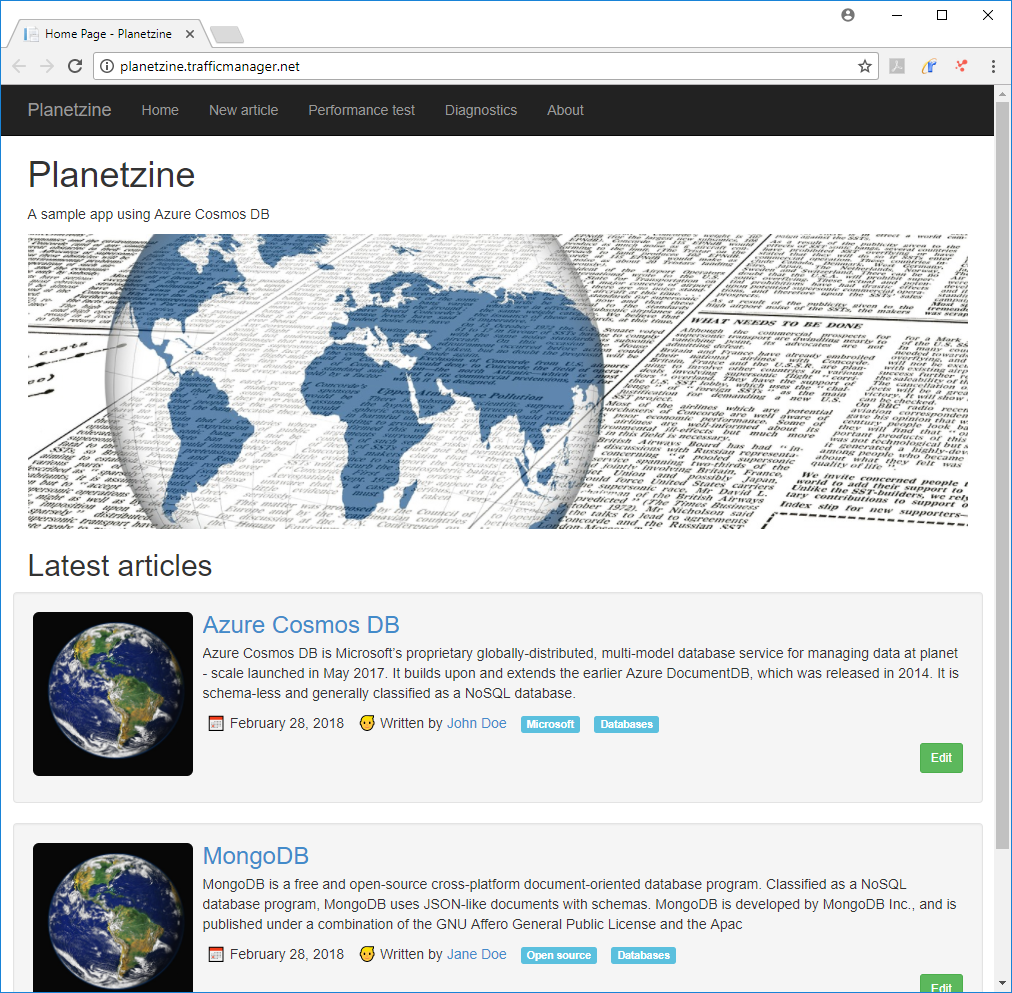

Now, imagine that we are starting a global magazine, “Planetzine.” This global magazine has to be scalable, elastic - and data consistency is important. Also, we need to make sure the website of this global magazine is very fast for visitors worldwide, so we need the content to be replicated globally.

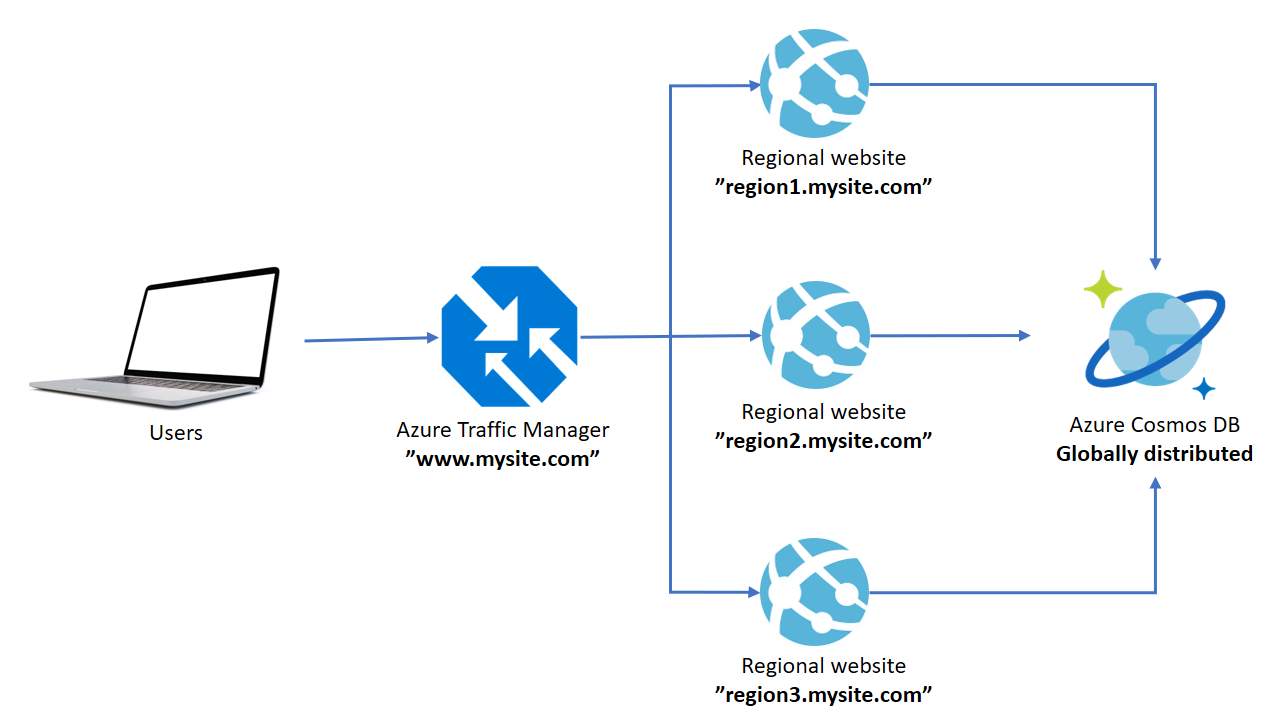

Planetzine Architecture

To serve website visitors from the nearest region, “Planetzine” uses:

- Azure Traffic Manager to route the visitors to the nearest regional website

- Regional websites (in any number of regions)

- Azure Cosmos DB database, which is replicated to multiple regions

You can run this sample scenario with any number of regional websites. For best performance, and to have read operations stay within the visitor’s region, you should replicate your Azure Cosmos DB database to the same regions as the websites.

Creating the Azure Services

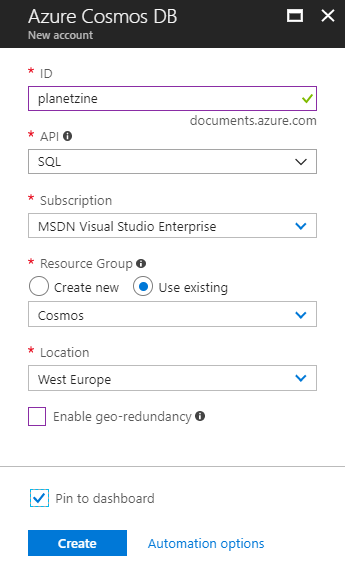

Let’s select the SQL API. Any API could be used, but my code is written for the SQL API.

Note: All the APIs are native to Cosmos DB and equally supported by Microsoft. For your own applications, you should select the API that works best for you.

Azure Cosmos DB

In the Azure Portal, click “New” and choose Cosmos DB:

- Choose the SQL API.

- Leave “Enable geo-redundancy” unchecked, but after creation, click “Replicate data globally” to set up the data replication to the other regions of your choice.

Consistency will by default be set to “Session”, which is fine.

There will be no costs for your Azure Cosmos DB until you create a collection, and you don’t need to create any collection manually.

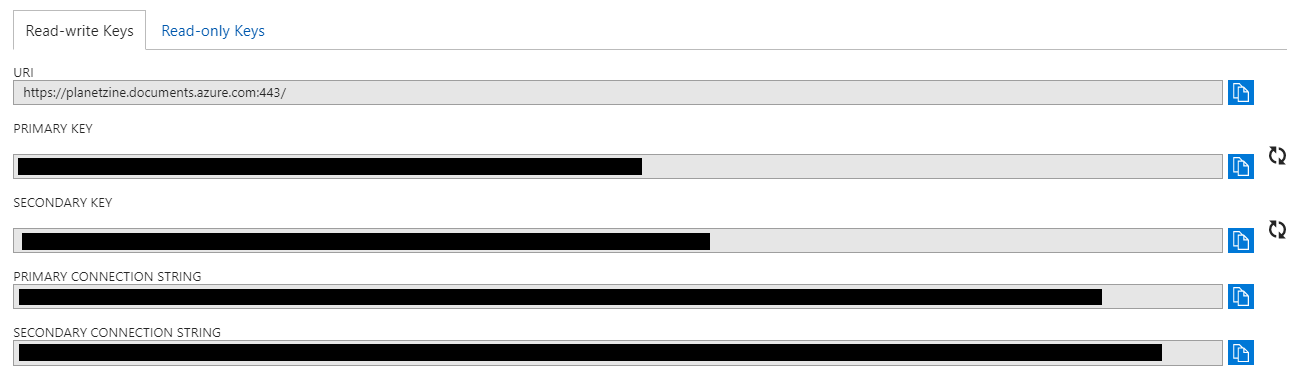

Note that you will later need your URI and PRIMARY KEY (or SECONDARY KEY), which can be found by clicking “Keys”:

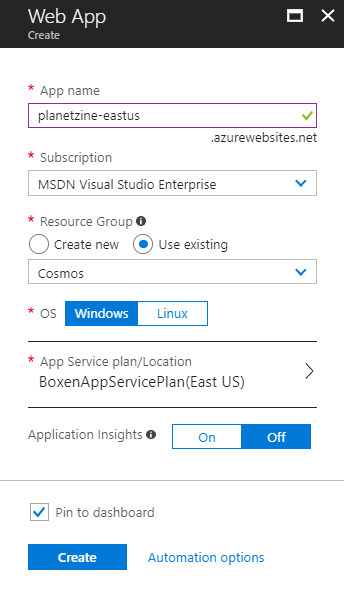

Azure Web Apps

In the Azure Portal, click “New” and choose Web App:

- Choose Windows as the OS.

- Create (or reuse) app service plans that are located in your desired regions.

- Note that setting a custom domain name on a web app that is integrated with Traffic Manager is only available for the Standard pricing tier (or higher). If you go to a lower tier, you will have to skip the Azure Traffic Manager.

- You should create web apps in the same regions that you are replicating your database to.

When completed, click on “Get publish profile” and save the file somewhere safe. You will need to import it into Visual Studio later.

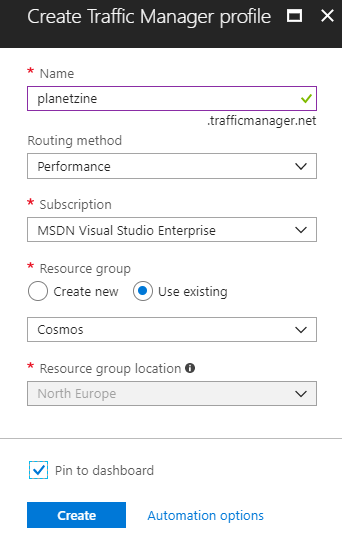

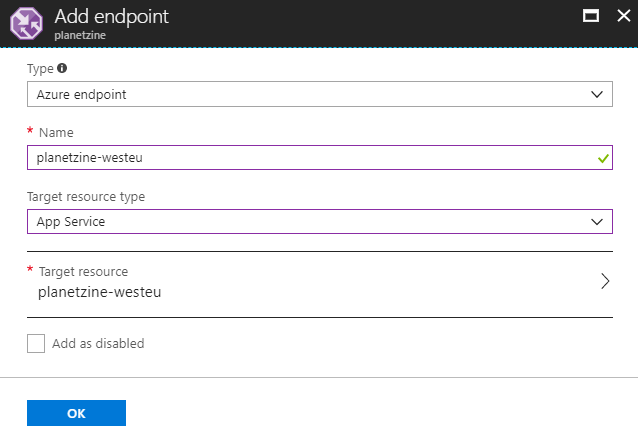

Azure Traffic Manager profile

The Azure Traffic Manager will help automatically route website visitors to the nearest region. It looks at the IP address of visitors to decide which Web App they should be sent to.
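Conceptually, routing a visitor to the nearest region means picking the endpoint with the lowest latency for that visitor. The sketch below only illustrates that idea and is not how Traffic Manager is implemented; `Endpoint`, `PerformanceRouting`, and the latency figures are hypothetical, and skipping unhealthy endpoints is an assumption of the sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Endpoint
{
    public string Name;
    public bool Healthy;
    public double LatencyMs; // assumed latency from the visitor's network to this region
}

public static class PerformanceRouting
{
    // Returns the healthy endpoint with the lowest latency,
    // or null when no endpoint is healthy.
    public static Endpoint Choose(IEnumerable<Endpoint> endpoints)
    {
        return endpoints
            .Where(e => e.Healthy)
            .OrderBy(e => e.LatencyMs)
            .FirstOrDefault();
    }
}
```

In this model, if the closest regional website is down, the visitor is sent to the next closest one instead of getting an error.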

In the Azure Portal, click “New” and choose to create a Traffic Manager profile:

- Choose Performance as the routing method (this means traffic will automatically go to the nearest location).

When completed, click “Endpoints” and add your Azure Web Apps.

The address will be “https://profilename.trafficmanager.net”. If you want to assign a custom domain (“www.yoursite.com”), follow these instructions.

Deploying the Web App

Now I suggest you download the source code for the web app. You will need Visual Studio 2017 (or equivalent) to build and publish the web app to your Azure accounts.

The web application has been developed in Visual Studio using ASP.NET MVC. However, it could easily have been developed using other platforms such as Node.js, Java or Python, since Azure Cosmos DB provides client libraries for multiple platforms.

This is what you need to do:

- Open Visual Studio.

- Edit Web.config (instructions below!) with your settings, including your Azure Cosmos DB EndpointURL and AuthKey.

- Optionally: Test run locally from Visual Studio.

- Import your publish profiles for the web apps.

- Publish to Azure and test each site.

- Also, test through your Azure Traffic Manager address (if you have that in place). You can see which real website you have connected to through the Diagnostics page.

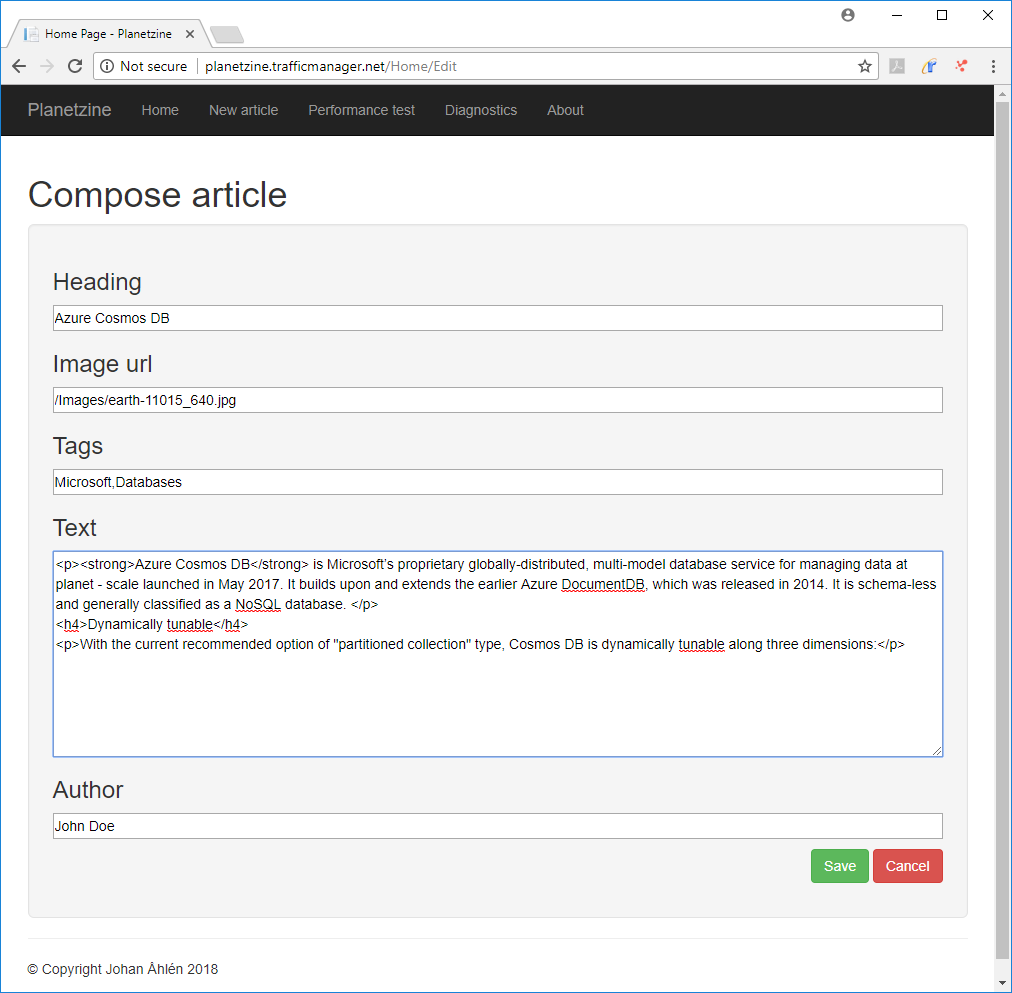

Here are some screenshots of what you should see:

Web.config

These are the settings in Web.config that you should review:

    <add key="ConnectionMode" value="Direct" />
    <add key="ConnectionProtocol" value="Tcp" />
    <add key="InitialThroughput" value="400" />
    <add key="MaxConnectionLimit" value="500" />
    <add key="DatabaseId" value="Planetzine" />
    <add key="ConsistencyLevel" value="Session" />
    <!-- CONFIGURE THESE! -->
    <add key="EndpointURL" value="https://planetzine.documents.azure.com:443/" />
    <add key="AuthKey" value="EnterYourSecretKeyHere" />
    <!-- CONFIGURE THESE! -->

These configuration parameters are read by the DbHelper class in the web app.

- The ConnectionMode and ConnectionProtocol are set to Direct/Tcp, which are the fastest options.

- MaxConnectionLimit sets the maximum number of simultaneous connections to the database. When running performance tests, it is important that you set this number high enough.

- The InitialThroughput is used when the web application is run for the first time and a new collection is created. It must be at least 400 RU/s. You can easily adjust the throughput (and costs) at any time through the Azure Portal or programmatically.

- EndpointURL is the endpoint address of your account. It can be found in the Azure Portal under the Keys tab. It is called “URI” in the Azure Portal.

- AuthKey is your secret authentication key. It can be found in the Azure Portal under the Keys tab. You can use either the “PRIMARY KEY”, or the “SECONDARY KEY.”
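Here is a minimal sketch of how settings like these could be read and validated at startup. It is not the sample's actual DbHelper code; `PlanetzineSettings` is a hypothetical class, and the two validation rules (the 400 RU/s minimum and rejecting the placeholder key) are assumptions added for illustration. The key names match the Web.config entries above.

```csharp
using System;
using System.Collections.Specialized;

// Hypothetical settings holder; the real DbHelper in the sample may differ.
public class PlanetzineSettings
{
    public string EndpointUrl;
    public string AuthKey;
    public string DatabaseId;
    public int InitialThroughput;
    public int MaxConnectionLimit;

    // Builds the settings from an appSettings-style collection and
    // fails early when something is missing or obviously wrong.
    public static PlanetzineSettings From(NameValueCollection appSettings)
    {
        var settings = new PlanetzineSettings
        {
            EndpointUrl = Require(appSettings, "EndpointURL"),
            AuthKey = Require(appSettings, "AuthKey"),
            DatabaseId = Require(appSettings, "DatabaseId"),
            InitialThroughput = int.Parse(Require(appSettings, "InitialThroughput")),
            MaxConnectionLimit = int.Parse(Require(appSettings, "MaxConnectionLimit"))
        };

        if (settings.InitialThroughput < 400)
            throw new ArgumentException("InitialThroughput must be at least 400 RU/s.");
        if (settings.AuthKey == "EnterYourSecretKeyHere")
            throw new ArgumentException("AuthKey still contains the placeholder value.");

        return settings;
    }

    private static string Require(NameValueCollection appSettings, string key)
    {
        string value = appSettings[key];
        if (string.IsNullOrWhiteSpace(value))
            throw new ArgumentException("Missing setting: " + key);
        return value;
    }
}
```

In an ASP.NET application you could pass `System.Configuration.ConfigurationManager.AppSettings` to `From`, since that property is a `NameValueCollection`. Failing at startup makes a forgotten key easier to spot than a failed database call later.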

The SQL API

Note: The following is already done if you download my source code.

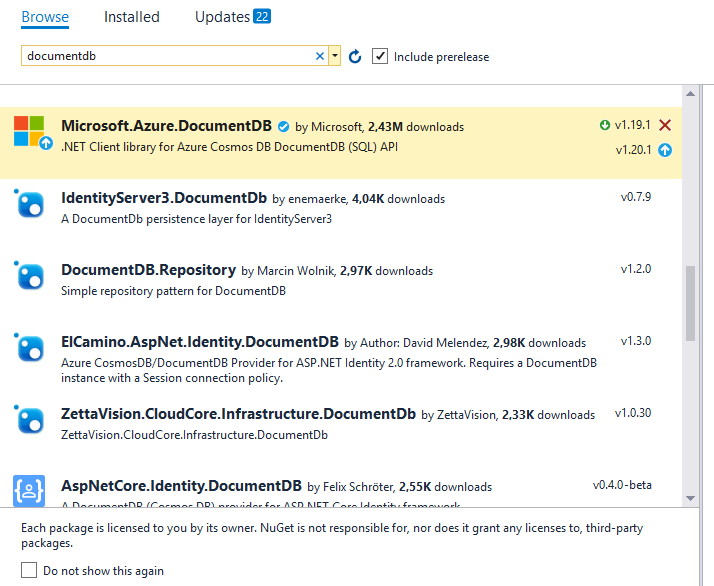

To use the Azure Cosmos DB SQL API in your ASP.NET web application, or any .NET application, you need to add a reference to the SDK. In Visual Studio, right-click the project, select “Manage NuGet Packages…”, and install the Microsoft.Azure.DocumentDB package (note that it is called DocumentDB because the API was previously known as the DocumentDB API).

The SQL API (Microsoft.Azure.DocumentDB) is pretty straightforward to use. Going through the API would be outside of the scope of this article, so I recommend looking at the Azure Cosmos DB: SQL API documentation.

Creation of databases and collections

Databases and collections are created (if they don’t already exist) every time the web app starts. This is done from Global.asax.cs.

If you are using a non-free Azure Cosmos DB account, remember to delete the databases/collections when you are done testing, so you don’t get charged unnecessarily.
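A create-if-missing step of this kind could look roughly like the sketch below, using the Microsoft.Azure.DocumentDB package. This is not the sample's actual Global.asax.cs code; `DatabaseSetup` and its method names are hypothetical. The collection name and partition key path are taken from the Article class in the sample.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class DatabaseSetup
{
    // Describes the articles collection, partitioned on /partitionId.
    public static DocumentCollection BuildArticlesCollection()
    {
        var collection = new DocumentCollection { Id = "articles" };
        collection.PartitionKey.Paths.Add("/partitionId");
        return collection;
    }

    // Creates the database and the collection only if they are missing,
    // so it is safe to call on every application start.
    public static async Task EnsureCreatedAsync(
        DocumentClient client, string databaseId, int initialThroughput)
    {
        await client.CreateDatabaseIfNotExistsAsync(new Database { Id = databaseId });

        await client.CreateDocumentCollectionIfNotExistsAsync(
            UriFactory.CreateDatabaseUri(databaseId),
            BuildArticlesCollection(),
            new RequestOptions { OfferThroughput = initialThroughput });
    }
}
```

The throughput passed in `RequestOptions` only applies when the collection is actually created; an existing collection keeps whatever throughput it already has.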

The DbHelper class

Note: This is information about the DbHelper class, which is also part of my source code.

One of the most important classes in my sample web application is the DbHelper class. It handles all communications with the Azure Cosmos DB database.

- The constructor of the DbHelper class reads all the settings.

- It then determines the preferred locations (for reading data) from the server’s actual region.

- It then creates a connection policy and applies the preferred locations to the connection policy.

- It then creates the client.

- Finally it calls OpenAsync on the client, to avoid a startup latency on the first request.
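The steps above can be sketched as follows with the Microsoft.Azure.DocumentDB package. This is an illustration rather than the sample's actual DbHelper code: `ClientFactory` is a hypothetical name, and how the server's own region is discovered is left out, so it is simply passed in as a parameter. Region names must match the names Azure uses, for example "North Europe".

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class ClientFactory
{
    // Puts the server's own region first so reads stay local; the other
    // replicated regions follow as fallbacks in the order given.
    public static ConnectionPolicy BuildPolicy(
        string serverRegion, IEnumerable<string> replicatedRegions, int maxConnectionLimit)
    {
        var policy = new ConnectionPolicy
        {
            ConnectionMode = ConnectionMode.Direct,
            ConnectionProtocol = Protocol.Tcp,
            MaxConnectionLimit = maxConnectionLimit
        };

        policy.PreferredLocations.Add(serverRegion);
        foreach (string region in replicatedRegions)
        {
            if (!string.Equals(region, serverRegion, StringComparison.OrdinalIgnoreCase))
                policy.PreferredLocations.Add(region);
        }
        return policy;
    }

    // Creates the client and opens it up front, so the first real
    // request does not pay the connection startup cost.
    public static async Task<DocumentClient> CreateAsync(
        string endpointUrl, string authKey, ConnectionPolicy policy)
    {
        var client = new DocumentClient(
            new Uri(endpointUrl), authKey, policy, ConsistencyLevel.Session);
        await client.OpenAsync();
        return client;
    }
}
```

A web app running in North Europe, with the database replicated to North Europe and West US, would call `BuildPolicy("North Europe", new[] { "West US", "North Europe" }, 500)` and get a policy that prefers North Europe and falls back to West US.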

Note that the DbHelper class is static and that there will never be more than one client. It’s best practice never to use multiple clients to communicate with a single endpoint.

Note also that all methods of the DbHelper class are asynchronous. This is also best practice since the SQL API itself is asynchronous, and we should avoid any blocking.

The DbHelper class supports the basic operations: creating a database, creating a collection, creating a document, upserting a document (which replaces the document if it already exists and creates it otherwise), reading, and querying.
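As an illustration of two of these operations, here is a minimal sketch of upserting and reading a document with the Microsoft.Azure.DocumentDB package. `ArticleStore` and its method names are hypothetical and are not taken from the sample's DbHelper.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

public static class ArticleStore
{
    // Builds the link to a collection, for example dbs/Planetzine/colls/articles.
    public static Uri CollectionUri(string databaseId, string collectionId)
    {
        return UriFactory.CreateDocumentCollectionUri(databaseId, collectionId);
    }

    // Replaces the document if one with the same id exists, otherwise creates it.
    public static Task UpsertAsync<T>(
        DocumentClient client, string databaseId, string collectionId, T item)
    {
        return client.UpsertDocumentAsync(CollectionUri(databaseId, collectionId), item);
    }

    // Reads one document by id. In a partitioned collection the partition
    // key value must be supplied as well.
    public static async Task<T> ReadAsync<T>(
        DocumentClient client, string databaseId, string collectionId,
        string id, string partitionKeyValue)
    {
        var response = await client.ReadDocumentAsync<T>(
            UriFactory.CreateDocumentUri(databaseId, collectionId, id),
            new RequestOptions { PartitionKey = new PartitionKey(partitionKeyValue) });
        return response.Document;
    }
}
```

Both methods are asynchronous and take the single shared client as a parameter, in line with the best practices mentioned above.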

The Article class

The structure of the Article class looks like this (in shortened form):

    using System;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Converters;

    public class Article
    {
        public const string CollectionId = "articles";
        public const string PartitionKey = "/partitionId";

        // Serialized as "id", the unique identifier of the document.
        [JsonProperty("id")]
        public Guid ArticleId;

        // Read-only: the partition key value is always the author.
        [JsonProperty("partitionId")]
        public string PartitionId => Author;

        [JsonProperty("heading")]
        public string Heading;

        [JsonProperty("imageUrl")]
        public string ImageUrl;

        [JsonProperty("body")]
        public string Body;

        [JsonProperty("tags")]
        public string[] Tags;

        [JsonProperty("visible")]
        public bool Visible;

        [JsonProperty("author")]
        public string Author;

        // Dates are stored as ISO 8601 strings.
        [JsonProperty("publishDate")]
        [JsonConverter(typeof(IsoDateTimeConverter))]
        public DateTime PublishDate;

        [JsonProperty("lastUpdate")]
        [JsonConverter(typeof(IsoDateTimeConverter))]
        public DateTime LastUpdate;
    }

- Note the attributes I have added to support JSON serialization.

- Note that I rename my ArticleId to “id”. This is because “id” is the reserved name the SQL API uses for the unique identifier of a document.

- Note also the partition key. Articles are partitioned by Author. I chose this partition key because it is a logical way to group articles, and it scales well as long as the articles are spread across many authors.
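One simple way to sanity-check a partition key such as Author is to look at how evenly the documents spread across its values. The sketch below is only an illustration; `PartitionSkew` is a hypothetical helper and the author names in the example are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PartitionSkew
{
    // Returns the share (0..1) of all documents that fall into the
    // largest logical partition. Values close to 1 mean that one
    // partition key value dominates.
    public static double LargestPartitionShare(IEnumerable<string> partitionKeyValues)
    {
        List<int> counts = partitionKeyValues
            .GroupBy(value => value)
            .Select(group => group.Count())
            .ToList();

        if (counts.Count == 0)
            return 0;

        return (double)counts.Max() / counts.Sum();
    }
}
```

For four articles written by "anna", "anna", "bob", and "carl", the largest partition holds half of the documents. If a single author wrote nearly everything, the share would approach 1, which would be a hint to consider a different partition key.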

Conclusion

InfoWorld’s article outlining the “Technology of the Year 2018” writes:

“Do you need a distributed NoSQL database with a choice of APIs and consistency models? That would be Microsoft’s Azure Cosmos DB.”

Azure Cosmos DB is easy to use. It supports multiple APIs (including a SQL API) and is compatible with MongoDB, Cassandra, and Gremlin. It also comes with client libraries in multiple different programming languages (with 5-minute quickstarts available).

Azure Cosmos DB is also useful for pretty much all sizes of applications, from small to planet-scale. You can start small and seamlessly grow data volume, throughput, and number of regions. Just be careful about partition keys. The choice of partition key is very important. Typically, good partition keys are customer IDs, user IDs, or device IDs. Don’t worry about having too many partitions. As your data grows, it is beneficial for the number of partitions to grow with it.

Azure Cosmos DB is also a stable database service that comes with comprehensive guarantees (SLAs) of high availability and low latencies. It empowers developers to make precise tradeoffs in latency, throughput, availability, and consistency by offering five well-defined consistency levels.

Finally, Microsoft has a feedback website where you can vote for Azure Cosmos DB ideas/features and submit your ideas for voting. Microsoft actively comments on the ideas, and it gives some pretty good hints about what new things to expect in Azure Cosmos DB in the future!

References

- Azure Cosmos DB documentation and quickstarts

- Azure Cosmos DB FAQ

- Planetzine source code on GitHub

- A technical overview of Azure Cosmos DB

- Partition and scale in Azure Cosmos DB

- InfoWorld’s 2018 Technology of the Year Award winners

- Azure Cosmos DB on Twitter

- My blog posts about Azure Cosmos DB

Johan Åhlén is an internationally recognized consultant and Data Platform MVP. He is passionate about innovation and new technologies, and shares this passion through articles, presentations and videos. Johan founded and co-managed PASS SQLRally Nordic, the largest SQLRally conference in the world. He also founded SolidQ in Sweden. Johan built up the Swedish SQL Server Group and served as the president of the user group between 2009-2016. In 2017 he founded the Skåne Azure User Group. He has been recognized by TechWorld/Computer Sweden as one of the top developers in Sweden.
