Beyond The Obvious With API Vision On Cognitive Services

Editor's note: The following post was written by Azure MVP Víctor Moreno *as part of our Technical Tuesday series. Mia Chang of the Technical Committee served as the Technical Reviewer of this piece.*

This is the decade of Artificial Intelligence. And thanks to Cloud Computing with Microsoft Azure, we all have the ability to use sophisticated tools like never before. This article is dedicated to Cognitive Services - and particularly, Cognitive Services’ Vision API - within Azure.

We’ll see how easy it is to analyze static images and videos with the Vision API, and walk through examples that extract valuable information for our applications.

Let’s begin by defining cognitive computing. It covers:

- Simulating human thinking

- Recognizing patterns

- Processing natural language

- Imitating the way the human brain works

So, as developers, how do these capabilities benefit our applications? They improve the user experience, surface important data for solving specific problems, and make existing and new business models even stronger. How can developers take advantage of this? The Microsoft Cloud offers services for hosting, storage and infrastructure virtualization, and, of course, cognitive computing as a service, called Cognitive Services.

Microsoft groups its cognitive services into five main categories:

- Knowledge: estimates how likely users are to choose particular products or services, based on their preferences and behavior.

- Language: helps uncover the sentiment behind text, such as feelings, key phrases and emotions.

- Speech: converts speech into text in real time and can then trigger actions depending on the user's intent.

- Search: provides suggestions based on user input. If you’re familiar with search engines like Google or Bing - as most people are - it's easy to understand the approach of this service.

- Vision: processes images and videos to recognize what exactly they represent.

The above cognitive services are exposed as APIs. As I said before, this article focuses on the Vision API.

What can the Vision API do?

The Vision category of Cognitive Services is a group of APIs for working with images and videos. At the time of writing, these are the options:

- Computer Vision: returns information about the visual content of an image, using tags and descriptions. For example, given a landscape photo, this API can describe in detail what is actually in it, such as “there is a tree beside the lake.”

- Video Indexer: a cloud service that extracts insights from videos using artificial intelligence technologies such as audio translation, speaker indexing, visual text recognition, voice activity detection and more.

- Analysis of emotions: identifies patterns, estimates the probability that two faces belong to the same person, and takes facial expressions as input to return the detected emotions (anger, contempt, disgust, fear, happiness, neutral, sadness and surprise).

The Face API

At the time of writing, there are two APIs for emotion analysis: the Face API and the Emotion API. Both analyze facial expressions and return a confidence score for each emotion, that is, how likely it is that the face is expressing it.
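To make the idea of a per-emotion confidence score concrete, here is a minimal Python sketch that picks the most likely emotion for one face. It assumes the scores arrive as a mapping from emotion name to a confidence between 0 and 1, roughly how the v1.0 Face API reported its `emotion` attribute; the function name `dominant_emotion` and the `min_confidence` cut-off are hypothetical, not part of any Microsoft SDK.

```python
from typing import Mapping, Optional, Tuple


def dominant_emotion(
    scores: Mapping[str, float], min_confidence: float = 0.5
) -> Optional[Tuple[str, float]]:
    """Return (emotion, confidence) for the highest-scoring emotion.

    Returns None when there are no scores, or when even the best score is
    below min_confidence, so callers can treat weak results as "unknown".
    (Hypothetical helper; the score mapping shape is an assumption.)
    """
    if not scores:
        return None
    # Pick the emotion whose confidence score is largest.
    name, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < min_confidence:
        return None
    return name, confidence
```

For example, `dominant_emotion({"happiness": 0.9, "neutral": 0.1})` returns `("happiness", 0.9)`, while a face whose best score falls below the cut-off returns `None`. Returning `None` instead of a weak guess lets the application show "unknown" rather than over-interpret an ambiguous expression.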

Now, let's see how easy it is to build an application with the Face Verification API:

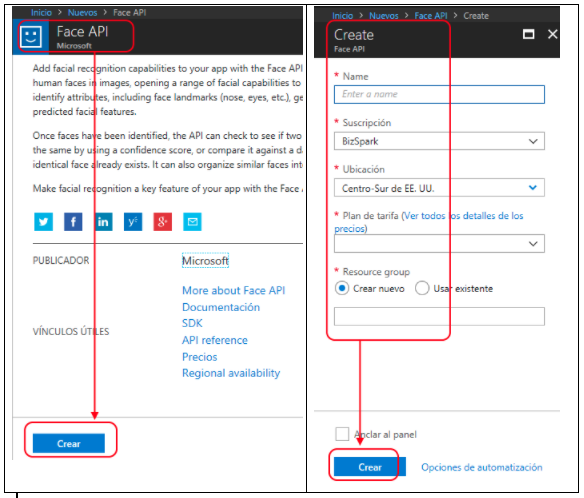

1) Go to Try Cognitive Services and choose "Get API Key" for the Face API.

The Face API is also available from the Azure Portal; if you have an Azure subscription, you can get your key there instead.

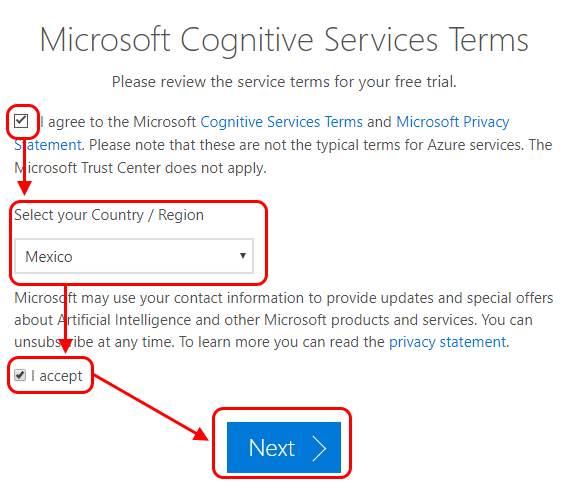

2) Accept Microsoft terms and conditions.

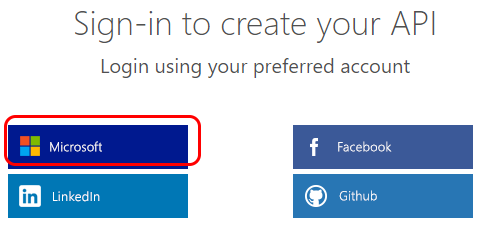

3) Choose the way you’d like to be authenticated.

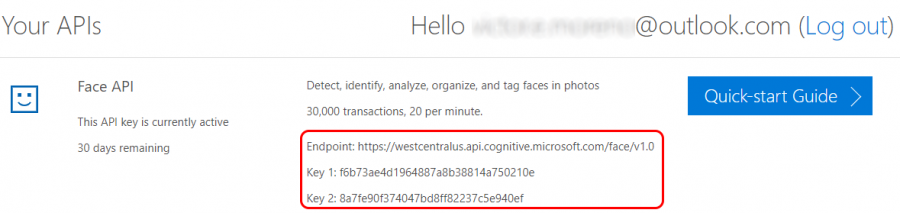

4) Copy the Face Verification API key you are given (you can paste it into Notepad in the meantime).

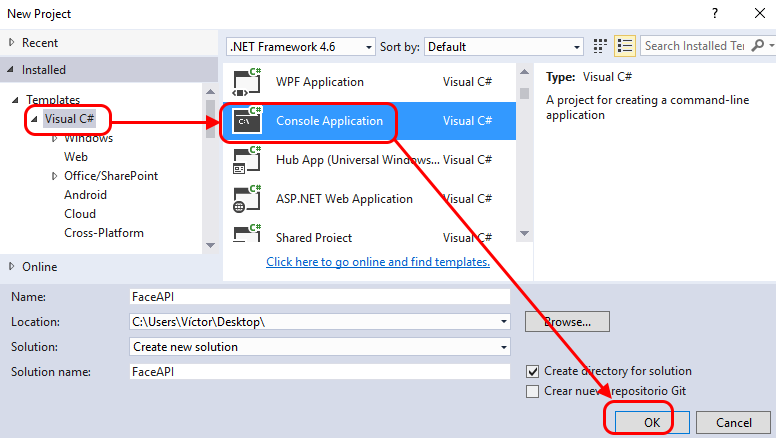

5) Create a console project in Visual Studio.

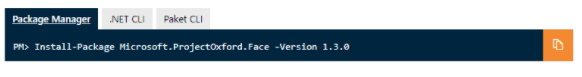

6) Get the Microsoft Face API Windows SDK. We can install it from Visual Studio by clicking:

Tools Menu ➔ NuGet Package Manager ➔ Package Manager Console

7) Write the face detection code. You can get a complete project from GitHub by clicking here. Once the project is open, we need to make two changes: specify the path of the image to analyze, and replace the API key with the new key we got from the Azure Portal.
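The complete project is a Visual Studio console app; to show what such an app does under the hood, here is a minimal Python sketch of the same REST call using only the standard library. It assumes the v1.0 `detect` endpoint shown later in this article, the `Ocp-Apim-Subscription-Key` header that Cognitive Services uses for authentication, and a hypothetical attribute list (`age,gender,emotion`). Microsoft has since retired several face attributes, including emotion, age and gender, so treat the parameters as a historical example and check the current Face API reference before relying on them.

```python
import json
import urllib.parse
import urllib.request

# Endpoint as shown in this article; your region and API version may differ.
FACE_DETECT_URL = "https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect"


def build_face_detect_request(
    image_bytes: bytes, subscription_key: str, attributes: str = "age,gender,emotion"
) -> urllib.request.Request:
    """Build (without sending) a POST request that uploads raw image bytes."""
    query = urllib.parse.urlencode(
        {
            "returnFaceId": "true",
            "returnFaceLandmarks": "false",
            # Hypothetical attribute list; some of these are now retired.
            "returnFaceAttributes": attributes,
        }
    )
    return urllib.request.Request(
        url=f"{FACE_DETECT_URL}?{query}",
        data=image_bytes,
        headers={
            # Cognitive Services authenticates REST calls with this header.
            "Ocp-Apim-Subscription-Key": subscription_key,
            # Raw binary upload rather than a JSON body with an image URL.
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


def detect_faces(image_path: str, subscription_key: str) -> list:
    """Read a local image, send it to the detect endpoint, and decode the JSON reply."""
    with open(image_path, "rb") as image_file:
        request = build_face_detect_request(image_file.read(), subscription_key)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `detect_faces("face.jpg", key)` (with a hypothetical local file) sends the image and returns the decoded JSON list. The request builder is kept separate so it can be inspected without network access.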

When we run the project, we need an image to analyze. In this case, we will use the handsome guy on the left.

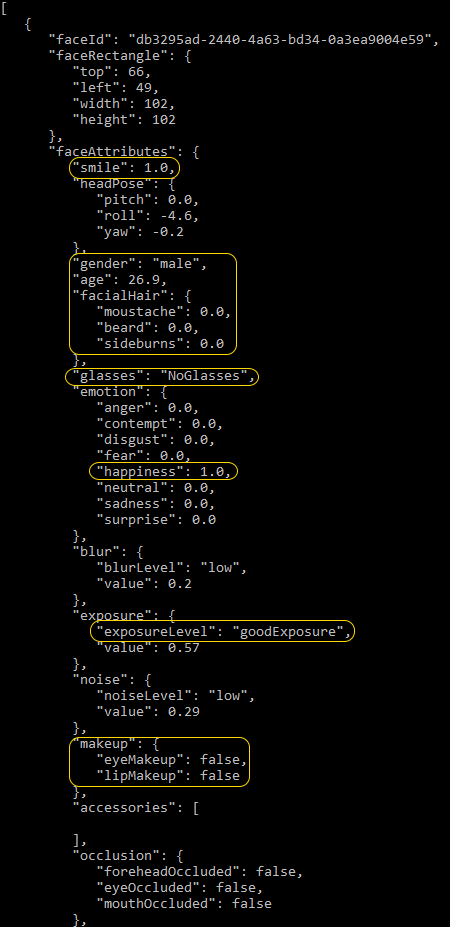

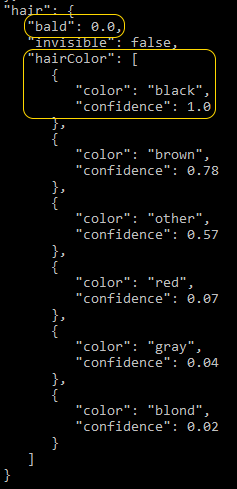

The result comes back in JSON format. Below is the analysis for this image; I’ve circled in yellow some of the fields the API returned for it.

https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect
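Once the JSON arrives, a few lines of code can turn it into something readable. The sketch below assumes each detected face carries a `faceRectangle` (with `left`, `top`, `width`, `height`) and, when attributes were requested, a `faceAttributes` object with `age` and `gender`, which is assumed to match the v1.0 detect response. `summarize_faces` is a hypothetical helper; missing fields are shown as placeholders instead of raising errors.

```python
from typing import Any, Dict, List


def summarize_faces(faces: List[Dict[str, Any]]) -> List[str]:
    """Turn a detect response (a JSON list of faces) into one readable line per face."""
    lines = []
    for index, face in enumerate(faces, start=1):
        # Field names are assumptions based on the v1.0 detect response.
        rect = face.get("faceRectangle", {})
        attrs = face.get("faceAttributes", {})
        lines.append(
            f"Face {index}: at ({rect.get('left', '?')}, {rect.get('top', '?')}), "
            f"{rect.get('width', '?')}x{rect.get('height', '?')} px, "
            f"age={attrs.get('age', 'n/a')}, gender={attrs.get('gender', 'n/a')}"
        )
    return lines
```

Because every lookup uses a default, the same helper works whether or not face attributes were requested.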



Computer Vision

The Computer Vision API offers four main capabilities:

1) Analyzing image content: this is dedicated to recognizing what is happening in environments like cities, forests, parks, the sea, restaurants and parties; basically, any possible place or situation. Its functions are:

   - Detecting whether adult or otherwise impactful content is present.

   - Identifying color schemes.

   - Applying tags and descriptions to each entity found (people, animals, vehicles, buildings and so on).

2) Reading text: this is dedicated to recognizing human handwriting. This API allows us to transcribe almost any style of handwriting into text.

3) Recognizing celebrities: this is dedicated to recognizing celebrities such as presidents, actors, businesspeople and journalists, as well as famous places around the world.

4) Analyzing video in near real time: this is dedicated to recognizing things in a video frame by frame, such as actions, clothes, people, places and much more.

As you can see, Computer Vision covers many aspects relevant to images. It works with static images and videos.
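As a rough sketch of how the first and third capabilities map onto a single request, the Python below builds (but does not send) a call to the v1.0 `analyze` endpoint used later in this article. It assumes the `visualFeatures` parameter covers the image-content functions (adult content, color, tags, description) and the `details` parameter enables the celebrity and landmark models; handwriting recognition and near-real-time video analysis use other endpoints and are not shown. The image URL you pass in is a placeholder.

```python
import json
import urllib.parse
import urllib.request

# Endpoint as shown in this article; region and version may differ today.
ANALYZE_URL = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/analyze"


def build_analyze_request(image_url: str, subscription_key: str) -> urllib.request.Request:
    """Build (without sending) an analyze request for an image hosted at a public URL."""
    query = urllib.parse.urlencode(
        {
            # Image-content functions: adult content, color scheme, tags, description.
            "visualFeatures": "Adult,Color,Tags,Description",
            # Domain-specific models: celebrities and famous places (landmarks).
            "details": "Celebrities,Landmarks",
            "language": "en",
        }
    )
    # The image is referenced by URL in a small JSON body instead of uploaded.
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url=f"{ANALYZE_URL}?{query}",
        data=body,
        headers={
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it is one more line, `urllib.request.urlopen(build_analyze_request(url, key))`, after which the response body is the JSON analysis.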

Now, let's see how easy it is to build an application with the Computer Vision API:

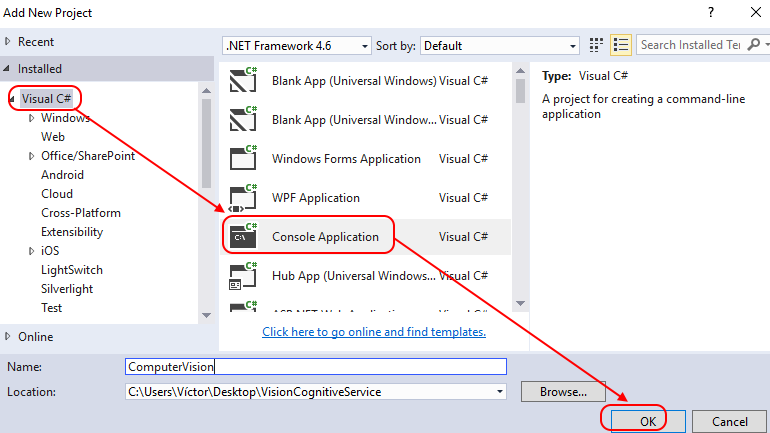

1) Create a console project in Visual Studio:

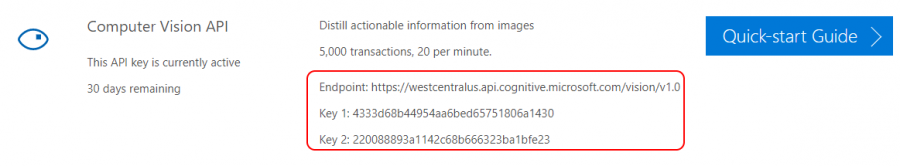

2) Write the Computer Vision analysis code. You can get a complete project from GitHub by clicking here. Just like with the Face Verification API, once the project is open we need to make two changes: specify the path of the image to analyze, and replace the API key with the new key we got from the Azure Portal.

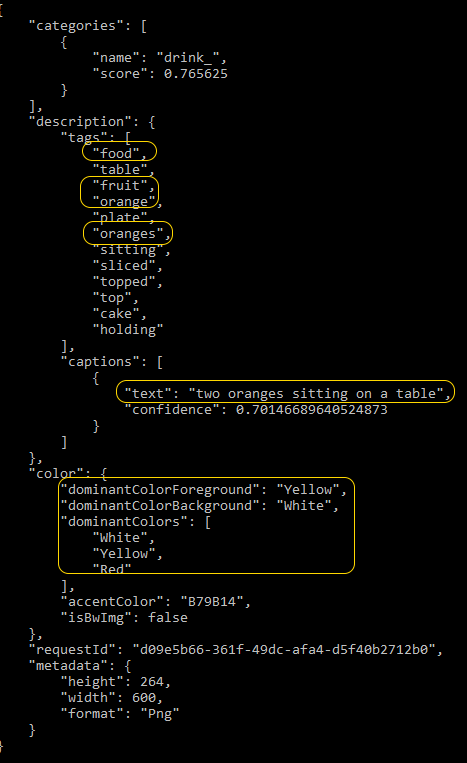

We’ll analyze the image below:

The result comes back in JSON format. Below is the analysis for this image; I’ve circled in yellow some of the fields the API returned for it.

https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/analyze

Above is the Computer Vision endpoint used for this demonstration. As you can see, using Cognitive Services comes down to a simple HTTPS call. Please be aware that this endpoint may change in the future.
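Because the response is plain JSON, pulling out the most useful parts takes little code. This sketch assumes the v1.0 analyze response shape, with captions under `description.captions` (each with `text` and `confidence`) and a top-level `tags` list (each with `name` and `confidence`); the helper names and the 0.8 threshold are hypothetical choices for illustration.

```python
from typing import Any, Dict, List, Optional


def best_caption(result: Dict[str, Any]) -> Optional[str]:
    """Return the highest-confidence caption text, or None if there are no captions."""
    captions = result.get("description", {}).get("captions", [])
    if not captions:
        return None
    return max(captions, key=lambda c: c.get("confidence", 0.0)).get("text")


def confident_tags(result: Dict[str, Any], threshold: float = 0.8) -> List[str]:
    """Return tag names whose confidence meets the threshold, most confident first."""
    tags = [t for t in result.get("tags", []) if t.get("confidence", 0.0) >= threshold]
    tags.sort(key=lambda t: t.get("confidence", 0.0), reverse=True)
    return [t["name"] for t in tags]
```

On a landscape like the one described earlier, `best_caption` returns the single sentence the service is most confident about, and `confident_tags` drops low-confidence guesses before they reach users.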

Video Indexer

Video Indexer is an API that allows us to extract useful metadata from any video. We can get insights like spoken words, on-screen text, faces and more. Imagine you are watching a video in which many things are happening, and you need to identify when something very specific occurs, such as when the word “breakfast” is first mentioned in a movie. Video Indexer lets us locate that kind of data within the video.
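The “breakfast” example boils down to a search over time-stamped transcript segments. The sketch below assumes a simplified, hypothetical `TranscriptLine` shape (text plus start time in seconds) rather than Video Indexer's actual JSON schema, which you would first map into it. Matching is case-insensitive on whole words, so “Breakfast!” counts but “breakfasts” does not.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class TranscriptLine:
    """One spoken segment: its text and where it starts in the video, in seconds.

    This is a simplified, hypothetical shape, not Video Indexer's JSON schema.
    """

    text: str
    start_seconds: float


def first_mention(lines: Iterable[TranscriptLine], word: str) -> Optional[float]:
    """Return the start time of the earliest segment that contains `word`."""
    target = word.lower()
    hits = [
        line.start_seconds
        for line in lines
        # Compare whole words, ignoring case and surrounding punctuation.
        if target in (token.strip(".,!?;:\"'").lower() for token in line.text.split())
    ]
    # min() keeps the result correct even if segments are not in time order.
    return min(hits) if hits else None
```

With segments starting at 0.0 s ("Good morning everyone."), 12.5 s ("Let's grab breakfast!") and 40.0 s ("Breakfast was great"), `first_mention(lines, "breakfast")` returns `12.5`.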

Here’s how easy it is to build an application with the Video Indexer API:

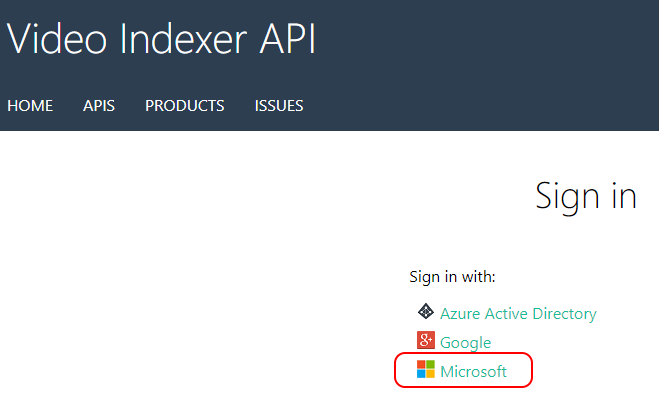

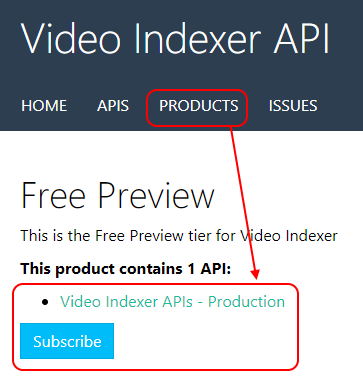

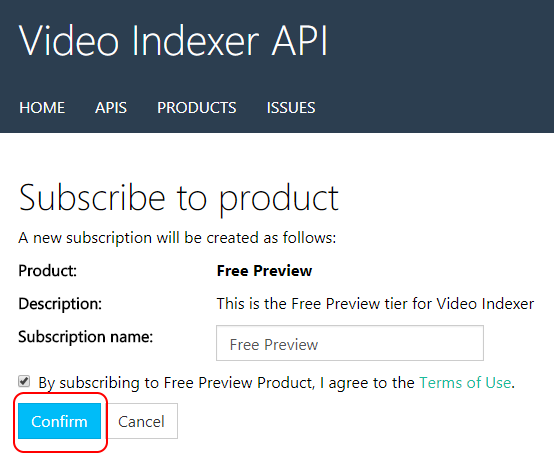

1) Sign in to the Video Indexer API portal.

2) Go to the Products menu and click the Subscribe button.

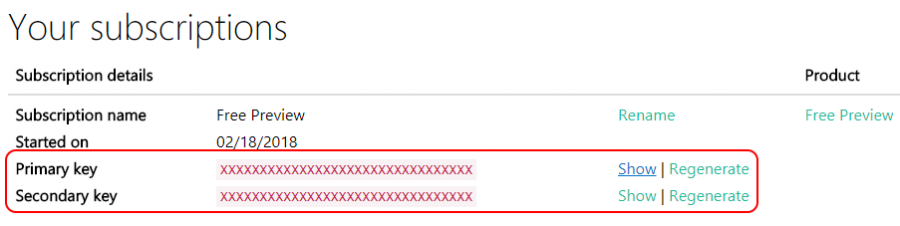

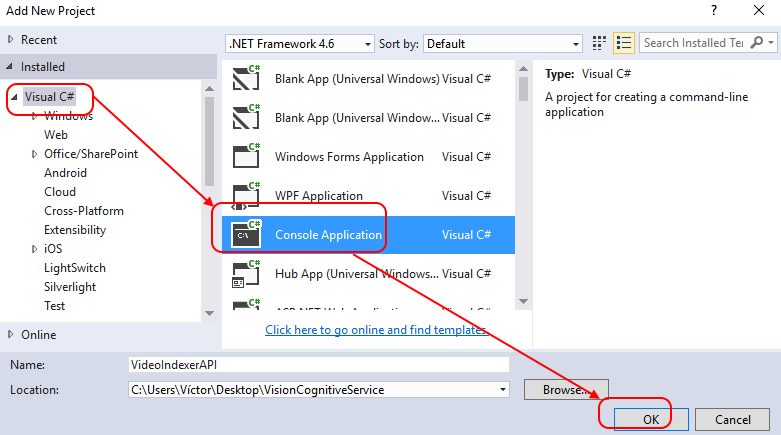

3) Create a console project in Visual Studio

4) Write the code for the Video Indexer API. Once again, you can get a complete project from GitHub by clicking here. Once the project is open, we need to make the same two changes: specify the path of the video to analyze, and replace the API key with the new key we got from the Azure Portal.

When we run the project, we need to choose a video to analyze. In this case, we will use this video: https://sec.ch9.ms/ch9/b581/490ef9a8-6e04-46c7-a12b-0fb9fc86b581/HappyBirthdaydotNETwithDanFernandez.mp4

Here’s a screenshot from it - a little conversation between two Microsoft employees.

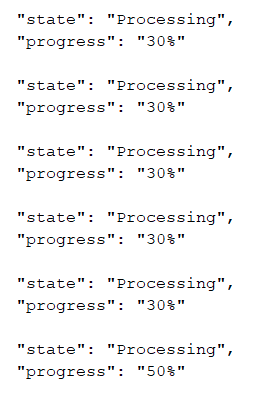

Depending on the size of the video, the analysis can take a while, but in console mode we can follow its progress:

All of the speech is transcribed and split into segments according to when it is spoken in the video.

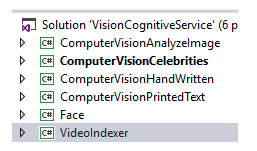
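The progress messages in the console come from repeatedly asking the service whether indexing has finished. A minimal polling loop might look like the Python below. It assumes the service reports states such as "Uploaded", "Processing", "Processed" and "Failed" (the last two treated as final); `get_state` is a hypothetical callback standing in for the real status request, and `sleep` is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable


def wait_for_index(
    get_state: Callable[[], str],
    poll_seconds: float = 10.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until processing finishes, printing progress like a console app would.

    `get_state` is a hypothetical callback that asks the service for the current
    state; "Processed" and "Failed" are assumed to be the terminal states.
    """
    waited = 0.0
    while True:
        state = get_state()
        print(f"[{waited:>6.0f}s] state: {state}")
        if state in ("Processed", "Failed"):
            return state
        if waited >= timeout_seconds:
            raise TimeoutError(f"still '{state}' after {waited:.0f} seconds")
        sleep(poll_seconds)
        waited += poll_seconds
```

A fixed interval keeps the sketch simple; for long videos, a longer interval or exponential backoff reduces the number of status calls.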

The complete JSON result can be downloaded from here. I also invite you to take a look at this project in my GitHub repositories. It’s a Visual Studio solution with six projects.

Each project uses a specific Vision API from Cognitive Services. Feel free to download it, update it and include it in your own applications.


While in the past software like this would have been expensive and difficult to implement, using the Cognitive Services Vision APIs is simple, fast and powerful. I hope this article gives you a clear vision of what Cognitive Services are, and how the Vision APIs in particular can extract valuable information from images and videos.

Víctor Moreno is a specialist in Cloud Computing, and has 10 years of experience working in software development with Microsoft, .NET and open source technologies. He's been a Microsoft Azure MVP since 2015. He blogs in Spanish here. Follow him on Twitter @vmorenoz
