Using R to Perform Filesystem Operations on Azure Data Lake Store

In this article, you will learn how to use the WebHDFS REST APIs from R to perform filesystem operations on Azure Data Lake Store (ADLS). We cover the following six operations, using the httr package to make the REST calls:

- Create folders

- List folders

- Upload data

- Read data

- Rename a file

- Delete a file

Prerequisites

1. An Azure subscription. See Get Azure free trial.

2. Create an Azure Data Lake Store account using the following guide.

3. Create an Azure Active Directory application. You use the Azure AD application to authenticate the Data Lake Store application with Azure AD. There are two approaches to authenticating with Azure AD: end-user authentication and service-to-service authentication. For instructions and more information on how to authenticate, see Authenticate with Data Lake Store using Azure Active Directory.

4. Obtain an authorization token from the Azure AD application created above. There are several ways to obtain this token, for example the REST API, the Azure Active Directory code samples, or ADAL for Python. The token is sent in the Authorization header of every request. You can also obtain the token directly from R; to do so, you need the client ID, client secret, and tenant ID of your Azure AD application.

5. Microsoft R Open, Microsoft R Client, or Microsoft R Server.

6. An integrated development environment (IDE) such as RStudio or R Tools for Visual Studio (RTVS).

Install the required httr package along with its dependencies:

install.packages("httr", dependencies = TRUE)
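Step 4 of the prerequisites mentions obtaining the access token from R. Below is a minimal sketch with httr, assuming service-to-service authentication through the OAuth 2.0 client-credentials grant against the Azure AD v1 token endpoint. The helper names aad_token_url and get_adls_token are hypothetical, and the resource URI https://management.core.windows.net/ is an assumption you should check against your Azure AD setup.

```r
# Sketch: obtain an Azure AD access token for Data Lake Store from R using
# the OAuth 2.0 client-credentials grant (service-to-service authentication).
# Helper names are hypothetical; the default resource URI is an assumption.

# Build the Azure AD v1 token endpoint for a tenant.
aad_token_url <- function(tenant_id) {
  sprintf("https://login.microsoftonline.com/%s/oauth2/token", tenant_id)
}

# Request a token and return the access_token string from the JSON reply.
get_adls_token <- function(tenant_id, client_id, client_secret,
                           resource = "https://management.core.windows.net/") {
  r <- httr::POST(
    aad_token_url(tenant_id),
    body = list(
      grant_type    = "client_credentials",
      resource      = resource,
      client_id     = client_id,
      client_secret = client_secret
    ),
    encode = "form"  # sent as application/x-www-form-urlencoded
  )
  httr::stop_for_status(r)  # turn HTTP 4xx/5xx into an R error
  httr::content(r, as = "parsed")$access_token
}

# Usage (replace the placeholders with your own values):
# token <- get_adls_token("<tenant id>", "<client id>", "<client secret>")
```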

In all the code snippets below:

- Replace <AD AUTH TOKEN> with the authorization token you obtained in step 4 of Prerequisites.

- Replace <yourstorename> with the Data Lake Store name that you created in step 2 of Prerequisites.

Create Folders

Create a directory called mytempdir under the root folder of your Data Lake Store account.
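A minimal sketch of this step, assuming the WebHDFS MKDIRS operation is issued as a PUT request with the token in a Bearer Authorization header. The helper names adls_mkdirs_url and adls_mkdirs are hypothetical; the URL builder is kept separate so it can be checked without network access.

```r
# Sketch: create a folder with the WebHDFS MKDIRS operation.
# Helper names are hypothetical.

# Build the MKDIRS URL for a path relative to the store root.
adls_mkdirs_url <- function(store, path) {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=MKDIRS",
          store, sub("^/", "", path))
}

# Create `path` and return the boolean flag from the JSON reply.
adls_mkdirs <- function(store, token, path) {
  r <- httr::PUT(
    adls_mkdirs_url(store, path),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  httr::content(r, as = "parsed")$boolean
}

# Usage:
# adls_mkdirs("<yourstorename>", "<AD AUTH TOKEN>", "mytempdir")
```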

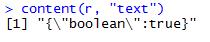

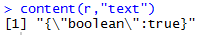

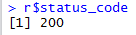

If the operation completes successfully, the service returns HTTP status 200 with the JSON body {"boolean":true}.

List Folders

List all the folders under the root folder of your Data Lake Store account.
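One way to issue the WebHDFS LISTSTATUS request and turn the JSON reply into a data frame is sketched below. The helpers adls_list_url and adls_list are hypothetical, and parsing relies on jsonlite, which httr already depends on.

```r
# Sketch: list the entries under a folder with WebHDFS LISTSTATUS.
# Helper names are hypothetical.

# Build the LISTSTATUS URL; an empty path means the store root.
adls_list_url <- function(store, path = "") {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=LISTSTATUS",
          store, sub("^/", "", path))
}

# Return the FileStatus entries as a data frame (one row per entry).
adls_list <- function(store, token, path = "") {
  r <- httr::GET(
    adls_list_url(store, path),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  txt <- httr::content(r, as = "text", encoding = "UTF-8")
  # jsonlite's default simplification turns the FileStatus array into a
  # data frame with columns such as pathSuffix, type and length.
  jsonlite::fromJSON(txt)$FileStatuses$FileStatus
}

# Usage: names of the folders under the root
# entries <- adls_list("<yourstorename>", "<AD AUTH TOKEN>")
# entries$pathSuffix[entries$type == "DIRECTORY"]
```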

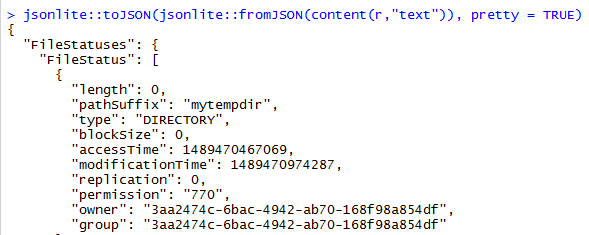

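If the operation completes successfully, the service returns a JSON object whose FileStatuses.FileStatus array contains one entry (pathSuffix, type, length, and so on) for each item under the root folder.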

Upload Data

Upload any file to a particular directory in your Data Lake Store account. In this example, we save the iris data frame to a .csv file and upload that iris.csv file to mytempdir.
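A sketch of the upload, assuming a PUT to the WebHDFS CREATE operation with the file passed through httr::upload_file(). The helper names are hypothetical, the local copy of iris.csv is written to tempdir() only to keep the example tidy, and the write=true query parameter is an assumption meant to avoid a 307 redirect before the data is accepted.

```r
# Sketch: upload a local file with the WebHDFS CREATE operation.
# Helper names are hypothetical.

# Build the CREATE URL. `write=true` is an assumption: it is intended to make
# the service accept the data directly instead of replying with a redirect.
adls_create_url <- function(store, path) {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=CREATE&write=true",
          store, sub("^/", "", path))
}

# Upload `local_file` to `path`; returns the HTTP status code.
adls_upload_file <- function(store, token, local_file, path) {
  r <- httr::PUT(
    adls_create_url(store, path),
    body = httr::upload_file(local_file),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  httr::status_code(r)  # 201 (Created) is expected on success
}

# Save iris as a local CSV file.
local_csv <- file.path(tempdir(), "iris.csv")
write.csv(iris, local_csv, row.names = FALSE)

# Usage:
# adls_upload_file("<yourstorename>", "<AD AUTH TOKEN>", local_csv, "mytempdir/iris.csv")
```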

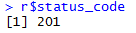

If the upload completes successfully, the service returns HTTP status 201 (Created).

You can also upload an R data frame directly to Data Lake Store without saving it as a .csv file first: convert the data frame to a CSV string using textConnection() and pass that string as the body parameter of the request.
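The data frame variant can be sketched as follows. df_to_csv_string() writes the data frame into an in-memory textConnection() and returns one CSV string, which httr sends unchanged as the request body. Both helper names are hypothetical, and the write=true query parameter is an assumption meant to avoid a 307 redirect.

```r
# Sketch: upload a data frame as CSV text without writing a local file.
# Helper names are hypothetical.

# Convert a data frame to a single CSV string via an in-memory text connection.
df_to_csv_string <- function(df) {
  tc <- textConnection(NULL, open = "w")  # NULL: output stays in the connection
  on.exit(close(tc))
  write.csv(df, tc, row.names = FALSE)
  paste0(paste(textConnectionValue(tc), collapse = "\n"), "\n")
}

# Upload `df` as CSV to `path`; returns the HTTP status code.
adls_upload_df <- function(store, token, df, path) {
  url <- sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=CREATE&write=true",
                 store, sub("^/", "", path))
  r <- httr::PUT(
    url,
    body = df_to_csv_string(df),  # a character body is sent as-is
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  httr::status_code(r)
}

csv_text <- df_to_csv_string(iris)

# Usage:
# adls_upload_df("<yourstorename>", "<AD AUTH TOKEN>", iris, "mytempdir/iris.csv")
```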

You should see a response like this if the operation completes successfully:

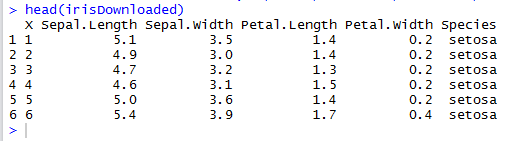

Read Data

Let's read the data in the iris.csv file uploaded in the previous step into an R data frame named irisDownloaded.
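A minimal sketch of the read, assuming the WebHDFS OPEN operation and httr's default behaviour of following redirects. adls_open_url and adls_read_csv are hypothetical names; the whole file is held in memory before read.csv() parses it.

```r
# Sketch: read a CSV file from the store with the WebHDFS OPEN operation.
# Helper names are hypothetical.

# Build the OPEN URL for a path relative to the store root.
adls_open_url <- function(store, path) {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=OPEN",
          store, sub("^/", "", path))
}

# Download the file into memory and parse it as CSV.
adls_read_csv <- function(store, token, path) {
  r <- httr::GET(
    adls_open_url(store, path),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  read.csv(text = httr::content(r, as = "text", encoding = "UTF-8"))
}

# Usage:
# irisDownloaded <- adls_read_csv("<yourstorename>", "<AD AUTH TOKEN>", "mytempdir/iris.csv")
```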

If the operation completes successfully, the response body contains the contents of iris.csv.

To read large files from ADLS, pass write_disk() to httr::GET so that the response body is saved directly to disk instead of being loaded into memory.
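The same OPEN request can stream to a file instead. In this sketch, the hypothetical adls_download() passes httr::write_disk() to httr::GET, so the body is written to dest rather than kept in R's memory; the file can then be read with read.csv() or processed in chunks.

```r
# Sketch: download a (possibly large) file straight to disk.
# The function name is hypothetical.
adls_download <- function(store, token, path, dest) {
  url <- sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=OPEN",
                 store, sub("^/", "", path))
  r <- httr::GET(
    url,
    httr::add_headers(Authorization = paste("Bearer", token)),
    httr::write_disk(dest, overwrite = TRUE)  # stream the body into `dest`
  )
  httr::stop_for_status(r)
  invisible(dest)
}

# Usage:
# dest <- adls_download("<yourstorename>", "<AD AUTH TOKEN>", "mytempdir/iris.csv",
#                       file.path(tempdir(), "iris_downloaded.csv"))
# irisDownloaded <- read.csv(dest)
```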

Rename a File

Let's rename the file iris.csv to iris2.csv.
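A sketch of the rename, assuming the WebHDFS RENAME operation with the new absolute path in the destination query parameter. adls_rename_url and adls_rename are hypothetical names. The function returns the boolean flag from the reply, since a failed rename may be reported as {"boolean":false} rather than as an HTTP error.

```r
# Sketch: rename (move) a file with the WebHDFS RENAME operation.
# Helper names are hypothetical.

# Build the RENAME URL; `destination` must be an absolute path.
adls_rename_url <- function(store, from, to) {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=RENAME&destination=/%s",
          store, sub("^/", "", from), sub("^/", "", to))
}

# Rename `from` to `to`; returns the boolean flag from the JSON reply.
adls_rename <- function(store, token, from, to) {
  r <- httr::PUT(
    adls_rename_url(store, from, to),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  httr::content(r, as = "parsed")$boolean
}

# Usage:
# adls_rename("<yourstorename>", "<AD AUTH TOKEN>",
#             "mytempdir/iris.csv", "mytempdir/iris2.csv")
```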

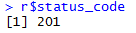

If the rename completes successfully, the service returns the JSON body {"boolean":true}.

Delete a File

Let's delete the file iris2.csv.
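A sketch of the delete, assuming the WebHDFS DELETE operation sent with httr::DELETE(). adls_delete_url and adls_delete are hypothetical names.

```r
# Sketch: delete a file with the WebHDFS DELETE operation.
# Helper names are hypothetical.

# Build the DELETE URL for a path relative to the store root.
adls_delete_url <- function(store, path) {
  sprintf("https://%s.azuredatalakestore.net/webhdfs/v1/%s?op=DELETE",
          store, sub("^/", "", path))
}

# Delete `path`; returns the boolean flag from the JSON reply.
adls_delete <- function(store, token, path) {
  r <- httr::DELETE(
    adls_delete_url(store, path),
    httr::add_headers(Authorization = paste("Bearer", token))
  )
  httr::stop_for_status(r)
  httr::content(r, as = "parsed")$boolean
}

# Usage:
# adls_delete("<yourstorename>", "<AD AUTH TOKEN>", "mytempdir/iris2.csv")
```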

If the delete completes successfully, the service returns the JSON body {"boolean":true}.
