From the MVPs: Understanding the Windows Server Failover Cluster Quorum in Windows Server 2012 R2

This is the 40th in our series of guest posts by Microsoft Most Valuable Professionals (MVPs). You can click the “MVPs” tag in the right column of our blog to see all the articles.

Since the early 1990s, Microsoft has recognized technology champions around the world with the MVP Award. MVPs freely share their knowledge, real-world experience, and impartial and objective feedback to help people enhance the way they use technology. Of the millions of individuals who participate in technology communities, around 4,000 are recognized as Microsoft MVPs. You can read more original MVP-authored content on the Microsoft MVP Award Program Blog.

This post is by Cluster MVP David Bermingham. Thanks, David!

Understanding the Windows Server Failover Cluster Quorum in Windows Server 2012 R2

Before we get started with all the great new cluster quorum features in Windows Server 2012 R2, we should take a moment and understand what the quorum does and how we got to where we are today. Rob Hindman describes quorum best in his blog post…

“The quorum configuration in a failover cluster determines the number of failures that the cluster can sustain while still remaining online.”

Prior to Windows Server 2003, there was only one quorum type, Disk Only. This quorum type is still available today, but it is not recommended because the quorum disk is a single point of failure. In Windows Server 2003, Microsoft introduced the Majority Node Set (MNS) quorum. This was an improvement because it eliminated the quorum disk as a single point of failure in the cluster. However, it did have its limitations. As its name implies, Majority Node Set must have a majority of nodes to form a quorum and stay online, so this quorum model is not ideal for a two-node cluster, where the failure of one node would leave only one node remaining. One out of two is not a majority, so the remaining node would go offline.

Microsoft introduced a hotfix that allowed for the creation of a File Share Witness (FSW) on Windows Server 2003 SP1 and 2003 R2 clusters. Essentially, the FSW is a simple file share on another server that is given a vote in an MNS cluster. The driving force behind this innovation was Exchange Server 2007 Cluster Continuous Replication (CCR), which allowed for clustering without shared storage. Without shared storage, a Disk Only quorum was not an option, and an effective MNS cluster would require three or more nodes; hence the introduction of the FSW to support two-node Exchange CCR clusters.

Windows Server 2008 saw the introduction of a new witness type, Disk Witness. Unlike the old Disk Only quorum type, the Disk Witness allows users to configure a small partition on a shared disk that acts as a vote in the cluster, similar to the FSW. However, the Disk Witness is preferable to the FSW because it keeps a copy of the cluster database and eliminates the possibility of “partition in time”. If you’d like to read more about partition in time, I suggest you read the post “File Share Witness vs. Disk Witness for local clusters”.

Windows Server 2012 continued to improve upon quorum options. It is my belief that many of these new features were driven by two forces: Hyper-V and SQL Server AlwaysOn Availability Groups. With Hyper-V we began to see clusters that contained many more nodes than we had typically seen in the past. In a majority node set, as soon as you lose a majority of your votes, the remaining nodes go offline. For example, if you have a seven-node Hyper-V cluster and lose four of those nodes, the remaining three nodes would go offline, because three out of seven votes is not a majority. This might not be exactly what you want to happen. So in Windows Server 2012, Microsoft introduced Dynamic Quorum.

Dynamic Quorum does what its name implies: it adjusts the quorum dynamically. In the scenario described above, assuming I didn’t lose all four servers at the same time, the number of votes in the quorum would adjust dynamically as servers in the cluster went offline. When the first node went offline, I would then in theory have a six-node cluster. When the second node went offline, I would then have a five-node cluster, and so on. In reality, if I continued to lose cluster nodes one by one, I could go all the way down to a two-node cluster and still remain online. And if I had configured a witness (Disk or File Share), I could actually go all the way down to a single node and still remain online.
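The difference between a static majority and Dynamic Quorum can be sketched with a small Python model. This is only an illustration of the vote arithmetic described above, not how the cluster service is implemented: the function names are hypothetical, nodes are assumed to fail strictly one at a time, and the witness is assumed to stay healthy and to always hold a vote.

```python
def has_majority(votes_present: int, total_votes: int) -> bool:
    """A partition keeps quorum only with strictly more than half of the votes."""
    return 2 * votes_present > total_votes


def survives_static(total_nodes: int, failed: int) -> bool:
    """Static node majority: the vote total never changes after a failure."""
    return has_majority(total_nodes - failed, total_nodes)


def smallest_cluster_dynamic(total_nodes: int, witness: bool = False) -> int:
    """Fail nodes one at a time, recalculating the vote total after each loss.

    Returns the smallest number of nodes that can still be online.
    Assumption: the witness (if any) is healthy and always votes.
    """
    nodes = total_nodes
    extra = 1 if witness else 0
    while nodes > 1:
        total_votes = nodes + extra
        survivors = (nodes - 1) + extra
        if not has_majority(survivors, total_votes):
            break  # the next failure would take the cluster offline
        nodes -= 1  # survivors keep quorum, and the vote total shrinks with them
    return nodes


print(survives_static(7, 4))                      # False: 3 of 7 is not a majority
print(smallest_cluster_dynamic(7))                # 2
print(smallest_cluster_dynamic(7, witness=True))  # 1
```

In this model, losing four of seven nodes at once is fatal, while losing them one by one leaves the cluster online down to two nodes, or down to one node when a witness is configured.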

However, best practice in Windows Server 2012 and earlier is to configure a witness only on clusters that have an even number of nodes. Here is an example of why you wouldn’t configure a witness in a cluster with an odd number of nodes. Let’s say you have a three-node cluster and decide to configure a witness (Disk or File Share). If you happen to lose the witness and a single node, the remaining two nodes will go offline because they hold only two out of four votes, which is not a majority. So adding a witness to a cluster that already has an odd number of votes actually gives you less availability than if you didn’t add a witness at all.
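The arithmetic behind this recommendation can be checked with a few lines of Python. This is a minimal sketch under the assumption of a static vote count (no dynamic adjustment); the helper names are hypothetical.

```python
def votes_needed(total_votes: int) -> int:
    """Smallest number of votes that is strictly more than half."""
    return total_votes // 2 + 1


def tolerated_losses(total_votes: int) -> int:
    """How many votes can be lost before the majority is gone."""
    return total_votes - votes_needed(total_votes)


for total in (3, 4, 5):
    print(f"{total} votes: need {votes_needed(total)}, "
          f"can lose {tolerated_losses(total)}")
```

Going from three votes to four raises the required majority from two to three without raising the number of losses the cluster can absorb, so the fourth vote only adds one more component that can fail.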

That leads me to Windows Server 2012 R2 and Dynamic Witness. In the scenario above on Windows Server 2012, I wouldn’t have configured a Disk Witness in my seven-node cluster. However, on Windows Server 2012 R2 the recommendation has changed to ALWAYS configure a witness, regardless of the number of nodes. The cluster itself will determine whether the witness actually has a vote, depending upon how many cluster nodes are available at any given time. So in the example above with my seven-node Hyper-V cluster, I would still configure a witness. Under normal circumstances when all seven nodes are running, my witness would not have a vote. However, as I started losing cluster nodes, the witness vote would be turned on or off as needed to ensure that I always had an odd number of votes in my cluster.
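The rule the cluster applies to the witness vote can be approximated as follows. This is a simplified sketch, assuming the witness itself is online and every remaining node holds exactly one vote; `witness_vote` is a hypothetical name, not a cluster API.

```python
def witness_vote(voting_nodes: int) -> int:
    """Dynamic witness in this sketch: vote only when the node votes are even."""
    return 1 if voting_nodes % 2 == 0 else 0


# Walk the seven-node cluster down one node at a time.
for nodes in range(7, 0, -1):
    total = nodes + witness_vote(nodes)
    print(f"{nodes} nodes -> witness vote {witness_vote(nodes)}, total votes {total}")
```

Every line of the output shows an odd vote total (7, 7, 5, 5, 3, 3, 1), which is the property the Dynamic Witness is meant to preserve.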

This ability to control whether a cluster resource has a vote is not limited to the witness. Starting in Windows Server 2012 (and backported to Windows Server 2008/2008 R2), the concept of the cluster property “nodeweight” was introduced. By setting a cluster node’s nodeweight=0, you are able to remove the voting rights of any cluster node. This is ideal in multisite cluster configurations where a large number of cluster nodes might reside in a different data center. In that case, a failure of a WAN link may cause you to lose quorum, bringing down the remaining nodes in your primary data center. However, if you took the votes away from the nodes in your DR site, they would not be able to impact the availability of your cluster nodes in your primary data center.
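To make the multisite case concrete, here is a small Python sketch of a WAN link failure. The node names and the two-plus-three site layout are hypothetical, and the model uses static votes only (it ignores Dynamic Quorum adjustments).

```python
def partition_has_quorum(node_weights: dict, reachable: set) -> bool:
    """True if the reachable nodes hold strictly more than half of all votes."""
    total = sum(node_weights.values())
    present = sum(weight for name, weight in node_weights.items() if name in reachable)
    return 2 * present > total


# Hypothetical layout: two nodes in the primary site, three in the DR site.
primary_site = {"P1", "P2"}
default_weights = {"P1": 1, "P2": 1, "DR1": 1, "DR2": 1, "DR3": 1}
dr_votes_removed = {"P1": 1, "P2": 1, "DR1": 0, "DR2": 0, "DR3": 0}

# The WAN link fails, so the primary site can only see its own nodes.
print(partition_has_quorum(default_weights, primary_site))   # False: 2 of 5 votes
print(partition_has_quorum(dr_votes_removed, primary_site))  # True: 2 of 2 votes
```

With the DR votes removed, the primary site holds every vote that counts, so losing the link no longer costs it quorum.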

While that is one good use case of nodeweight, another, probably more common use case is when you are configuring SQL Server 2012/2014 AlwaysOn Availability Groups (AOAG). Without going into too much depth on AOAG here, each Replica in an availability group must reside in the same failover cluster, and AOAG relies on the cluster quorum when using the automatic failover feature. Here is the scenario: let’s say your deployment of SQL Server involves a 2-node SQL Server AlwaysOn Failover Cluster Instance (AOFCI) for high availability. However, you also take advantage of AOAG Read-Only Replicas and have three Replicas which are used for reporting services. Because all of these servers (AOFCI and AOAG) must reside in the same “cluster”, all five of these servers have a vote in the cluster by default.

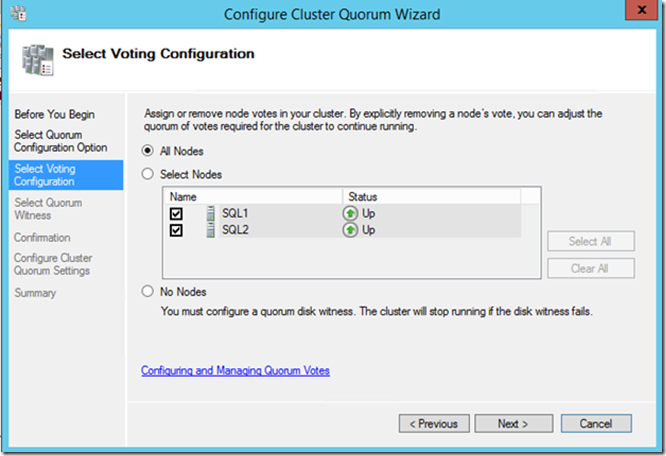

Do you really want your reporting servers impacting the availability of your production database? Probably not. Even though Dynamic Quorum will reduce the likelihood of the reporting servers having an impact on the availability of your AOFCI, it is probably best to set nodeweight=0 on those cluster nodes, since there really is no value in having them vote in your cluster anyway. Adding or removing node votes (setting the nodeweight) in Windows Server 2012 R2 can be done through the Advanced Quorum options in the Failover Cluster Manager as shown below.

Prior to Windows Server 2012 R2, you would have to use a PowerShell command to remove a node’s vote by setting its NodeWeight property to 0, as shown below:

(Get-ClusterNode "NodeName").NodeWeight = 0

One final improvement to cluster quorum introduced in Windows Server 2012 R2 is the “Tiebreaker for 50% node split”. The name of this feature is a little misleading. Although it can be used as a “tiebreaker” for a 50/50 node split, this does not adequately describe what it does. Essentially, you can set the LowerQuorumPriorityNodeID cluster common property to the ID of any cluster node. When the dynamic quorum has to select which node to remove voting privileges from to maintain an odd number of votes, the node identified by this property will lose its vote first. This helps especially in a multisite cluster when you want to ensure that the primary site always has the majority of voting members. If you have a multisite cluster, have lost your witness, and are down to just one node in each site, then with LowerQuorumPriorityNodeID set to the DR server you will still be able to stay online in your primary site, even if you lose communication with the node in your DR site. This is because once you got down to a two-node cluster, the dynamic quorum would remove the vote from the DR server, leaving you with a cluster that includes only one vote, and one out of one is a majority!
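The two-node, no-witness case can be modeled in a few lines of Python. This is a sketch only: the function and node names are hypothetical, and the fallback of dropping the last listed node when no lower-priority node is set is an assumption made for the illustration, not documented cluster behavior.

```python
from typing import List, Optional, Set


def voters_after_adjustment(nodes: List[str],
                            lower_priority: Optional[str] = None) -> List[str]:
    """Keep the vote count odd by removing one vote when it is even.

    The lower-priority node loses its vote first if it is still a voter.
    Assumption: otherwise the last node in the list is dropped.
    """
    if not nodes or len(nodes) % 2 == 1:
        return list(nodes)
    loser = lower_priority if lower_priority in nodes else nodes[-1]
    return [name for name in nodes if name != loser]


def site_stays_online(site_nodes: Set[str], voters: List[str]) -> bool:
    """True if the site holds strictly more than half of the remaining votes."""
    present = sum(1 for name in voters if name in site_nodes)
    return 2 * present > len(voters)


# Witness lost, one node left in each site, DR node marked as lower priority.
voters = voters_after_adjustment(["PRIMARY1", "DR1"], lower_priority="DR1")
print(voters)                                   # ['PRIMARY1']
print(site_stays_online({"PRIMARY1"}, voters))  # True: 1 of 1 votes
print(site_stays_online({"DR1"}, voters))       # False: 0 of 1 votes
```

After the adjustment the primary node is the only voter, so a loss of communication between the sites leaves the primary site online and the DR node without quorum.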

In summary, cluster quorum options in Windows Server 2012 R2 build upon the improvements and resiliency in quorum options introduced over the years. Dynamic Witness and the Tiebreaker for 50% node split are the new features introduced in Windows Server 2012 R2. These features further enhance the Dynamic Quorum feature introduced in Windows Server 2012. The other important thing to remember in Windows Server 2012 R2 is that the new recommendation is to ALWAYS configure a witness, whereas in previous versions the recommendation was to always ensure you had an odd number of votes.

Comments

Anonymous

April 28, 2014

The second sentence in the last paragraph should read "Dynamic WITNESS....." not "Dynamic QUORUM.."

Anonymous

April 28, 2014

thanks @David - fixed

Anonymous

April 29, 2014

Great post! I appreciate how you layout the progression of the feature across versions.

Anonymous

August 07, 2014

There is no such acronym in the SQL world AOAG - it's just AG.

Anonymous

September 26, 2014

@Allan, just be glad I didn't just call it AlwaysOn ;)

Anonymous

December 25, 2014

Great Post ! Made all the concepts clear

Anonymous

January 18, 2015

Shouldn't the third sentence in the last paragraph read "Dynamic QUORUM..." , as this was introduced in Windows Server 2012?

Anonymous

January 20, 2015

@Charles - yes, you're correct. I fixed the post just now after confirming with Dave, the author.

Anonymous

March 30, 2015

Actually you can use Failover Cluster Manager in Windows Server 2012 to configure or remove nodeweight from Advanced Quorum Configuration and Witness Selection option. UI option is not new to Windows Server 2012 R2.

Anonymous

September 17, 2015

It is a common task to be ask from consultants to setup ConfigMgr 2012 R2 to create a scenario that supports HA and DR at the same time, looking at this information available for Windows Server 2012 R2 provides the perfect situation to create a three node cluster to host the SQL product with attached storage to host the database (I would assume and active + passive + DR node) in this possible scenario, can you describe or provide suggestions (I have an idea but would like to hear some from you)in which the LowerQuorumPriorityNodeID feature is used to assign this to the node in DR? it appears to be explained above.... just need a little more extended explanation on it...

Anonymous

December 21, 2015

Fantastic and informative read. Its nice to have such a clear and progressive article on a fairly complex subject.

Anonymous

March 28, 2016

Thanks so much. Very informative.

Anonymous

August 17, 2016

Hi. What is the best 2012 R2 quorum model if we want to create a multi-site cluster with 2 nodes per site, but we don't have a 3rd site to house a File Share Witness?

- Anonymous

September 21, 2016

With Windows 2016 release imminent your best bet is to use a Cloud Witness. This eliminates the need for a 3rd site. If you are stuck on 2012 R2 then your only option is to put the witness in the primary location and just realize that in the event of a disaster where you lose the entire primary location you will need to force the quorum online in the DR site.

- Anonymous

Anonymous

September 02, 2016

Cleared lot of my questions. Thanks for the article.

Anonymous

November 10, 2017

Very simple explanation on such a complex topic. Thank you for the article.

Anonymous

February 20, 2018

How can I print this document ?